Member of Technical Staff @perplexity_ai building @Comet

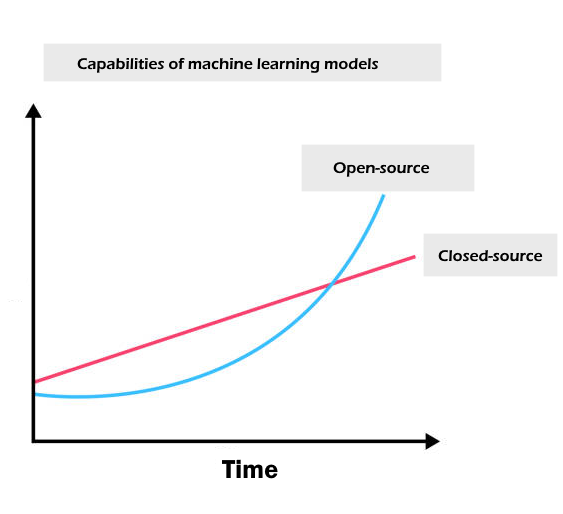

Now there are much smaller models that beat GPT-3, and some models that arguably (ARGUABLY) come close to GPT-3.5-turbo.

https://twitter.com/marktenenholtz/status/1685654899575427072

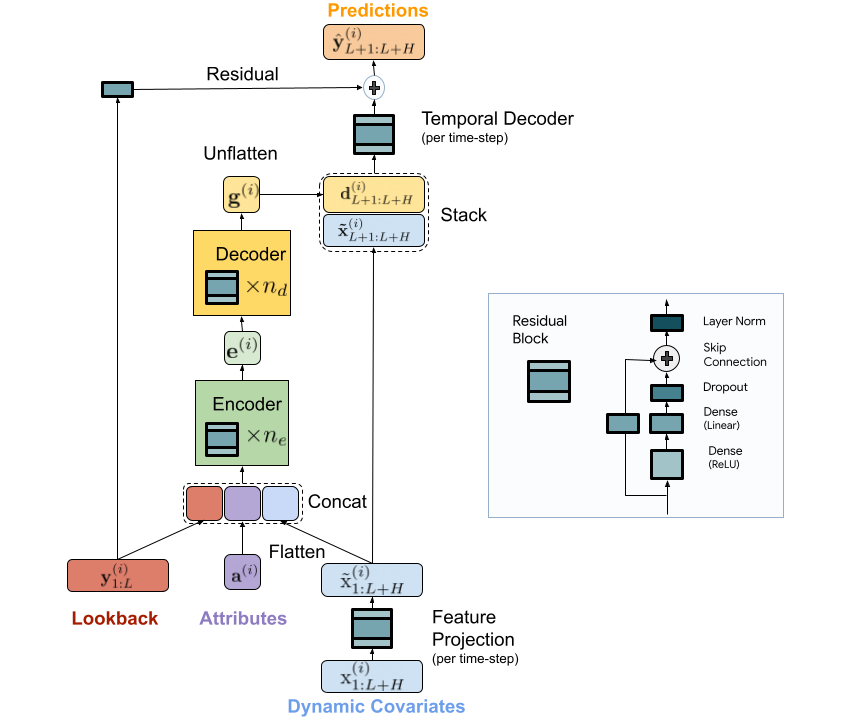

The most common loss function in time-series models is MSE.

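For reference, MSE (mean squared error) is the average of the squared residuals between the actual series and the forecast: MSE = (1/n) * sum((y_i - yhat_i)^2). Below is a minimal sketch of that standard definition in plain Python. The function name `mse` is only illustrative and is not taken from the thread.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals (y_true - y_pred)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("inputs must be non-empty")
    # Squaring makes each error non-negative and weights large errors quadratically.
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


actual = [1, 2, 3, 4]
forecast = [2, 2, 5, 4]
print(mse(actual, forecast))  # residuals -1, 0, -2, 0 -> (1 + 0 + 4 + 0) / 4 = 1.25
```

Because the residuals are squared, one forecast that is off by 2 contributes four times as much as one that is off by 1. This is why MSE-trained models are especially sensitive to outliers and large misses.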