AI/ML engineer. Previously at Google: Product Manager for Keras and TensorFlow and developer advocate on TPUs. Passionate about democratizing Machine Learning.

To be fair, some LLMs can already do that, if they are trained with a specific positional encoding like ALiBi (arxiv.org/abs/2108.12409). And before LLMs, Recurrent Neural Networks (RNNs) could do this trick as well. But it was lost in Transformers.
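For readers who have not met ALiBi: instead of adding positional embeddings, it adds a linear distance penalty to the attention scores, with a different slope per head. Below is a minimal sketch of that bias, assuming a power-of-two number of heads and causal attention. The function names `alibi_slopes` and `alibi_bias` are illustrative and do not come from any library.

```python
def alibi_slopes(num_heads):
    """Per-head slopes: a geometric sequence 2^(-8/n), 2^(-16/n), ..., 2^(-8).

    Assumes num_heads is a power of two, the simple case in the ALiBi paper.
    """
    return [2.0 ** (-8.0 * (h + 1) / num_heads) for h in range(num_heads)]


def alibi_bias(seq_len, slope):
    """Bias added to the attention scores of one head before the softmax.

    bias[i][j] = -slope * (i - j) for keys j at or before query i.
    Future keys (j > i) get -inf, which acts as the causal mask.
    """
    neg_inf = float("-inf")
    return [
        [-slope * (i - j) if j <= i else neg_inf for j in range(seq_len)]
        for i in range(seq_len)
    ]


slopes = alibi_slopes(8)
bias = alibi_bias(4, slopes[0])
```

Because the penalty depends only on the distance between query and key, the same function produces a bias for any `seq_len`, including lengths longer than those used in training.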

This paper is, in part, a traditional algorithm, a "depth-first search algorithm over the facts and the rules", starting from the desired conclusion and trying to logically reach the premises (facts and rules).
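To make the "start from the conclusion and work back to the premises" idea concrete, here is a minimal sketch of depth-first backward chaining over propositional facts and rules. It is a generic textbook version, not the paper's implementation. The rule format `(premises, conclusion)` and the name `prove` are assumptions made for illustration.

```python
def prove(goal, facts, rules, visiting=None):
    """Return True if `goal` can be derived from `facts` using `rules`.

    rules is a list of (premises, conclusion) pairs, where premises is a
    tuple of statements that must all hold for the conclusion to hold.
    The search is depth-first: it picks a rule that concludes the goal,
    then recursively tries to prove each of that rule's premises.
    """
    visiting = visiting or frozenset()
    if goal in facts:
        return True
    if goal in visiting:
        # This goal is already being proved higher up the stack: a cycle.
        return False
    visiting = visiting | {goal}
    for premises, conclusion in rules:
        if conclusion == goal and all(
            prove(p, facts, rules, visiting) for p in premises
        ):
            return True
    return False


facts = {"it rains"}
rules = [
    (("it rains",), "the road is wet"),
    (("the road is wet",), "the road is slippery"),
]
result = prove("the road is slippery", facts, rules)
```

Here the search starts from "the road is slippery", finds the rule that concludes it, and recurses until it reaches the known fact "it rains".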

@geoffreyhinton It seems very unlikely that the human brain uses backpropagation to learn. There is little evidence of backprop mechanics in biological brains (no error derivatives propagating backwards, no storage of neuron activities to use in a backprop pass, ...).

https://twitter.com/yixuan_su/status/1590034008758312960

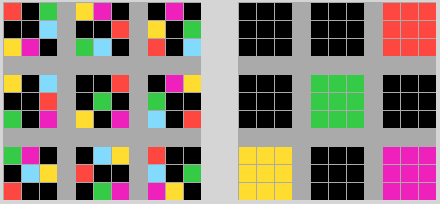

In the experiment results above, the model continues a given text and human raters evaluate the result.

The first answer "It [Eiffel Tower] was constructed in 1887" is generated directly, but is also recognized as containing a factual statement. This sends the whole context to LaMDA-Research, which is trained to generate search queries, here "TS, Eiffel Tower, construction date".

The nice part is that it's a purely architectural change of the detection network, with a new contrastive loss which does not introduce additional hyper-parameters. No additional data required!

It's a bit different from the "memory layer" I tweeted about previously, which provides a large learnable memory, without increasing the number of learnable weights. (for ref: arxiv.org/pdf/1907.05242…)

The conceptual principle of the R-CNN family is to use a two-step process for object detection:

Their basic building block is called the "Inverted Residual Bottleneck", compared here with the basic blocks in ResNet and Xception (dw-conv for depth-wise convolution).
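A quick back-of-the-envelope sketch of why this block is cheap, assuming the MobileNetV2-style layout: a 1x1 convolution that expands the channels, a depth-wise convolution, then a 1x1 linear convolution that projects back down. The expansion factor of 6 and the channel counts are illustrative values, and the counts ignore biases and batch normalization.

```python
def inverted_residual_params(c_in, c_out, expansion=6, kernel=3):
    """Weight counts for an expand -> depth-wise -> project block."""
    c_mid = c_in * expansion
    expand = c_in * c_mid                # 1x1 conv widens the channels
    depthwise = kernel * kernel * c_mid  # one k x k filter per channel
    project = c_mid * c_out              # 1x1 linear conv narrows them again
    return {
        "expand": expand,
        "depthwise": depthwise,
        "project": project,
        "total": expand + depthwise + project,
    }


def standard_conv_params(c_in, c_out, kernel=3):
    """Weight count for a regular k x k convolution, for comparison."""
    return kernel * kernel * c_in * c_out


block = inverted_residual_params(24, 24)
wide_conv = standard_conv_params(144, 144)
```

The 3x3 filtering happens on the wide 144-channel tensor, but because it is depth-wise its cost grows linearly with the channel count, whereas a regular 3x3 convolution at the same width grows quadratically.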

and just as good and old VGG19:

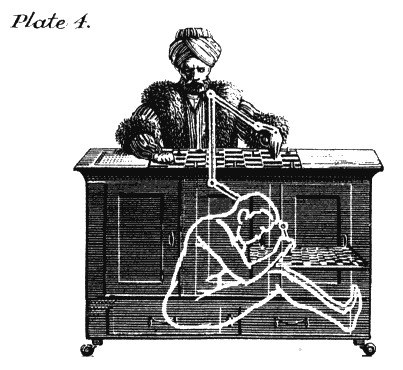

@fchollet Chess was considered the pinnacle of human intelligence, … until a computer beat Garry Kasparov at it in 1997. Today, it is hard to argue that a min-max algorithm with optimizations represents “intelligence”.

Detailed instructions:
