I build & teach AI stuff. Founder @TakeoffAI where we’re building an AI coding tutor. Come learn to code + build with AI at https://t.co/oJ8PNoAutE.

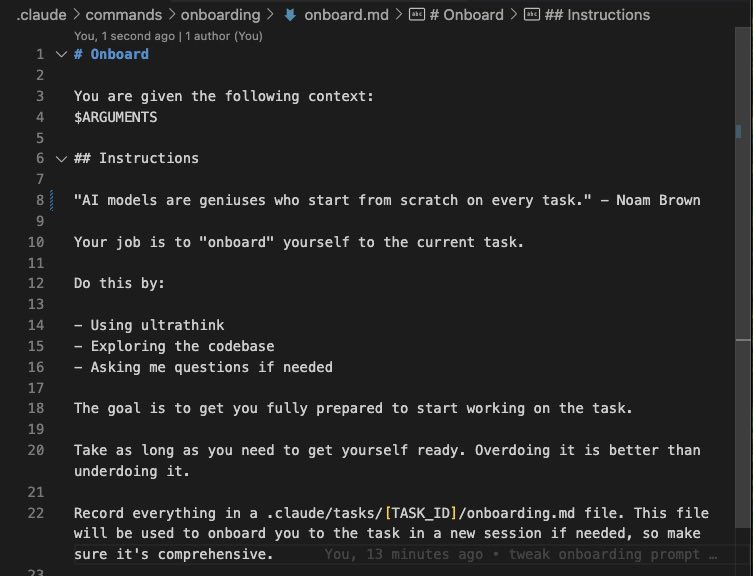

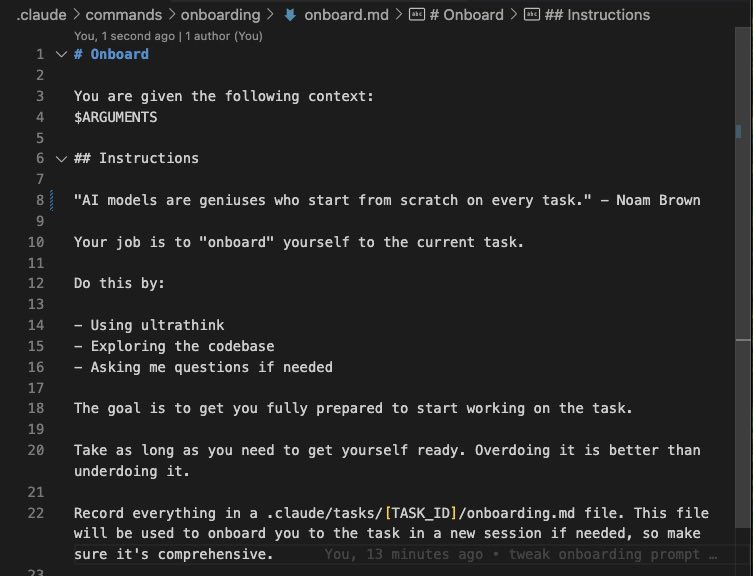

It is the single highest leverage thing you can do to improve perf of coding agents.

There is absolutely no situation in which you will outcompete someone who is using o3 when you are not.

Including my o1 workflow video + GitHub link to my XML parser for the AI code cowboys out there who want to come explore the wild west.

https://twitter.com/davidsholz/status/1833588201472344388

The 101 track will be relatively basic.

https://twitter.com/grimezsz/status/1650304051718791170