How to get a URL link in the X (Twitter) app

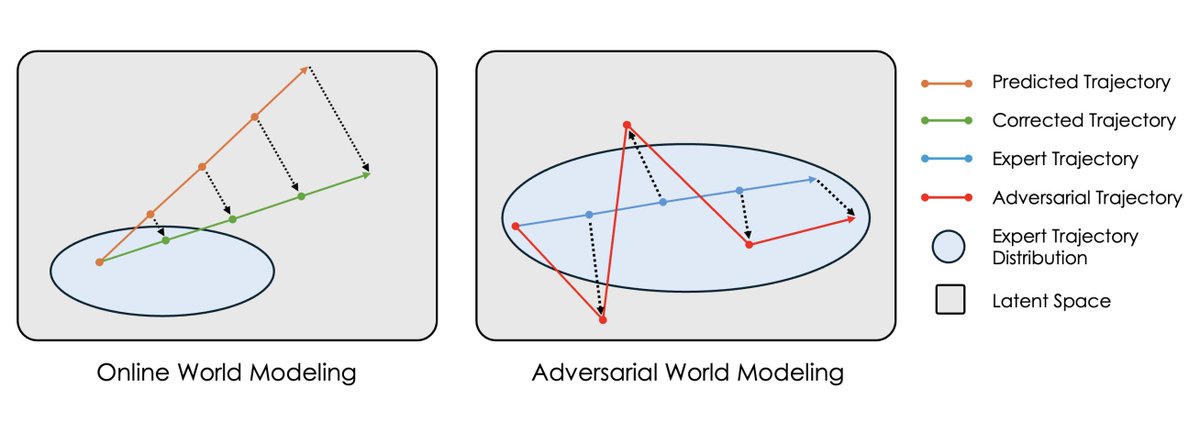

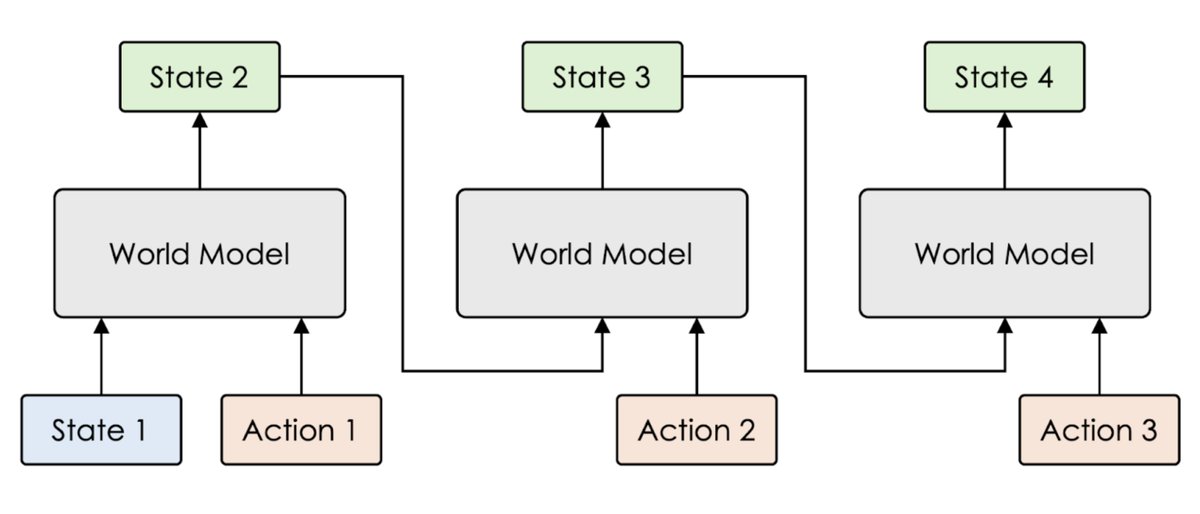

Gradient-based planning starts with a world model that takes in the current state (e.g. an image) and an action, and predicts the next state. We can stack this world model on top of itself again and again, turning it into a differentiable simulator of the environment. 2/11
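A minimal sketch of the idea, assuming a toy one-dimensional linear world model in place of a learned network. The coefficients `A` and `B`, the function names, and the squared-distance cost are all hypothetical and not from the thread. The model is stacked over a short horizon, and the action sequence is improved by gradient descent, with gradients propagated backward through the stacked model by hand.

```python
# Toy gradient-based planner. The linear "world model" below is a hypothetical
# stand-in for a learned network; only the planning loop is the point.

A, B = 0.9, 0.5  # assumed dynamics coefficients (illustrative only)


def world_model(state, action):
    """Predict the next state from the current state and an action."""
    return A * state + B * action


def rollout(state, actions):
    """Stack the world model on itself: apply it once per planned action."""
    for a in actions:
        state = world_model(state, a)
    return state


def plan(start, goal, horizon=5, steps=200, lr=0.1):
    """Optimize an action sequence by gradient descent through the rollout."""
    actions = [0.0] * horizon
    for _ in range(steps):
        final = rollout(start, actions)
        # Backward pass through the stacked model (reverse mode, by hand).
        grad_state = 2.0 * (final - goal)  # d cost / d final state
        grads = [0.0] * horizon
        for t in reversed(range(horizon)):
            grads[t] = grad_state * B  # d next_state / d action = B
            grad_state *= A            # d next_state / d state  = A
        actions = [a - lr * g for a, g in zip(actions, grads)]
    return actions


actions = plan(start=0.0, goal=1.0)
final_state = rollout(0.0, actions)
```

With a learned neural world model, the hand-written backward loop would typically be replaced by an automatic differentiation library; the structure of the planning loop stays the same.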


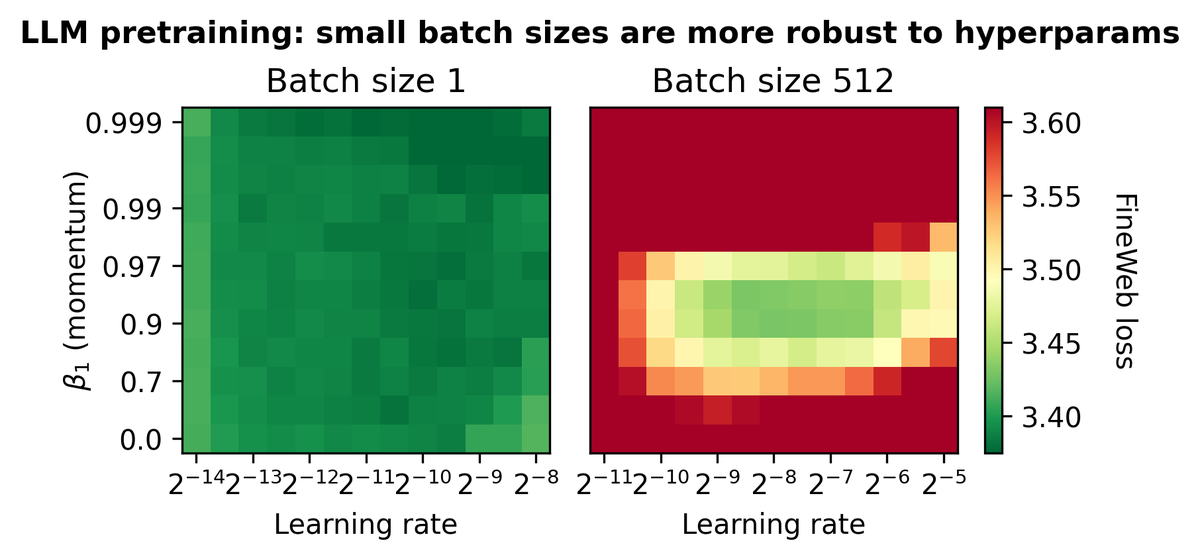

Here's ★how to make small batch LLM training fast, ★how to pretrain LLMs efficiently via vanilla SGD without momentum, and ★why you should consider getting rid of LoRA and gradient accumulation. 2/n


https://twitter.com/ylecun/status/1591463668612730880

While it is true that a model which can only express few functions needs few samples to learn, the converse is not true! This underscores the failure of ideas like VC dimension and Rademacher complexity to explain neural network generalization. 2/8