Unofficial Midjourney shill. Playing with AI & sharing learnings.

The first thing I did was find style codes I liked and blend them together.

When you use the style reference feature (--sref), you're essentially sending MJ to a specific location in "style space".
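Blending style codes means listing several codes after --sref. The helper below is a rough sketch that assembles that suffix. It assumes Midjourney accepts `code::weight` pairs after `--sref` (e.g. `--sref 1234::2 5678`). The function name `sref_suffix` and the example codes are hypothetical. The check that rejects non-positive weights is a choice made for this sketch, not a Midjourney rule.

```python
def sref_suffix(codes: dict[str, float]) -> str:
    """Build a --sref suffix that blends several style codes.

    Assumption: Midjourney reads 'code::weight' pairs after --sref,
    and a weight of 1 is the default, so it is written without '::'.
    This sketch only accepts positive weights.
    """
    parts = []
    for code, weight in codes.items():
        if weight <= 0:
            raise ValueError(f"weight for style code {code} must be positive")
        # ':g' drops a trailing '.0', so 2.0 is written as '2'
        parts.append(code if weight == 1 else f"{code}::{weight:g}")
    return "--sref " + " ".join(parts)


# Hypothetical codes: lean twice as hard toward the first style
print(sref_suffix({"1234": 2, "5678": 1}))  # --sref 1234::2 5678
```

Shifting the weights moves the result along the line between the two styles' locations in "style space".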

MJ's default character weight is --cw 100, which carries over the reference character's face, hair, and clothing. If you plan to specify an outfit different from your reference image, try lower --cw values.
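The helper below is a small sketch that appends a character reference and character weight to a prompt. It assumes `--cw` takes an integer from 0 to 100 with 100 as the default. The function name `character_prompt` and the example URL are hypothetical placeholders.

```python
def character_prompt(text: str, cref_url: str, cw: int = 100) -> str:
    """Append --cref and --cw to a prompt.

    Assumption: --cw accepts 0-100 (default 100). Lower values keep
    the face but loosen hair and outfit, which leaves more room for
    an outfit described in `text`.
    """
    if not 0 <= cw <= 100:
        raise ValueError("--cw must be between 0 and 100")
    return f"{text} --cref {cref_url} --cw {cw}"


# Custom outfit, so drop the character weight well below the default
print(character_prompt("a knight in silver armor",
                       "https://example.com/ref.png", cw=20))
# a knight in silver armor --cref https://example.com/ref.png --cw 20
```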

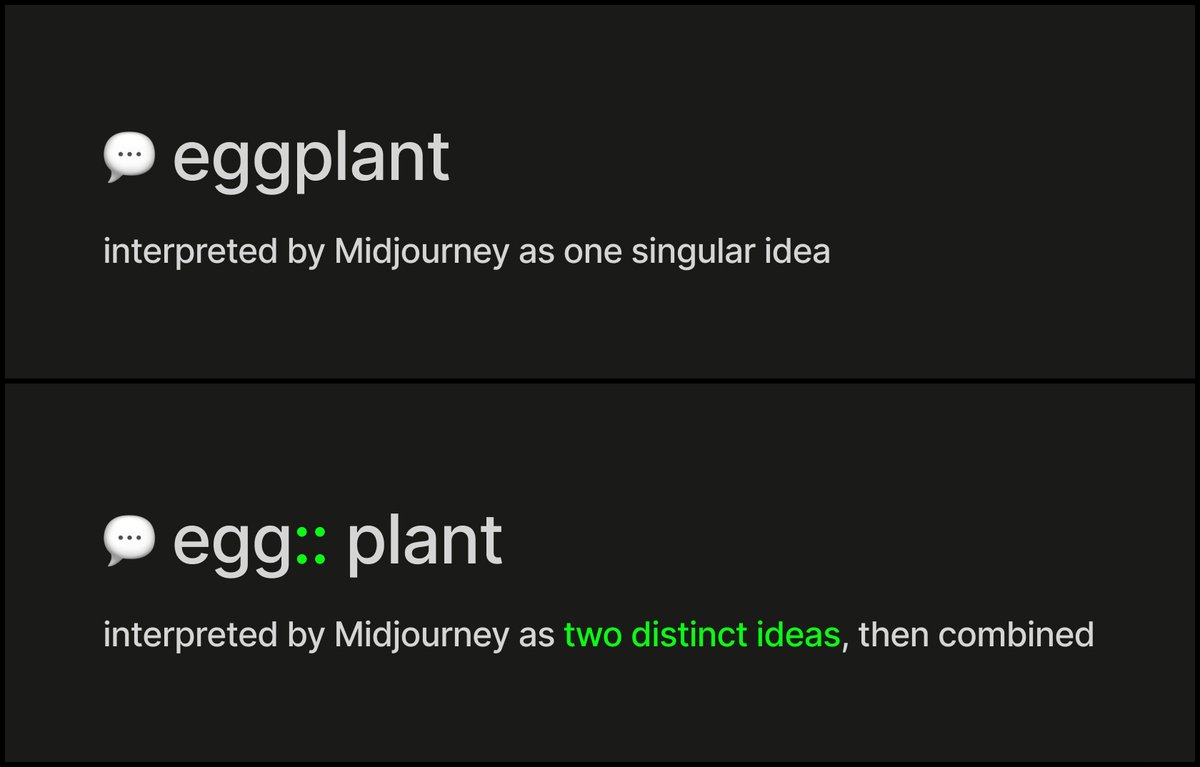

When multi-prompting, MJ treats each concept separated by '::' as its own prompt, imagines each one individually, and then blends them.
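A short sketch can show how a multi-prompt divides into separate concepts. It assumes Midjourney's weight syntax: a number placed immediately after '::' is the weight of the concept before it, and a concept without a number gets weight 1. The function name `split_multiprompt` is hypothetical. The sketch only parses the text. The actual imagine-then-blend step happens inside Midjourney.

```python
import re

# A weight must start right after '::' (optionally negative, optionally decimal)
_WEIGHT = re.compile(r"-?\d+(?:\.\d+)?")


def split_multiprompt(prompt: str) -> list[tuple[str, float]]:
    """Split a '::' multi-prompt into (concept, weight) pairs."""
    pieces = prompt.split("::")
    concept = pieces[0].strip()
    result = []
    for piece in pieces[1:]:
        match = _WEIGHT.match(piece)
        # A leading number is the weight of the previous concept
        weight = float(match.group(0)) if match else 1.0
        if concept:
            result.append((concept, weight))
        # Whatever follows the weight (if any) starts the next concept
        concept = (piece[match.end():] if match else piece).strip()
    if concept:
        result.append((concept, 1.0))
    return result


print(split_multiprompt("hot:: dog"))    # [('hot', 1.0), ('dog', 1.0)]
print(split_multiprompt("hot::2 dog"))   # [('hot', 2.0), ('dog', 1.0)]
```

"hot dog" stays a single concept, while "hot:: dog" becomes two concepts that are imagined separately.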

💬 Prompt setup:

a corner bar with a neon sign that says "open late"
