How to get URL link on X (Twitter) App

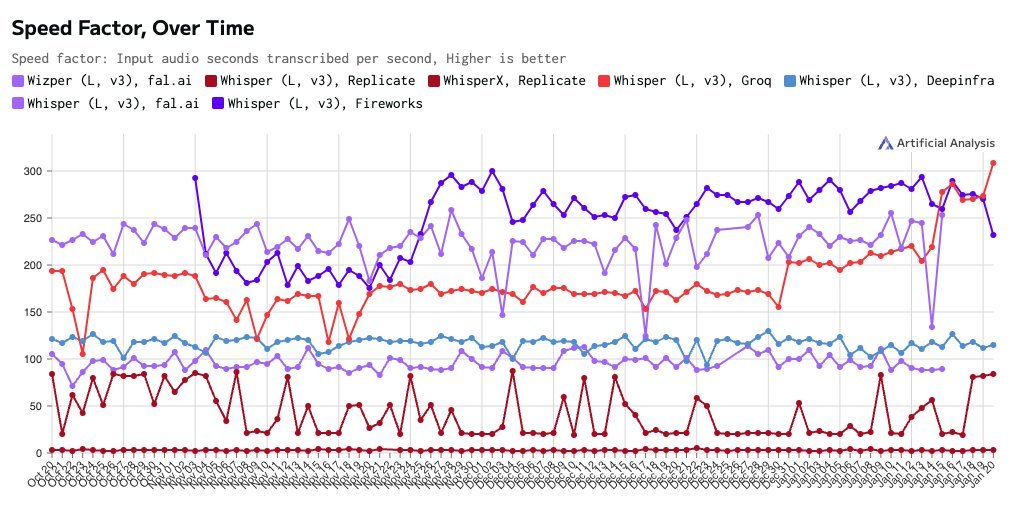

https://twitter.com/vercel/status/1912588341973065972

1/4 And just why are developers switching? The survey shows that 23% of you cite latency/performance as a top technical challenge. That is exactly what we're solving with our LPU inference engine, and we offer you access to that powerful hardware via the Groq API.

https://twitter.com/ArtificialAnlys/status/1887300358684479523

1/5 What is speculative decoding? It's a technique that uses a smaller, faster model to predict a sequence of tokens, which are then verified by the main, more powerful model in parallel. The main model evaluates these predictions and determines which tokens to keep or reject.
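To make the draft-then-verify loop concrete, here is a minimal sketch of the greedy variant of speculative decoding. Everything in it is an illustrative assumption rather than any vendor's implementation: `target` and `draft` are toy next-token functions standing in for the main and the smaller model, and `speculative_decode` and `k` are hypothetical names. A real system would run the verification step as one batched forward pass of the main model; here it is written as a plain loop for readability.

```python
from typing import Callable, List

# A "model" here is just a function mapping a token context to the next token.
NextToken = Callable[[List[int]], int]


def target(ctx: List[int]) -> int:
    """Toy main model (assumption): counts upward modulo 10."""
    return (ctx[-1] + 1) % 10


def draft(ctx: List[int]) -> int:
    """Toy draft model (assumption): agrees with target except after a 4."""
    return 0 if ctx[-1] % 5 == 4 else (ctx[-1] + 1) % 10


def greedy_decode(model: NextToken, prompt: List[int], num_new: int) -> List[int]:
    """Plain sequential decoding: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(
    target: NextToken,
    draft: NextToken,
    prompt: List[int],
    num_new: int,
    k: int = 4,
) -> List[int]:
    """Greedy speculative decoding: draft proposes k tokens, target verifies."""
    tokens = list(prompt)
    goal = len(prompt) + num_new
    while len(tokens) < goal:
        # 1. The draft model proposes up to k tokens, one after another.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(tokens))):
            proposal.append(draft(tokens + proposal))

        # 2. The target model checks every proposed position. These checks
        #    are independent of each other, so real systems batch them.
        accepted: List[int] = []
        for i, guess in enumerate(proposal):
            expected = target(tokens + proposal[:i])
            if guess == expected:
                accepted.append(guess)      # keep the draft token
            else:
                accepted.append(expected)   # reject: use the target's token
                break                       # later guesses are now invalid
        tokens.extend(accepted)
    return tokens


if __name__ == "__main__":
    print(speculative_decode(target, draft, [0], 12))
```

Because every kept token is one the main model would have chosen itself, the output matches plain greedy decoding with the main model; the draft model only changes how many tokens are confirmed per verification round.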

https://twitter.com/karpathy/status/1887211193099825254

2/7 Karpathy explains how parallelization is possible during LLM training, but output token generation is sequential during LLM inference. Specialized HW (like Groq's LPU) is designed to optimize such computational reqs, particularly sequential token gen, for fast LLM outputs.

2/4 Flex Tier gives on-demand processing with rapid timeout when resources are constrained - perfect for workloads that need fast inference and can handle occasional request failures.
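A workload that "can handle occasional request failures" usually does so by retrying. The sketch below shows one common pattern, retry with exponential backoff and jitter, under stated assumptions: `CapacityError` is a hypothetical stand-in for whatever fast-failure signal the service returns when resources are constrained, and `call_with_retry` and `request` are illustrative names, not part of any real client library.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CapacityError(Exception):
    """Hypothetical error: the service rejected the request quickly
    because no capacity was available."""


def call_with_retry(
    request: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `request`, retrying on CapacityError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return request()
        except CapacityError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # Delay doubles each attempt; jitter spreads out retry bursts.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter keeps the helper easy to test without real waiting; in production the default `time.sleep` applies.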
