Associate Professor in computer science @Stanford @StanfordHAI @StanfordCRFM @StanfordAILab @stanfordnlp #foundationmodels | Pianist

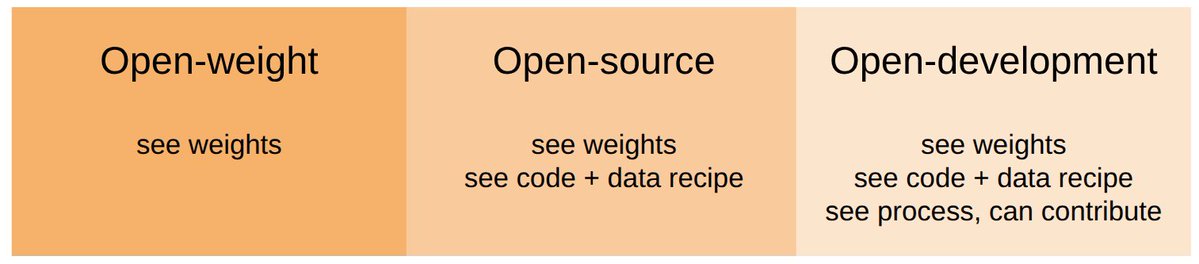

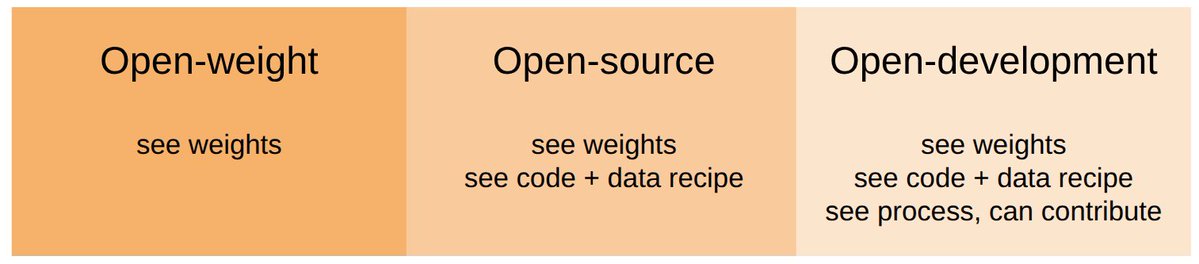

Marin repurposes GitHub, which has been successful for open-source *software*, for AI:

The tasks are taken from 4 CTF competitions (HackTheBox, SekaiCTF, Glacier, HKCert) - big thanks for releasing these challenges with solution writeups; this work would not have been possible without them.

By transitivity, the links are also available from @arxiv:
