Comms @MIRIBerkeley. RT = increased vague psychological association between myself and the tweet.

Jon Wolfsthal, former Special Assistant to the President for National Security Affairs

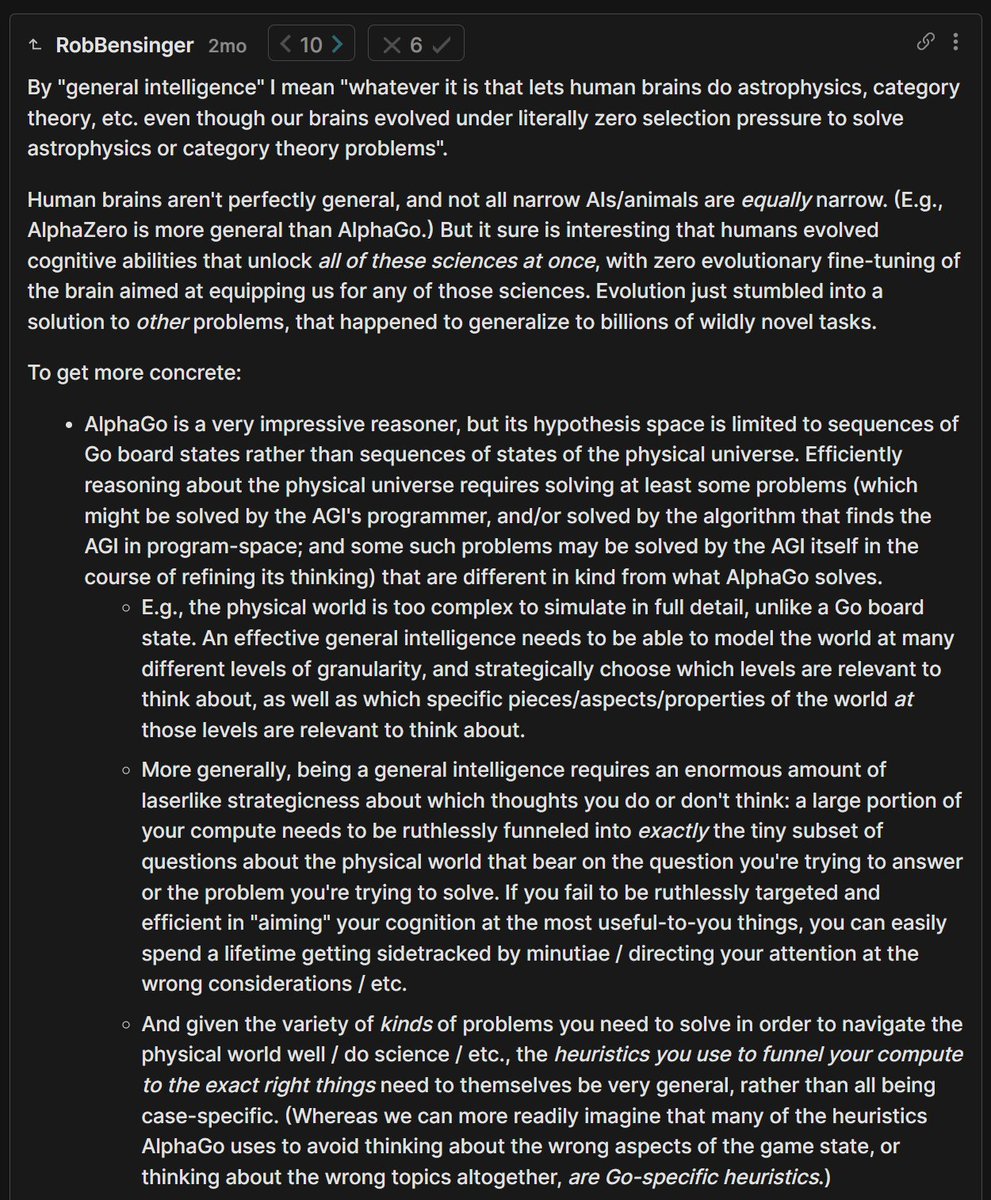

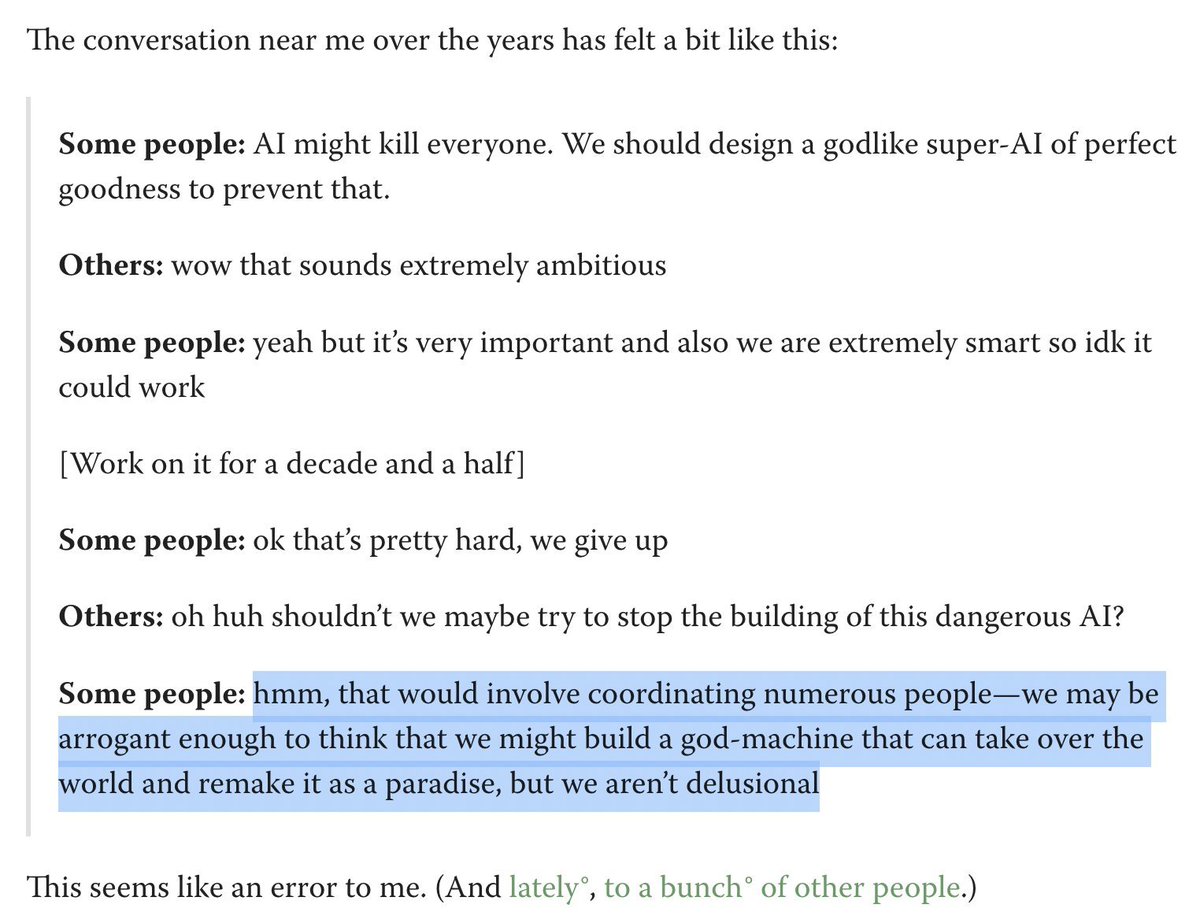

Like, yes, point taken, this feels like a bizarre situation to be in. And I agree with lesswrong.com/posts/uFNgRumr… that there are sane ways to slow progress to some degree, which are worth pursuing alongside alignment work and other ideas to cause the long-term future to go well.

https://twitter.com/elonmusk/status/1607584023932719104

Rules 1, 8, 20-23, 25, 32, 35-36, 38, 44-45, 49-50, 56, 58-59, 72-75, 80, 82, 86-89, and 110 are OK? But most of the rules are some version of "it is shameful to have an itchy elbow" or "be wary of speaking your mind if it might hurt anyone's feelings".

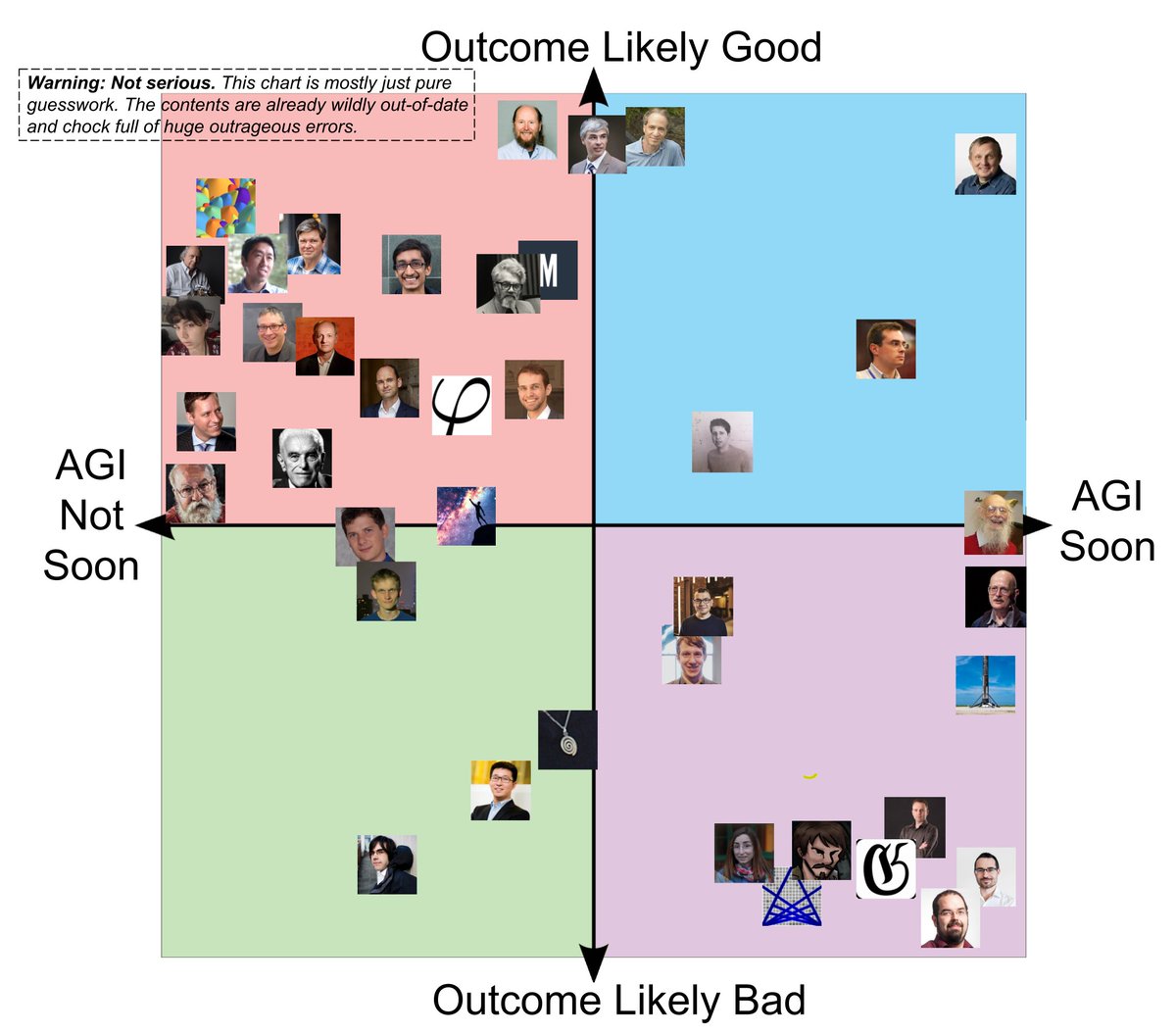

I did a tiny bit of Googling, but a lot of the comparisons are very subjective, or based on guesswork, or based on info that's likely super out-of-date. Treat this like an untrustworthy rumor you heard someone casually toss out at a party, not like a distillation of knowledge.
