Host of the 80,000 Hours Podcast.

Exploring the inviolate sphere of ideas one interview at a time: https://t.co/2YMw00bkIQ

Even crazier: if humans remain necessary for just 1% of tasks, wages could increase indefinitely.

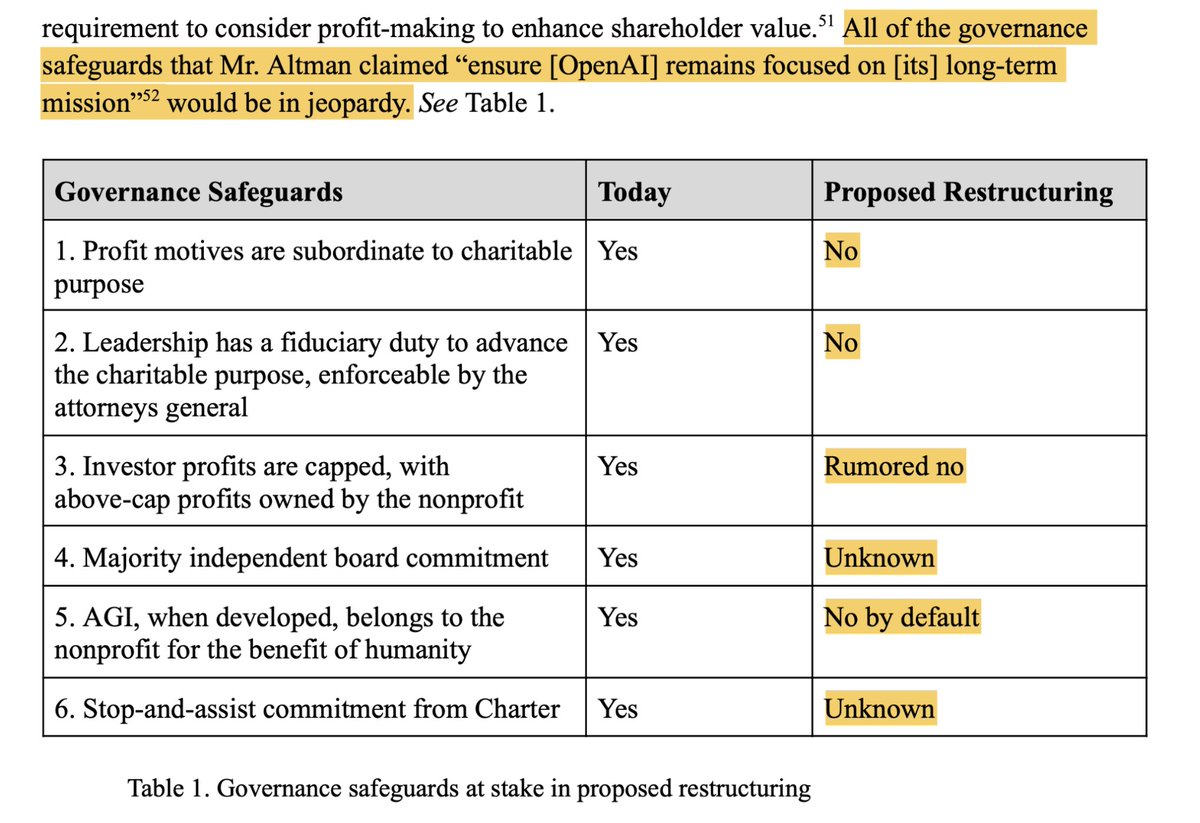

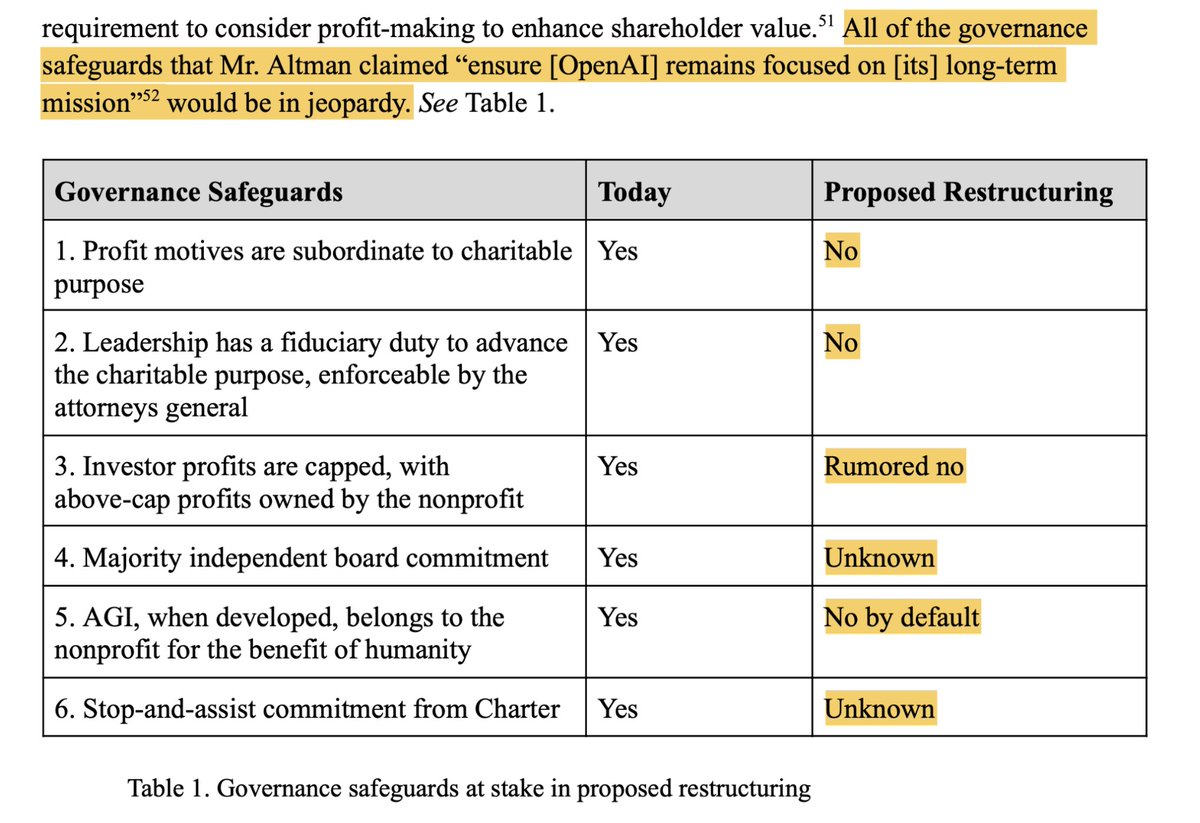

What could possibly justify this astonishing betrayal of the public's trust, and of all the legal and moral commitments they made over nearly a decade while presenting themselves as a charity? In their telling, it boils down to one thing:

Musk is trying to stop OpenAI's business from effectively converting from a non-profit to a for-profit.

2. If the planet were four times as big, would it be sensible to read four times as much news to keep up with it all?