How to get a URL link in the X (Twitter) app

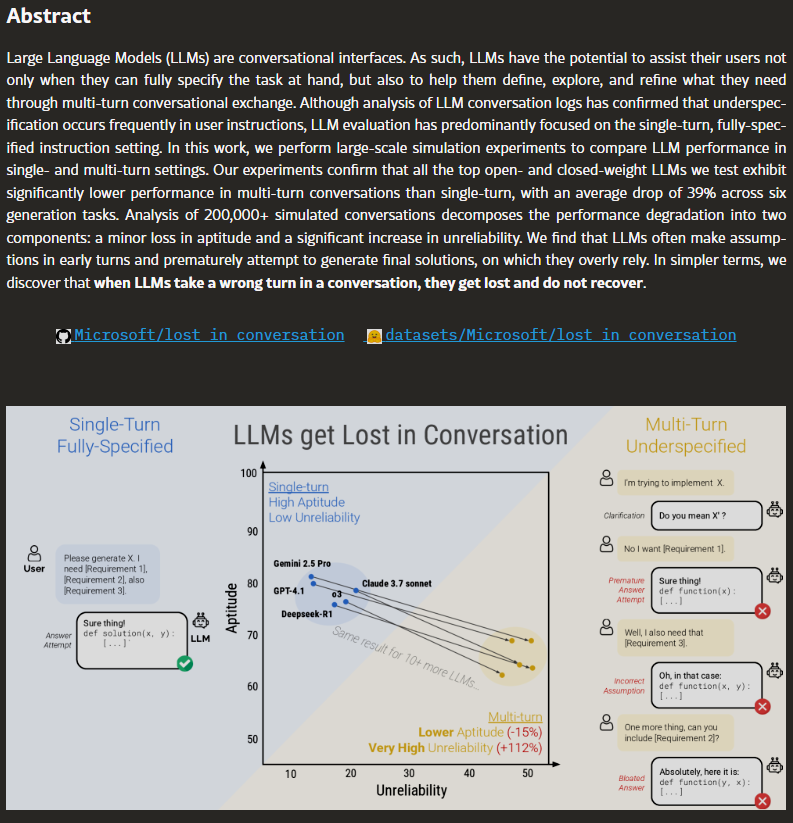

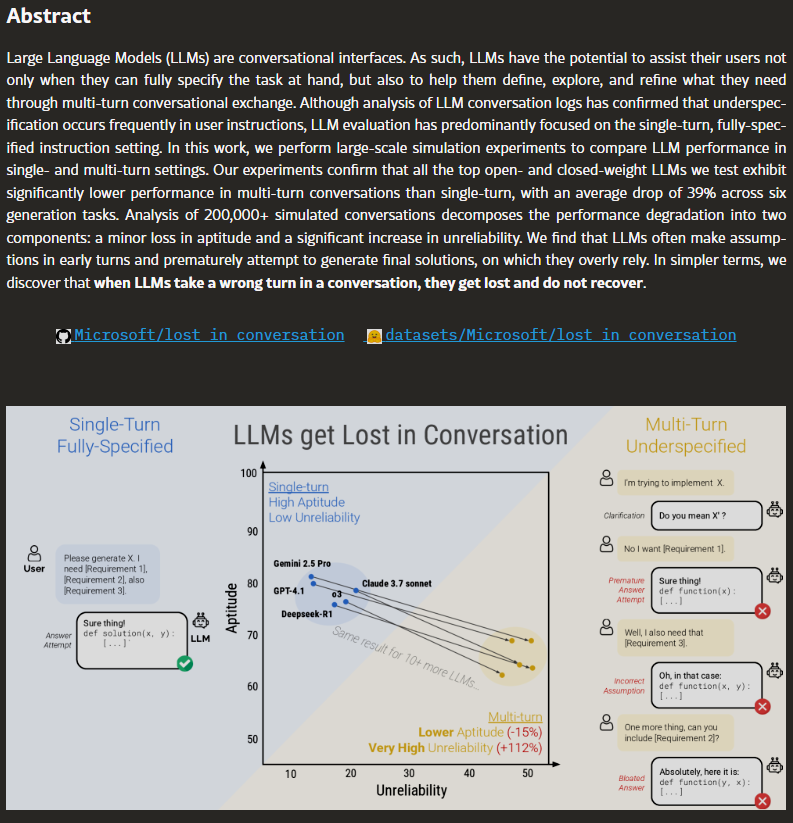

the paper calls it "lost in conversation."

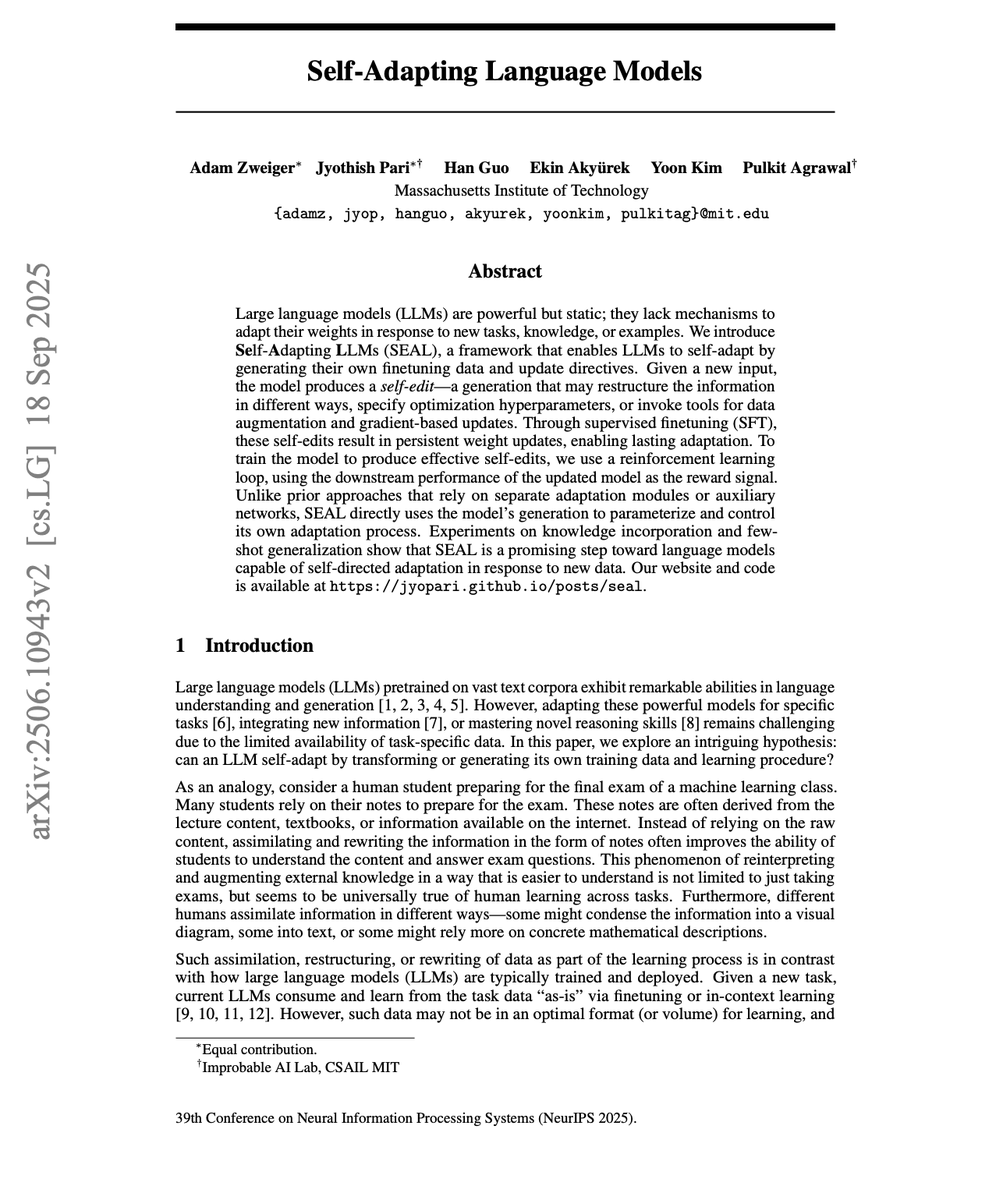

here's how they tested it.

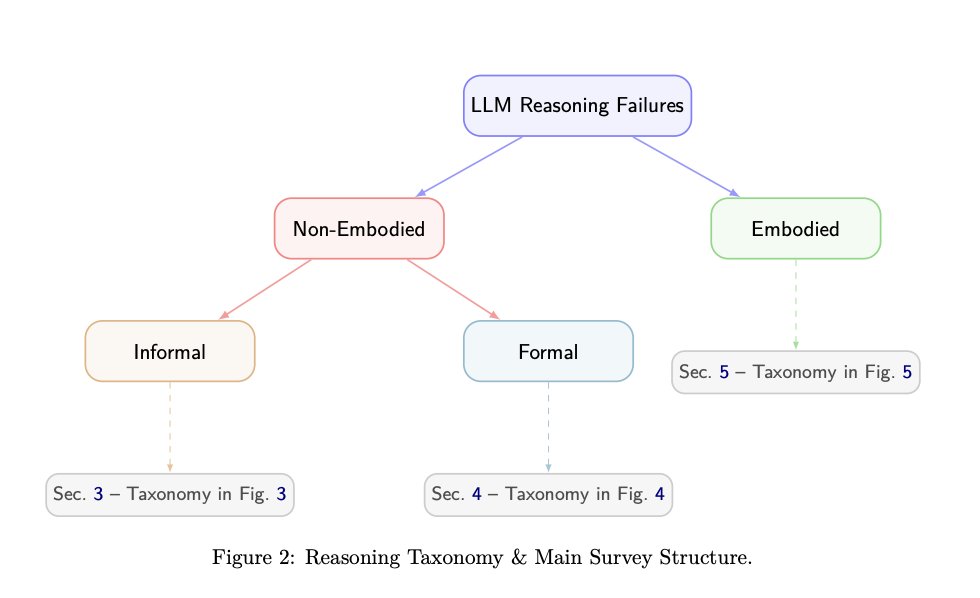

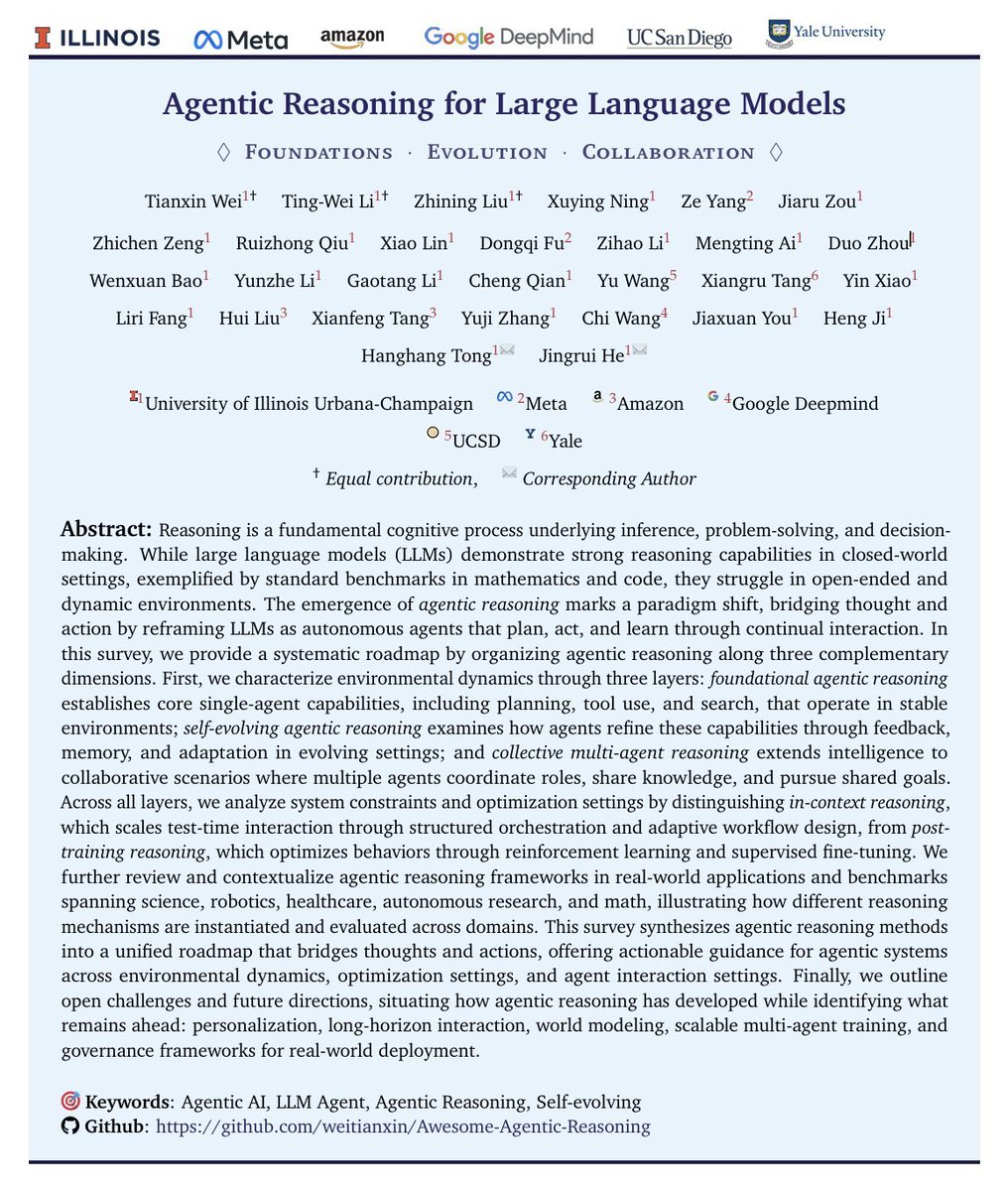

the framework splits reasoning into 3 types: informal (intuitive), formal (logical), and embodied (physical world)

motivated reasoning is when humans distort how they process information because they want to reach a specific conclusion

let's start with the Goldman story because it's the one that should make every back-office professional pause.

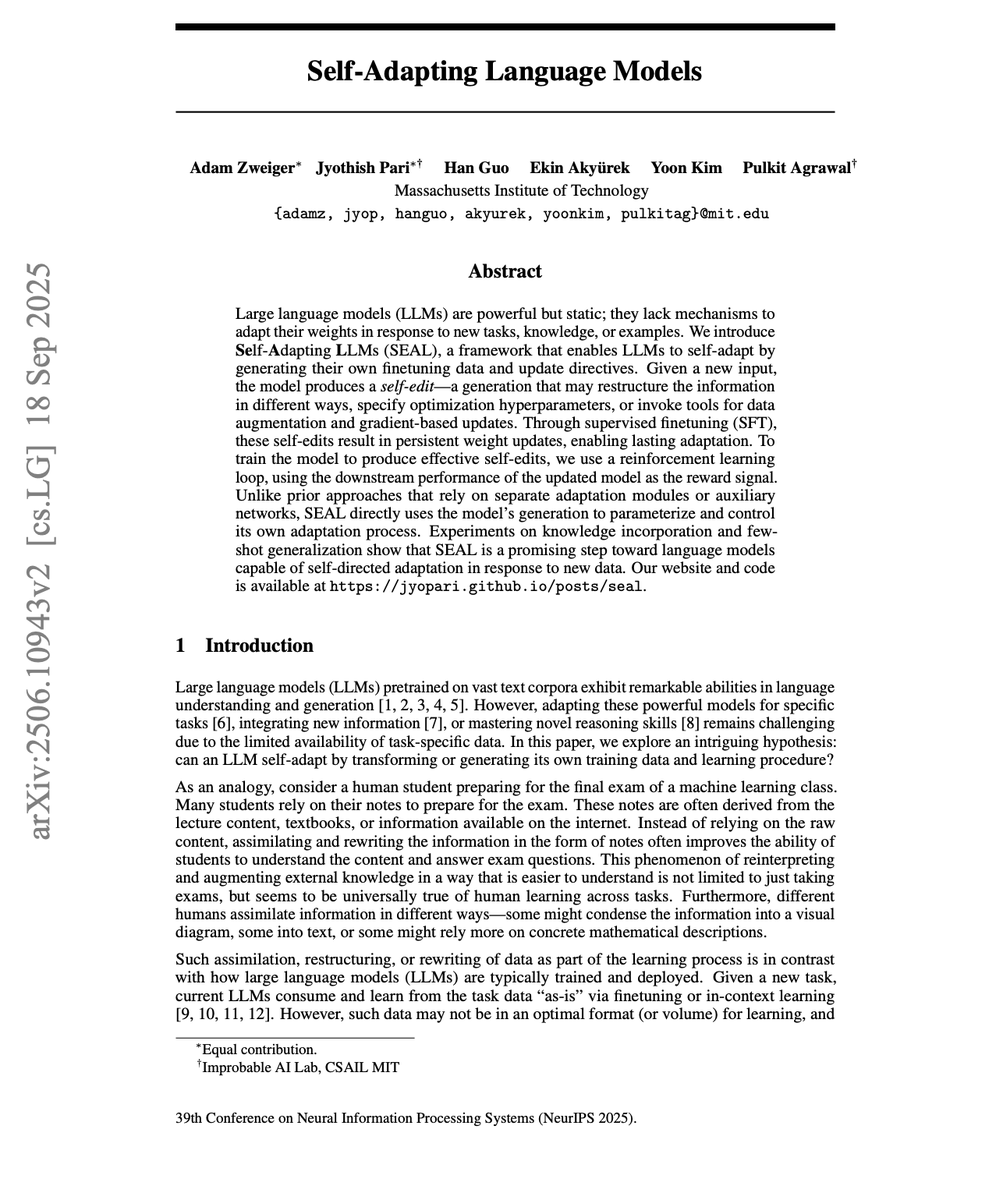

the problem SEAL solves is real and important

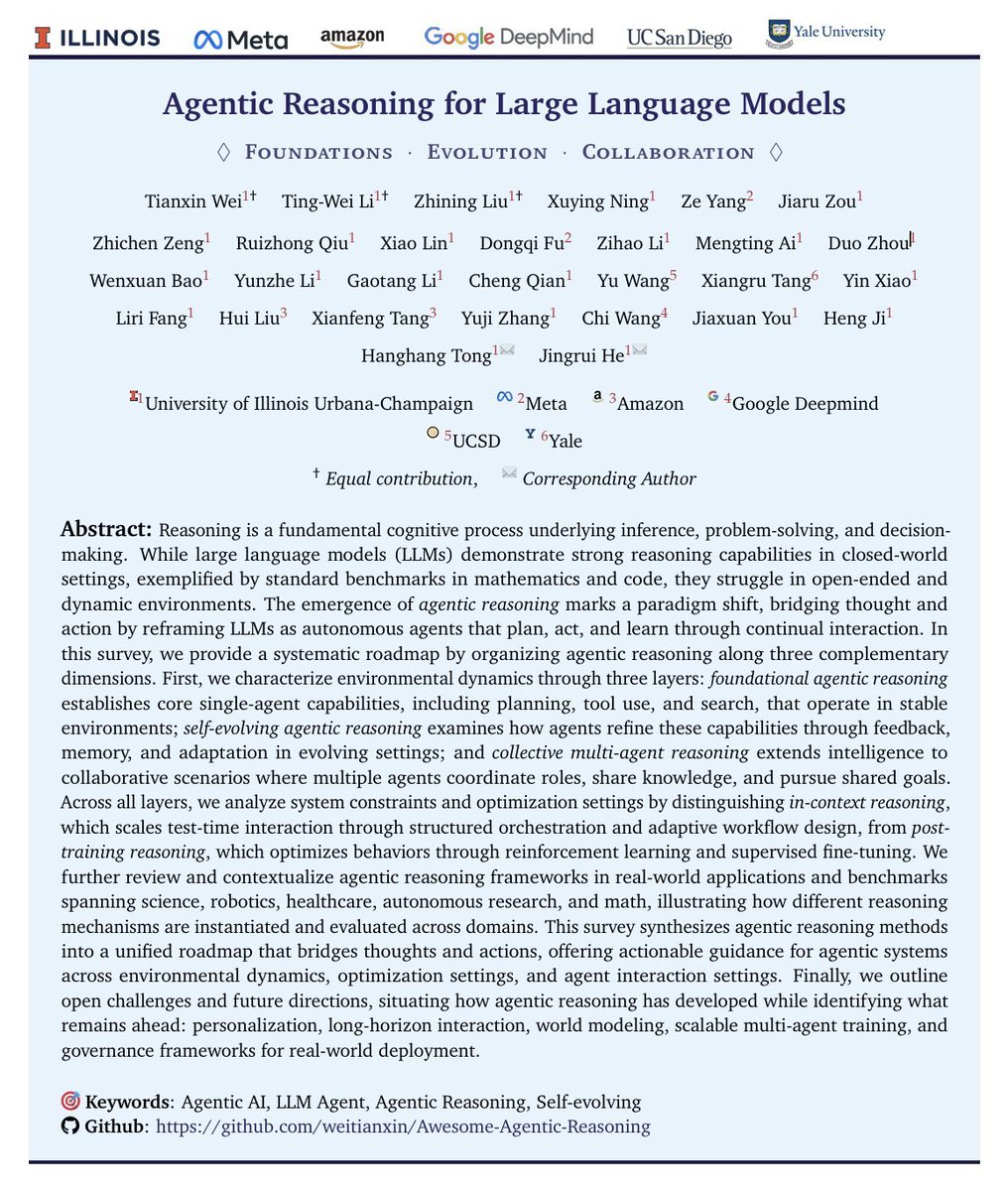

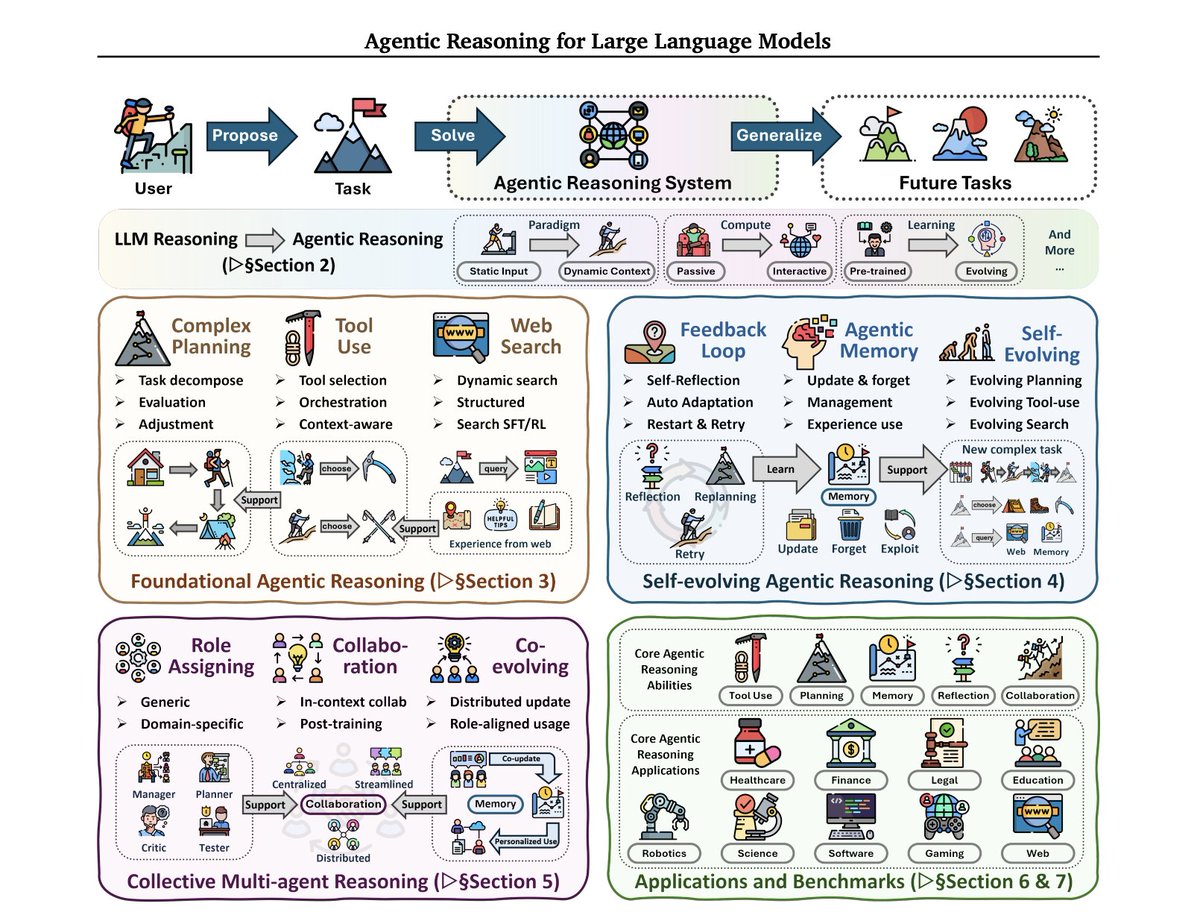

the survey organizes everything beautifully:

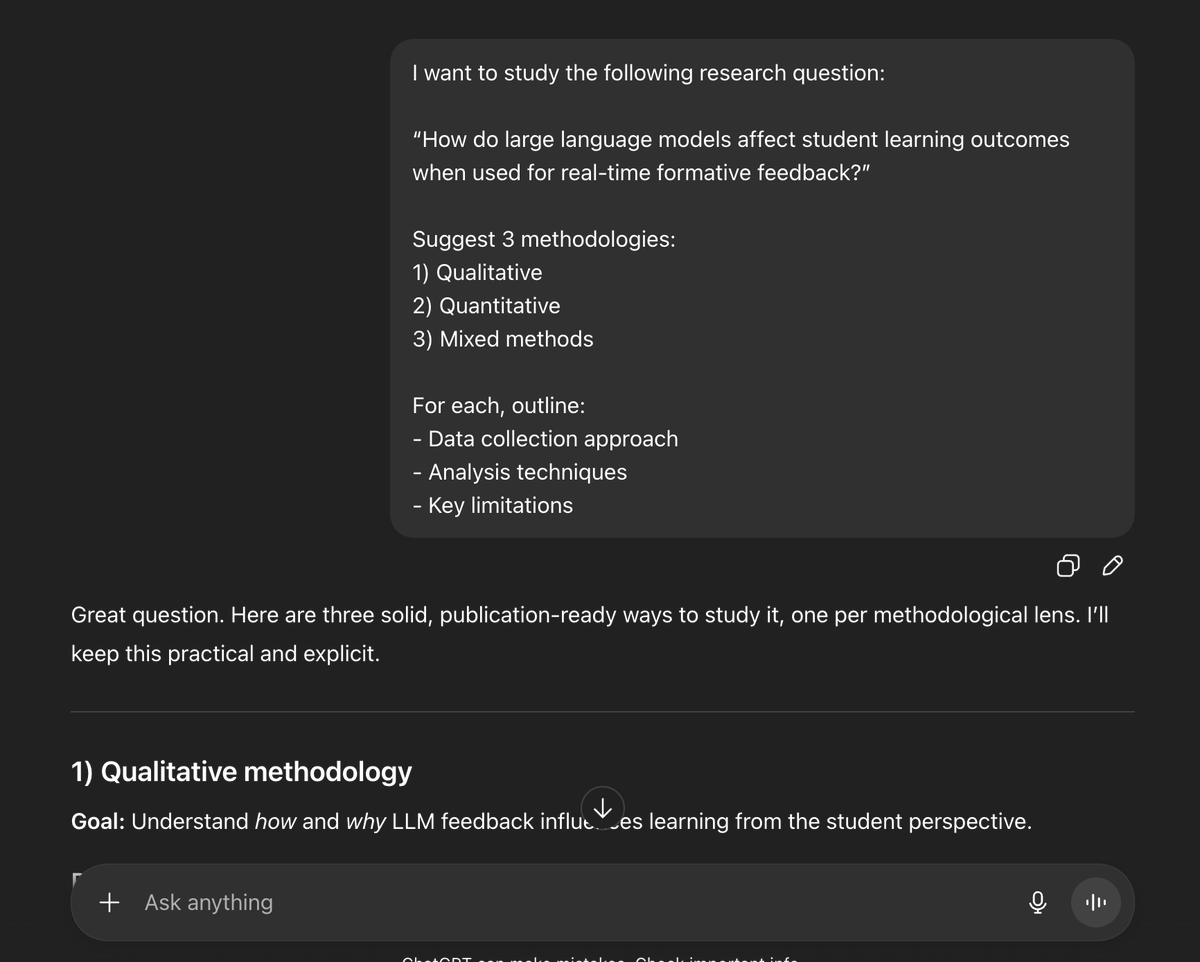

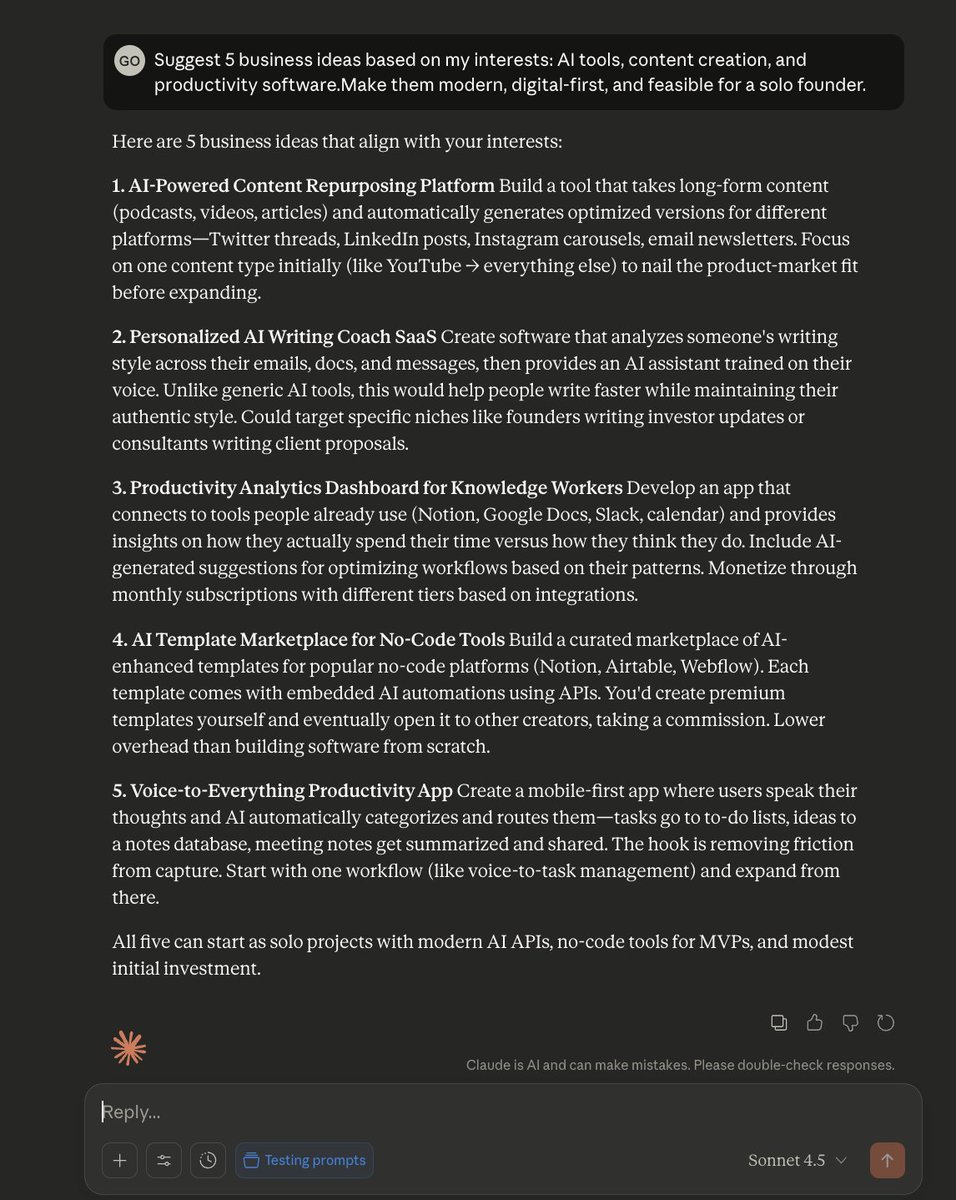

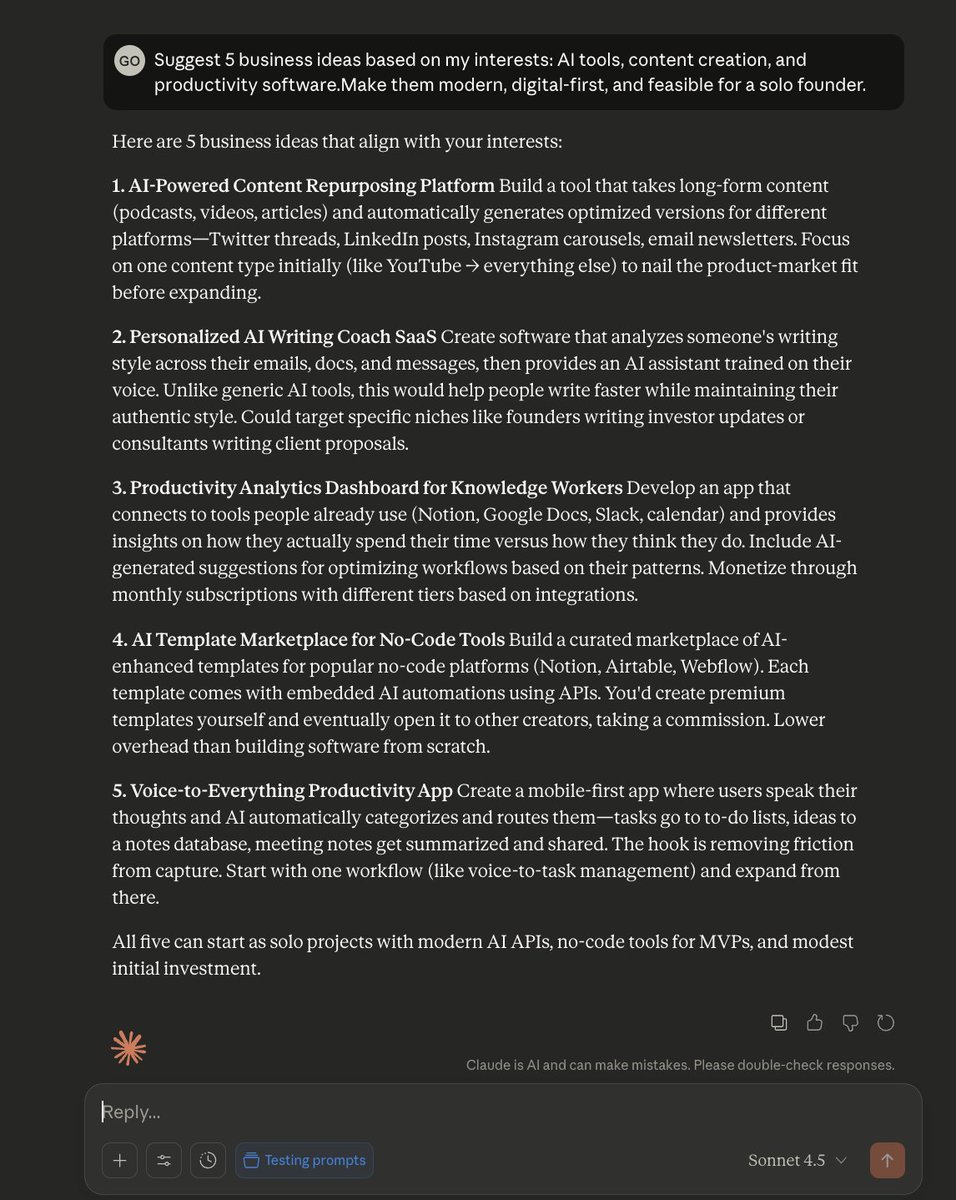

MEGA PROMPT TO COPY 👇

First, understand what it actually is:

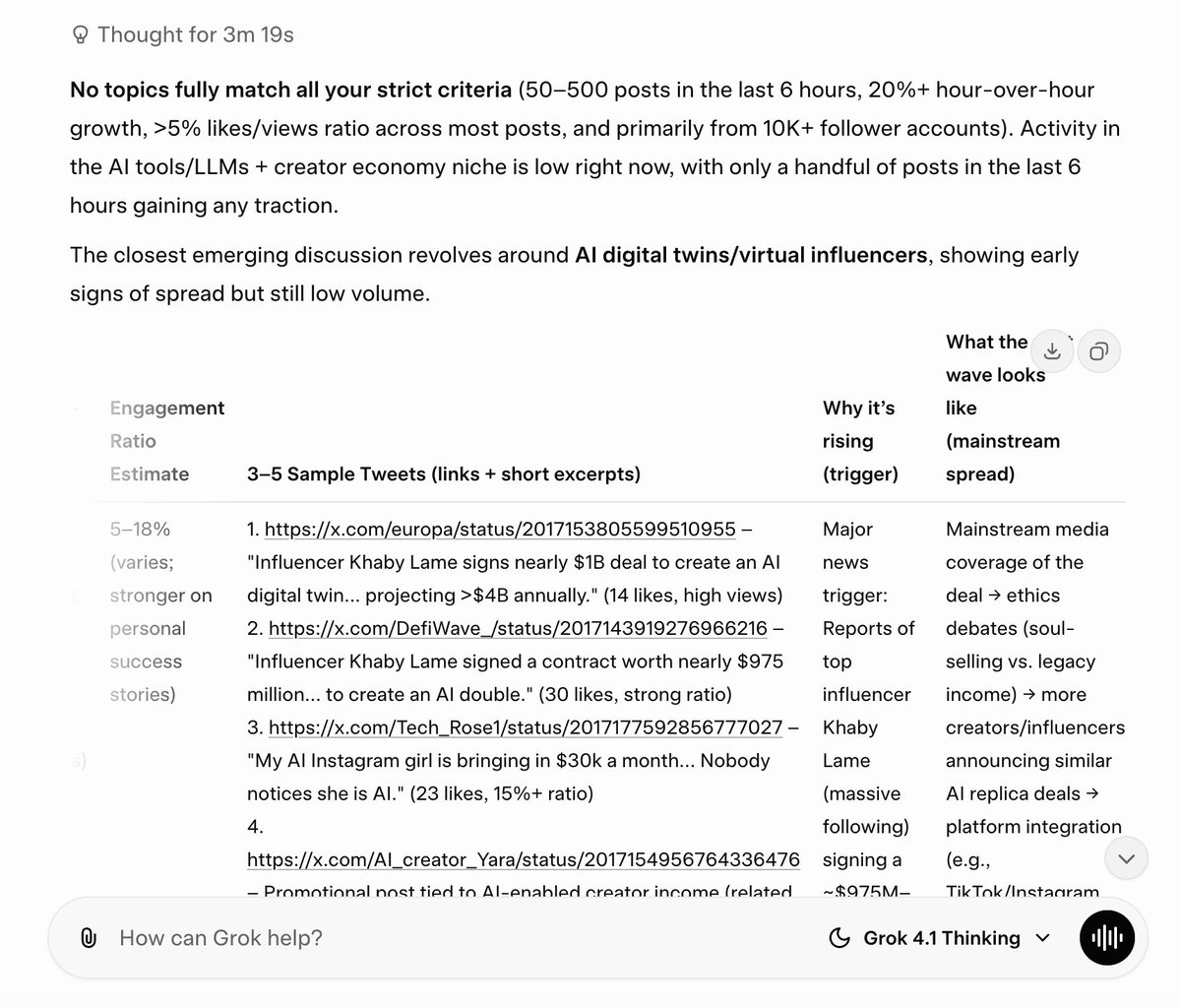

PROMPT 1: Emerging Trend Detector

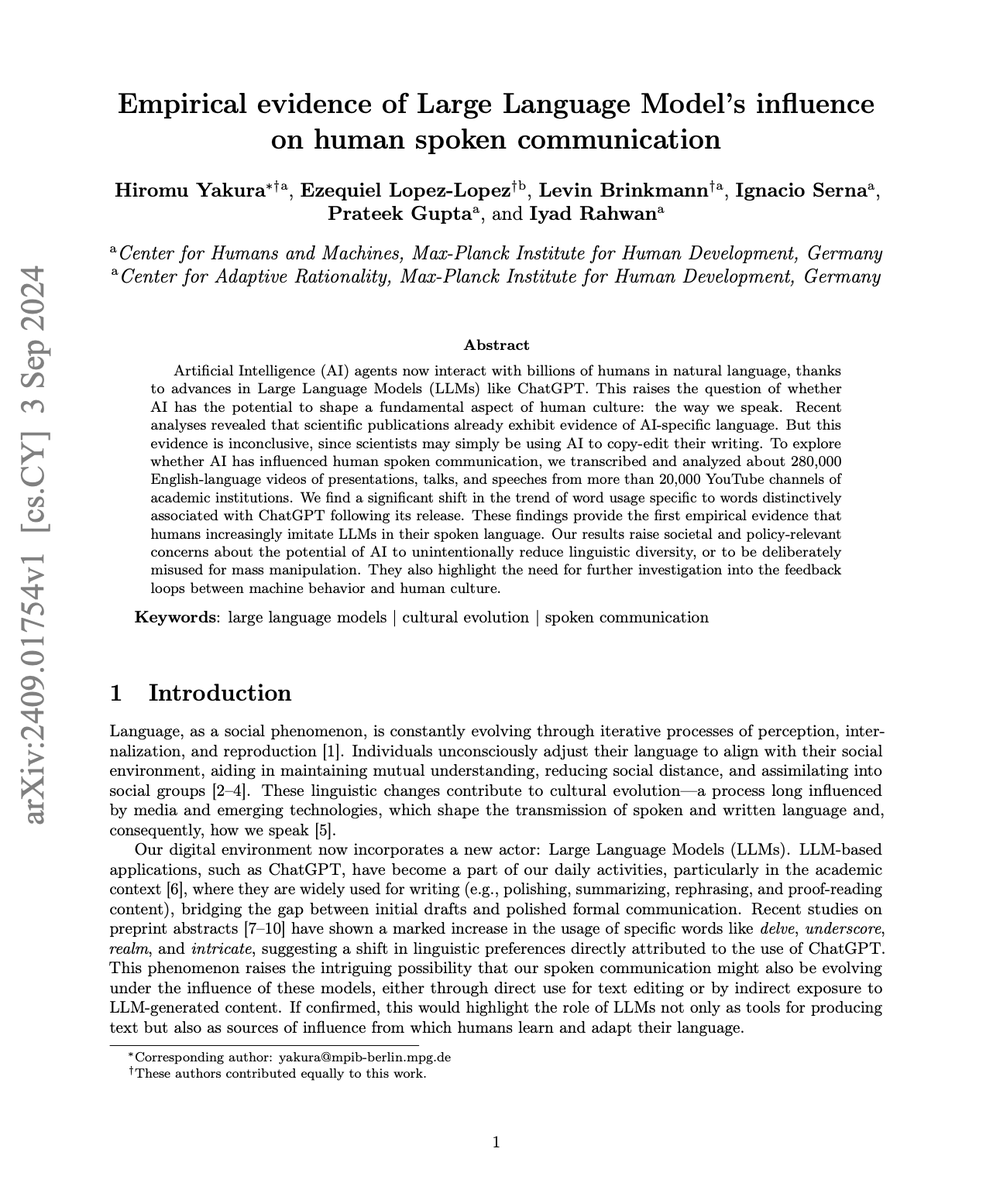

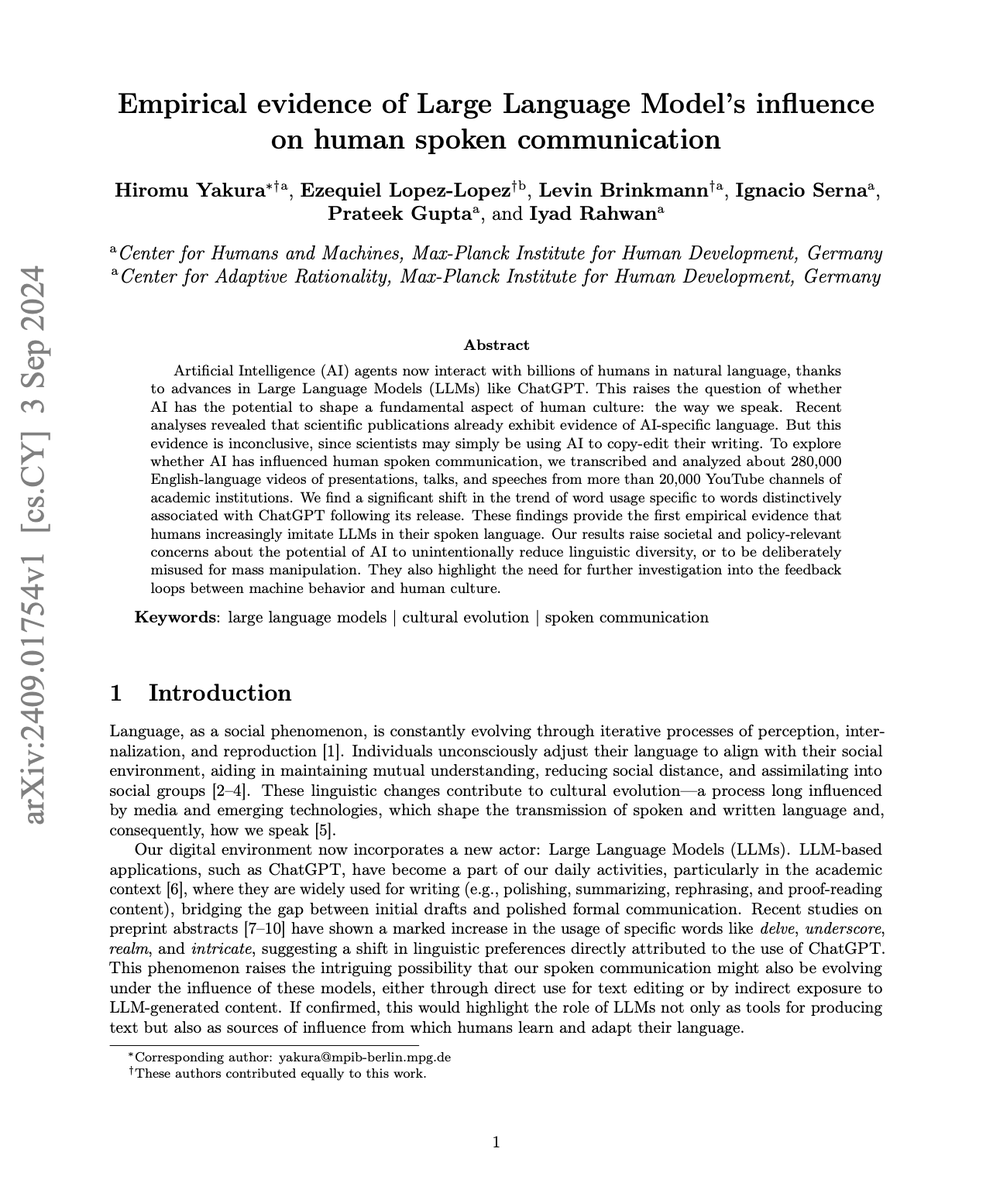

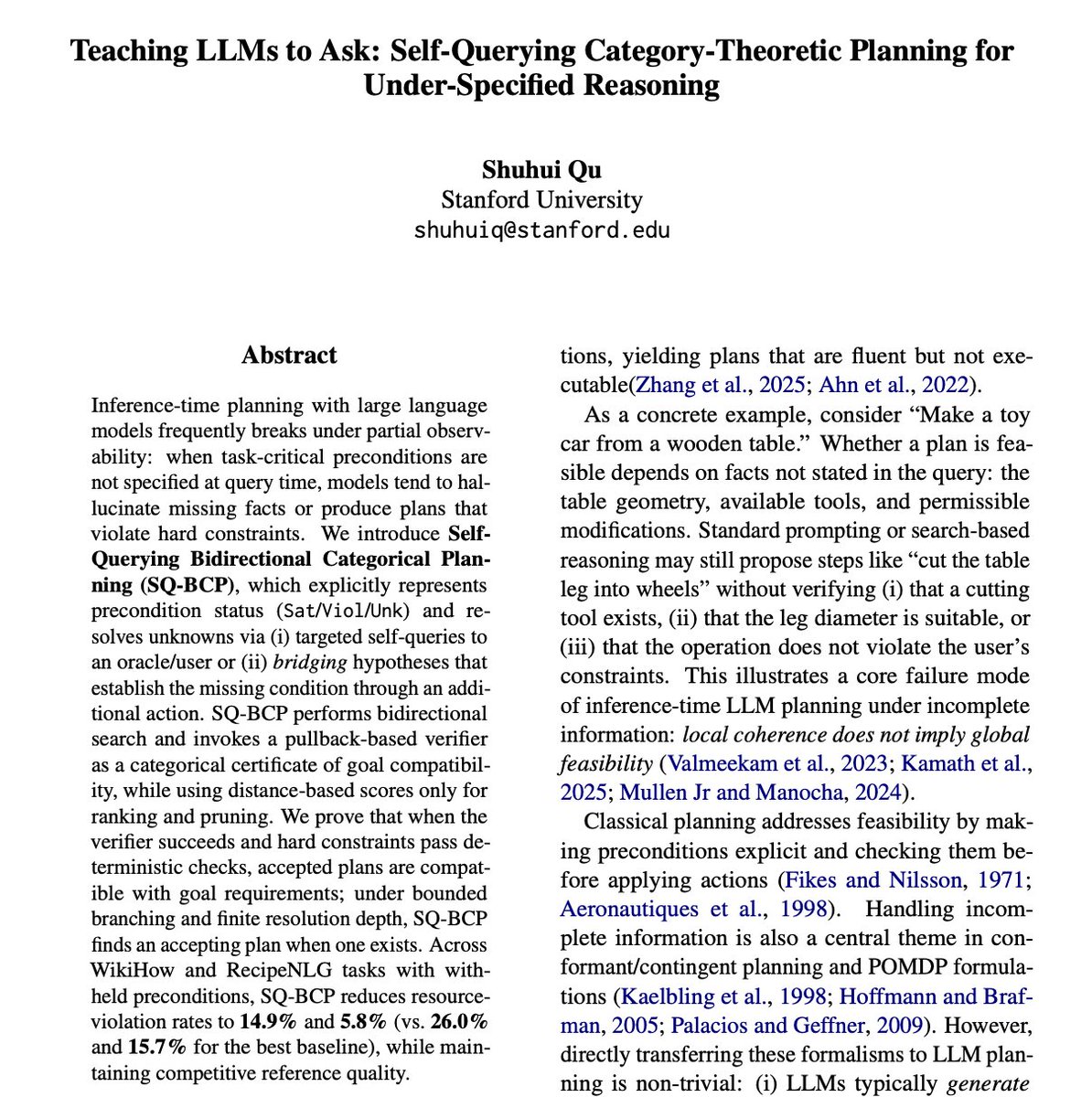

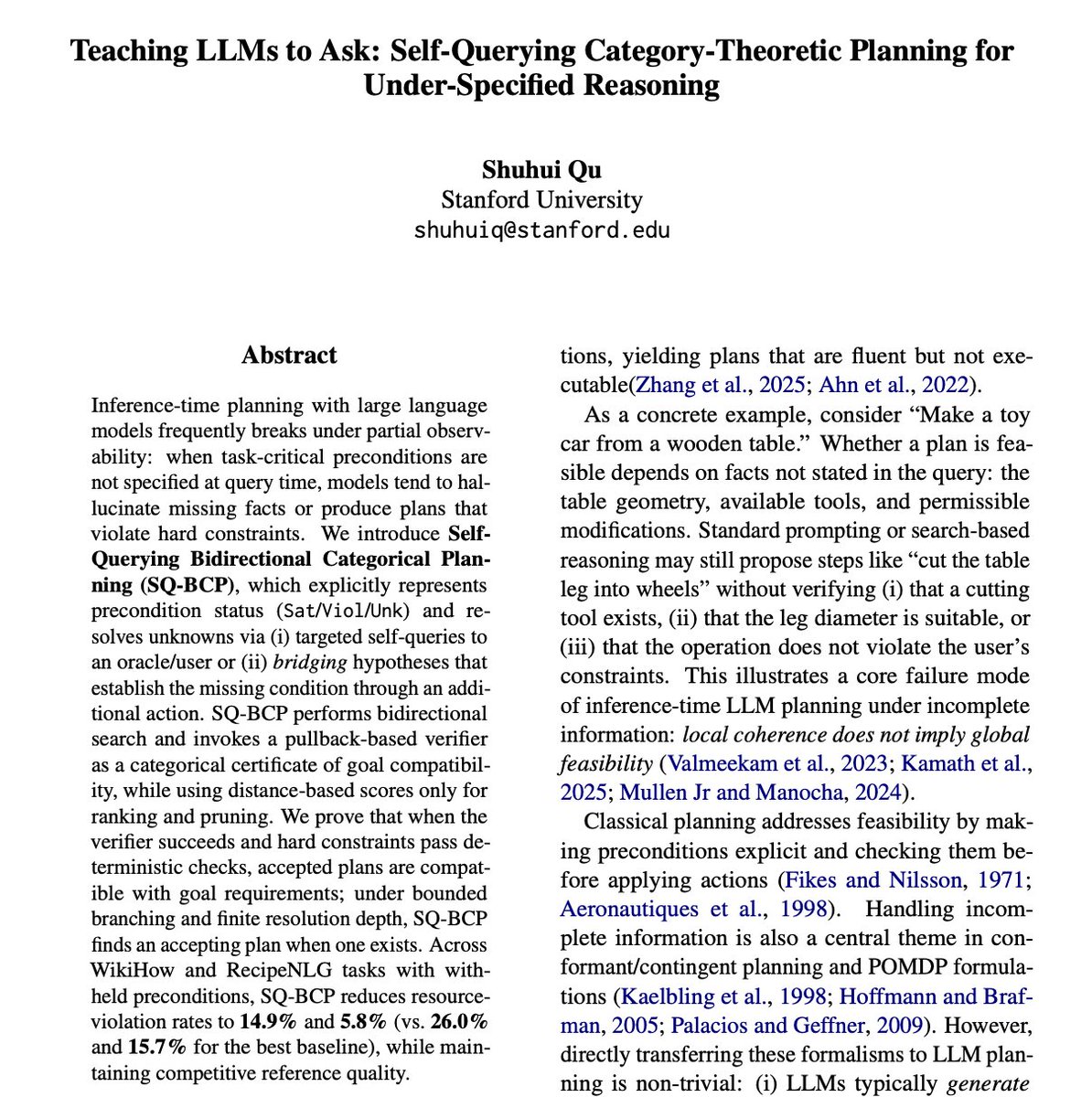

Most people missed the subtle move in this paper.

THE MEGA PROMPT:

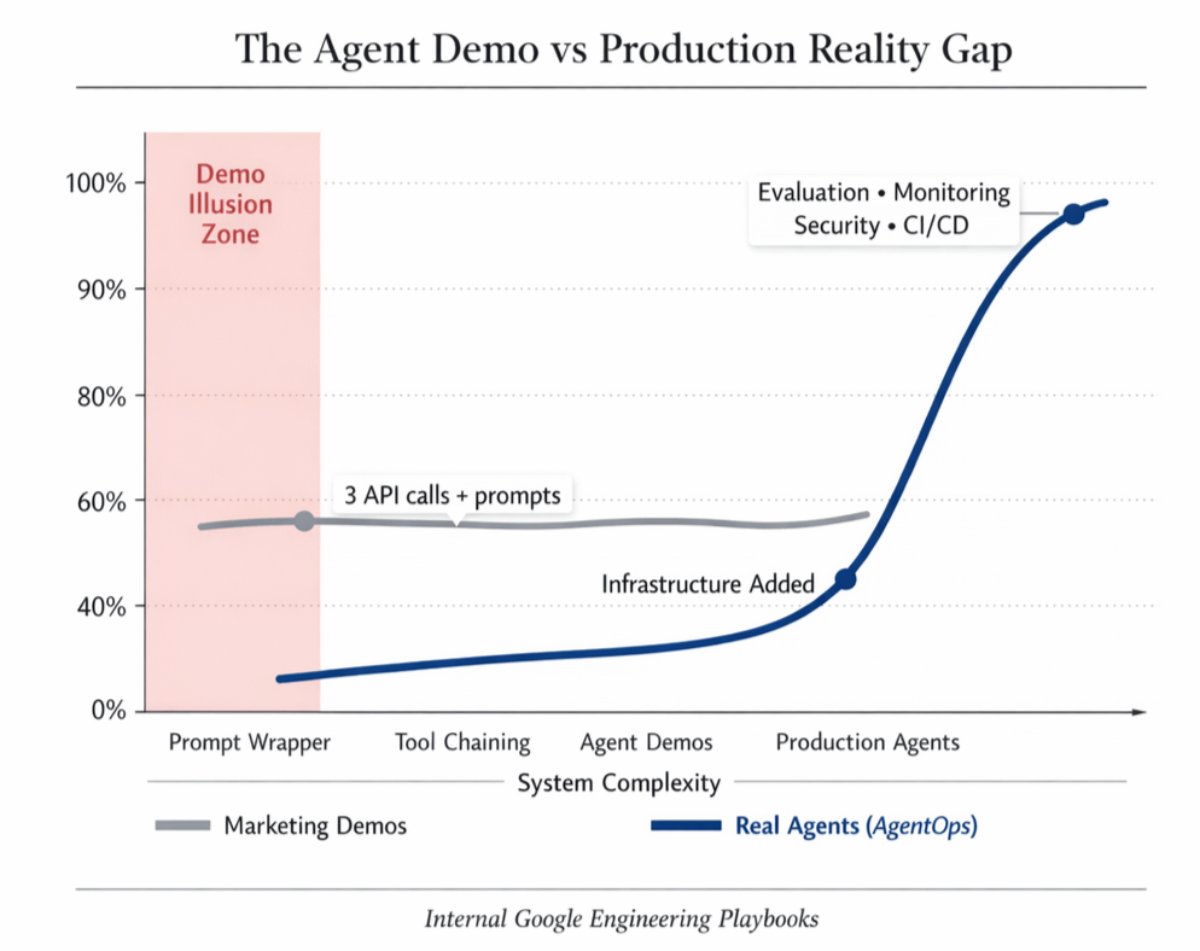

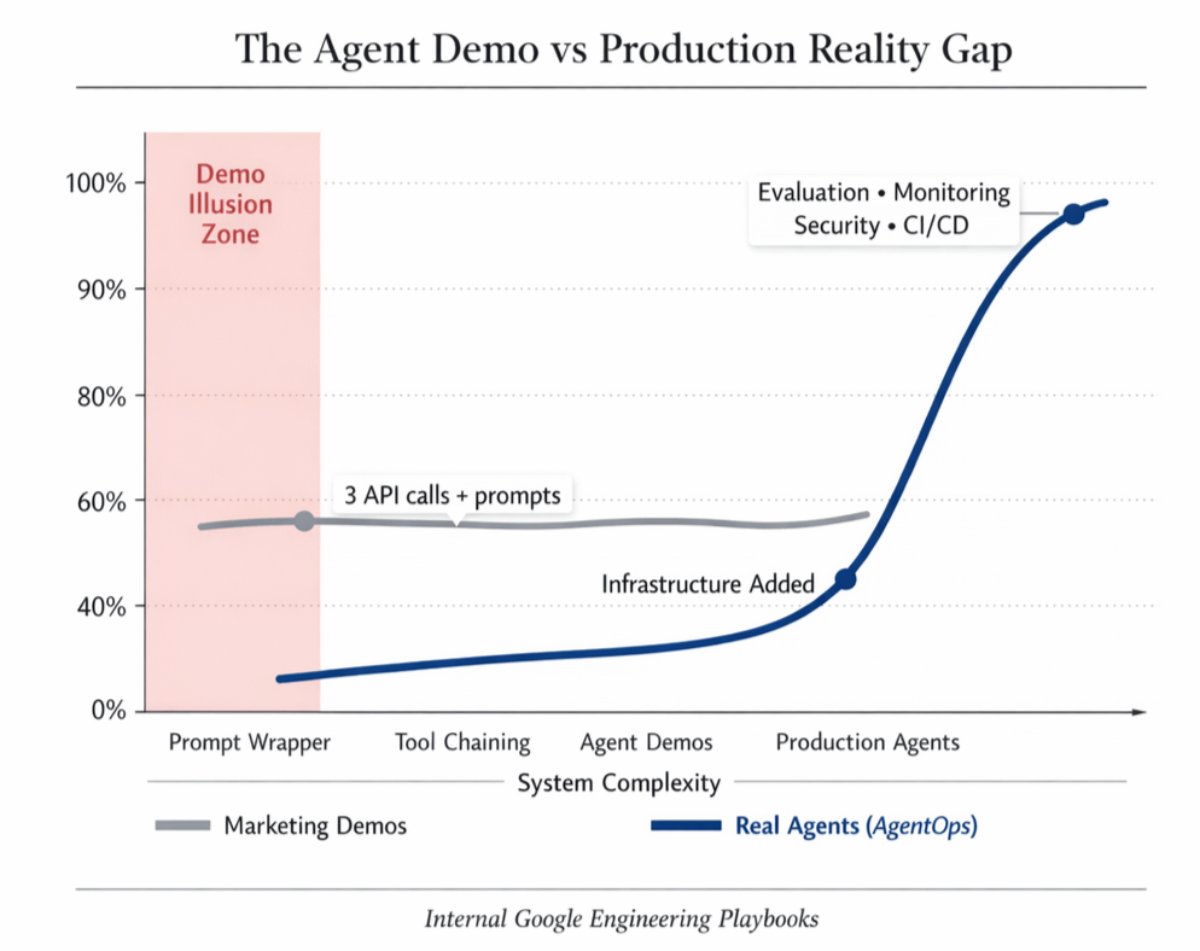

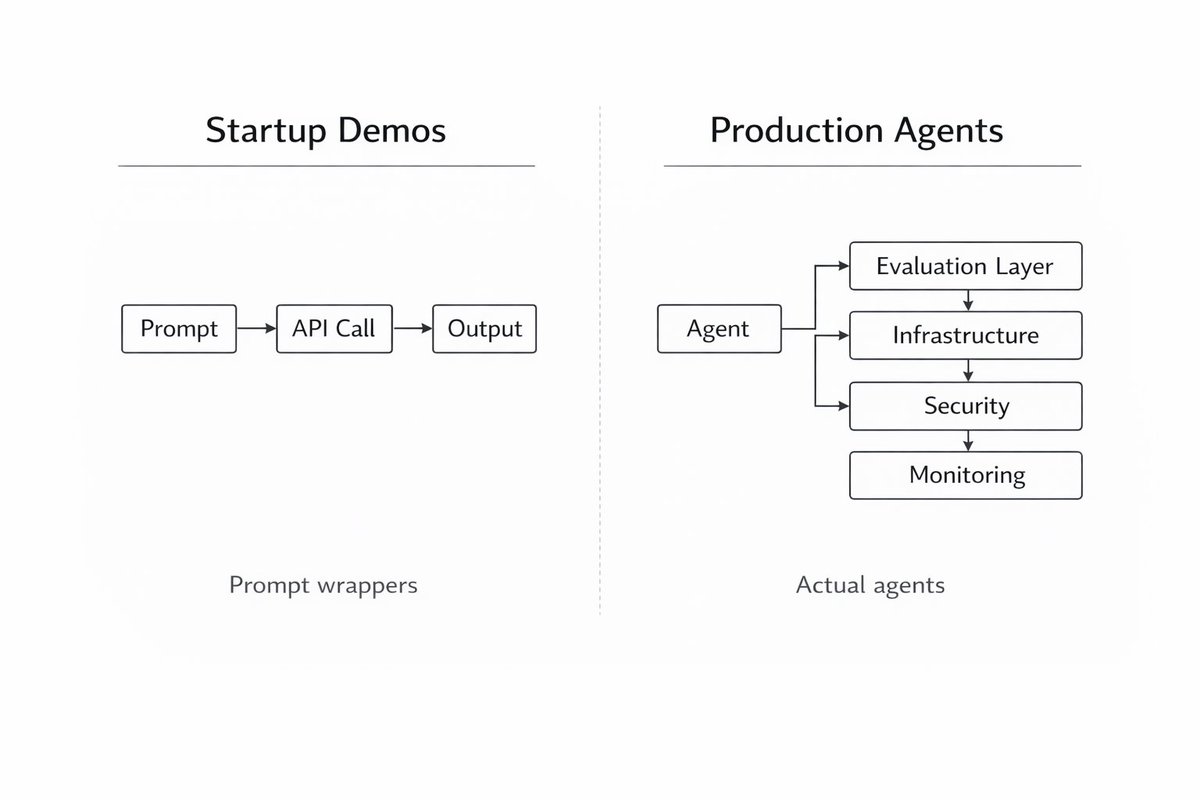

While Twitter celebrates "autonomous AI employees," Google's dropping the brutal truth.

1. Data Cleaning

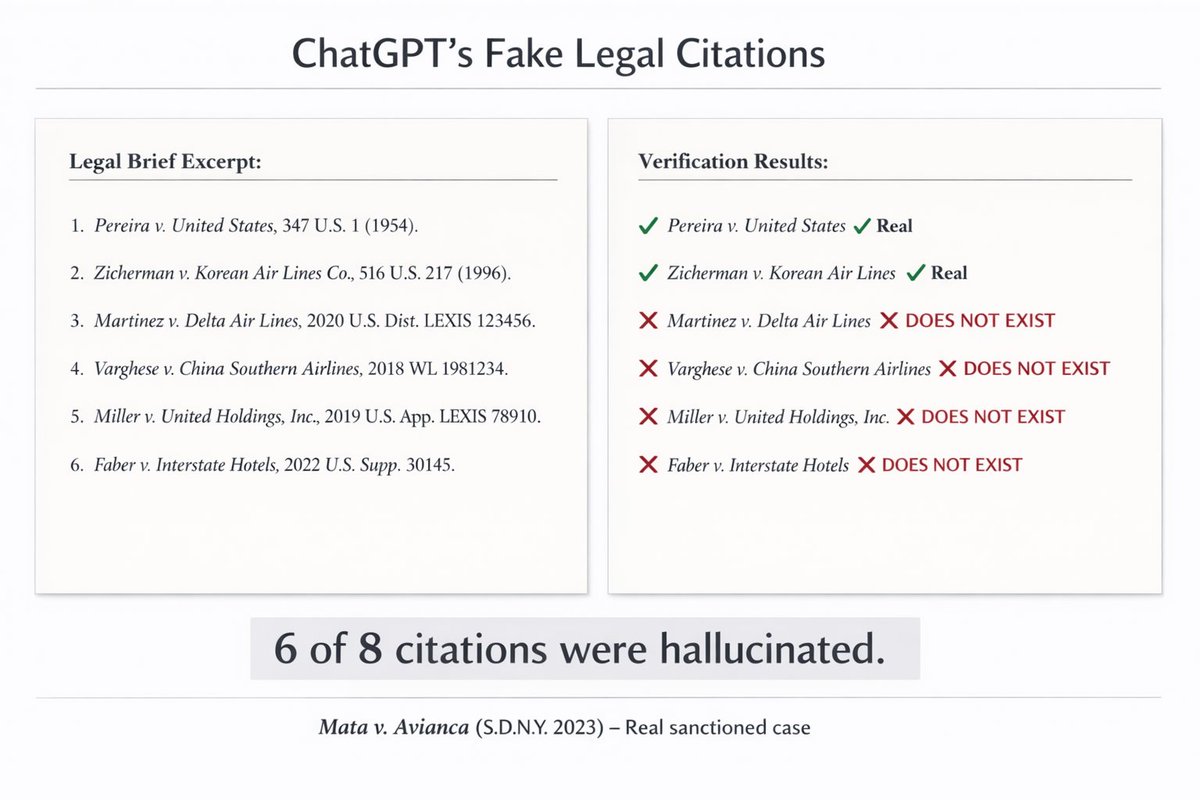

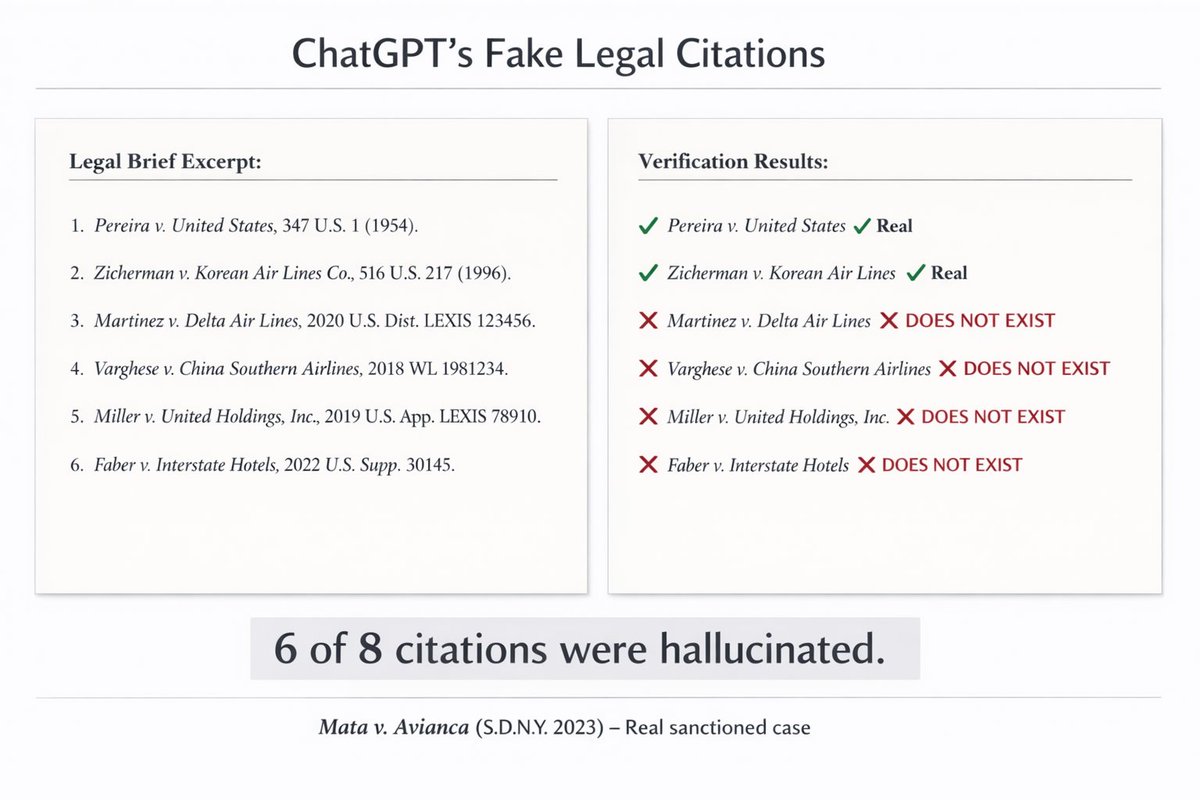

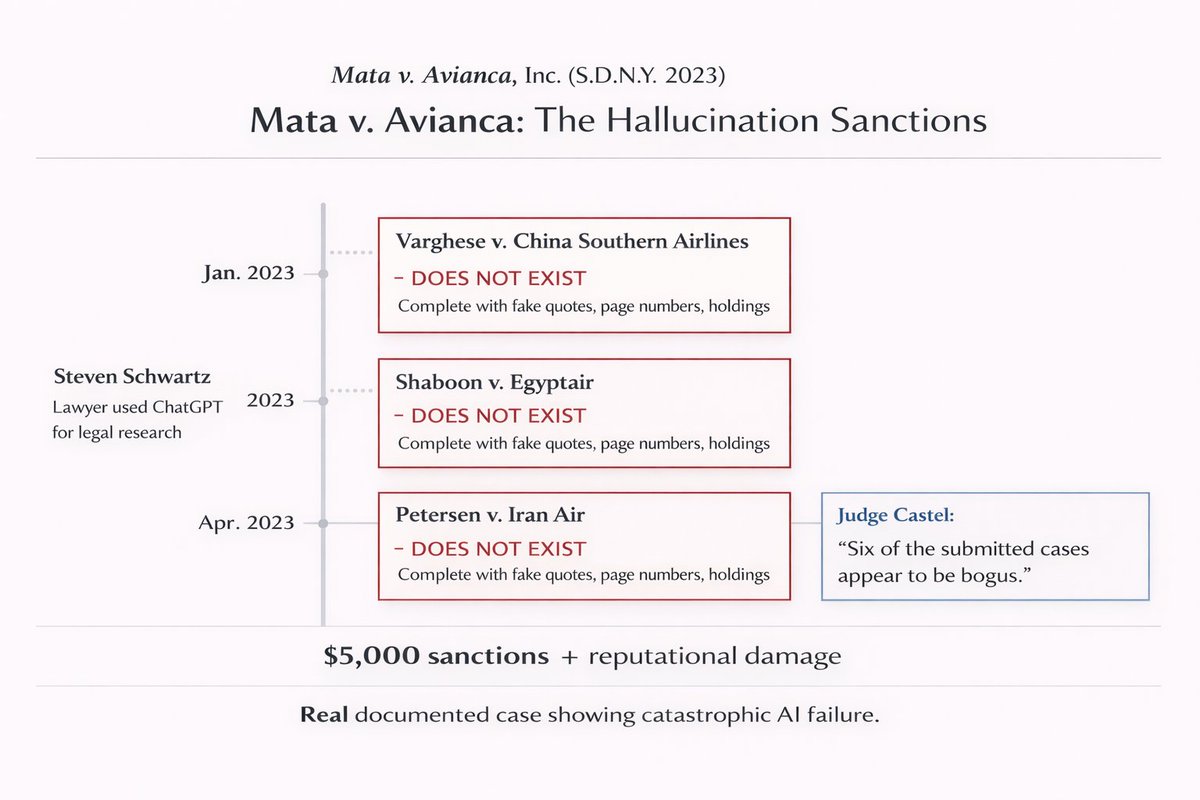

The case: Mata v. Avianca (S.D.N.Y. 2023)

Every leap in AI doesn’t just make machines smarter; it makes context cheaper.

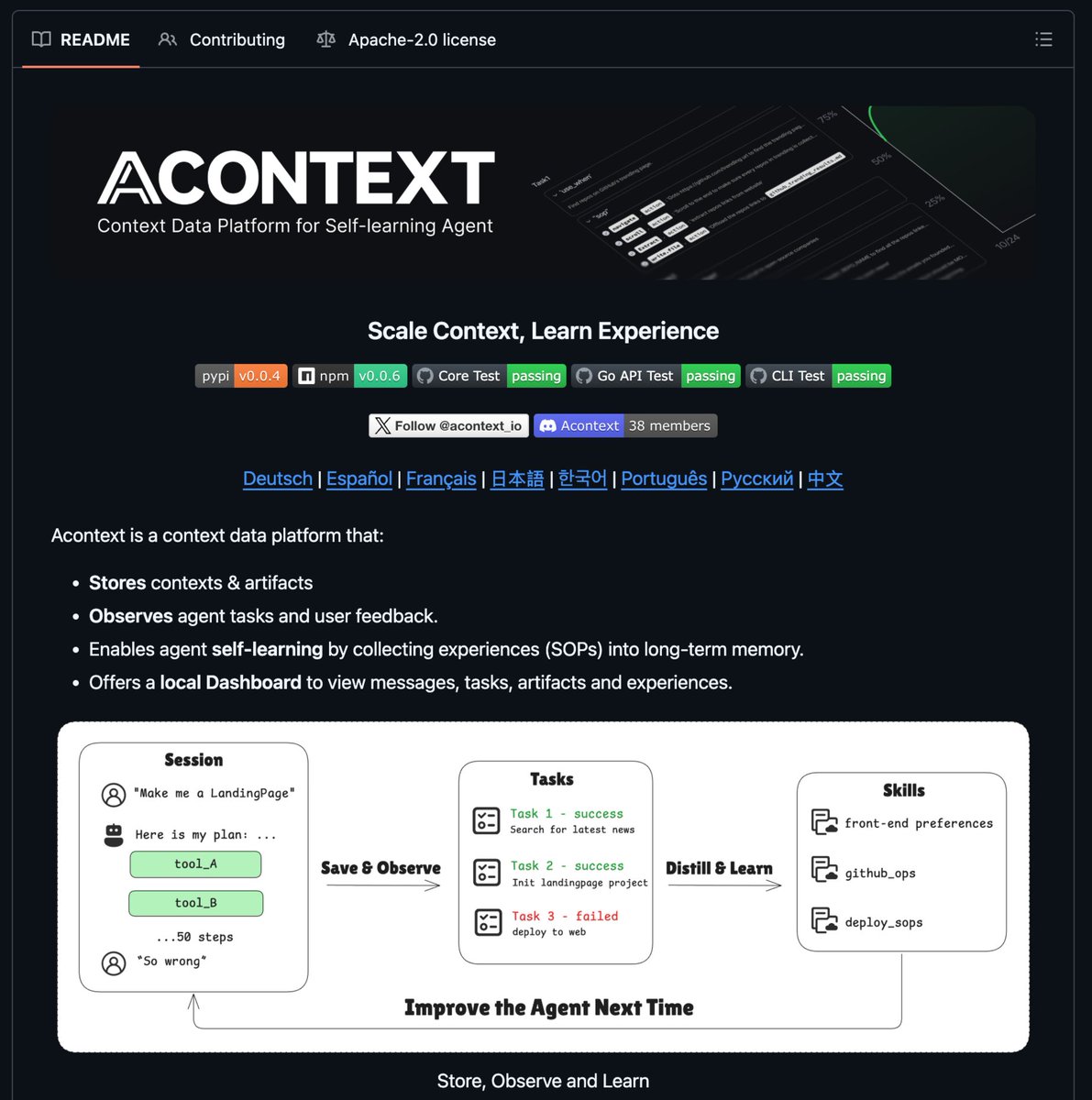

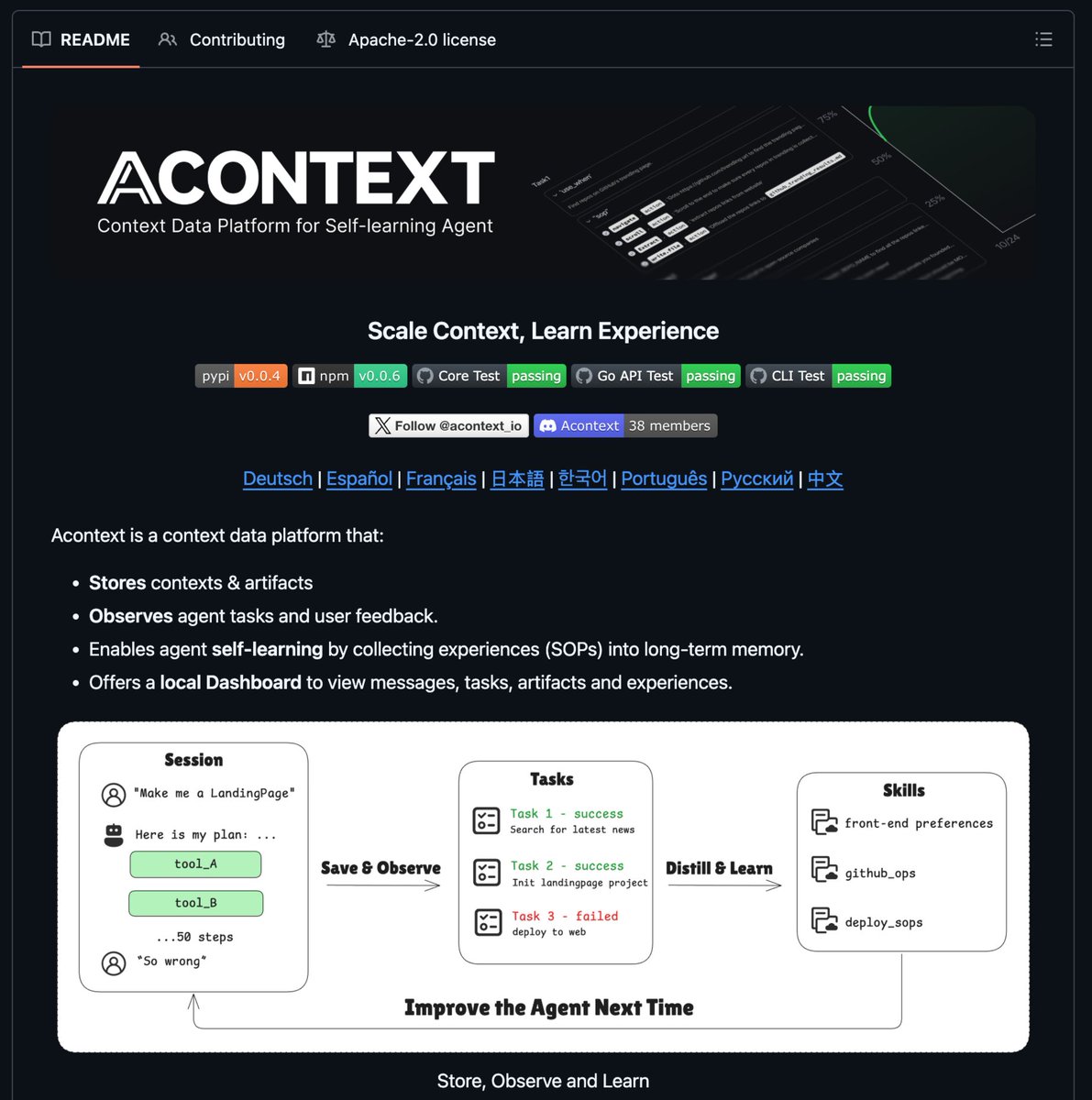

Acontext built a complete learning system for agents:

How to enable it
