Building intelligence that evolves @adaptionlabs. Built @Cohere_Labs, @GoogleBrain, @GoogleDeepmind. ML Efficiency, Multimodal/lingual.

We spent 5 months analyzing 2.8M battles on the Arena, covering 238 models across 43 providers.

What is missing from this conversation is that the success of synthetic data hinges on how you optimize in the data space.

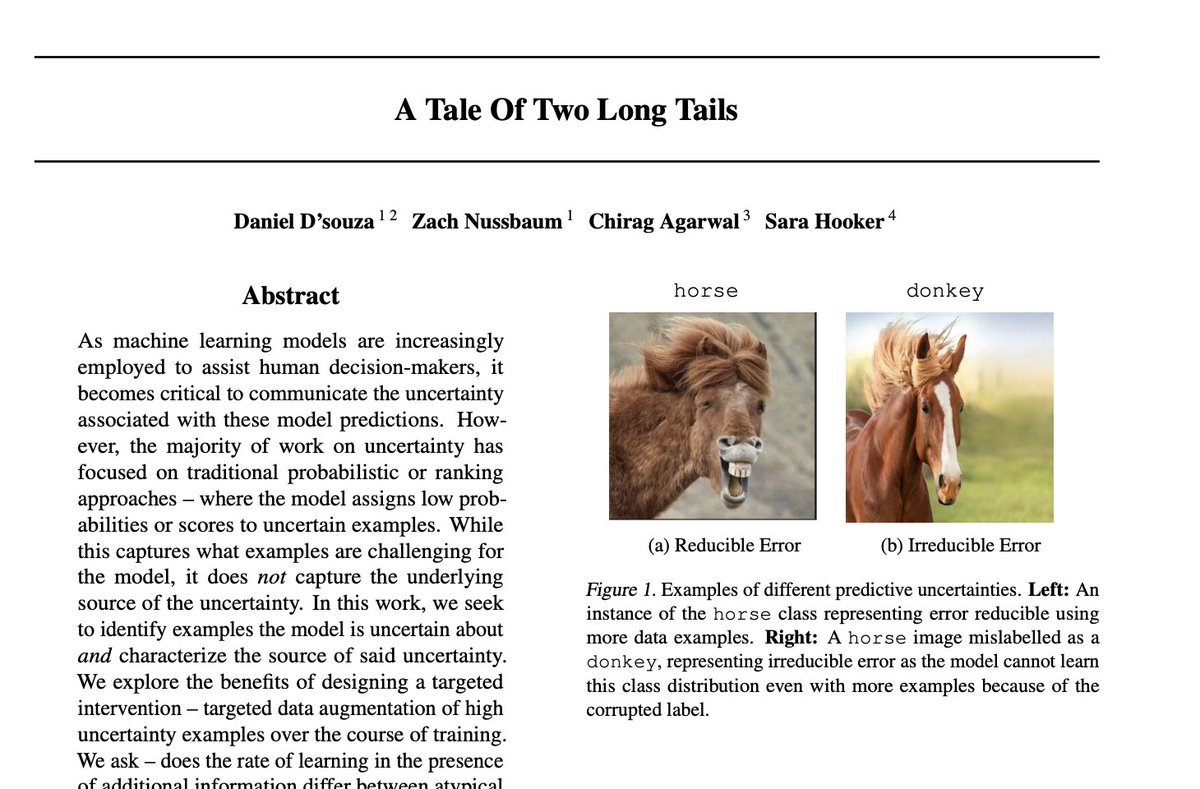

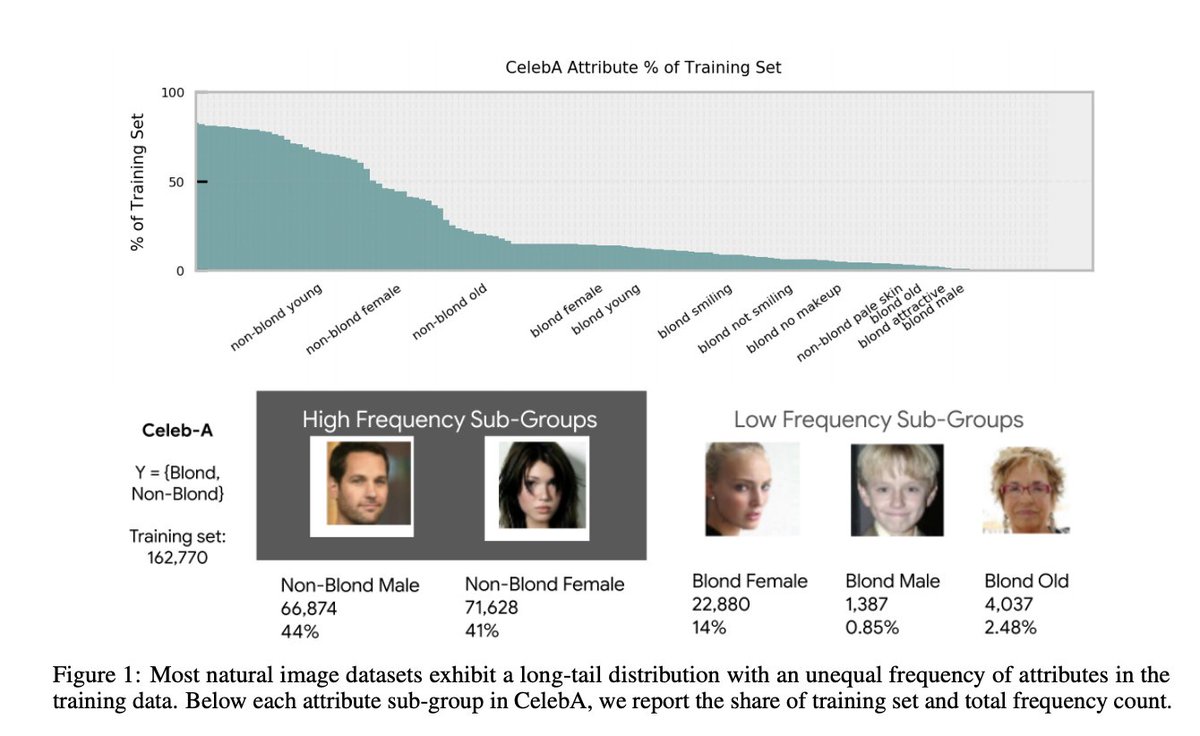

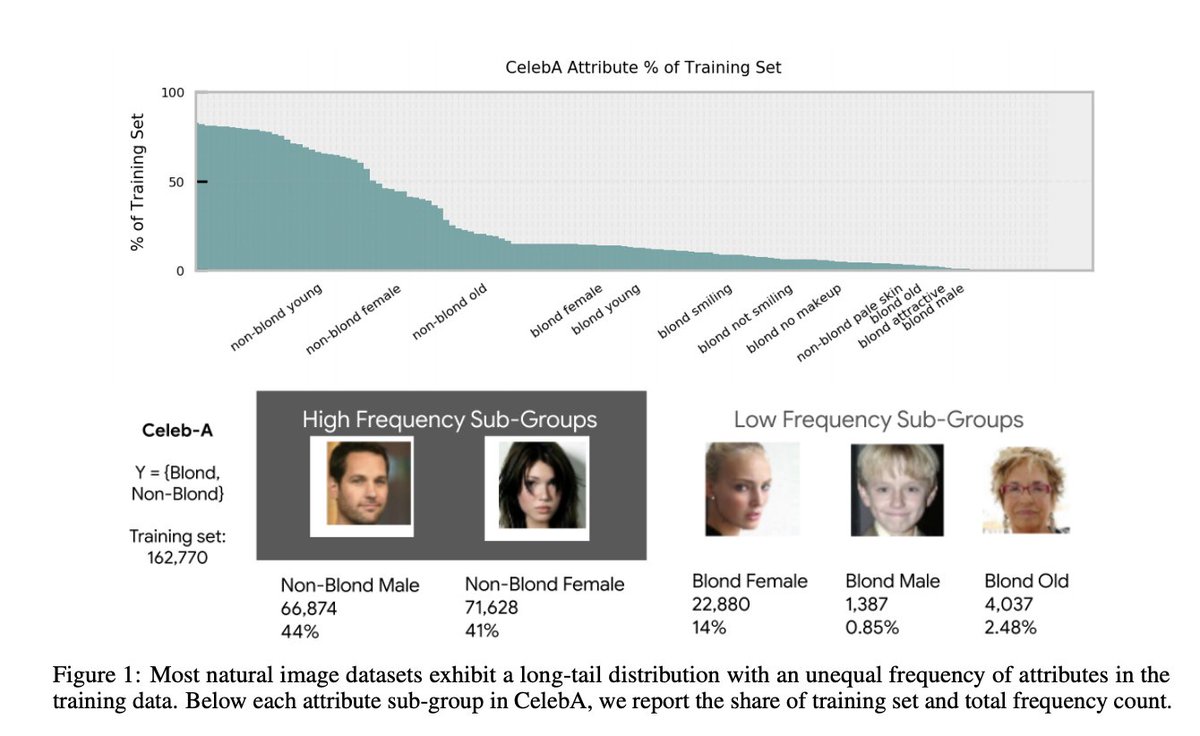

In subset ML network tomorrow, Neil Hu and Xinyu Hu explore where simply prioritizing challenging examples fails -- motivating a more nuanced distinction between sources of uncertainty.

At face value, deep neural network pruning appears to promise you can (almost) have it all — remove the majority of weights with minimal degradation to top-1 accuracy. In this work, we explore this trade-off by asking whether certain classes are disproportionately impacted.
