https://twitter.com/scaling01/status/2008387917899546671

again, not saying it's not smart

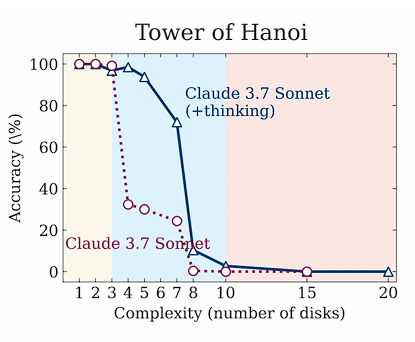

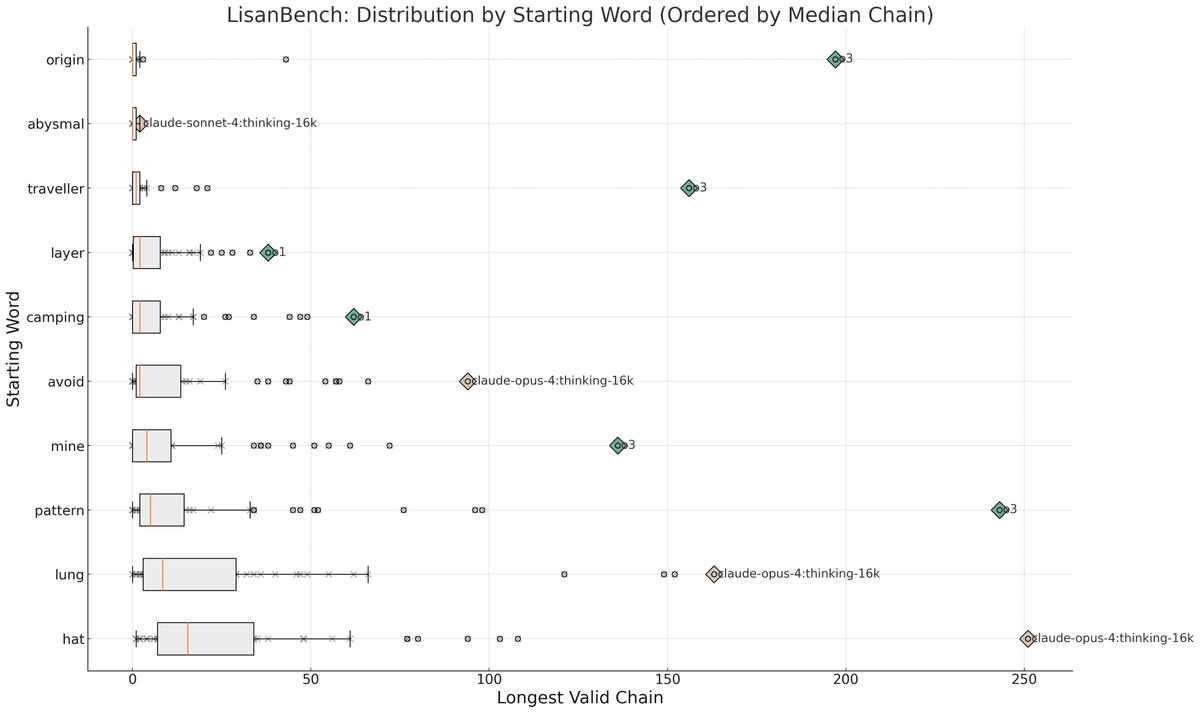

Decomposing helps the model focus more on reasoning because it keeps the problem size smaller, but the model will basically get lost in the algorithm and repeat steps.

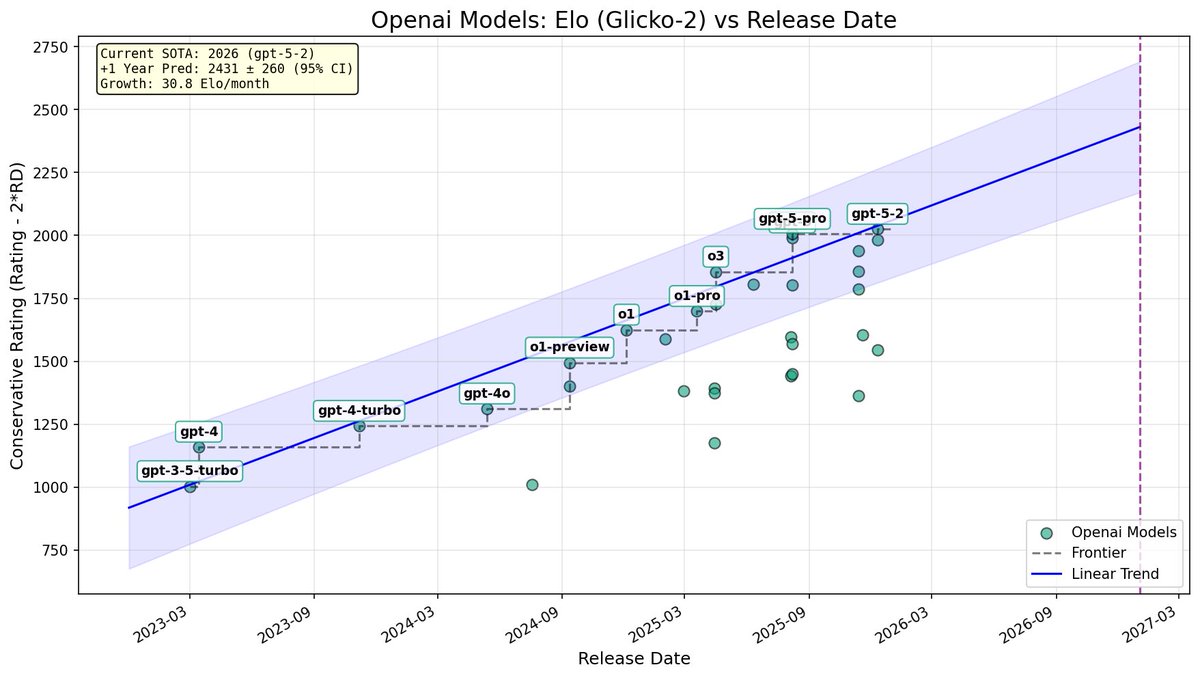

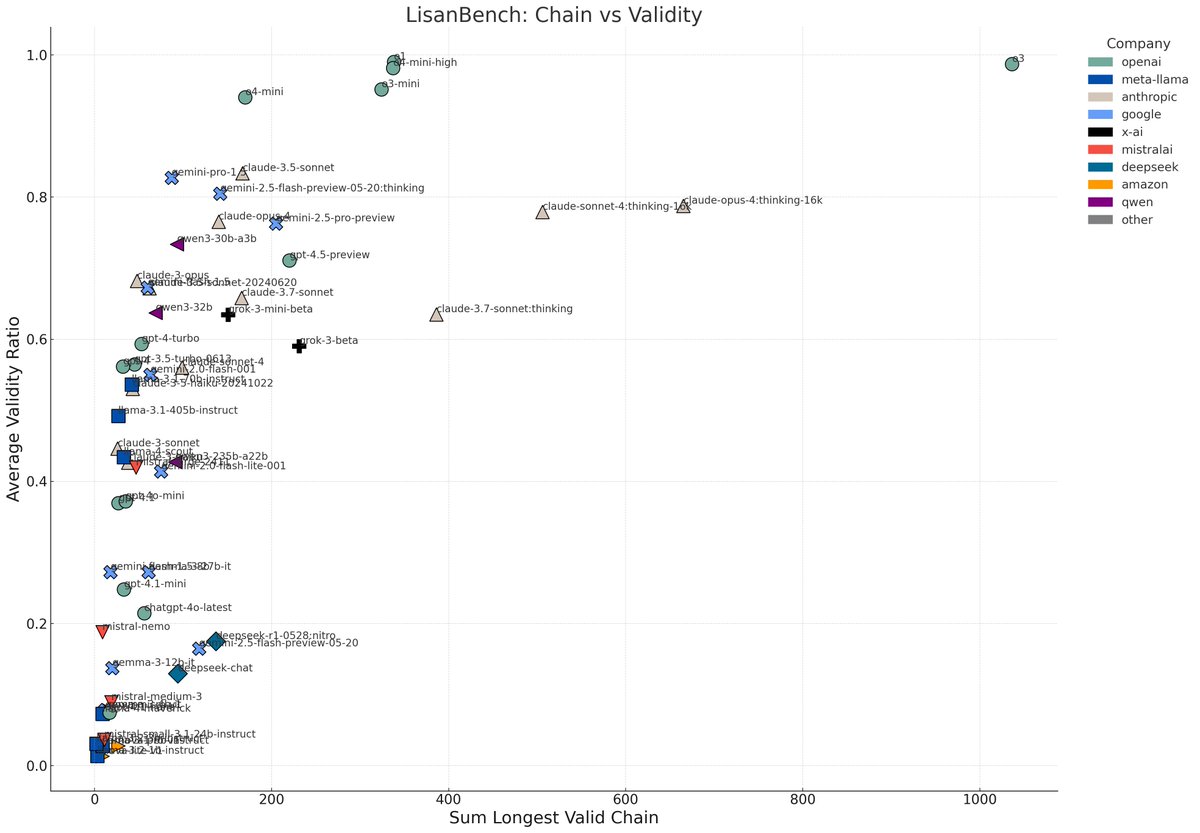

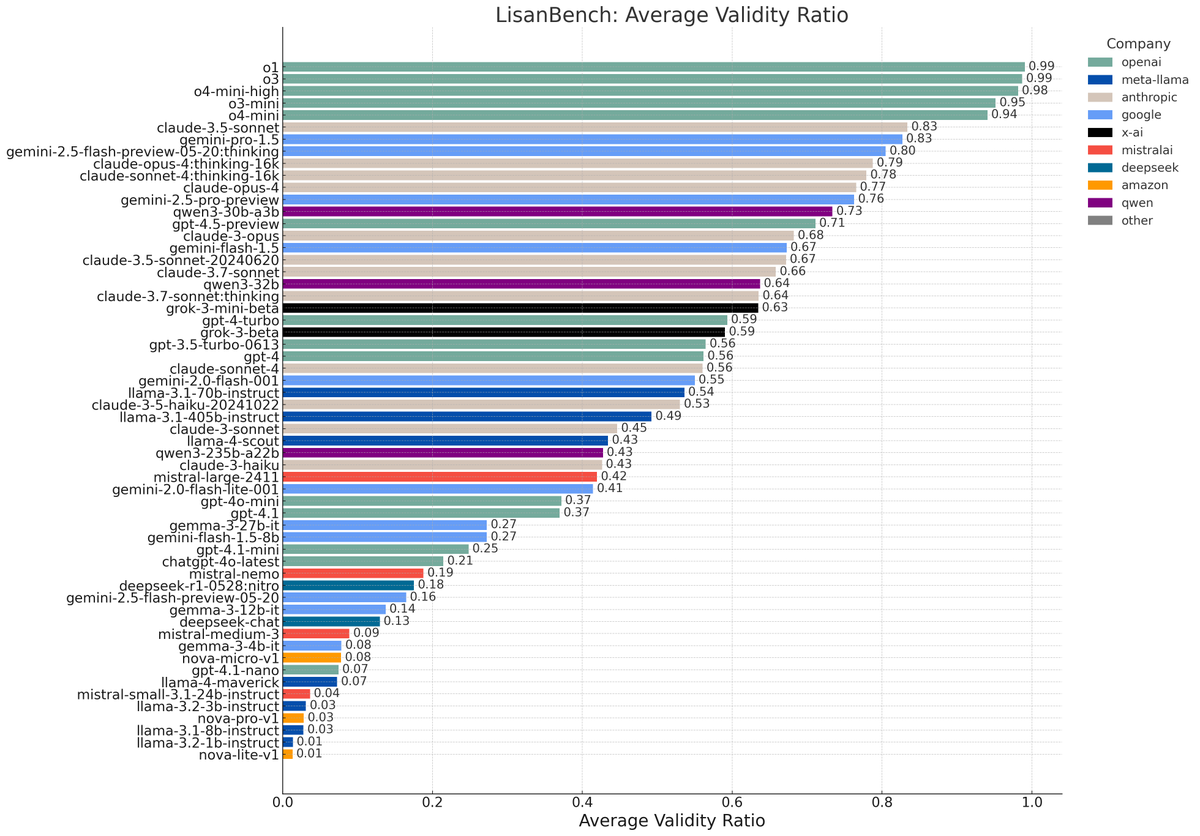

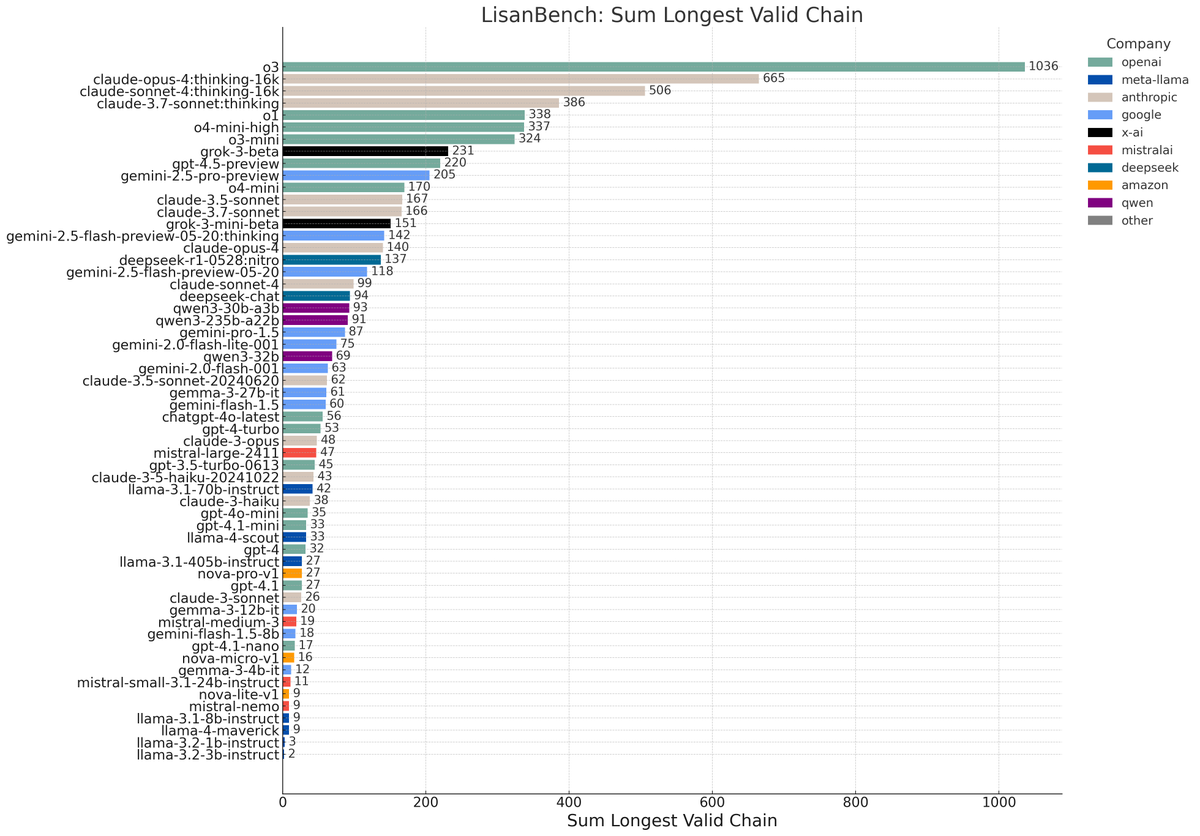

Full Leaderboard:

https://x.com/scaling01/status/1866268414517387299

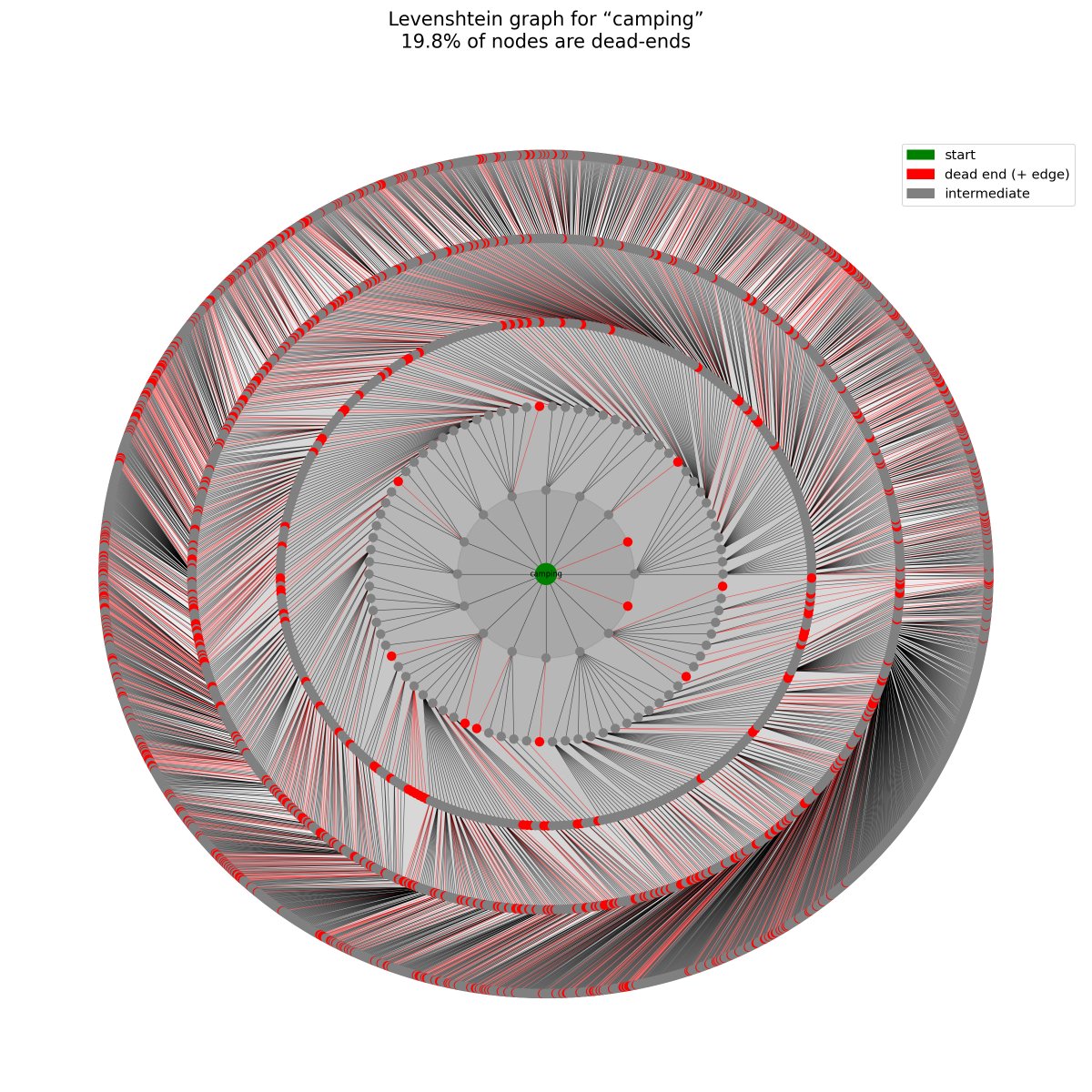

Paper Link: ai.meta.com/research/publi…
