model measurement @OpenAI. Formerly @MotifAnalytics @Lyft and @Facebook. Keywords: Experiments, Causal Inference, Statistics, Machine Learning, Economics.

https://twitter.com/seanjtaylor/status/554867763269742593?s=20&t=YgYjGZy3EzNHfvz8q39rWA

There's a market solution for this.

https://twitter.com/seanjtaylor/status/1242843979873320960

I shared the Meng paper because it’s a nice discussion of how greater sample size doesn’t solve estimation problems. This is part of a strong opinion I have that collecting adequate data is the key challenge in most empirical problems. Some people will not agree with this.

https://twitter.com/flowingdata/status/1184506325272678400

The original data is from the ACS. Nathan used a tool called IPUMS to download the data set: usa.ipums.org/usa/

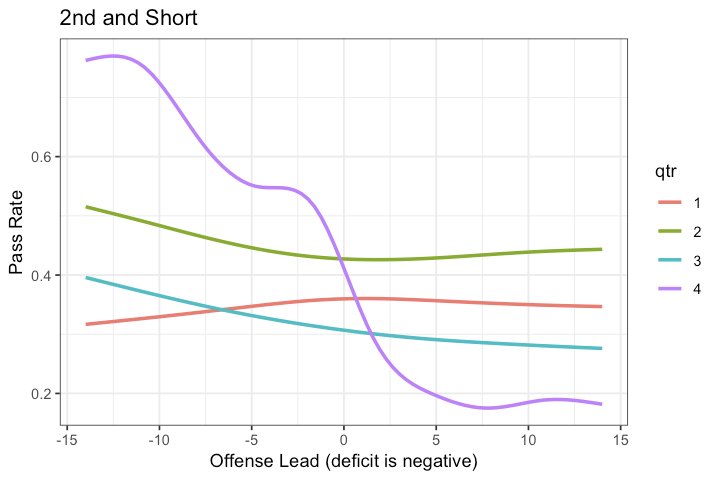

A coach's tendency in this game situation tells us a lot about what they're optimizing for. (Credit to @bburkeESPN for this idea!) The goal of football isn't to produce 1st downs but to score, so we may expect a >50% pass rate. Most coaches don't pass that often unless they are losing and it's late in the game.
