Patent Analyst 🧐 | Future Strategist | Tech Story Teller | Youtube channel : https://t.co/6hW7CCkxa9


1. Core Innovations


https://twitter.com/testingcatalog/status/2001243764845896054 👀

If GPT-5.2 turns out to be disappointing this time, and Grok 4.20, slated for release around Christmas, performs better than expected, Grok could end up landing the finishing blow. 🤣🤣🤣


1. Core Innovations


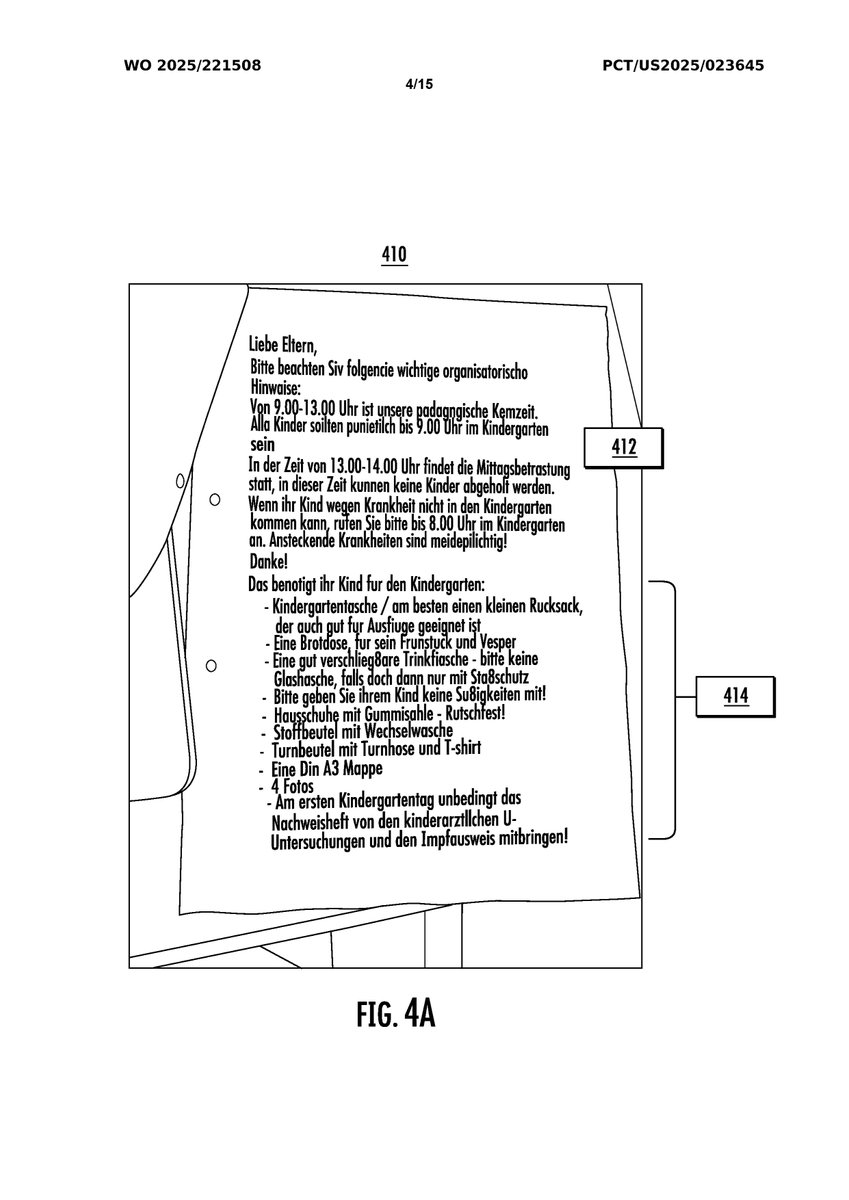

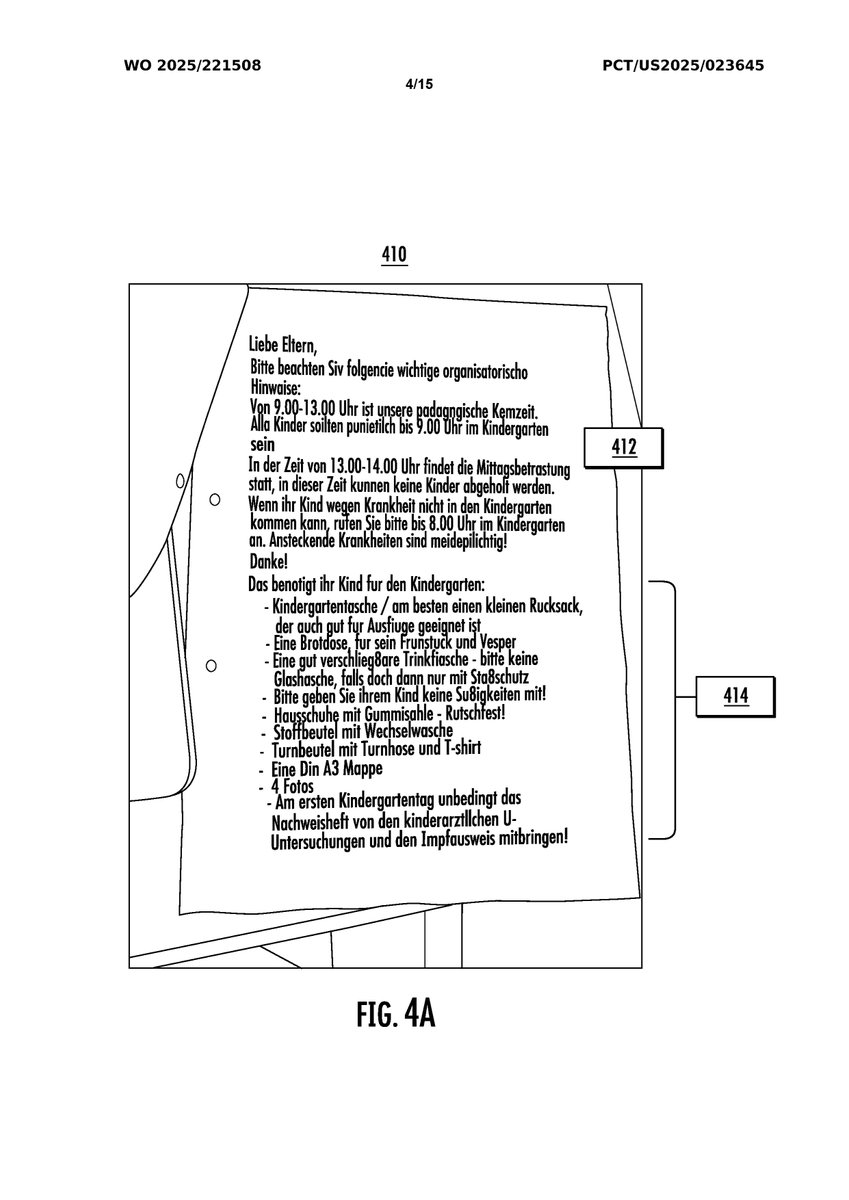

https://twitter.com/seti_park/status/1974281030531232099

1️⃣ WO2025221508A1: A machine-learning technique that predicts text alignment in order to provide an AR translation interface.

1. Core Innovations


https://twitter.com/seti_park/status/1975839444919066789

HUMANOID ROBOT WITH ADVANCED KINEMATICS

https://twitter.com/gonogo_korea/status/1975816225013047338

Target No. 1: The body structure of Figure 03 🤖🦾🦿

https://twitter.com/figure_robot/status/1975354154832015518


Huh? 👀❗️ https://twitter.com/googledeepmind/status/1973027679227040014

1. Core Innovations


1. Core Innovations


1. Core Innovations:


Free-Standing Lithium Metal Electrode Fabrication Using Direct Elemental Lithium


1. Core Innovations:


Modular Vehicle Architecture for Assembling Vehicles


Tesla and Argonne's High-Voltage Battery Electrolyte Additives


Patent US 2024/0164089 A1 describes a system and method for providing access to compute resources distributed across a group of satellites. The technology aims to offer cloud services similar to AWS or Azure by using a large-scale satellite constellation such as Starlink.
