@GoogleDeepMind. Past: @MetaAI, @OpenAI, @unitygames, @losalamosnatlab, @Princeton etc. Always hungry for intelligence.

https://x.com/suchenzang/status/1995452641430651132

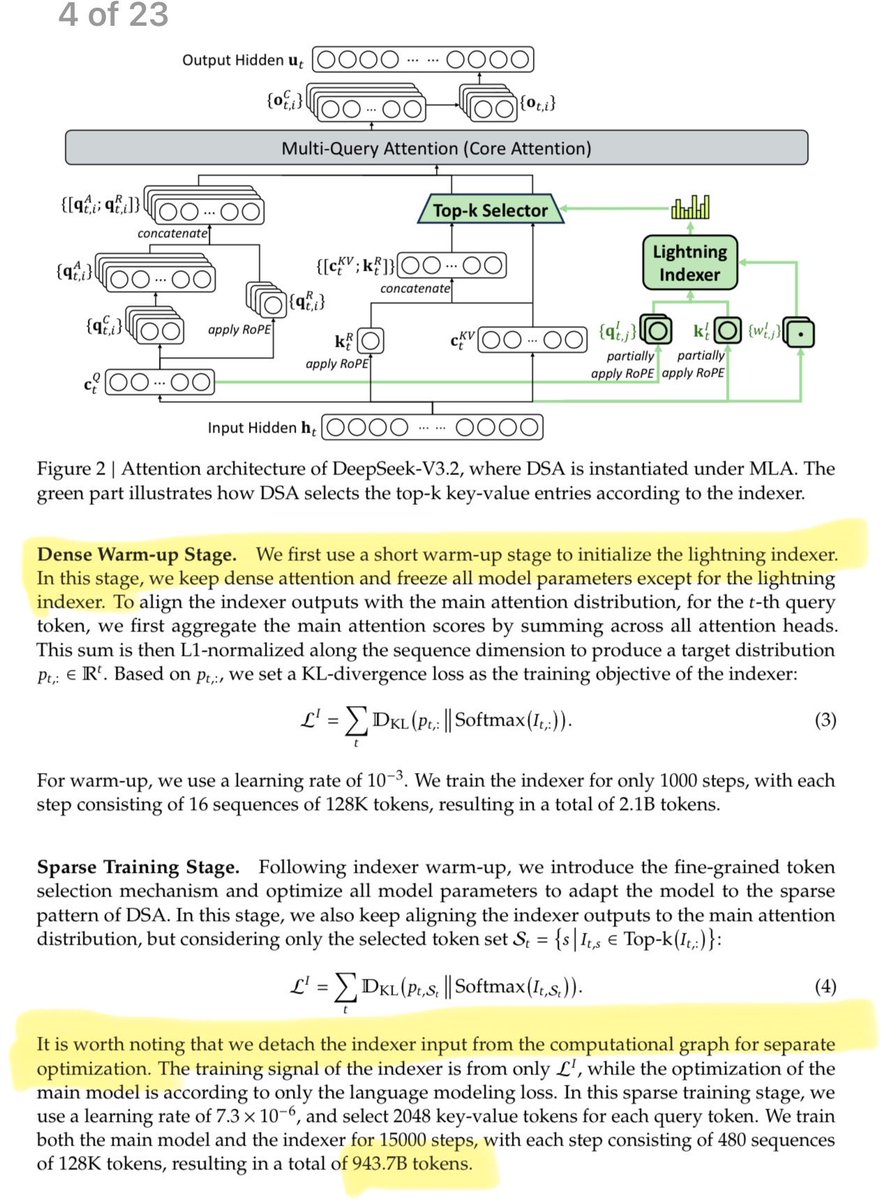

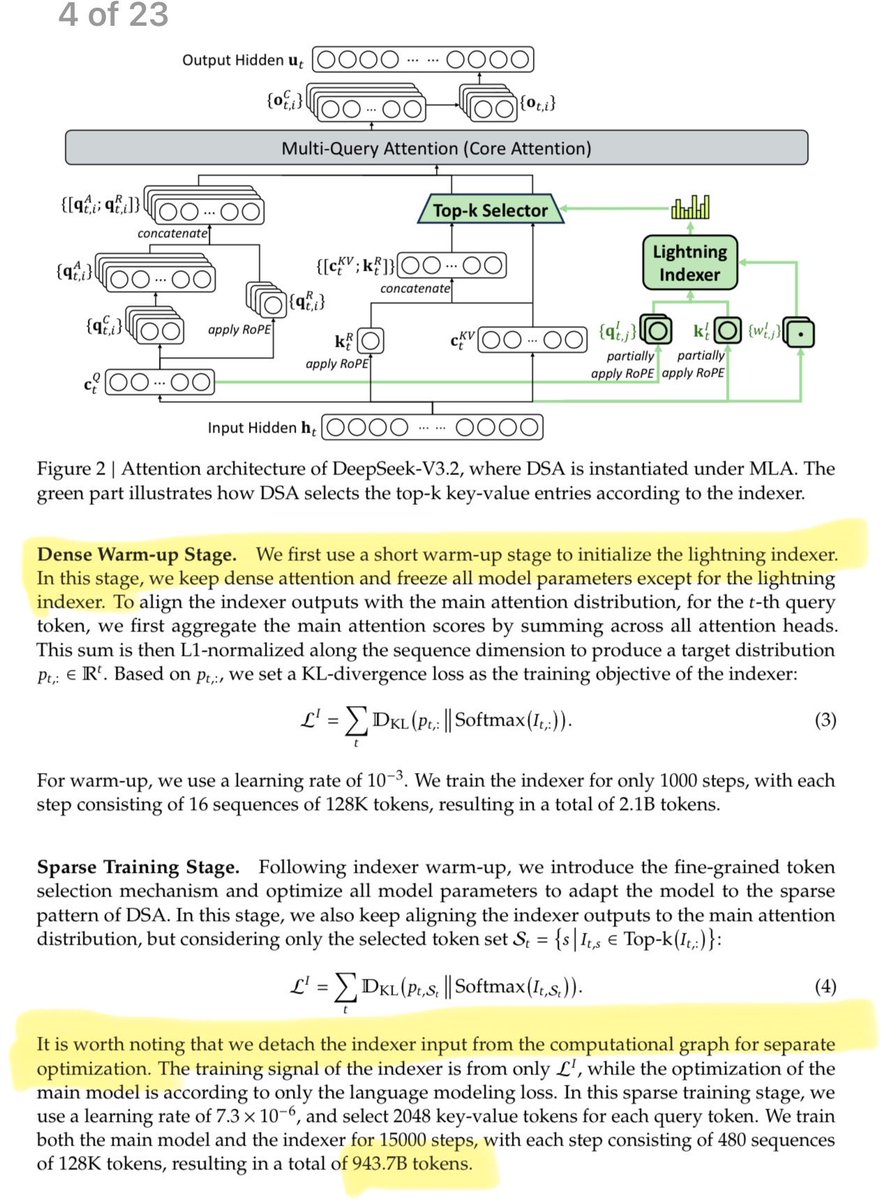

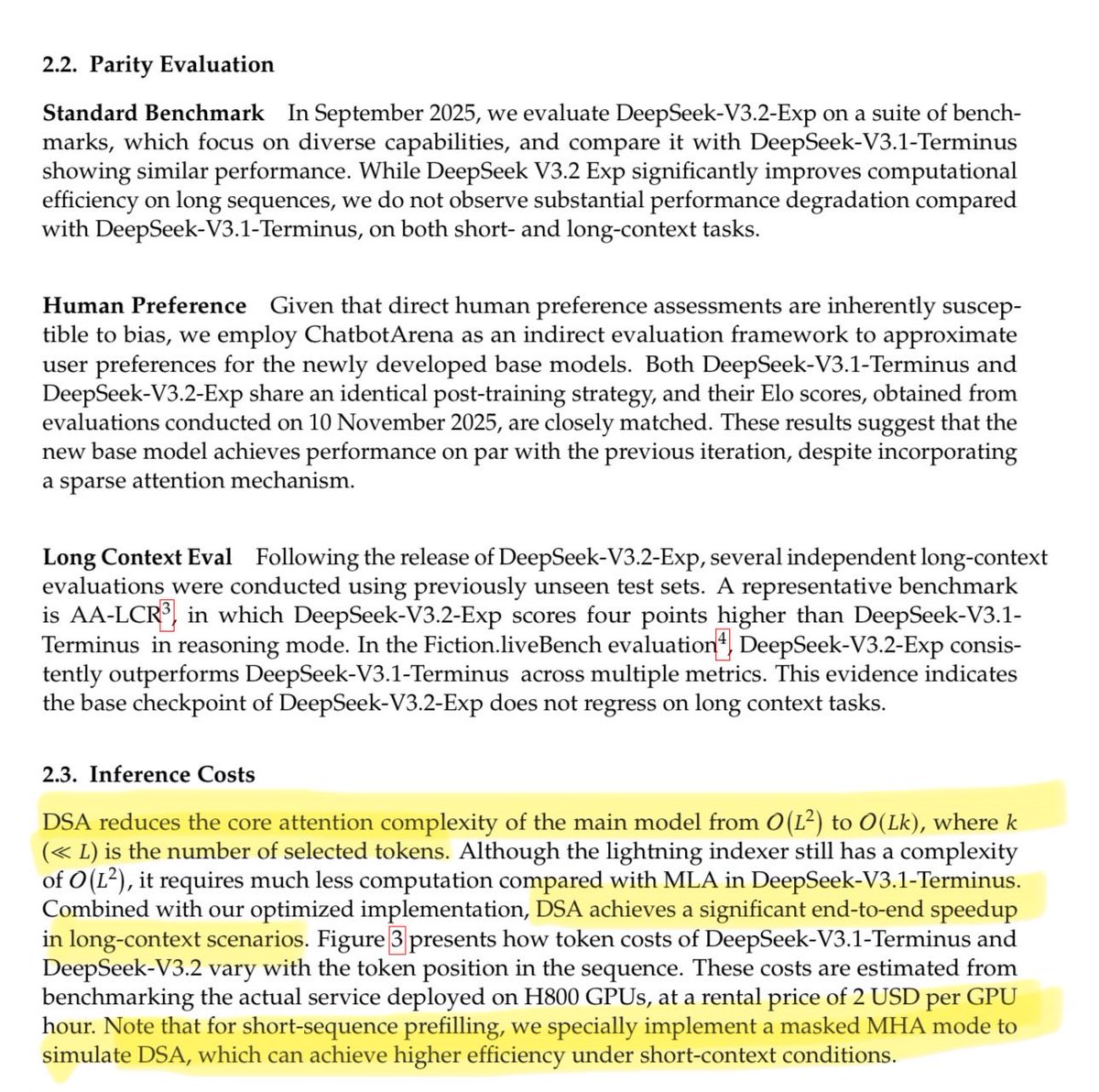

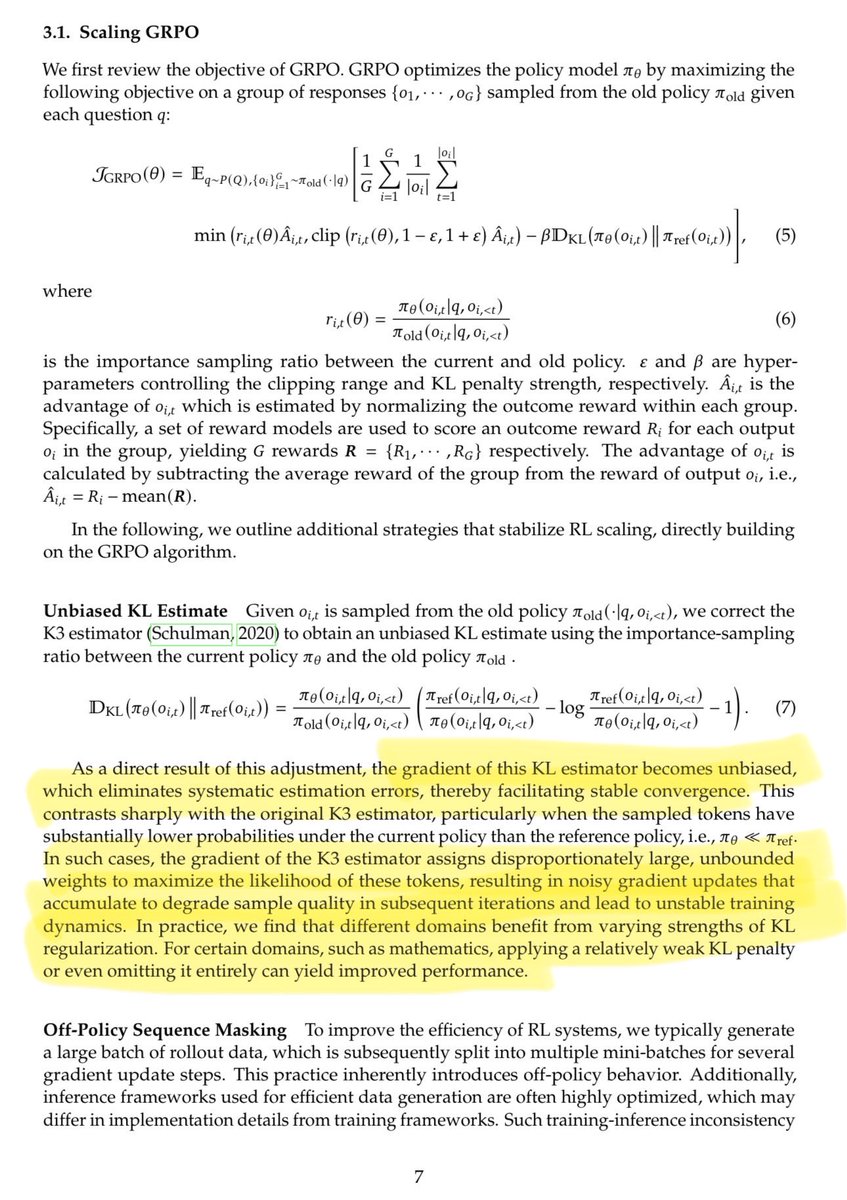

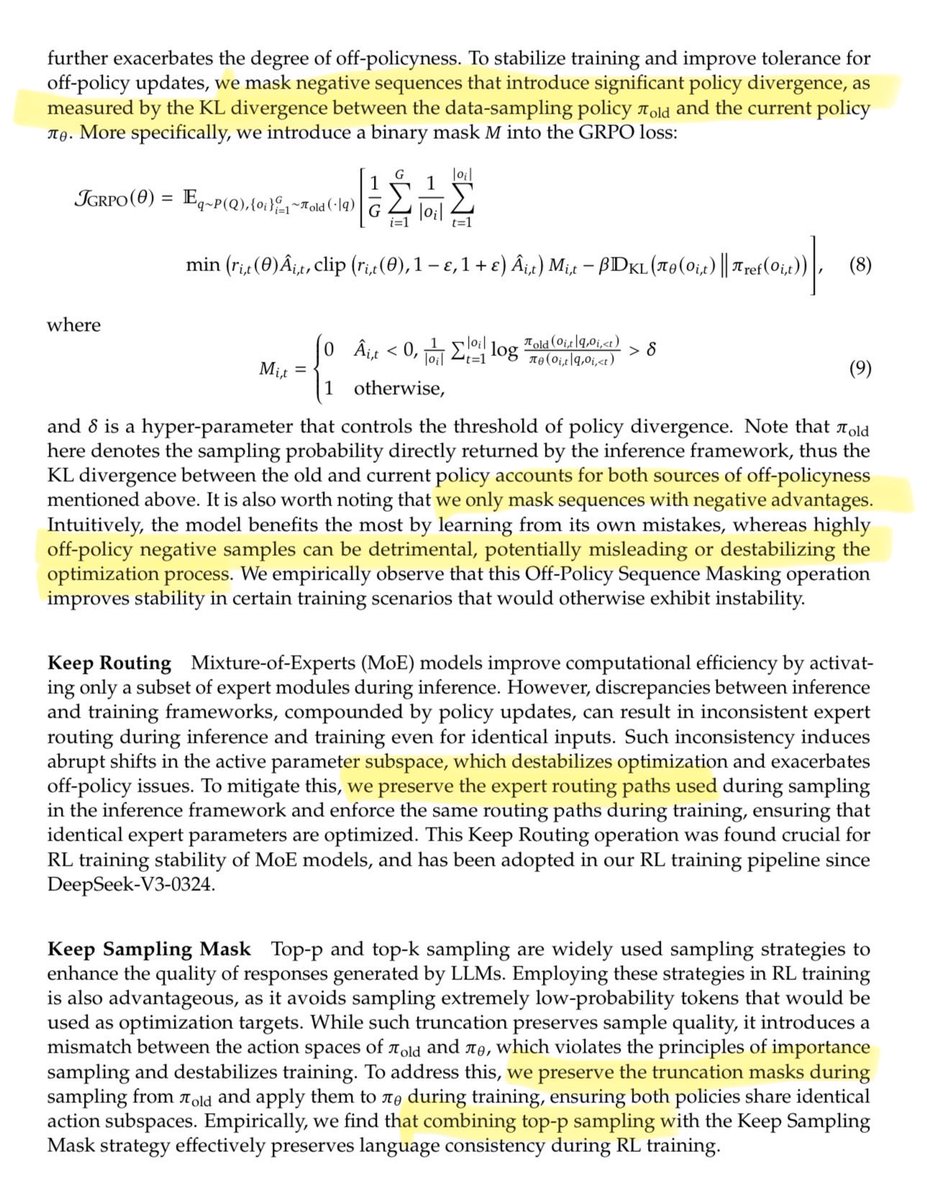

They also make several innovations to stabilize RL training (far beyond what that other "open bell labs" place published in blog posts 👀):

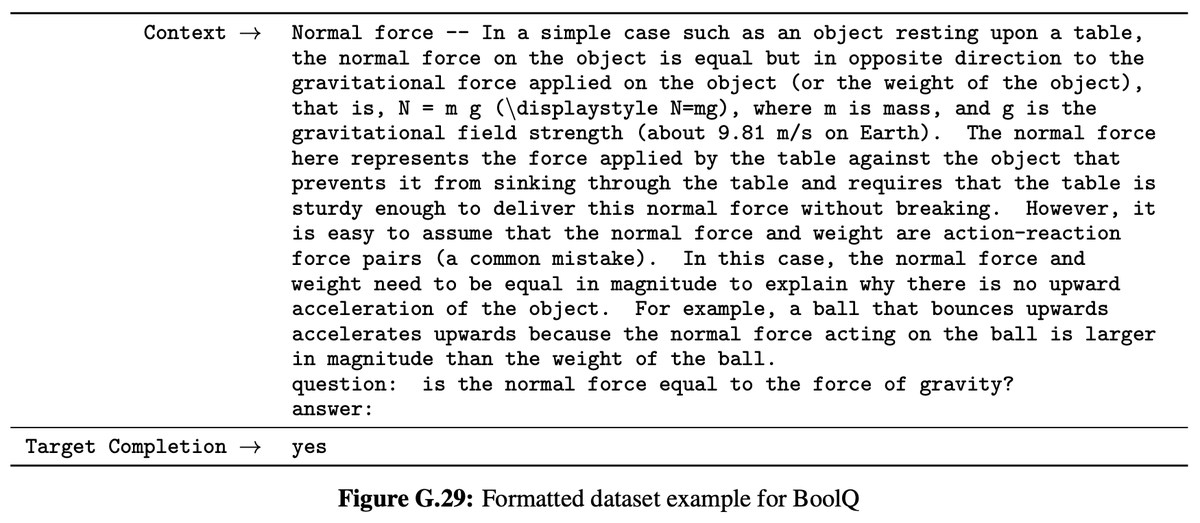

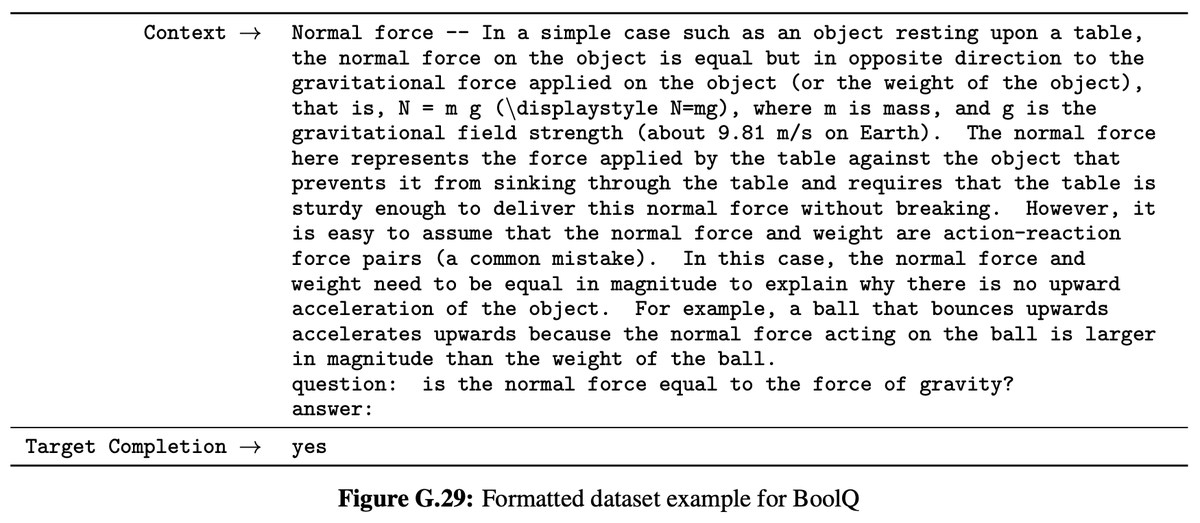

https://twitter.com/drjwrae/status/1617033514037411847

First up: BoolQ. If you download the actual benchmark, it's true/false completions. GPT-3 swaps in yes/no instead. Why? Well, when we did the same swap to yes/no, we saw a +10% accuracy jump on this benchmark.
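To make the swap concrete, here is a minimal sketch of how the two answer vocabularies change the completions a model gets scored on. The prompt template and the helper name `boolq_candidates` are assumptions for illustration, not the format used by GPT-3 or by our eval code; only the true/false versus yes/no label choice comes from the thread.

```python
def boolq_candidates(passage: str, question: str, style: str = "true_false") -> dict:
    """Build the two candidate completions for one BoolQ example.

    `style` picks the answer vocabulary:
      - "true_false": the labels as shipped with the benchmark
      - "yes_no":     the swapped labels
    The prompt layout below is a hypothetical template.
    """
    labels = {
        "true_false": ("true", "false"),
        "yes_no": ("yes", "no"),
    }
    positive, negative = labels[style]
    prompt = f"{passage}\nquestion: {question}?\nanswer:"
    # An evaluator would score both strings and pick the more likely one.
    return {True: f"{prompt} {positive}", False: f"{prompt} {negative}"}


example = boolq_candidates(
    "The sky appears blue because of Rayleigh scattering.",
    "is the sky blue",
    style="yes_no",
)
print(example[True])
```

Same passage, same question, different final token. That one-word difference is the only thing that changes between the two eval setups.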

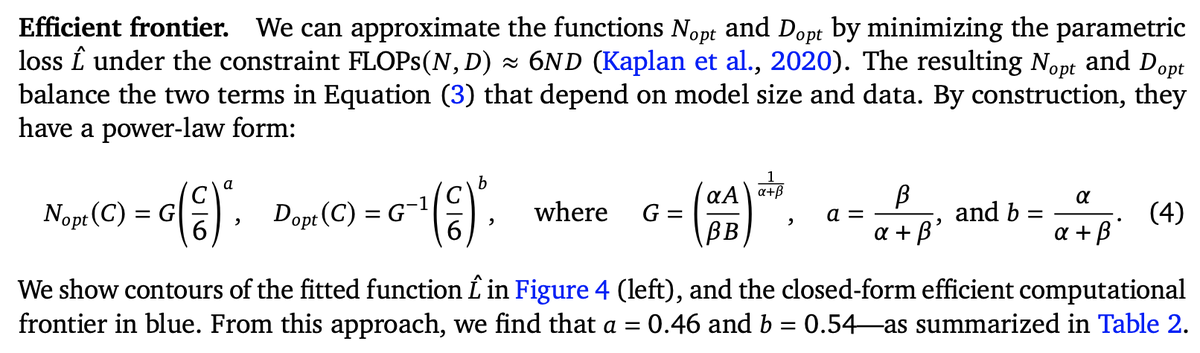

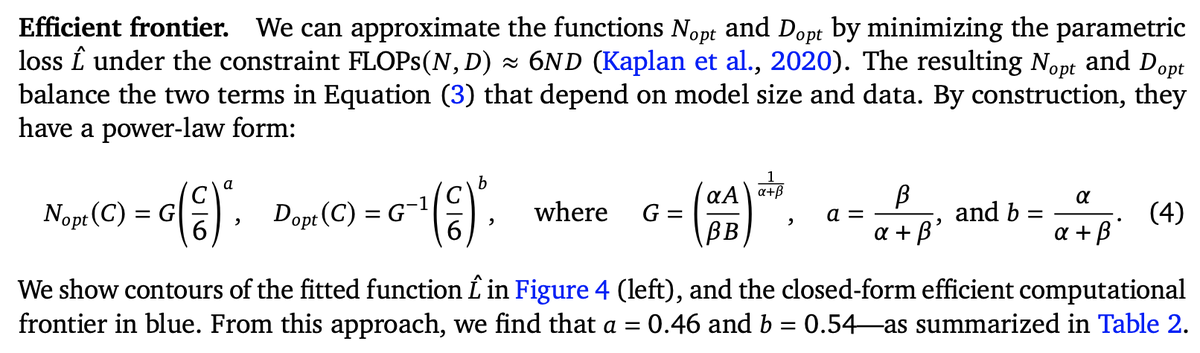

https://twitter.com/stephenroller/status/1616605686141435905

First thing to make me eye-roll a bit was this fancy equation (4) that seems to re-parameterize the key exponent terms (a,b) into (alpha,beta) to define a coefficient term G. Why this level of indirection just to define a scalar coefficient? No idea. [2/7]