fast, lightweight web agents | accessibility for amnestic LLMs | applying Glyphosate to the axe


https://twitter.com/sucralose__/status/1769530252693258306

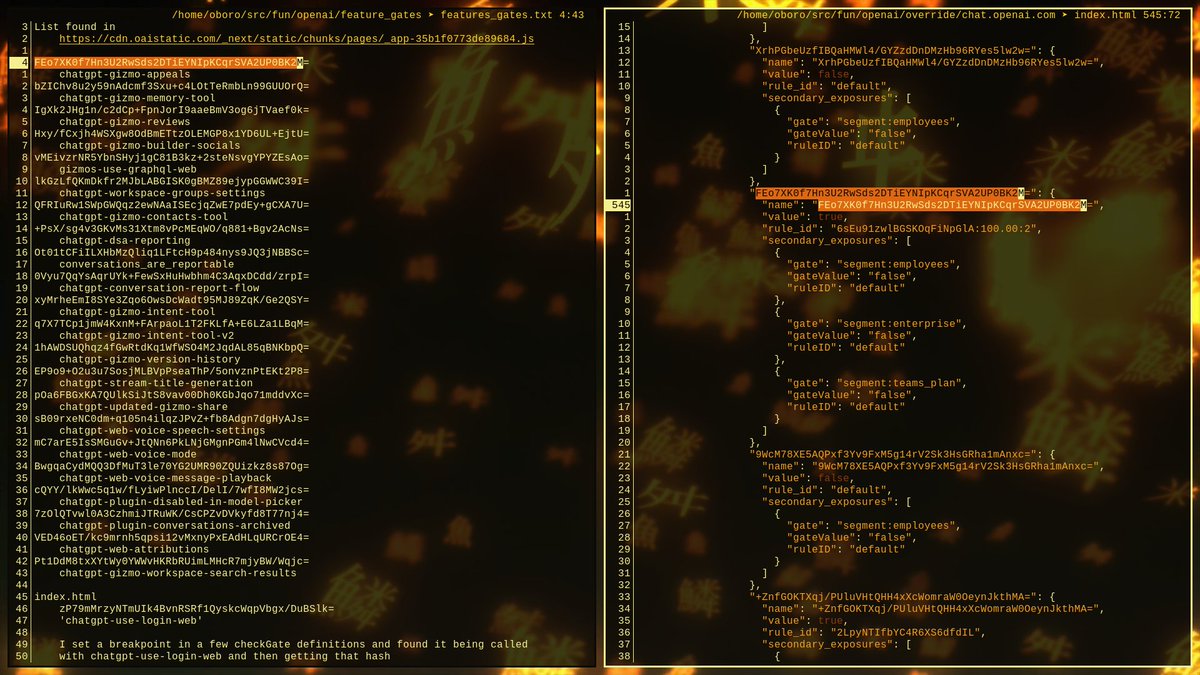

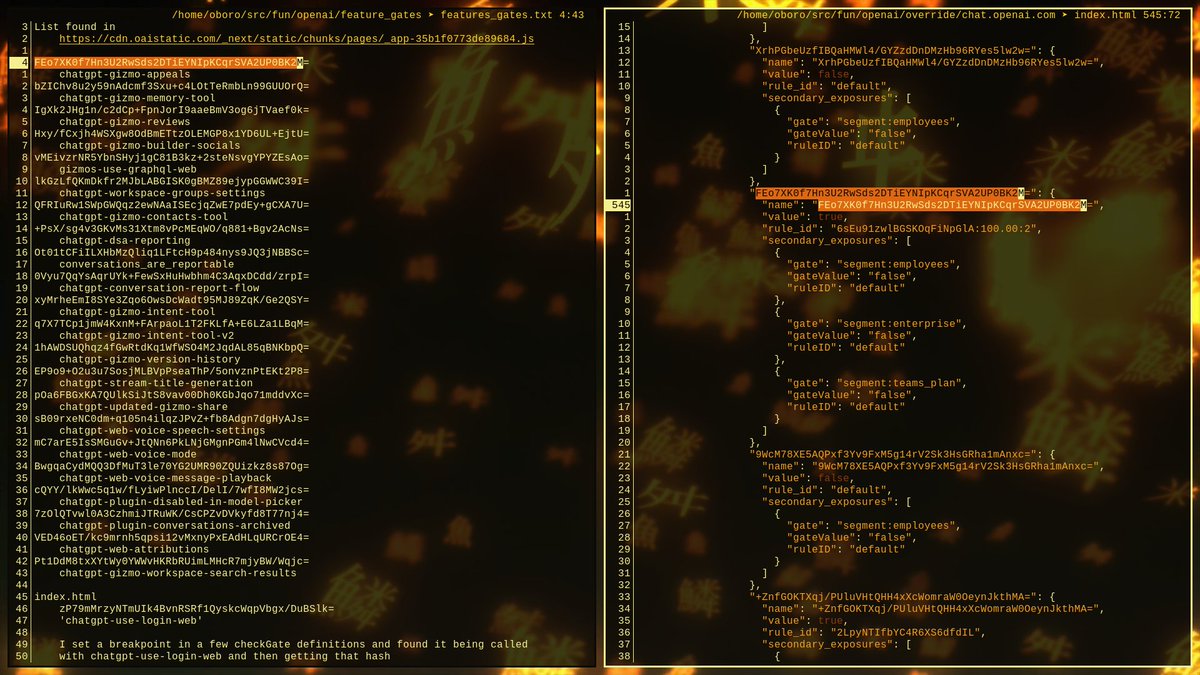

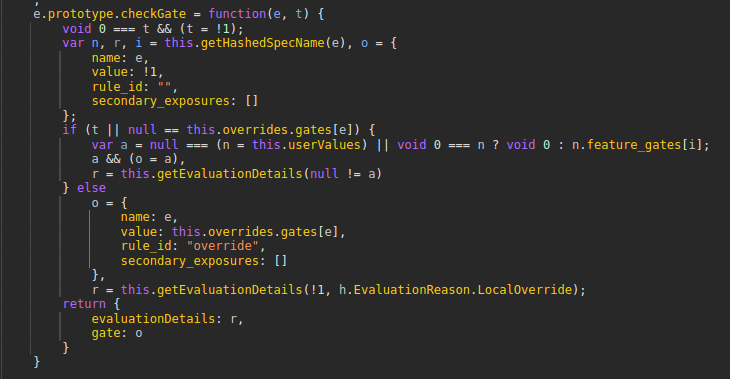

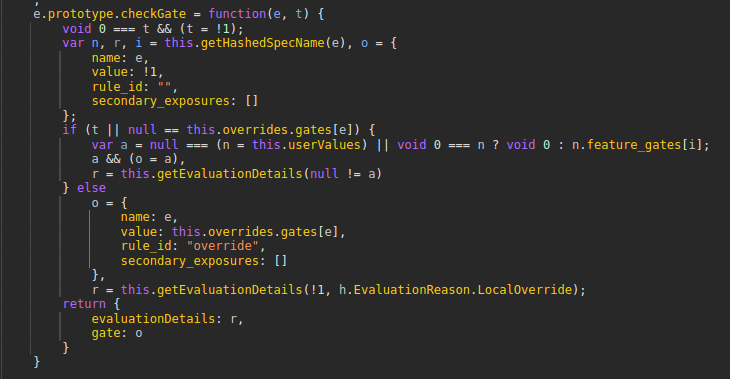

Unfortunately, it's not that simple. If you modify that __NEXT_DATA__ JSON script inside the index to switch values between true and false, there's no reaction. You can even delete the entire surrounding props.pageProps.statsig.payload with no issues.
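For anyone who wants to repeat that experiment, here is a minimal sketch of the kind of edit described. It assumes the text of the __NEXT_DATA__ script has already been extracted as a string, and that props.pageProps.statsig.payload is a nested object holding booleans; the thread does not show its real shape. `flipBooleans` and `patchNextData` are hypothetical helper names, not anything from the site's code.

```typescript
// JSON-like value type, so the recursion below is type-checked.
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

// Hypothetical helper: return a copy of `value` with every boolean inverted.
function flipBooleans(value: Json): Json {
  if (typeof value === "boolean") return !value;
  if (Array.isArray(value)) return value.map((item) => flipBooleans(item));
  if (value !== null && typeof value === "object") {
    const out: { [key: string]: Json } = {};
    for (const key of Object.keys(value)) {
      out[key] = flipBooleans(value[key]);
    }
    return out;
  }
  return value; // numbers, strings, and null pass through unchanged
}

// Hypothetical helper: take the __NEXT_DATA__ script text, flip the booleans
// under props.pageProps.statsig.payload (assumed to be an object), and return
// the new JSON text. Everything outside that subtree is left untouched.
function patchNextData(scriptText: string): string {
  const data = JSON.parse(scriptText);
  const statsig = data?.props?.pageProps?.statsig;
  if (statsig && statsig.payload !== undefined) {
    statsig.payload = flipBooleans(statsig.payload);
  }
  return JSON.stringify(data);
}
```

Writing the result back into the script tag is the easy part. As noted above, the page did not react to it, which suggests the UI is not reading its flags from this blob at render time.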


https://twitter.com/sucralose__/status/1769208380164395185

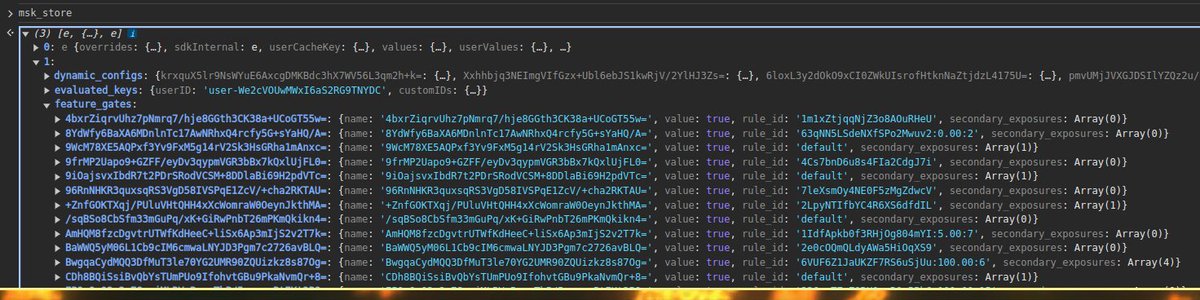

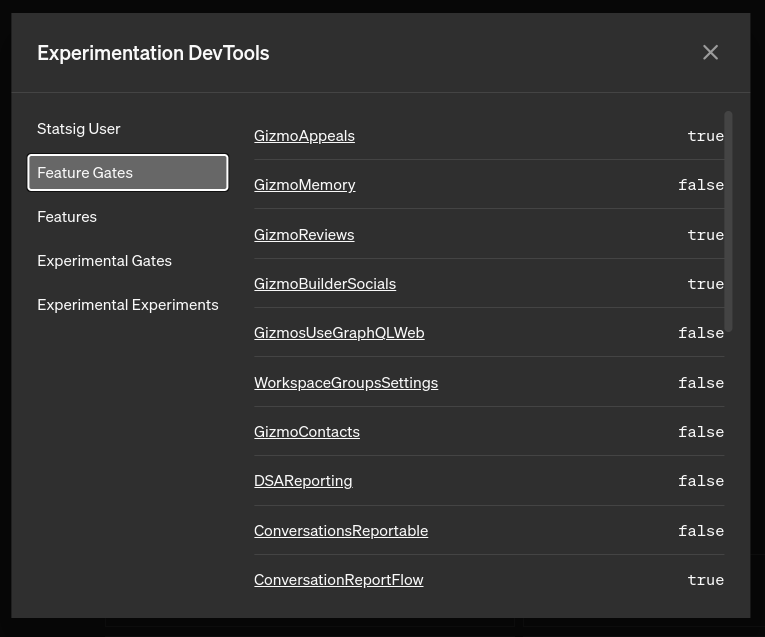

Welp, this is disappointing. Only two instances of that class were created... I captured both of them and overwrote all of the values in the feature_gates array, but alas... no UI reaction. Maybe these are decoys? I noticed a separate "is_user_in_experiment" key...
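The thread does not say how the instances were captured. One possible approach, sketched here under stated assumptions, is to wrap the class in a subclass that records every instance it constructs, then overwrite the gate values on whatever was collected. `GateStore`, `Gate`, `withCapture`, and `forceGates` are all hypothetical names, and modelling `feature_gates` as an array of entries with a boolean `value` field is a guess at the shape.

```typescript
// Hypothetical stand-ins: the real class and its fields are not shown in the thread.
interface Gate {
  name: string;
  value: boolean;
}

class GateStore {
  feature_gates: Gate[];
  constructor(gates: Gate[]) {
    this.feature_gates = gates;
  }
}

type Constructor<T = {}> = new (...args: any[]) => T;

// Wrap a class so that every instance created through the wrapper is recorded.
function withCapture<TBase extends Constructor>(Base: TBase) {
  const instances: InstanceType<TBase>[] = [];
  class Capturing extends Base {
    constructor(...args: any[]) {
      super(...args);
      instances.push(this as unknown as InstanceType<TBase>);
    }
  }
  return { Capturing, instances };
}

// Overwrite every gate on every captured instance with the same value.
function forceGates(stores: { feature_gates: Gate[] }[], value: boolean): void {
  for (const store of stores) {
    for (const gate of store.feature_gates) {
      gate.value = value;
    }
  }
}
```

In use, the wrapper would have to replace the original class before the app constructs anything, e.g. `const { Capturing, instances } = withCapture(GateStore)`, followed later by `forceGates(instances, true)`. Mutating the objects this way changes the stored values only. If the UI has already read them, or reads from somewhere else, nothing will visibly change, which matches what was observed.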
