https://twitter.com/METR_Evals/status/1902384481111322929

The idea is to define the ‘time horizon’ a human club player needs to match an AI's moves. Early AIs were easy to match with little thinking time, but against 2400 Elo engines you need much more, and matching Stockfish might take years per move!

https://x.com/tamaybes/status/1870335911264932326?s=46

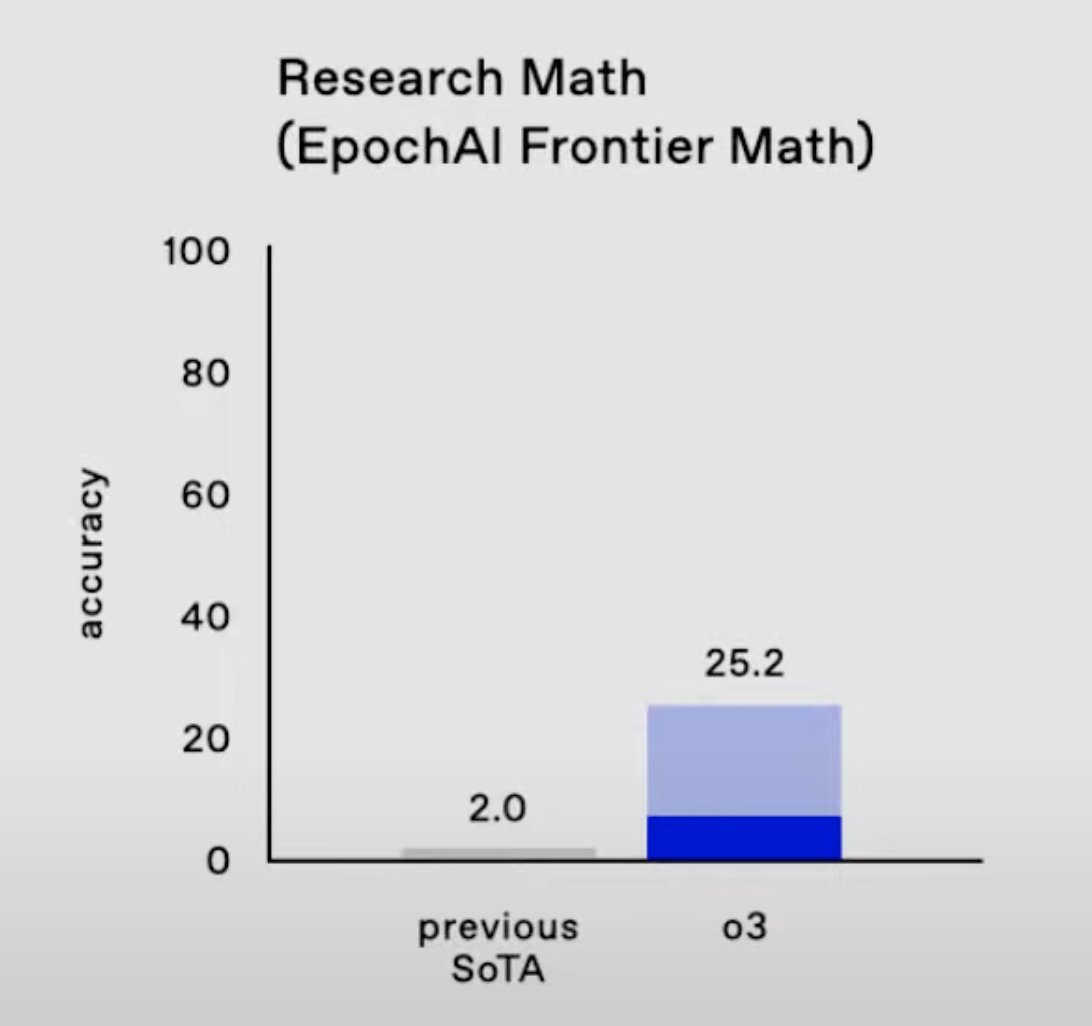

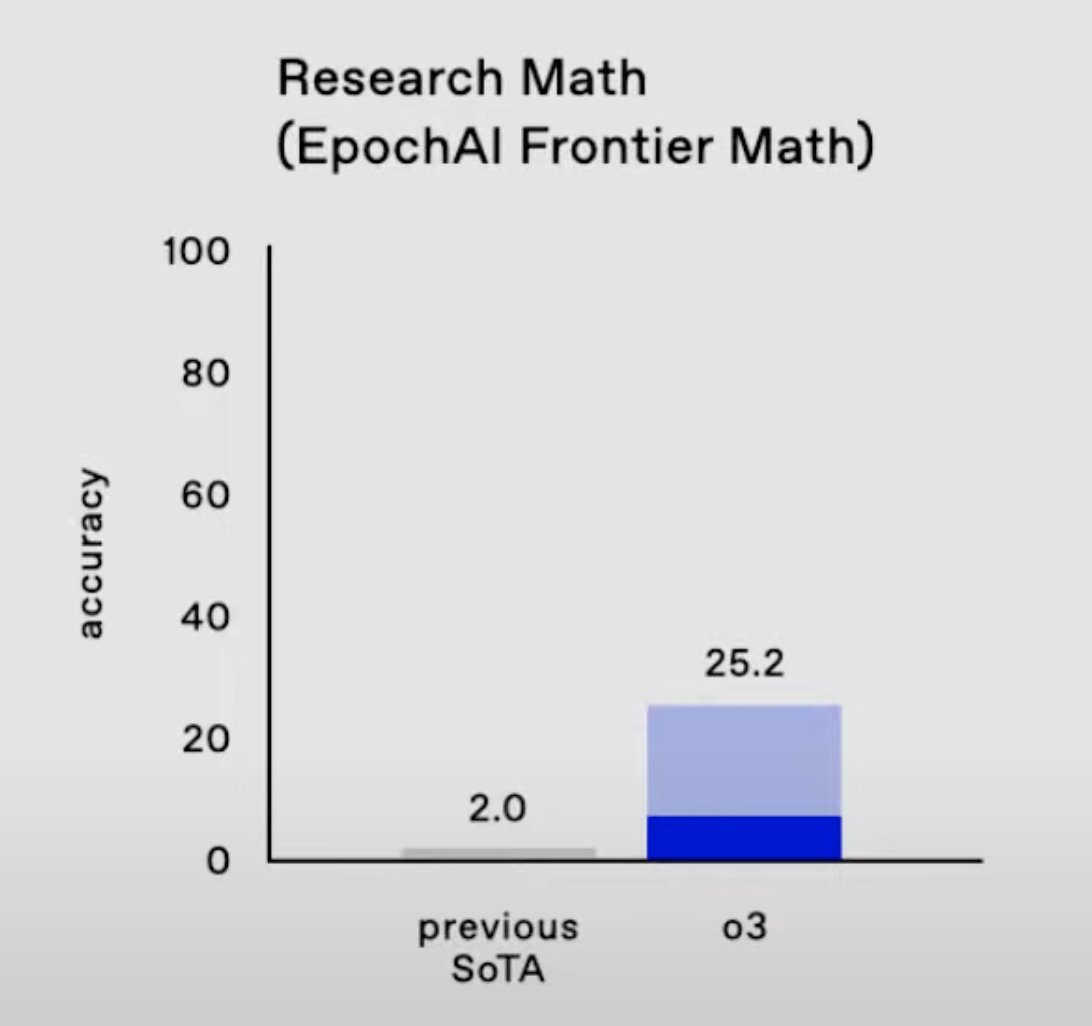

2/11 For context, FrontierMath is a brutally difficult benchmark with problems that would stump many mathematicians. The easier problems are as hard as IMO/Putnam problems; the hardest ones approach research-level complexity.

https://x.com/MatthewJBar/status/1855406568717664760

https://twitter.com/tamaybes/status/1780639257389904013

The authors responded, clarifying that this was the result of their optimizer stopping early due to a bad loss scale choice. They plan to update their results and release the data. We appreciate @borgeaud_s and others' openness in addressing this issue.

https://twitter.com/borgeaud_s/status/1780988694163321250

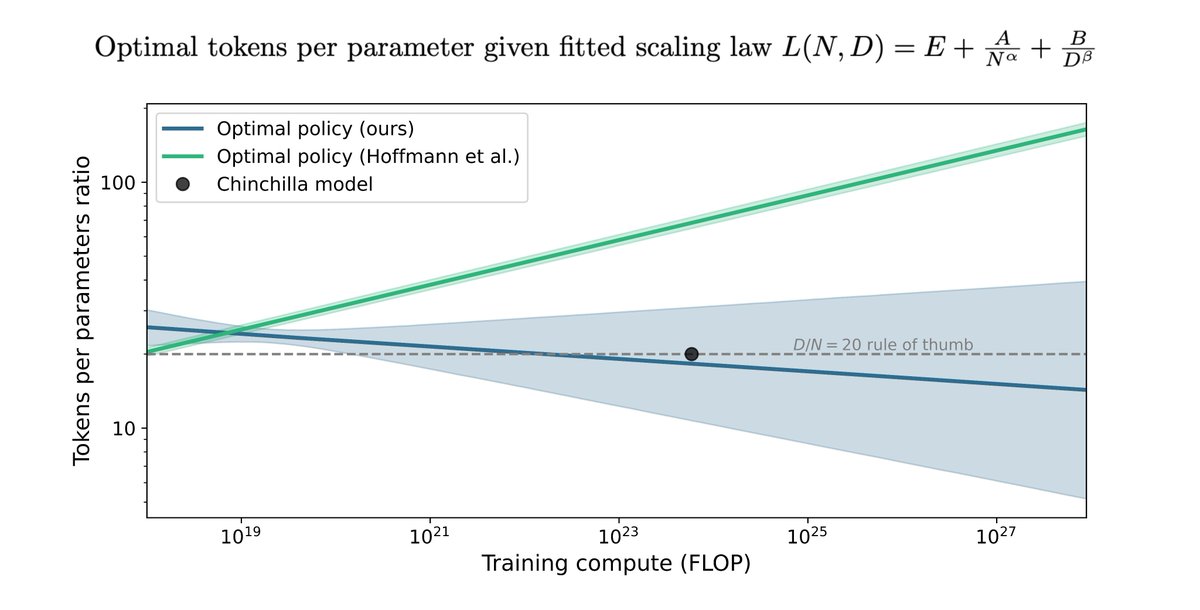

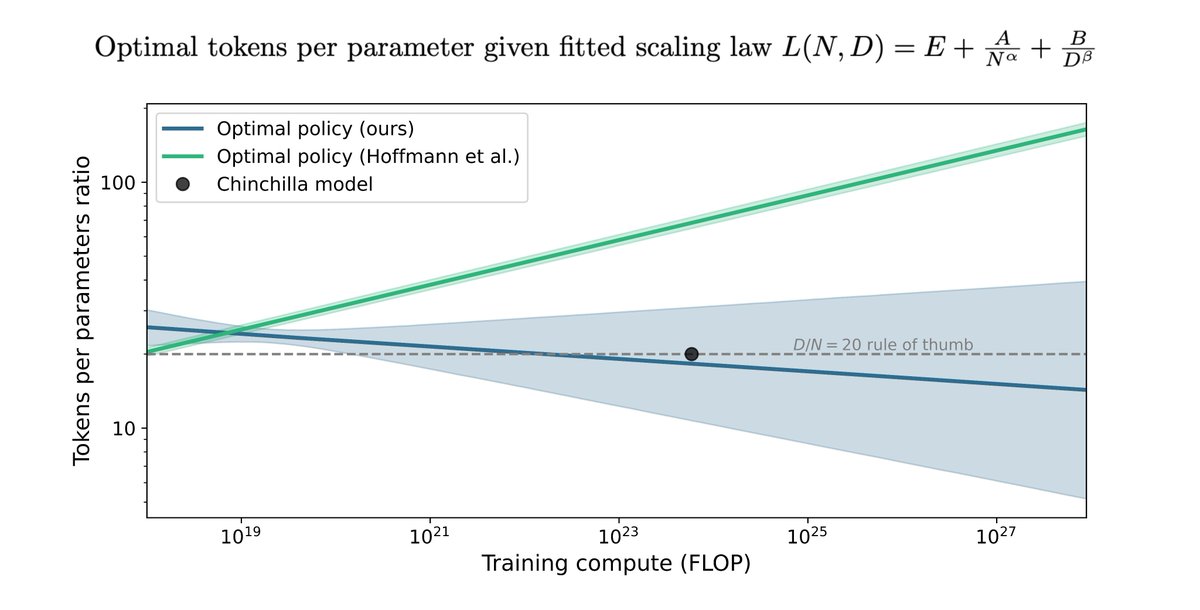

We reconstructed the data by extracting the SVG from the paper, parsing out the point locations & colors, mapping the coordinates to model size & FLOP, and mapping the colors to loss values. This let us closely approximate their original dataset from just the figure. (2/9)
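The reconstruction steps above can be sketched in Python. This is a minimal sketch under several assumptions, not the authors' actual pipeline: each data point is assumed to be an SVG `<circle>` with a 6-digit hex `fill` (plotting libraries often export markers as `<path>` elements instead), both axes are assumed to be log-scaled and calibrated from two tick positions read off the figure, and colors are mapped to loss by nearest match against a sampled color bar. All function names, the demo SVG, and the tick and color-bar values are hypothetical.

```python
import math
import xml.etree.ElementTree as ET

SVG_NS = "{http://www.w3.org/2000/svg}"


def parse_points(svg_text):
    """Return (x, y, fill) for every <circle> in an SVG string.

    Assumption: each data point is a <circle> with cx/cy and a hex fill.
    """
    root = ET.fromstring(svg_text)
    points = []
    for circle in root.iter(SVG_NS + "circle"):
        x = float(circle.get("cx"))
        y = float(circle.get("cy"))
        fill = circle.get("fill", "#000000").lower()
        points.append((x, y, fill))
    return points


def log_axis(pixel_a, value_a, pixel_b, value_b):
    """Map pixel positions to data values on a log-scaled axis.

    Calibrated from two tick marks: pixel_a shows value_a, pixel_b shows value_b.
    Also works for SVG's downward-growing y axis, since only the two ticks matter.
    """
    log_a, log_b = math.log10(value_a), math.log10(value_b)

    def to_value(pixel):
        t = (pixel - pixel_a) / (pixel_b - pixel_a)
        return 10 ** (log_a + t * (log_b - log_a))

    return to_value


def hex_to_rgb(color):
    """Convert '#rrggbb' to an (r, g, b) tuple of ints."""
    color = color.lstrip("#")
    return tuple(int(color[i:i + 2], 16) for i in (0, 2, 4))


def color_to_loss(fill, colorbar):
    """Return the loss of the color-bar entry whose color is nearest to fill."""
    r, g, b = hex_to_rgb(fill)

    def squared_distance(entry):
        cr, cg, cb = hex_to_rgb(entry[0])
        return (r - cr) ** 2 + (g - cg) ** 2 + (b - cb) ** 2

    return min(colorbar, key=squared_distance)[1]


def reconstruct(svg_text, x_axis, y_axis, colorbar):
    """Turn plotted points back into (FLOP, parameters, loss) rows."""
    return [
        {"flop": x_axis(x), "params": y_axis(y), "loss": color_to_loss(fill, colorbar)}
        for x, y, fill in parse_points(svg_text)
    ]


# Hypothetical demo: two points, two axes, and a 3-entry color bar.
demo_svg = """<svg xmlns="http://www.w3.org/2000/svg">
  <circle cx="100" cy="300" r="2" fill="#440154"/>
  <circle cx="200" cy="200" r="2" fill="#FDE725"/>
</svg>"""
x_axis = log_axis(100, 1e18, 300, 1e22)  # x: training FLOP (hypothetical ticks)
y_axis = log_axis(300, 1e8, 100, 1e10)   # y: parameters (hypothetical ticks)
colorbar = [("#440154", 3.5), ("#21918c", 2.8), ("#fde725", 2.0)]

for row in reconstruct(demo_svg, x_axis, y_axis, colorbar):
    print(row)
```

Nearest-color lookup is the simplest choice; interpolating between color-bar samples would give finer-grained loss values at the cost of sampling the color bar more densely.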

This rate of algorithmic progress is much faster than the two-year doubling time of Moore's Law for hardware improvements, and faster than in other software domains, like SAT solvers, linear programming, etc. (2/10)
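To make a two-year doubling time concrete, it helps to convert doubling times into per-year or per-decade growth multipliers. This is a minimal sketch; the function names are hypothetical, and because this excerpt does not state the measured doubling time for algorithmic progress, only Moore's Law's two-year figure is plugged in. Any shorter doubling time yields a per-year factor above the result shown here.

```python
def annual_factor(doubling_time_years: float) -> float:
    """Growth multiplier per year implied by a doubling time (in years)."""
    return 2 ** (1 / doubling_time_years)


def growth_over(years: float, doubling_time_years: float) -> float:
    """Total growth multiplier after `years` at a fixed doubling time."""
    return 2 ** (years / doubling_time_years)


moore_per_year = annual_factor(2.0)  # Moore's Law: two-year doubling
moore_decade = growth_over(10, 2.0)  # five doublings in ten years
print(f"Moore's Law: {moore_per_year:.3f}x per year, {moore_decade:.0f}x per decade")
# prints "Moore's Law: 1.414x per year, 32x per decade"
```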

1840s–70s: Key innovations occur in Manufacturing (the pneumatic process for cheap steel and the sewing machine are invented); Transport (steam engines are improved; the Bollman bridge, air brake system, and cable car are patented); Consumer Goods (board game, toothbrush, picture machine).
