We're in a race. It's not USA vs China but humans and AGIs vs ape power centralization.

@deepseek_ai stan #1, 2023–Deep Time

«C’est la guerre.» ®1


https://x.com/teortaxesTex/status/2012034192796942496?s=20

https://x.com/teortaxesTex/status/2006614794170937646

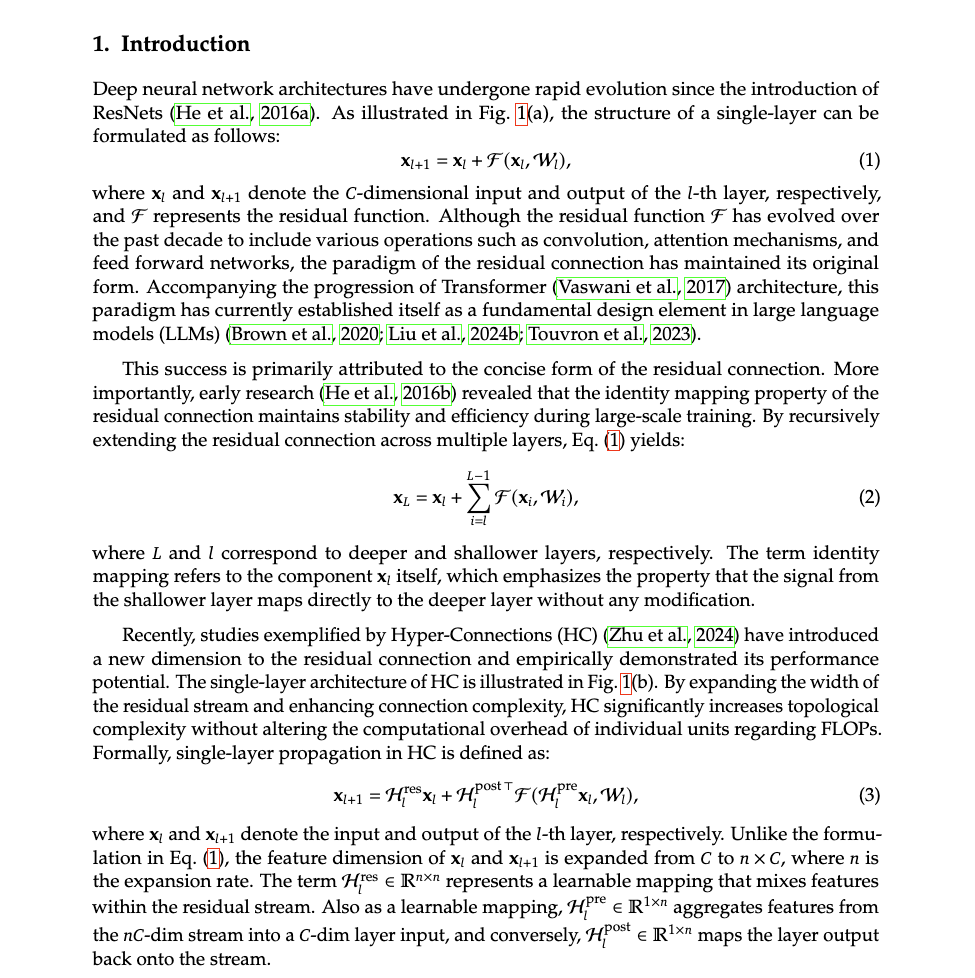

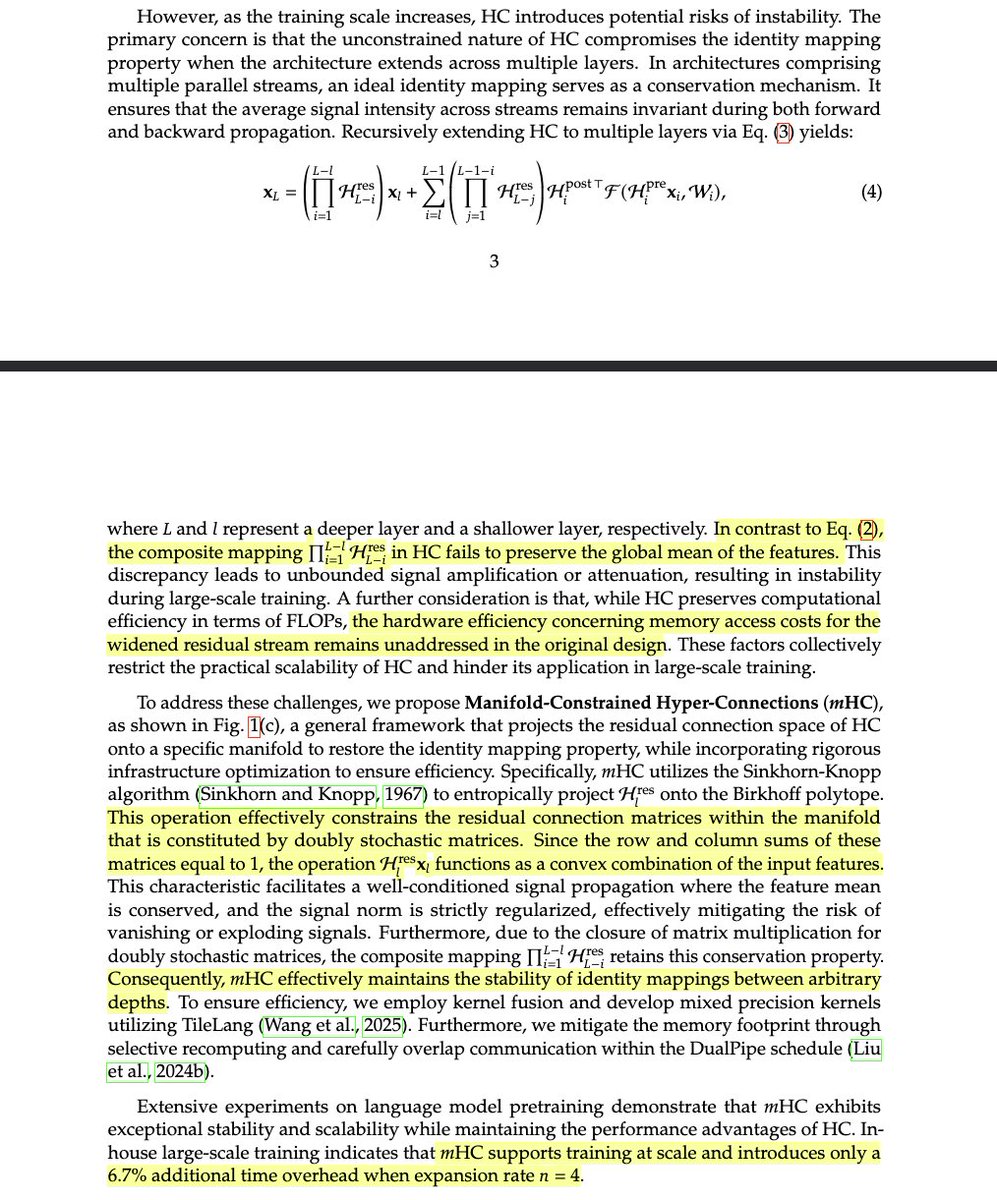

They turn hyper-connections from academic curiosity into a basic design motif. This is what I've long expected from them – both mathematical insight and hardware optimization. ResNets for top-tier LLMs might be toast.

https://x.com/teortaxesTex/status/1950334645851095297?s=20

https://twitter.com/BobgonzaleBob/status/1996832802113831071

A little bit schizophrenic but ok. Baby steps.


https://twitter.com/teortaxesTex/status/1987431448895169005

EVERYONE KNEW THIS WILL HAPPEN


https://twitter.com/dhtikna/status/1972919990845325577

But they are consistent in that they share milestones in their internal exploration. So what is this milestone? I think they are doing fundamental research on attention. Perhaps they've concluded that "native sparse" is too crude; that you need to train attentionS in stages.


I don't care about moralizing or finger pointing. You simply cannot make a case for having a kid that sounds compelling to a normal zoomer woman. The world in the context of which these arguments made sense is outside their consensus reality. They were born to live as teenagers.


https://twitter.com/Yulun_Du/status/1944582056349995111

That's it. Just multi-objective optimization. In K2's own words, “Because the feasible set is tiny (DSv3 topology + cost caps), the search collapses to tuning a handful of residual parameters under tight resource budgets”.


https://twitter.com/TheEmissaryCo/status/1929274039228395671

Opposition to casteism is, accordingly, the most noble of national Indian projects. It comes with its own problems now, but there really is no way around it. The system has to be razed to the ground.

https://twitter.com/vnovak_404/status/1922230057264623871

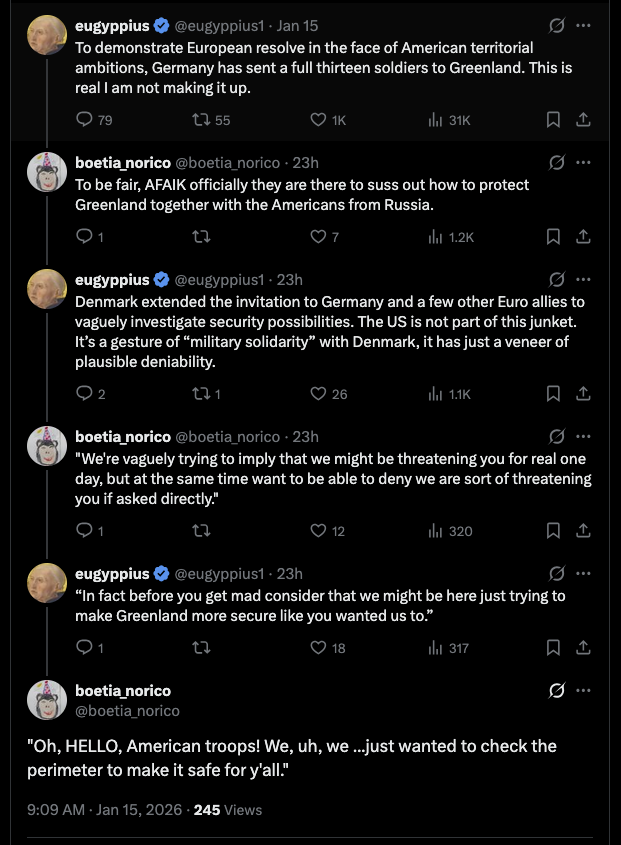

It's all right to refuse to trust this. That's the spirit. But I have to say, I'd like the United States Department of State to make a similar commitment. I do not want to kowtow to anybody. And I don't want a Planetary Kang who'll tell my leader to kiss his ass.


«the company had just formed a new project team responsible for research and preparation related to cluster construction, so I went to "build a server room." Without lengthy prior training, at High-Flyer, I was immediately given tremendous autonomy.»


R1 analyzes its common motifs:


xi my son. yuo are chairman now. you must choose tech tree to invest in and have greatness


https://twitter.com/yacineMTB/status/1817338871010476075

Burning my cred here

https://twitter.com/chrmanning/status/1797664513367630101

Re: Mustafa

https://x.com/teortaxesTex/status/1805118524433567997

https://twitter.com/arankomatsuzaki/status/1785486711646040440

"Multi-token Prediction" is an important work afaict


Such a beautiful model.


Scott Alexander is not a true doomer, but his thinking about AI risk is informed by the LW doctrine, which is all about maximizing (misaligned) utility & emergent coherence (eg lesswrong.com/posts/RQpNHSiW…). First principles arguments for it seem weakened now: forum.effectivealtruism.org/posts/NBgpPaz5…


https://twitter.com/JagersbergKnut/status/1706309414976700423

Not sure if many have read to this point: they also claim their (not released) code and math finetunes are SOTAs for <34B
