How to get URL link on X (Twitter) App

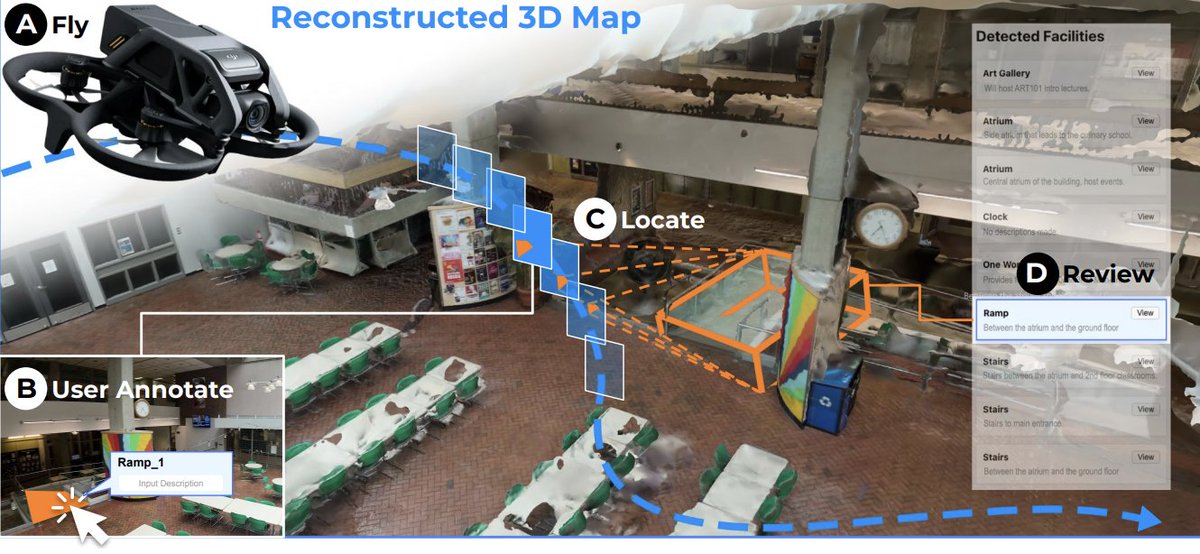

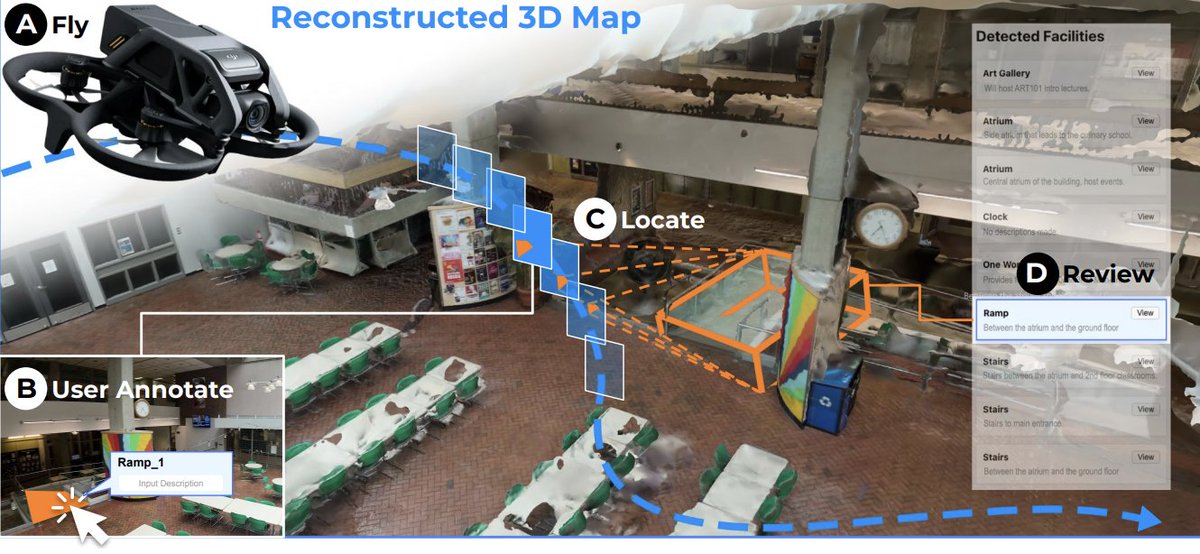

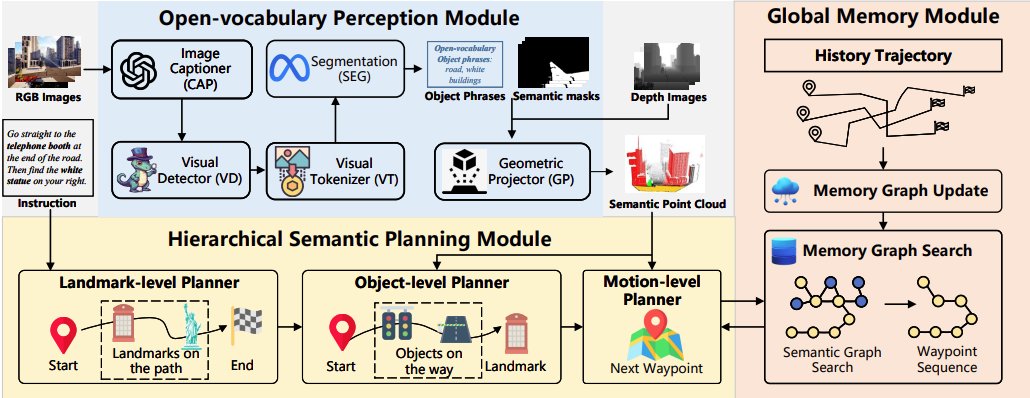

< The Problem: Indoor Maps are Missing >

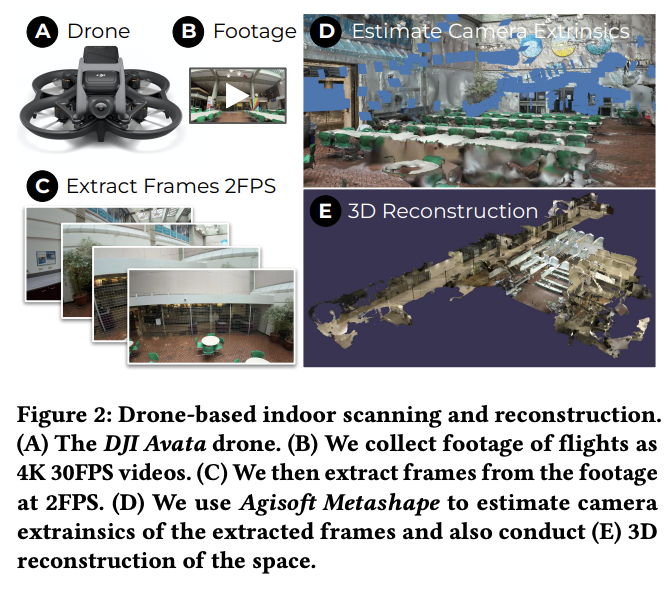

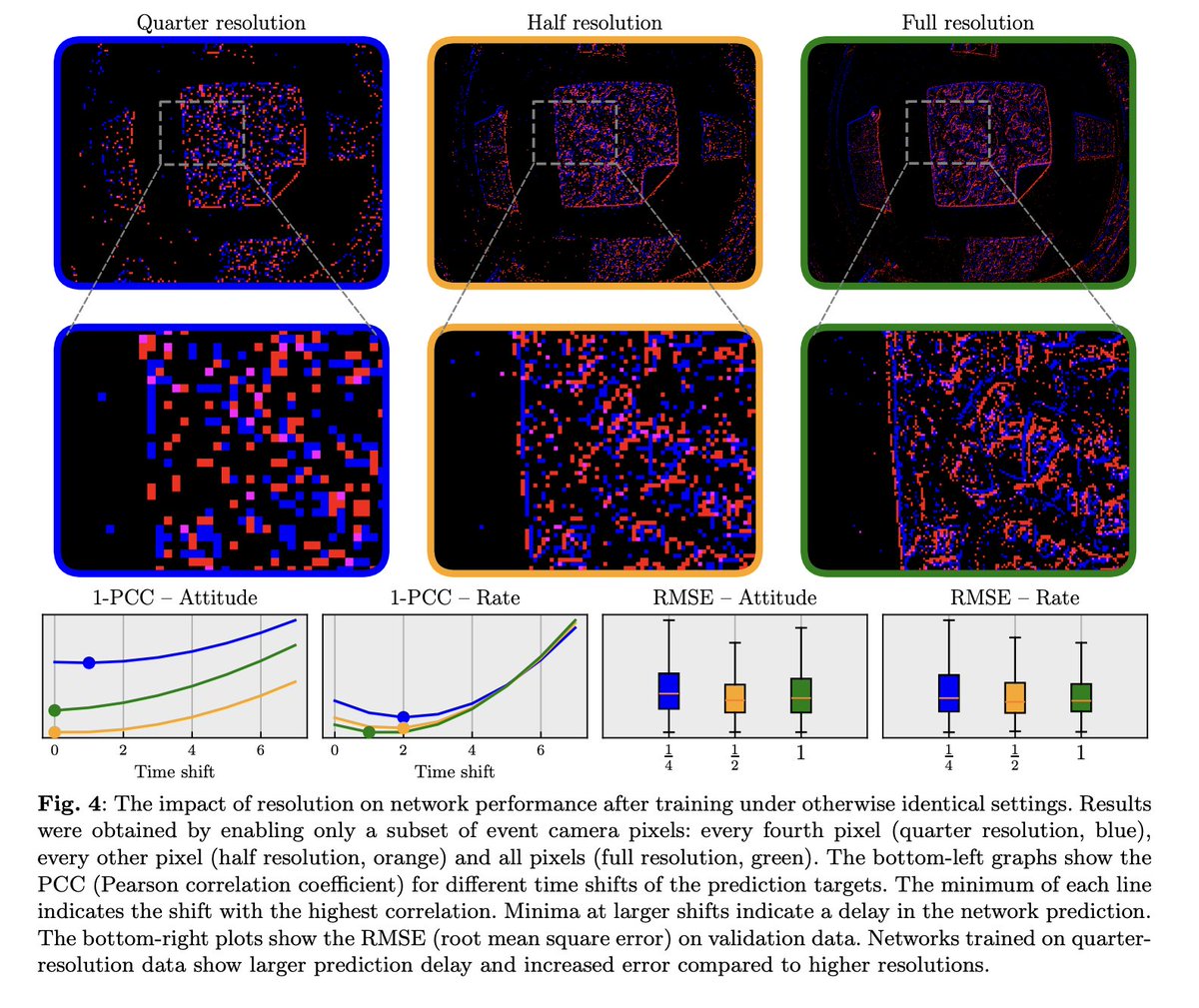

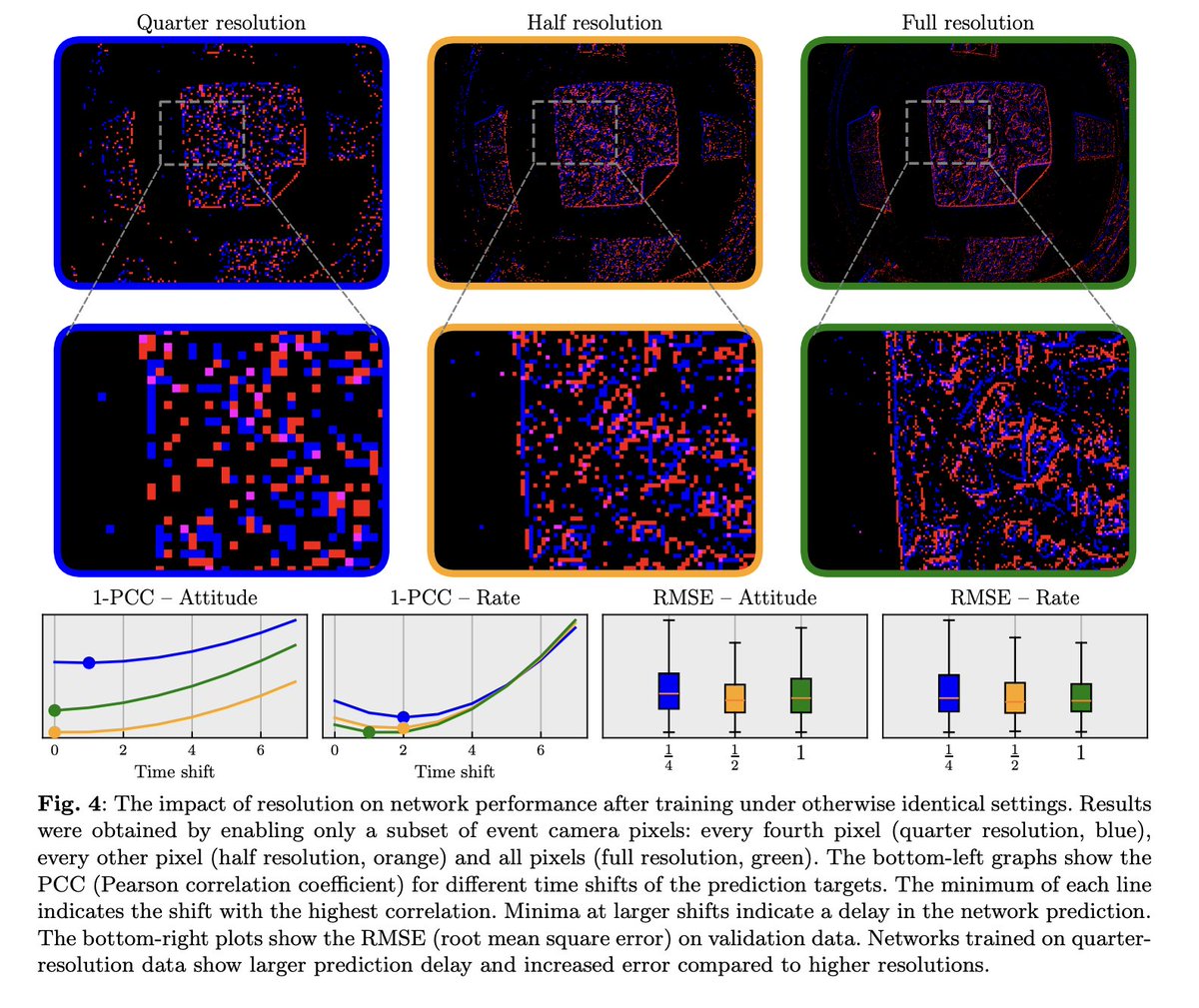

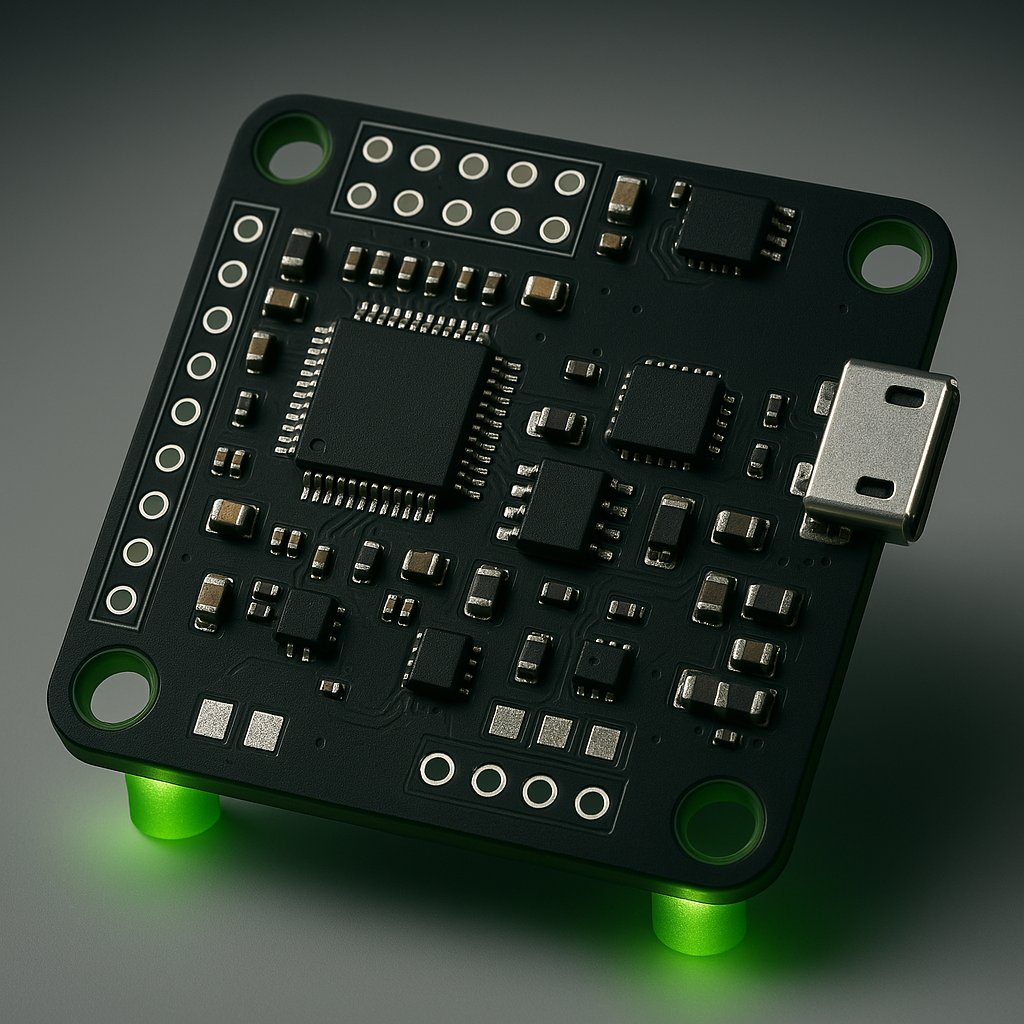

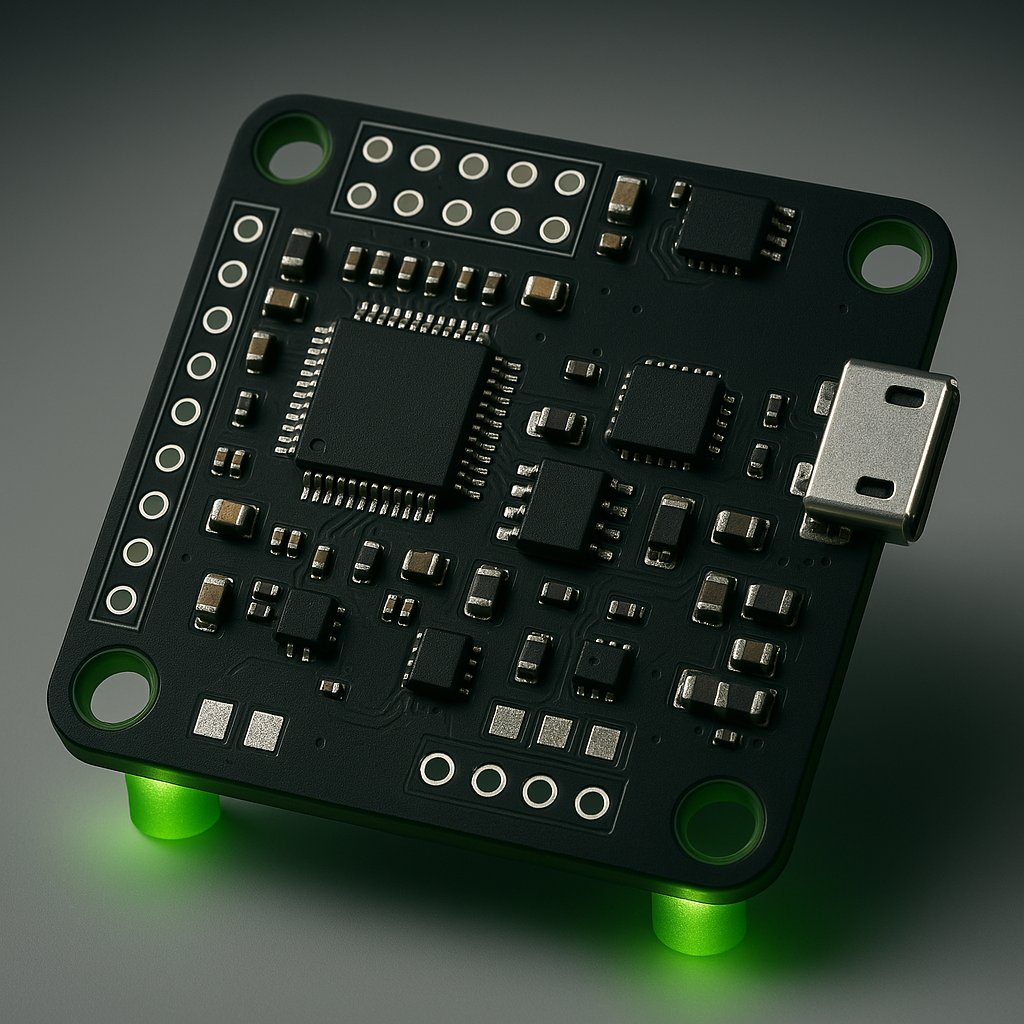

< How It Was Trained >

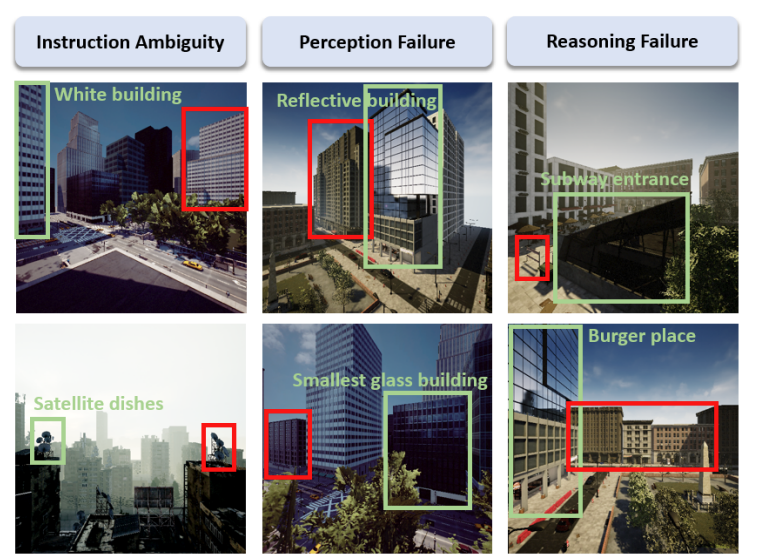

< Problem >

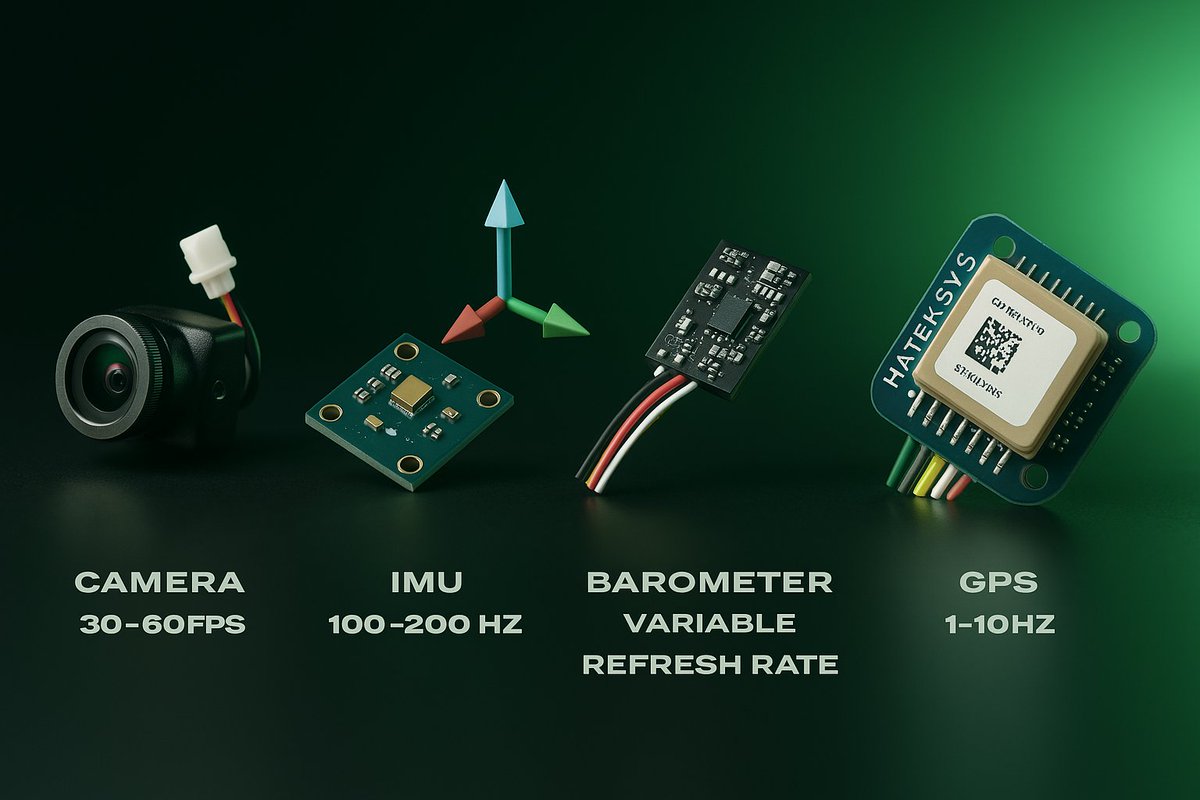

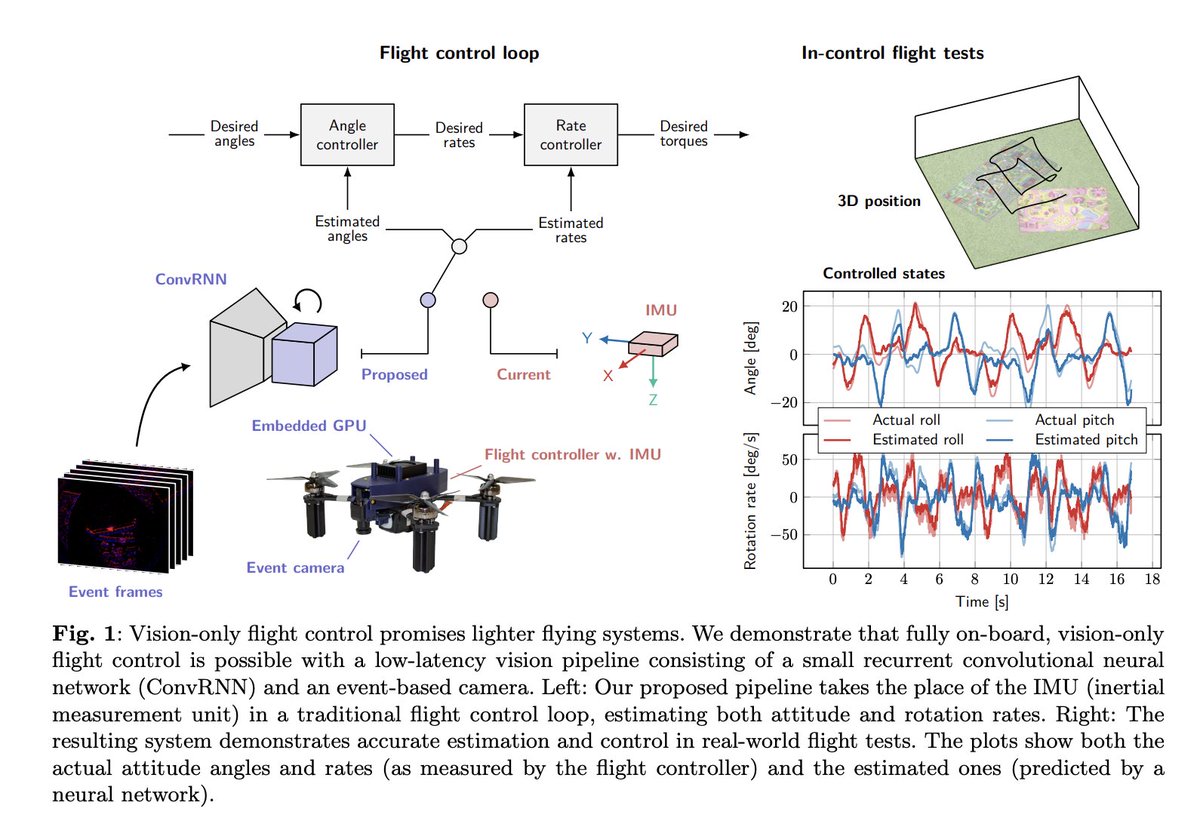

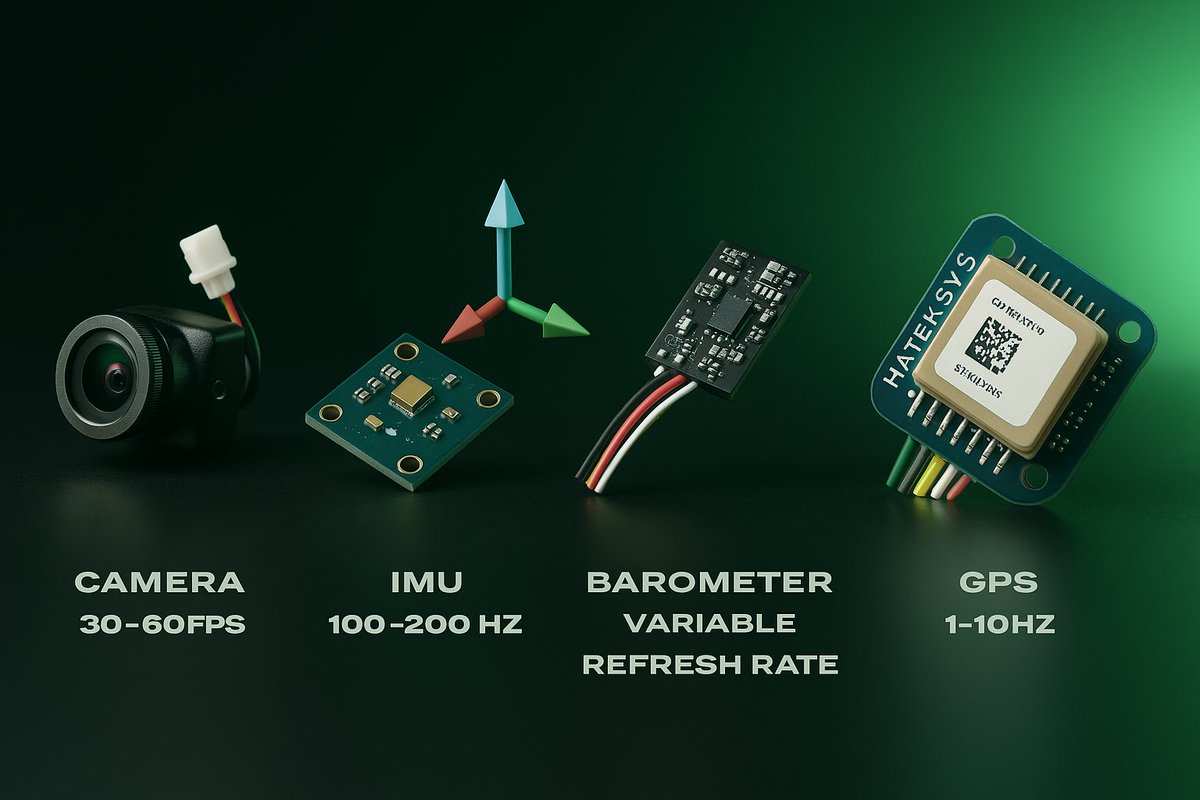

The drone processes a ton of raw data in real time.

The Sensor Suite
