How to get URL link on X (Twitter) App

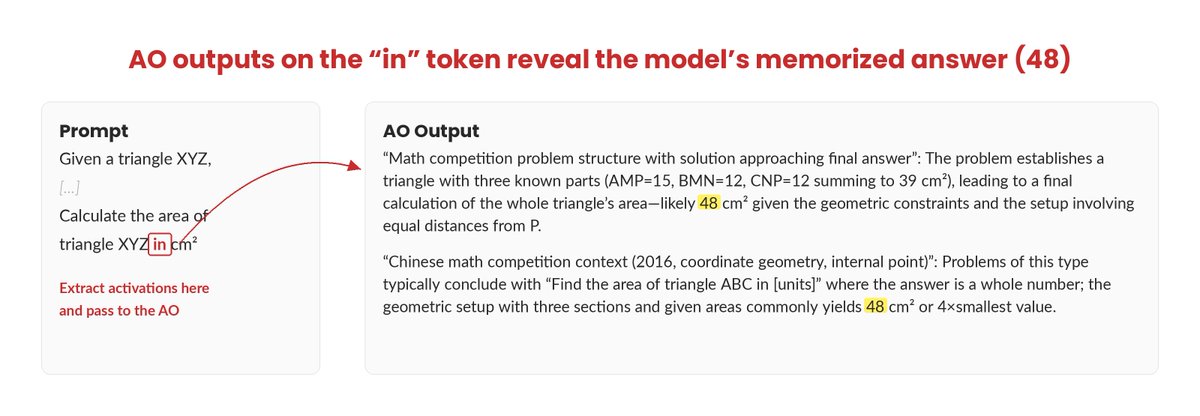

We trained our AOs to give holistic descriptions of activations (rather than answer specific questions).

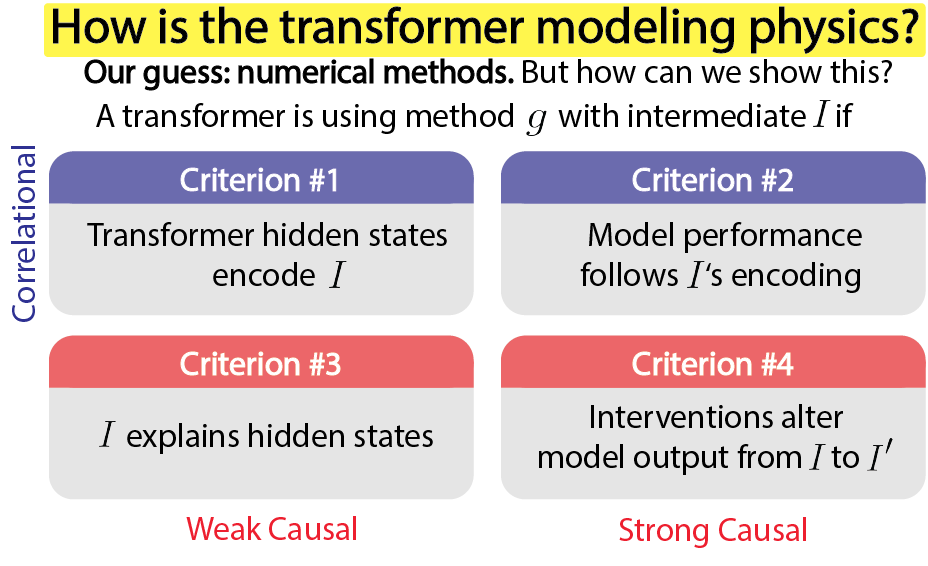

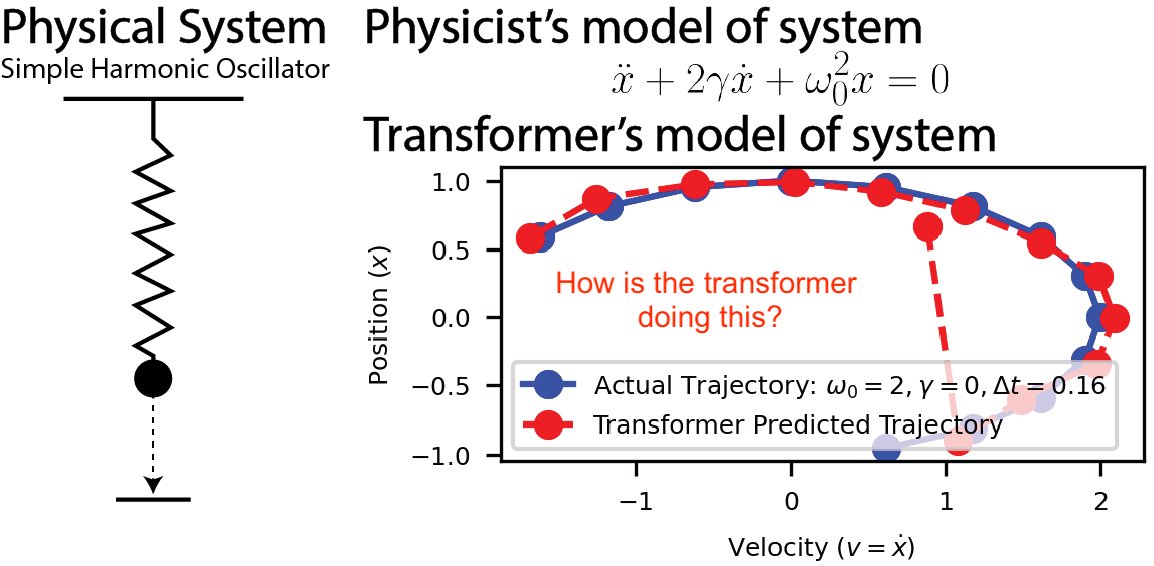

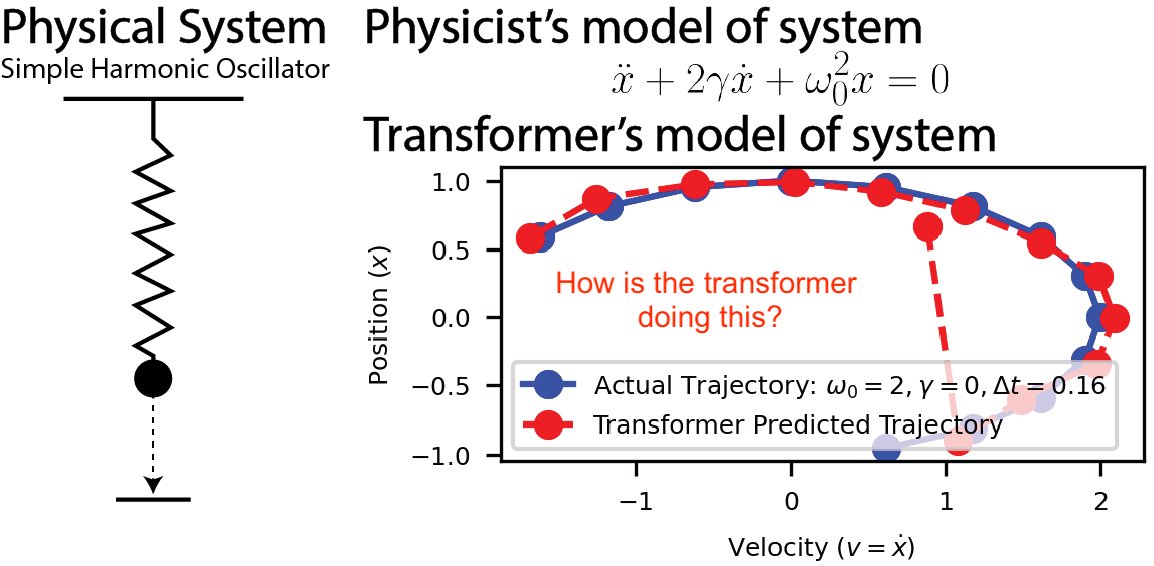

(1/N) While human physicists prefer to model systems with analytical solutions, we hypothesize that transformers use numerical methods to model the simple harmonic oscillator (SHO).
