Senior Researcher at Oxford University.

Author — The Precipice: Existential Risk and the Future of Humanity.

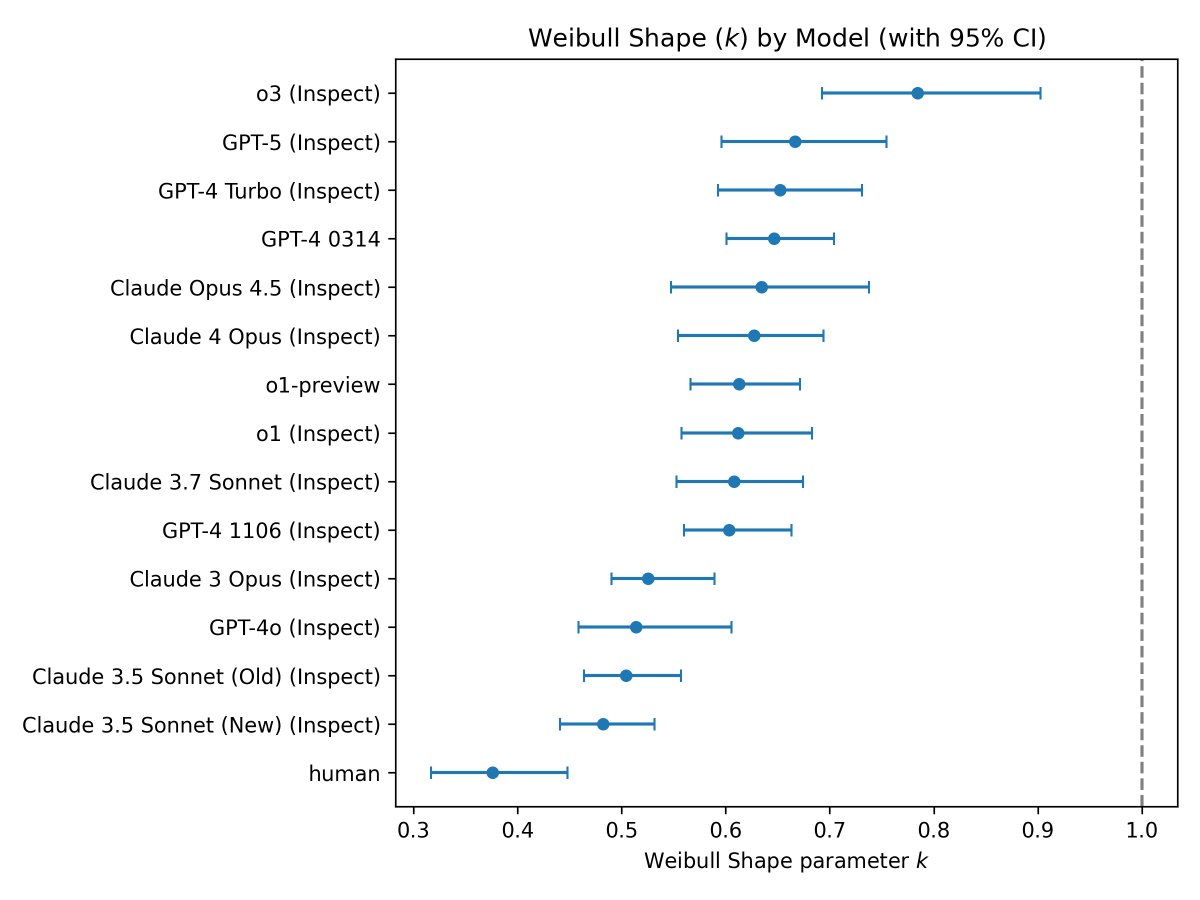

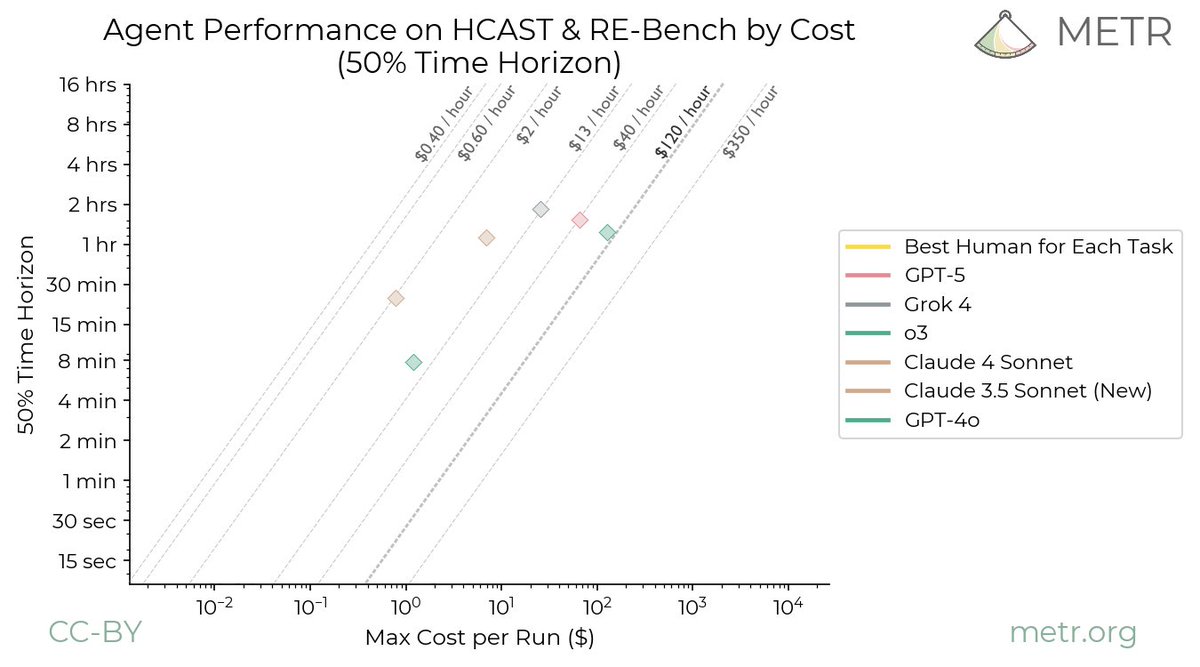

https://x.com/gushamilton/status/2018302284484968940

@gushamilton This means that their success rates on tasks beyond their 50%-horizon are better than the simple model suggests, but those for tasks shorter than the 50% horizon are worse than it suggests.
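The tweet does not say which "simple model" it means. This minimal sketch assumes it is the constant-hazard model, in which the chance of success halves with each additional 50%-horizon of task length. It represents a flatter success curve with a Weibull survival function whose shape parameter k is below 1. Both curves are calibrated to give exactly 50% at the horizon. The function names, the 60-minute horizon and k = 0.6 are hypothetical illustration values, not fitted to any data.

```python
def constant_hazard_success(t: float, t50: float) -> float:
    """Assumed 'simple model': constant hazard rate (exponential survival),
    calibrated so success is exactly 50% at the 50%-horizon t50."""
    return 0.5 ** (t / t50)


def weibull_success(t: float, t50: float, k: float) -> float:
    """Hypothetical alternative: Weibull survival calibrated to 50% at t50.
    k < 1 means the hazard rate falls as tasks get longer."""
    return 0.5 ** ((t / t50) ** k)


T50 = 60.0  # hypothetical 50%-horizon, in minutes
K = 0.6     # hypothetical Weibull shape parameter (< 1)

for t in (15.0, 60.0, 240.0):
    simple = constant_hazard_success(t, T50)
    flatter = weibull_success(t, T50, K)
    print(f"t={t:>5.0f} min  constant-hazard={simple:.3f}  weibull(k={K})={flatter:.3f}")
```

With k < 1, the Weibull curve sits below the constant-hazard curve for tasks shorter than the horizon and above it for longer tasks. That is the pattern the tweet describes.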

In my view, the key question is:

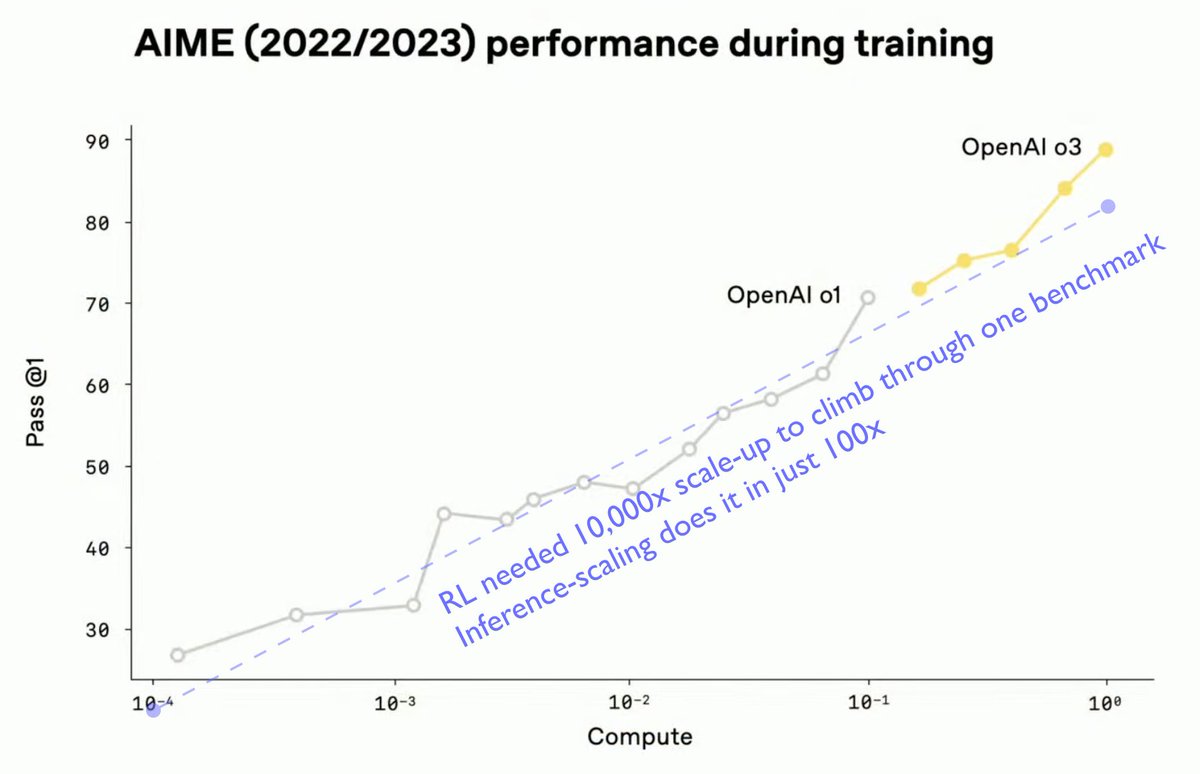

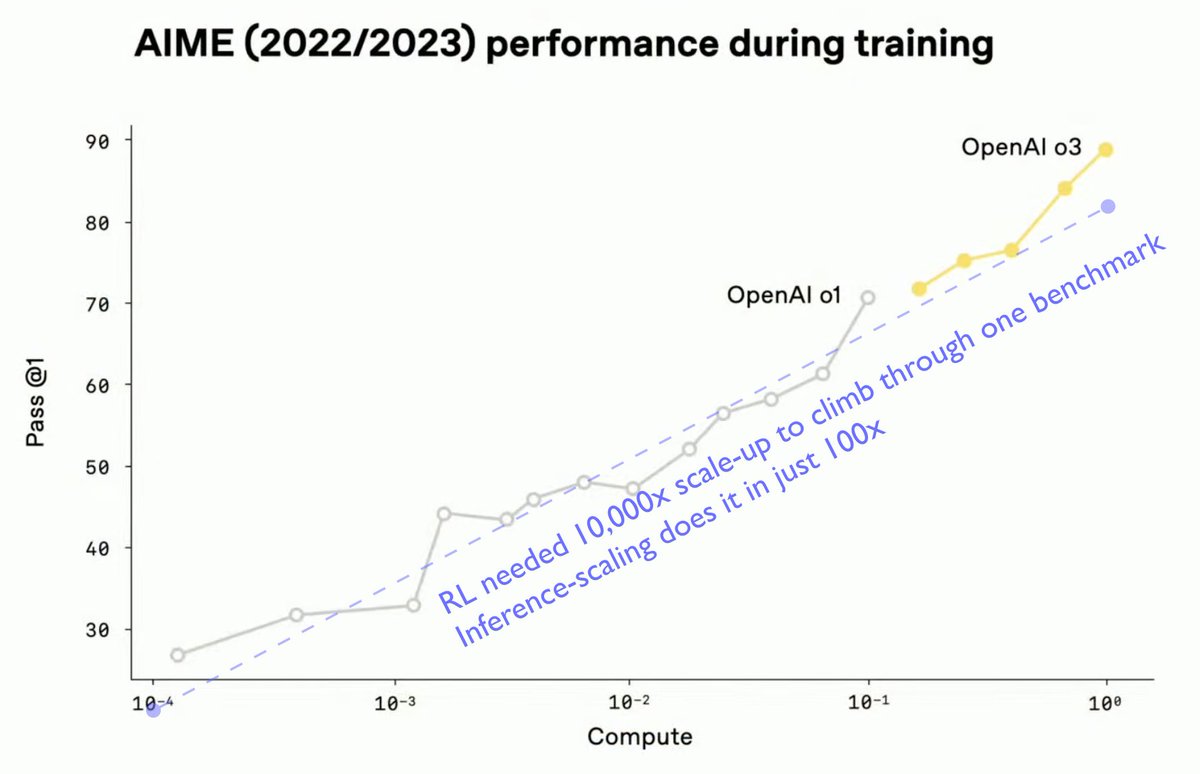

But now RL has grown to nearly the size of pretraining, and scale-ups beyond this reveal its inefficiency. I estimate it would take a 1,000,000x scale-up from its current level to add the equivalent of a 1,000x scale-up of inference or a 100x scale-up of pretraining.
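The estimate can be read as an exchange rate between orders of magnitude (OOM): 6 OOM of RL ≈ 3 OOM of inference ≈ 2 OOM of pretraining. This sketch extrapolates by assuming the ratio stays constant on a log scale, which goes beyond the single estimate given. The helper `rl_scaleup_needed` is hypothetical.

```python
import math

# Orders of magnitude implied by the estimate:
# 1,000,000x RL ~ 1,000x inference ~ 100x pretraining.
RL_OOM = 6.0
INFERENCE_OOM = 3.0
PRETRAINING_OOM = 2.0


def rl_scaleup_needed(equivalent_scaleup: float, reference_oom: float) -> float:
    """Hypothetical helper: RL scale-up matching a given scale-up of another input,
    assuming a constant exchange rate in orders of magnitude (log-linear assumption)."""
    rl_oom_per_reference_oom = RL_OOM / reference_oom
    return 10 ** (rl_oom_per_reference_oom * math.log10(equivalent_scaleup))


print(f"{rl_scaleup_needed(10, PRETRAINING_OOM):,.0f}x RL ~ 10x pretraining")
print(f"{rl_scaleup_needed(10, INFERENCE_OOM):,.0f}x RL ~ 10x inference")
```

Under this assumption, each OOM of pretraining is worth about 3 OOM of RL and each OOM of inference about 2 OOM of RL. So a 10x pretraining scale-up would correspond to roughly a 1,000x RL scale-up, and a 10x inference scale-up to roughly 100x.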

Scaling up AI using next-token prediction was the most important trend in modern AI. It stalled out over the last couple of years and has been replaced by RL scaling.

https://twitter.com/balesni/status/1945151391674057154

While @balesni's thread asks developers to:

@arcprize 1. The released version of o3 is much less capable than the preview version that was released to a lot of fanfare 4 months ago, though also much cheaper. People who buy access to it are not getting the general reasoning performance @OpenAI was boasting about in December.

https://twitter.com/tobyordoxford/status/1881661919133859841

Here is the revised ARC-AGI plot. They've increased their cost estimate for the original o3 low from $20 per task to $200 per task. Presumably o3 high has gone from $3,000 to $30,000 per task, which is why it breaks their $10,000-per-task limit and is no longer included.
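The "presumably" step is a proportional rescaling. This minimal sketch assumes the same revision factor applies to o3 high as to o3 low. The variable names are illustrative.

```python
# Figures from the text (USD per task).
OLD_LOW, NEW_LOW = 20, 200   # o3 low: original vs revised cost estimate
OLD_HIGH = 3_000             # original estimate for o3 high
LIMIT = 10_000               # per-task cost limit for inclusion on the plot

factor = NEW_LOW / OLD_LOW   # size of the revision for o3 low
new_high = OLD_HIGH * factor # assumption: o3 high revised by the same factor

print(f"revision factor: {factor:.0f}x, implied o3 high: ${new_high:,.0f} per task")
print("exceeds limit" if new_high > LIMIT else "within limit")
```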

They knew this was illegal and were worried about being arrested if they did it:

You could think of it as a change in strategy: from improving the quality of your employees’ work by giving them more years of training in which to acquire skills, concepts and intuitions, to improving it by giving them more time to complete each task.

The idea is quite simple, drawing on a classic argument from computer science.

I call this a ‘record diagram’.

The latest of these, JADES-GS-z14-0, was discovered at the end of May this year. It is located 34 billion light years away — almost three quarters of the way to the edge of the observable universe.
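This is a rough check of "almost three quarters". It assumes the 34 billion light years is a comoving distance. It compares that against the commonly quoted comoving radius of the observable universe, about 46.5 billion light years, a figure not given in the text.

```python
# Assumption (not from the text): comoving radius of the observable universe, in Gly.
OBSERVABLE_RADIUS_GLY = 46.5
# Distance to JADES-GS-z14-0 as stated in the text, in Gly.
DISTANCE_GLY = 34.0

fraction = DISTANCE_GLY / OBSERVABLE_RADIUS_GLY
print(f"{fraction:.1%} of the way to the edge of the observable universe")
```

The ratio comes out a little under 75%, consistent with the claim.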

https://twitter.com/camrobjones/status/1790766472458903926

First, let's be clear on a few things about the Turing Test.

Some more:
