Accelerate training, fine-tuning, and inference on performance-optimized GPU clusters.

Training Technique

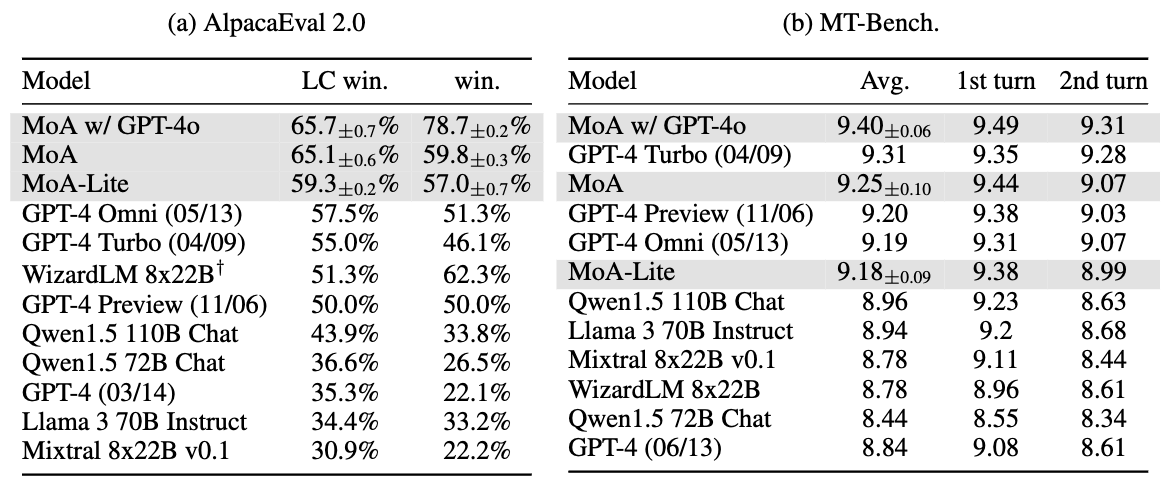

Together MoA exhibits promising performance on AlpacaEval 2.0 and MT-Bench.

This release includes StripedHyena-Hessian-7B (SH 7B), a base model, and StripedHyena-Nous-7B (SH-N 7B), a chat model. Both use a hybrid architecture based on our latest research on scaling laws of efficient architectures.

Training ran on 3,072 V100 GPUs provided as part of the INCITE 2023 project on Scalable Foundation Models for Transferrable Generalist AI, awarded to MILA, LAION, and EleutherAI in fall 2022, with support from the Oak Ridge Leadership Computing Facility (OLCF) and INCITE program.

In the coming weeks, we will release a full suite of large language models and instruction-tuned versions based on this dataset.