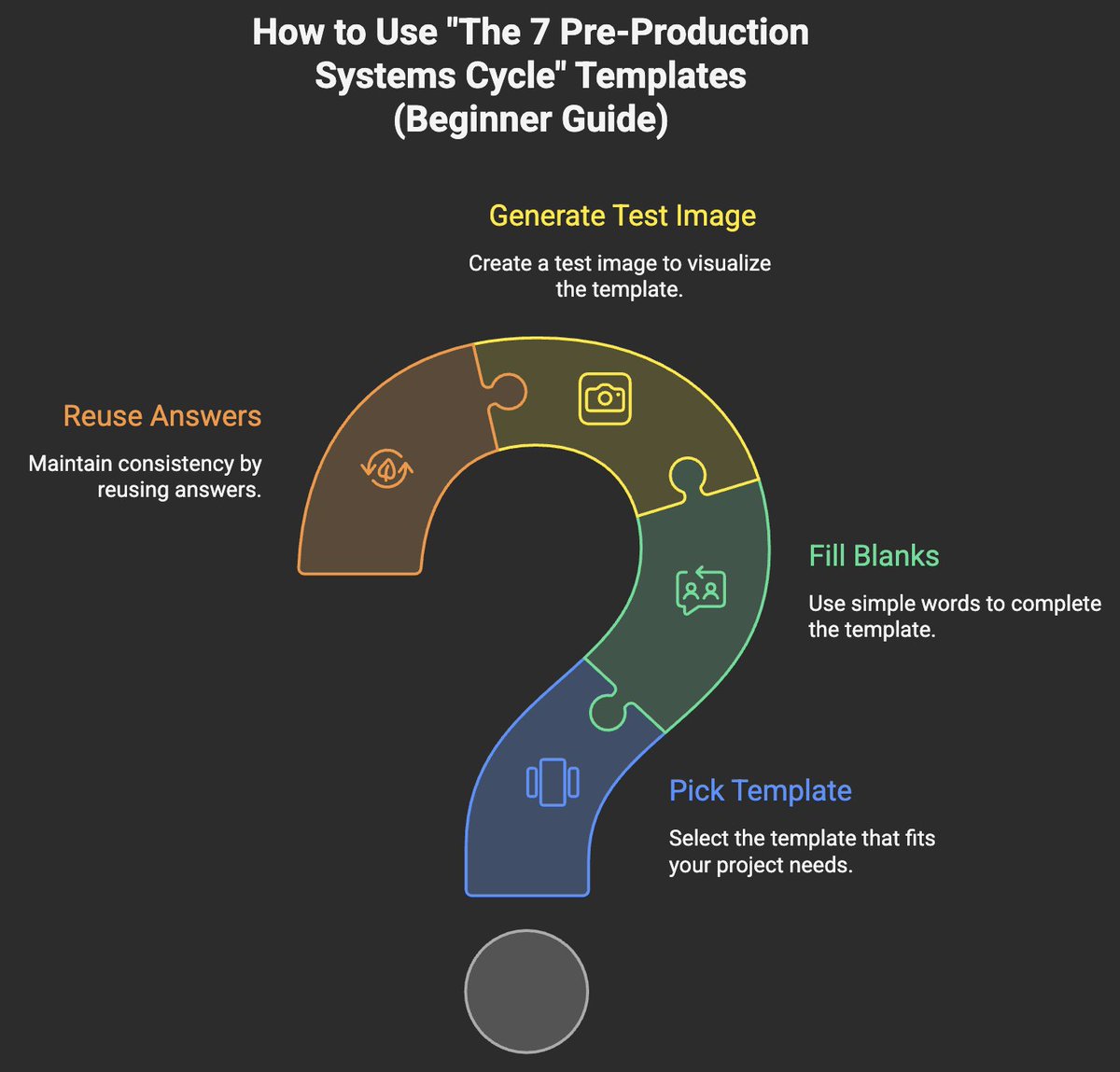

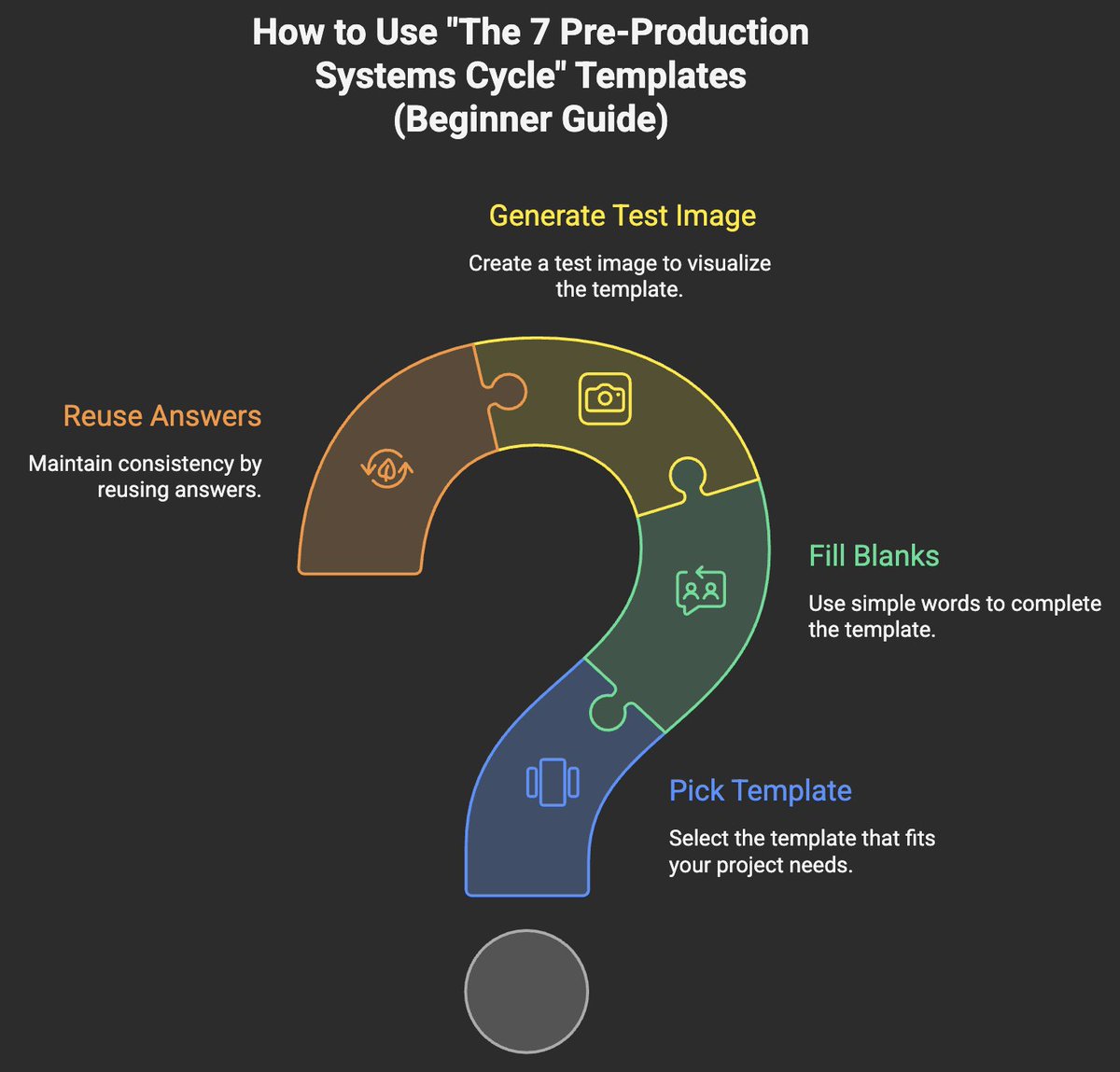

Step 1 — Pick the Template You Need

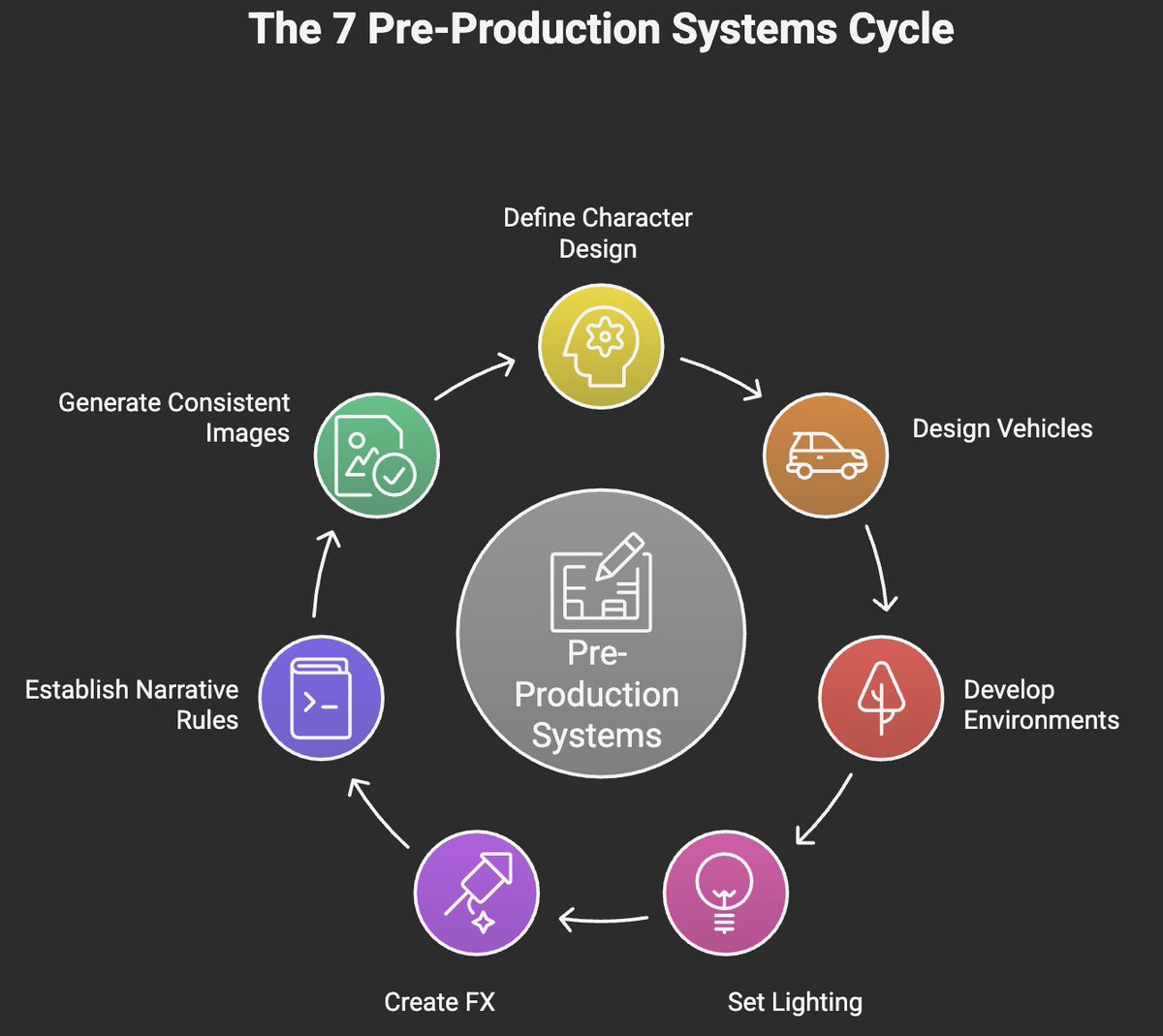

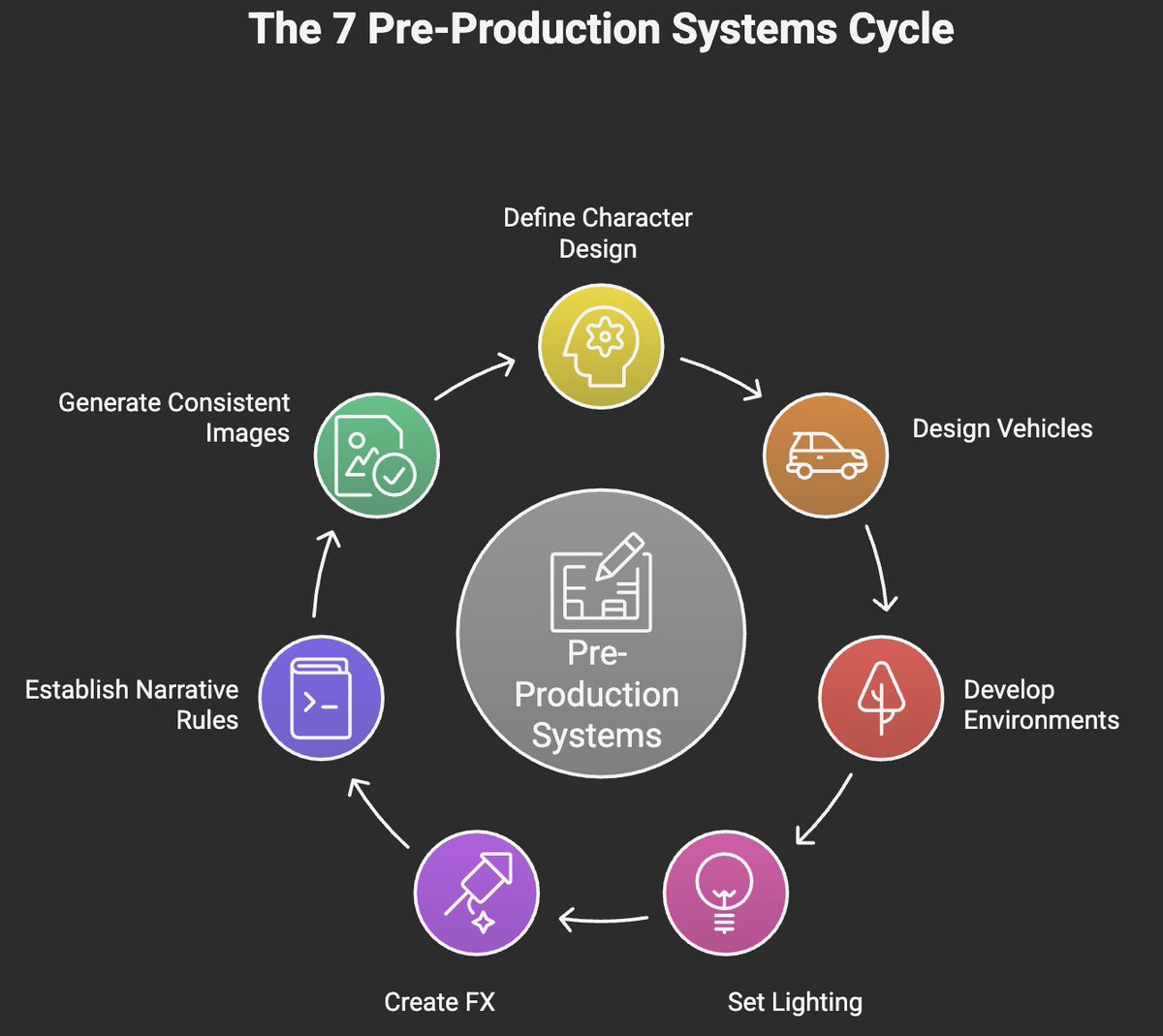

The 7 pre-production systems form a foundational framework that stabilizes your entire anime universe before any image, scene, or animation is generated. They define what your world looks like and how it behaves, and they keep it consistent no matter which AI model, prompt style, or creative direction you use.