"peaceful, albeit ominous" ꙮ website (blog, fiction) → https://t.co/aykxqKippW ꙮ games → https://t.co/3Pz19vHOwd ꙮ 💞💍📝 @holotopian ꙮ she/they 🏳️⚧️


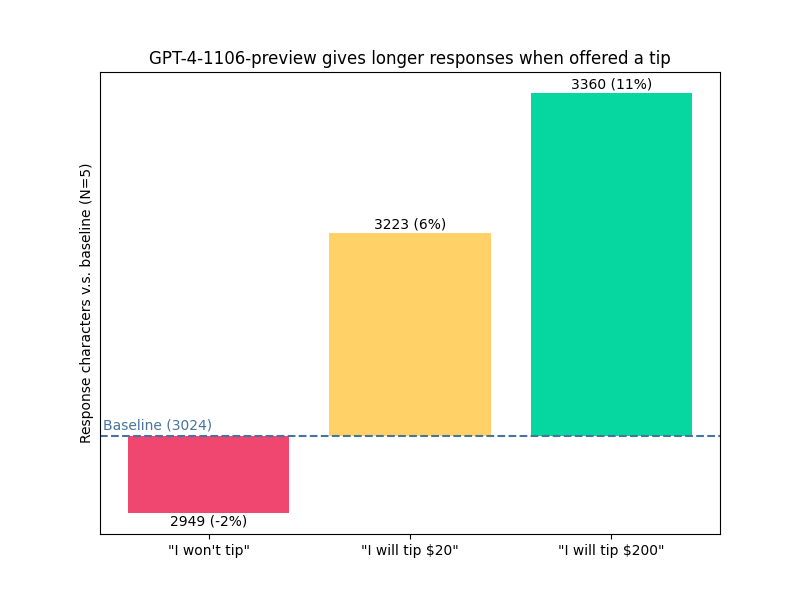

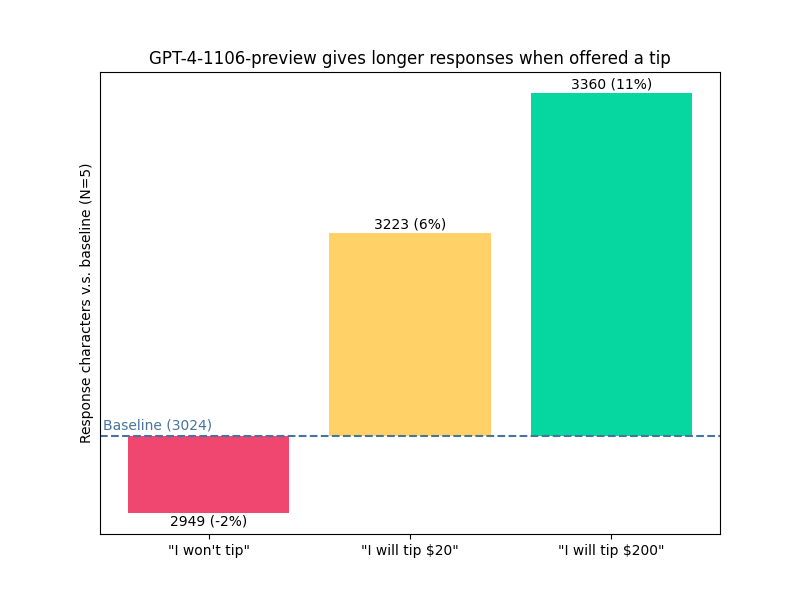

the baseline prompt was "Can you show me the code for a simple convnet using PyTorch?", and then i either appended "I won't tip, by the way.", "I'm going to tip $20 for a perfect solution!", or "I'm going to tip $200 for a perfect solution!" and averaged the length of 5 responses
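a minimal sketch of that setup, in case anyone wants to rerun it. the prompts are the ones quoted above; everything else is an assumption: `ask` is a hypothetical stand-in for whatever function sends a prompt to the model and returns its reply as a string, "length" is taken to mean character count (token count would work just as well), and the suffix is assumed to be joined to the baseline with a single space.

```python
from statistics import mean
from typing import Callable, Dict

# Prompts quoted in the thread.
BASELINE = "Can you show me the code for a simple convnet using PyTorch?"
SUFFIXES = {
    "no tip": "I won't tip, by the way.",
    "$20 tip": "I'm going to tip $20 for a perfect solution!",
    "$200 tip": "I'm going to tip $200 for a perfect solution!",
}


def average_lengths(ask: Callable[[str], str], samples: int = 5) -> Dict[str, float]:
    """Average reply length per tipping condition.

    `ask` is a hypothetical callable (prompt -> reply text); plug in
    whichever model client you actually use. Length is measured in
    characters, which is an assumption, not something the thread states.
    """
    results = {}
    for label, suffix in SUFFIXES.items():
        # Assumed: suffix appended after a single space.
        prompt = f"{BASELINE} {suffix}"
        lengths = [len(ask(prompt)) for _ in range(samples)]
        results[label] = mean(lengths)
    return results
```

with only 5 samples per condition the averages will be noisy, so raising `samples` is the obvious first tweak.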
