dei ex machina @openai, past: sora 1 & 2, posttraining o3/4o, applied research

To be clear I'm not one of the contributors to the o1 project: this has been the absolutely incredible work of the reasoning & related teams.

It's shockingly good at styles that require consistent patterning, like pixel art, mosaics, or dot matrices.

https://twitter.com/figma/status/1671563763789725698

Ok, simple clock working; seems promising. Add/sub/mult/div are already implemented for numbers by Figma, and it seems like there might be more ops for other types, which is great.

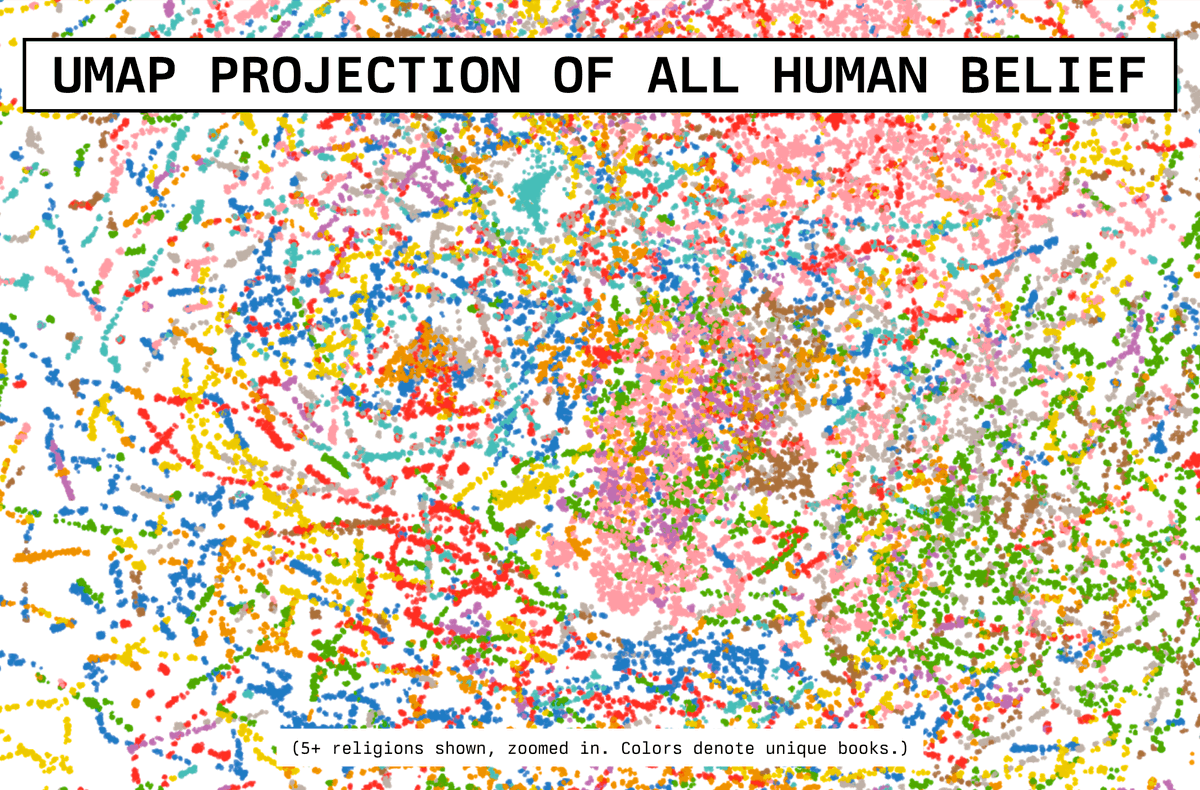

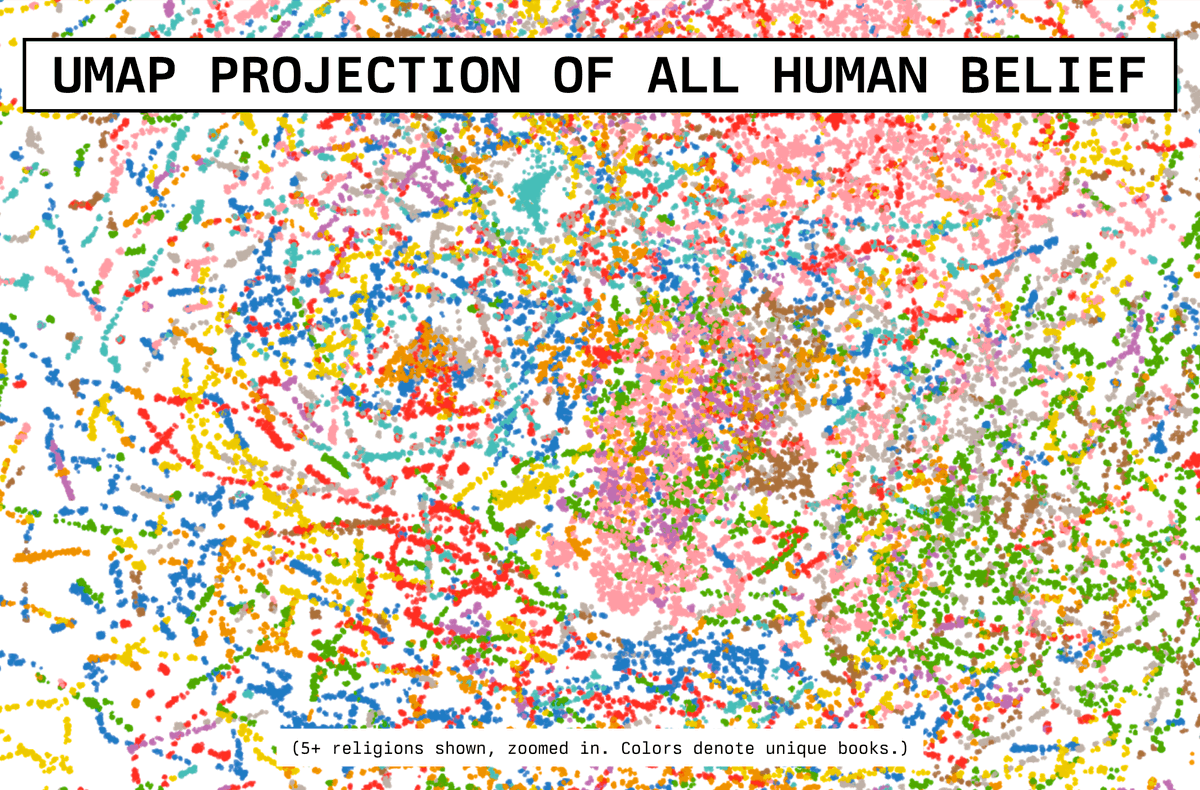

Last time, we released the embeddings for arXiv (600m+ tokens, 3.07B vector dims). We did this because we saw immediate usefulness in helping improve & accelerate research efforts, and we were right!

https://twitter.com/willdepue/status/1661781355452325889?s=20

A significant number of the world's problems are just search, clustering, recommendation, or classification; all things embeddings are great at.
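To make the claim concrete, here is a minimal sketch of how search (and, by the same ranking, recommendation) and classification can reduce to comparing embedding vectors. It assumes the embeddings already exist. The 3-dimensional vectors, the `paper_*` keys, and the `ml`/`bio` labels are hypothetical toy data, not real arXiv embeddings, and nearest-centroid is just one simple way to classify with embeddings.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def search(query_vec, corpus, k=2):
    """Search/recommendation: return the k keys whose embeddings are most similar to query_vec."""
    ranked = sorted(corpus, key=lambda key: cosine(query_vec, corpus[key]), reverse=True)
    return ranked[:k]

def classify(vec, centroids):
    """Classification: pick the label whose (hypothetical) centroid embedding is most similar."""
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))

# Hypothetical toy embeddings; real embedding models output far more dimensions.
corpus = {
    "paper_a": [0.9, 0.1, 0.0],
    "paper_b": [0.8, 0.2, 0.1],
    "paper_c": [0.0, 0.1, 0.9],
}
print(search([1.0, 0.0, 0.0], corpus))  # ['paper_a', 'paper_b']
print(classify([0.1, 0.2, 0.9], {"ml": [1.0, 0.0, 0.0], "bio": [0.0, 0.0, 1.0]}))  # bio
```

Clustering follows the same pattern: group vectors by the same similarity measure, for example with k-means over the embeddings.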

https://twitter.com/algekalipso/status/1609588936912900096

chessengines.org