Professor at NYU & Executive Chairman at AMI Labs.

Ex-Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.



https://twitter.com/tegmark/status/1650846314865782786

Worrying about superhuman AI alignment today is like worrying about turbojet engine safety in 1920.

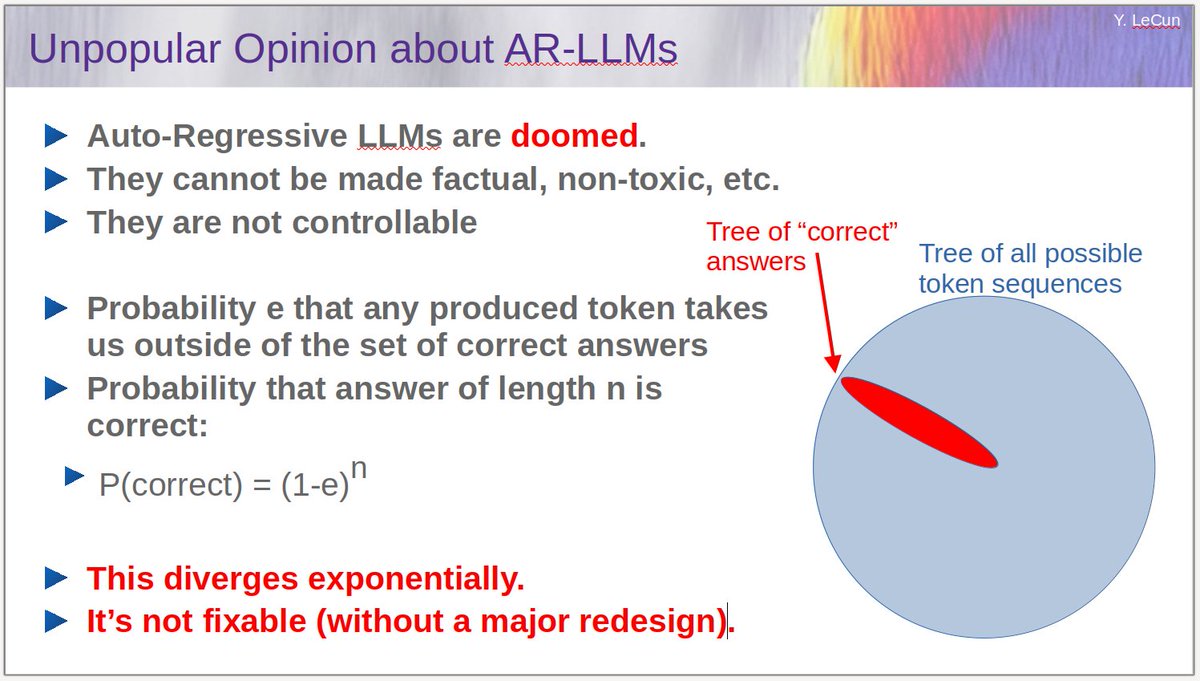


Errors accumulate.

https://twitter.com/stanislavfort/status/1640026125316378624

I must repeat:

https://twitter.com/RyanHaecker/status/1595050589163245569

OK people, relax. It's a joke!

https://twitter.com/ylecun/status/1591451408141885441

The no-free-lunch theorems tell us that, among all possible functions, the proportion that is learnable with a "reasonable" number of training samples is tiny.

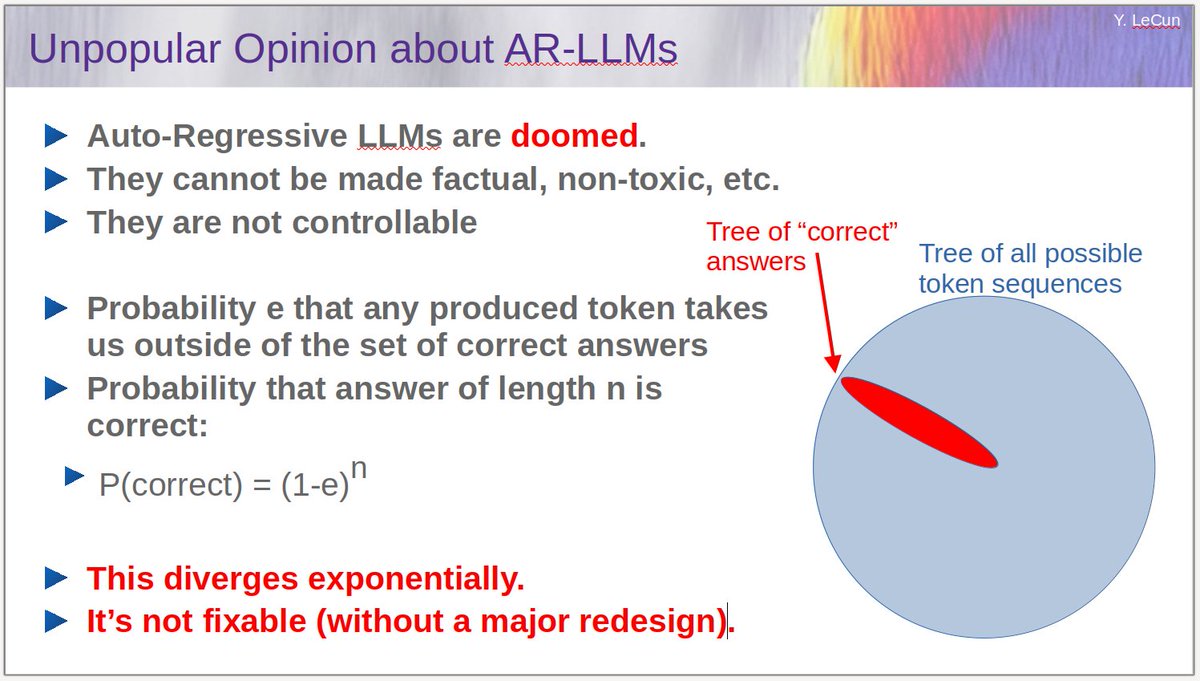
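The claim above is stated without the counting behind it. A minimal sketch of one standard way to make it concrete, assuming Boolean functions on n input bits and using the Sauer-Shelah lemma (an illustrative choice, not necessarily the argument used in the thread): there are 2^(2^n) such functions, while a hypothesis class of VC dimension d contains at most sum_{i<=d} C(2^n, i) of them. Standard PAC bounds tie the number of training samples to d (roughly d/ε samples are needed for error ε), so a "reasonable" sample budget keeps d small. The helper names below are hypothetical; results are in log2 units because the raw numbers are astronomically large.

```python
import math


def log2_num_boolean_functions(n: int) -> float:
    # 2**n possible inputs, each mapped to 0 or 1: 2**(2**n) functions in total.
    return float(2 ** n)


def log2_sauer_bound(n: int, d: int) -> float:
    # Sauer-Shelah: a class of VC dimension d realizes at most
    # sum_{i<=d} C(N, i) distinct labelings of N points; here N = 2**n,
    # i.e. the whole input space, so this bounds the size of the class.
    N = 2 ** n
    return math.log2(sum(math.comb(N, i) for i in range(d + 1)))


def log2_fraction(n: int, d: int) -> float:
    # log2 of (max functions in a VC-dimension-d class) / (all functions).
    return log2_sauer_bound(n, d) - log2_num_boolean_functions(n)


if __name__ == "__main__":
    # Let the VC dimension grow with the input size (d = n) and watch
    # the learnable fraction collapse.
    for n in (5, 10, 20):
        print(f"n={n:2d}  log2(fraction) <= {log2_fraction(n, n):.1f}")
```

For n = 20 and d = 20 the class can be indexed with a few hundred bits, whereas an arbitrary function needs 2^20 bits, so the learnable fraction is on the order of 2 to the power of minus one million.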

The paper distills much of my thinking over the last 5 or 10 years about promising directions in AI.


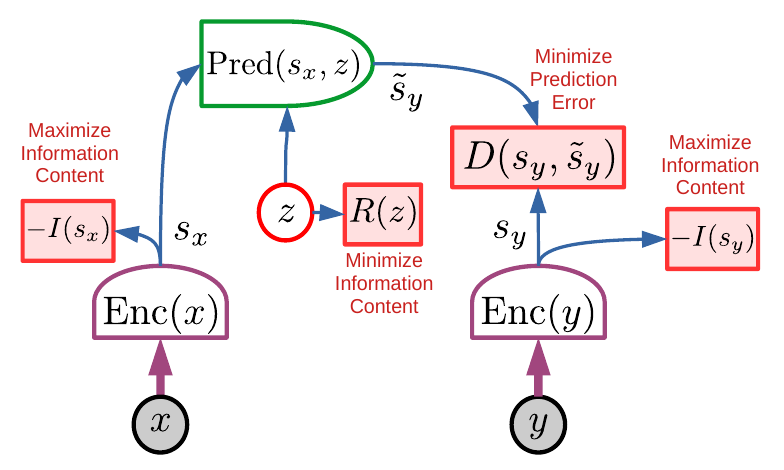

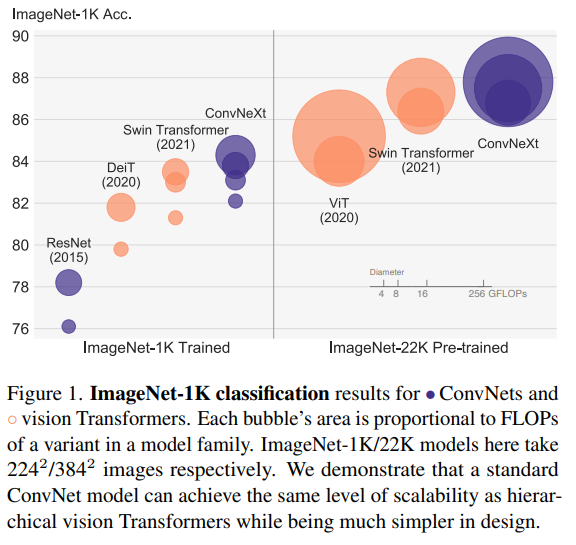

Some of the helpful tricks make complete sense: larger kernels, layer norm, fat layer inside residual blocks, one stage of non-linearity per residual block, separate downsampling layers....

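To make the list of tricks concrete, here is a minimal PyTorch sketch of a residual block and a stage-transition layer that combine them, in the spirit of ConvNeXt-style designs (an assumption: the excerpt does not name the model, and the kernel size 7 and expansion factor 4 below are illustrative defaults, not values taken from the source). The class names `ConvNeXtStyleBlock` and `Downsample` are hypothetical.

```python
import torch
import torch.nn as nn


class ConvNeXtStyleBlock(nn.Module):
    """Residual block: large depthwise kernel, LayerNorm, fat MLP, one non-linearity."""

    def __init__(self, dim: int, kernel_size: int = 7, expansion: int = 4):
        super().__init__()
        # Larger kernel, applied depthwise (one filter per channel); padding keeps H, W.
        self.dwconv = nn.Conv2d(dim, dim, kernel_size, padding=kernel_size // 2, groups=dim)
        # Layer norm over the channel dimension (applied in channels-last layout).
        self.norm = nn.LayerNorm(dim)
        # "Fat" layer inside the block: expand channels, then project back.
        self.expand = nn.Linear(dim, expansion * dim)
        self.act = nn.GELU()  # the block's single non-linearity
        self.project = nn.Linear(expansion * dim, dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:  # x: (N, C, H, W)
        residual = x
        x = self.dwconv(x)
        x = x.permute(0, 2, 3, 1)  # (N, H, W, C) so LayerNorm/Linear act on channels
        x = self.project(self.act(self.expand(self.norm(x))))
        x = x.permute(0, 3, 1, 2)  # back to (N, C, H, W)
        return residual + x


class Downsample(nn.Module):
    """Separate downsampling layer between stages: normalize, then stride-2 conv."""

    def __init__(self, in_dim: int, out_dim: int):
        super().__init__()
        self.norm = nn.LayerNorm(in_dim)
        self.conv = nn.Conv2d(in_dim, out_dim, kernel_size=2, stride=2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.norm(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)
        return self.conv(x)


if __name__ == "__main__":
    stage = nn.Sequential(ConvNeXtStyleBlock(64), ConvNeXtStyleBlock(64), Downsample(64, 128))
    y = stage(torch.randn(1, 64, 32, 32))
    print(tuple(y.shape))
```

Keeping downsampling out of the residual blocks means every block preserves its input shape, so the residual addition never needs a projection shortcut.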

https://twitter.com/CSProfKGD/status/1412479324016545795

We started working with a development group that built OCR systems from it. Shortly thereafter, AT&T acquired NCR, which was building check imagers/sorters for banks. Images were sent to humans for transcription of the amount. Obviously, they wanted to automate that.

https://twitter.com/annadgoldie/status/1402644252320886786

This is the kind of problem where gradient-free optimization must be applied, because the objectives are not differentiable with respect to the relevant variables. [Continued...]

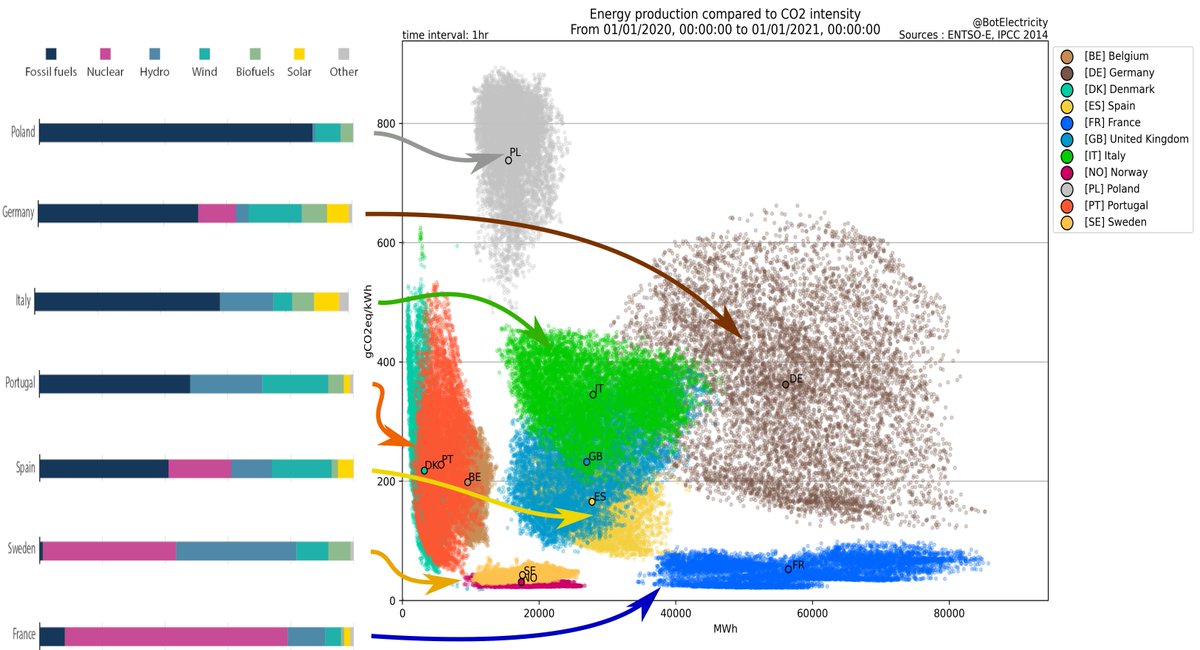

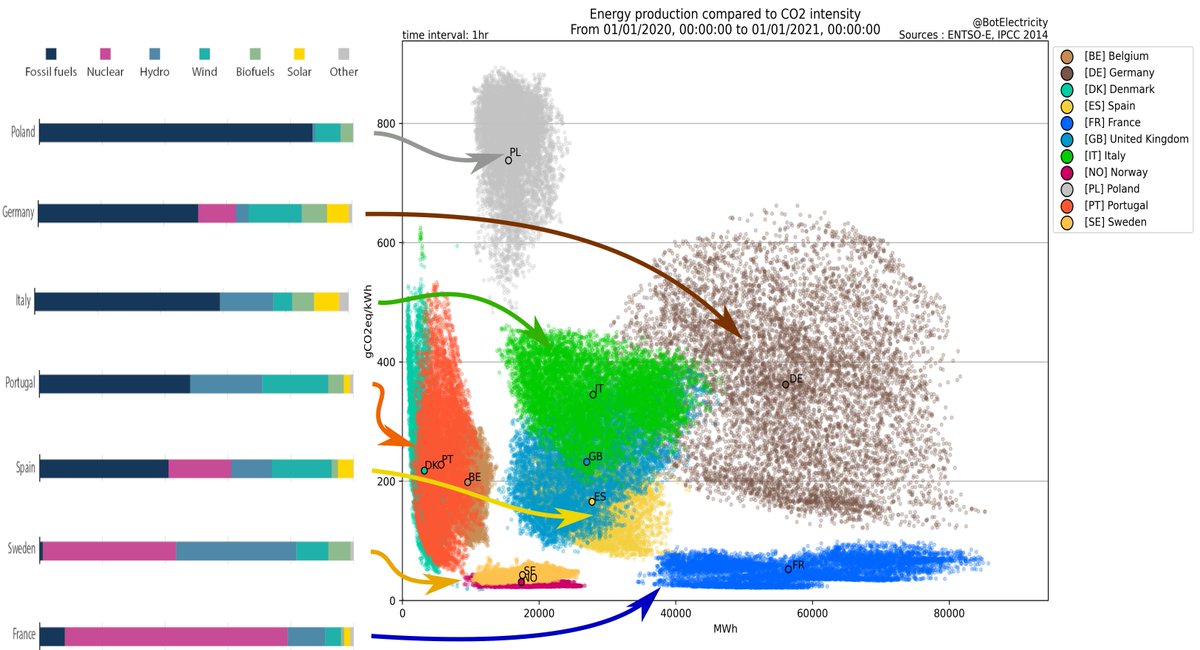
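As an illustration of why gradients are unavailable here, a minimal sketch on a hypothetical toy placement problem: cells occupy distinct slots on a small grid, and the objective is the total Manhattan wire length of two-pin nets. Positions are discrete, so the objective has no gradient with respect to them. Simulated annealing is swapped in as one classic gradient-free method; the source does not say which method is used, and the grid size, netlist, and schedule below are all assumptions.

```python
import math
import random

# Hypothetical toy instance: 8 cells on a 4x4 grid, two-pin nets forming
# two 4-cycles joined by a single net (0, 4).
GRID = 4
NUM_CELLS = 8
NETS = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 4), (4, 5), (5, 6), (6, 7), (7, 4)]


def cost(pos):
    """Total Manhattan wire length; pos[c] is the slot index of cell c."""
    total = 0
    for a, b in NETS:
        ra, ca = divmod(pos[a], GRID)
        rb, cb = divmod(pos[b], GRID)
        total += abs(ra - rb) + abs(ca - cb)
    return total


def anneal(steps=20000, t0=2.0, seed=0):
    rng = random.Random(seed)
    pos = rng.sample(range(GRID * GRID), NUM_CELLS)  # random initial placement
    current = initial = cost(pos)
    best_pos, best = list(pos), current
    for step in range(steps):
        t = max(t0 * (1 - step / steps), 1e-3)  # linear cooling schedule
        c = rng.randrange(NUM_CELLS)
        s = rng.randrange(GRID * GRID)
        old = list(pos)
        if s in pos:  # target slot occupied: swap the two cells
            d = pos.index(s)
            pos[c], pos[d] = pos[d], pos[c]
        else:  # target slot free: move the cell there
            pos[c] = s
        delta = cost(pos) - current
        # Accept improvements always, and worse moves with probability exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current += delta
            if current < best:
                best_pos, best = list(pos), current
        else:
            pos = old  # reject: restore the previous placement
    return initial, best, best_pos


if __name__ == "__main__":
    initial, best, best_pos = anneal()
    print(f"wire length: initial={initial}, best={best}")
```

The method only ever evaluates the objective and compares values, which is exactly what makes it usable when the objective is not differentiable in the variables being optimized.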

France: low overall CO2 emissions, low variance in emissions, relying essentially on nuclear energy with a bit of hydro [reminder: nuclear power produces essentially no CO2].
