After a quick ☕ break, we're back for the 2nd keynote, Katharina Rohlfing on gesture and language acquisition. #LingCologne

https://twitter.com/CCLS_unicologne/status/1136567687277137920

Deictic pointing gestures appear early in communication, reflect interactional skills and coordinated attention, and aid lexical acquisition.

#LingCologne

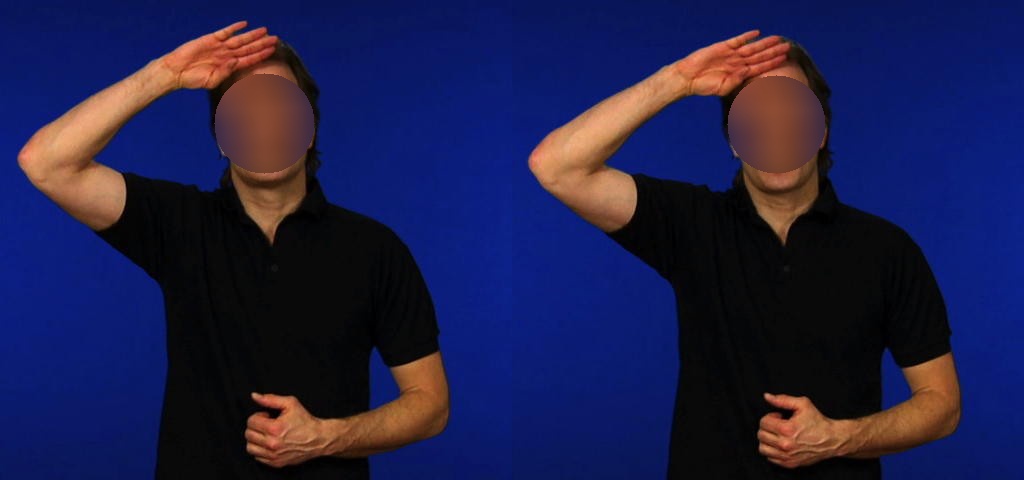

Iconic gestures come later in development. They are more complicated: what does the hand represent (object/handling), and from which perspective (observer/character)? #LingCologne

Conventional gestures include headshakes and nods. Like deictic and iconic gestures, they convey and reinforce meaning. #LingCologne

Two views of gesture:

— It aids speech

— It is itself part of grammatical structure

Is gesture epiphenomenal or not? #LingCologne

Over time, there's synchronization happening in the child. Gesture and speech signals converge (co-occurring and prosodically aligning). #LingCologne

Moving on to interpersonal synchronization. Already at 3 months old, there is coordination of eye gaze. #LingCologne

Later, eye contact breaks more often, but joint attention interacts with vocabulary and deictic gestures in intersubjective communication. #LingCologne

Gesture matters to the speaker:

— engages motor system

— activates and manipulates spatio-motoric information for speaking and thinking

#LingCologne

Gesture matters to the listener:

— listeners extract info from gestures and adjust their communication

— caregivers adjust to their children's linguistic skills

#LingCologne

Something welcome that we don't often see in keynotes: how do we analyze gesture, practically and theoretically? The talk lists models by e.g. @ozyurek_a, @GestureSignLab, @sotarokita, and @mwalibali

#LingCologne
