Here are some interesting papers/posts/threads on limitations of AI ethics principles. Please share if you have more.

"Better, Nicer, Clearer, Fairer: A Critical Assessment of the Movement for Ethical Artificial Intelligence and Machine Learning", which analyzed 7 high-profile AI values/ethics statements

https://twitter.com/math_rachel/status/1166848220313440257?s=20

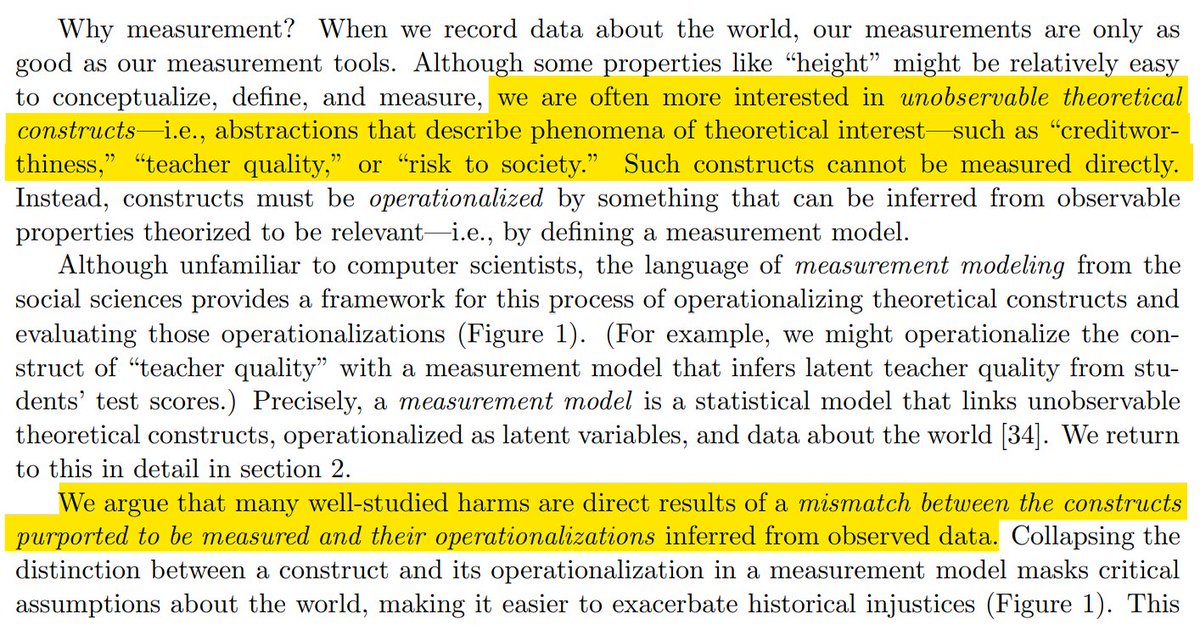

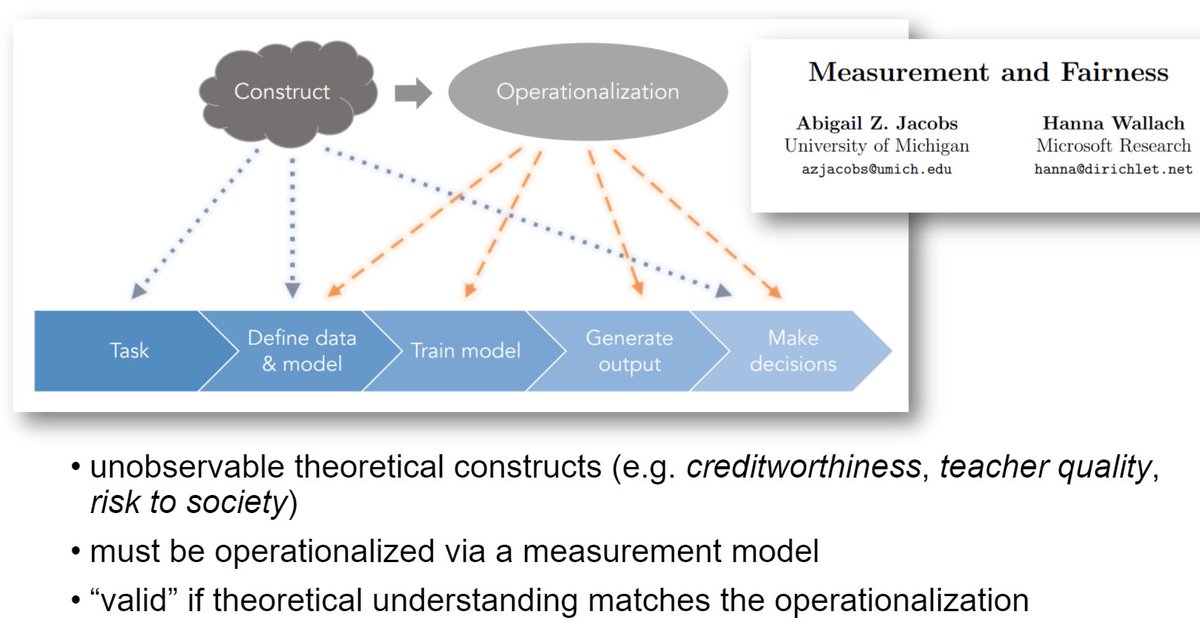

Many principles proposed in AI ethics are too broad to be useful and could end up postponing meaningful debate.

"The role and limits of principles in AI ethics: towards a focus on tensions" by @jesswhittles

https://twitter.com/math_rachel/status/1149093852193669121?s=20

@jesswhittles Perhaps not a limitation, but @cwiggins shares the importance of distinguishing between:

- Defining ethics (e.g. the Belmont Principles)

- Operationalizing (e.g. IRBs)

- Flow of power that makes this possible (e.g. universities need federal funding, so they comply)

fast.ai/2019/03/04/eth…

@jesswhittles @cwiggins Chris covers this in more depth in his Data: Past, Present, & Future course. In particular, see Lecture 12 on how the outcry over the Tuskegee Syphilis Trial led to new standards for human subject research:

github.com/data-ppf/data-…

@jesswhittles @cwiggins @rajiinio made several great points here:

https://twitter.com/rajiinio/status/1170815042251579392?s=20

7 Traps of AI Ethics:

https://twitter.com/purpultat/status/1159535092089286656?s=19

If you are failing at “regular” ethics, you won’t be able to embody AI ethics either. The two are not separate.

fast.ai/2019/04/22/eth…

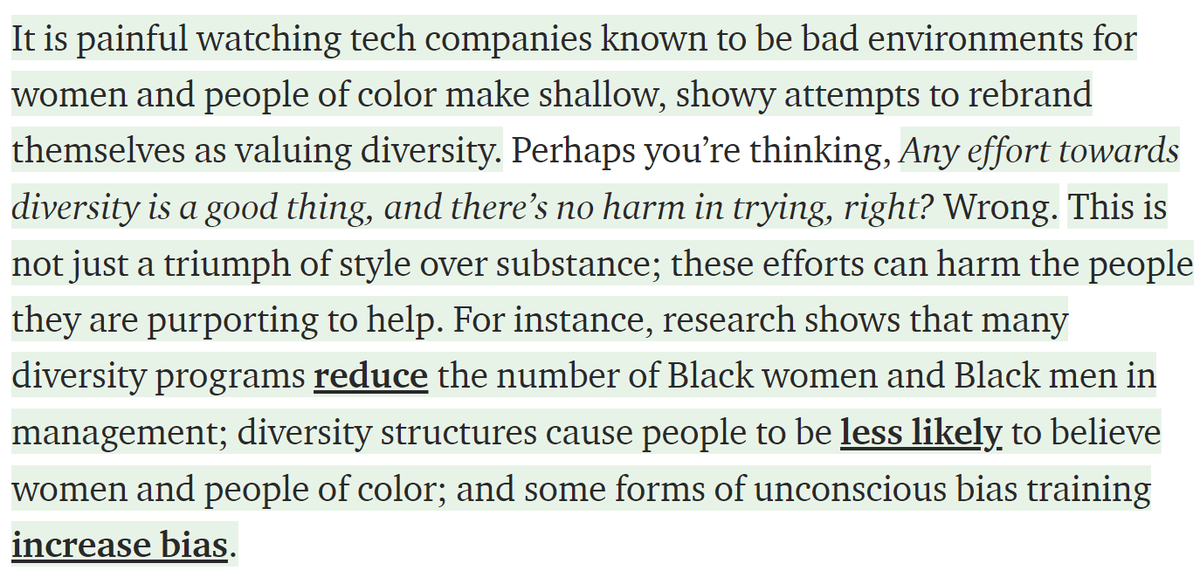

The mere presence of diversity policies can make white people less likely to believe racial discrimination exists, and men less likely to believe gender discrimination exists, despite other evidence.

We may see a similar effect with AI ethics statements.

medium.com/tech-diversity…

Report from @article19org @VidushiMarda on limitations of normative ethics and FAT (Fairness, Accountability, & Transparency) approaches, and how a human rights based approach could strengthen them:

https://twitter.com/math_rachel/status/1171936910689746944?s=20
