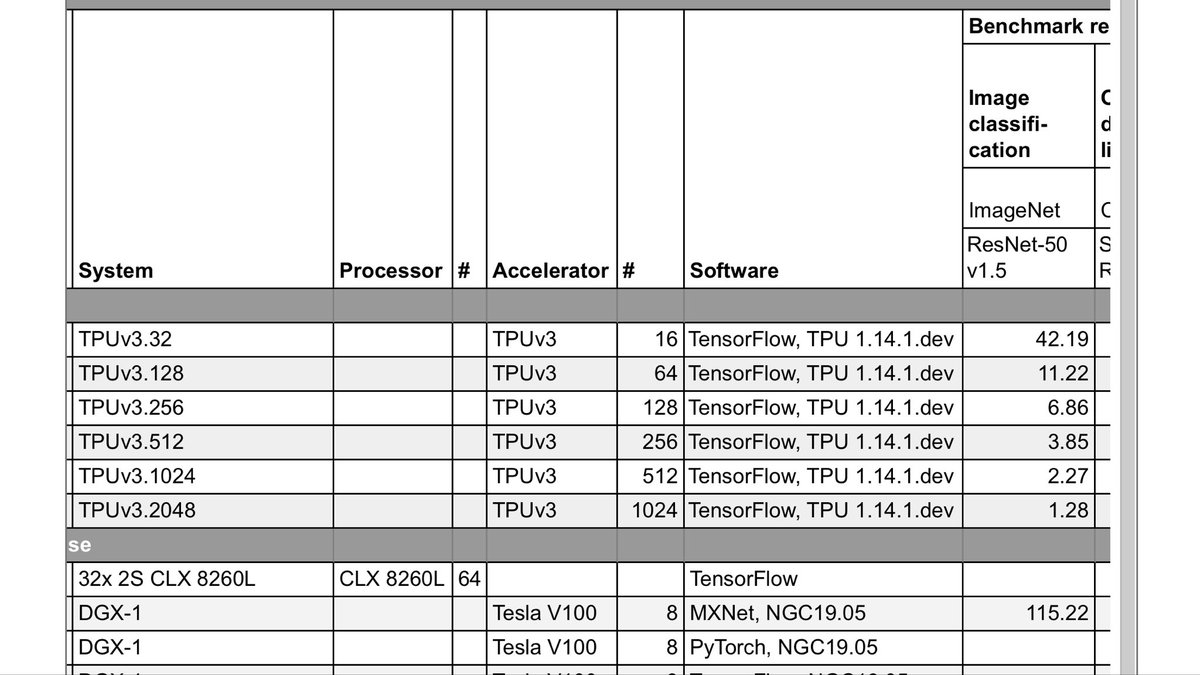

(480,000 images per second. 224x224 res JPG.)

Before you think highly of me, all I did was run Google’s code. It was hard though.

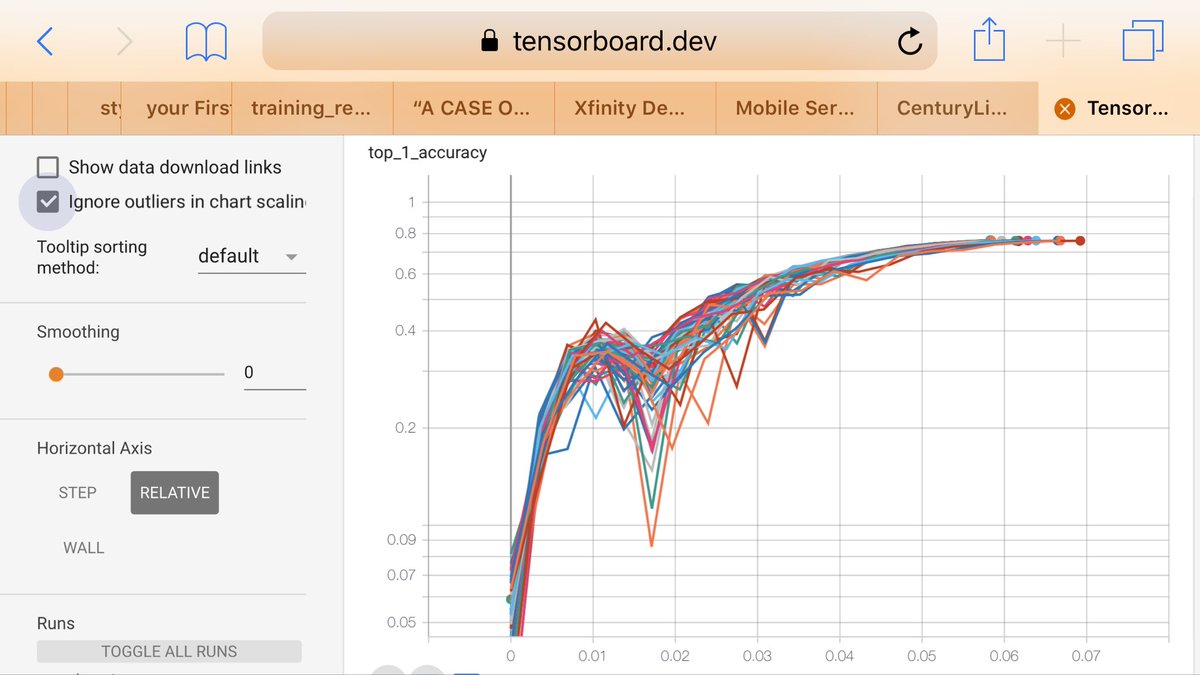

Logs: tensorboard.dev/experiment/jsD…

(3.51 minutes on a v3-512 is slightly faster than their posted result of 3.85 minutes, too!)

Spoiler: nope! It’s legit. It’s faster because:

2. The TF library is also 1.14 on the VM. I suspect internals are different.

If you want to know how to do “TPU without estimator,” this is *the* resource: github.com/mlperf/trainin…

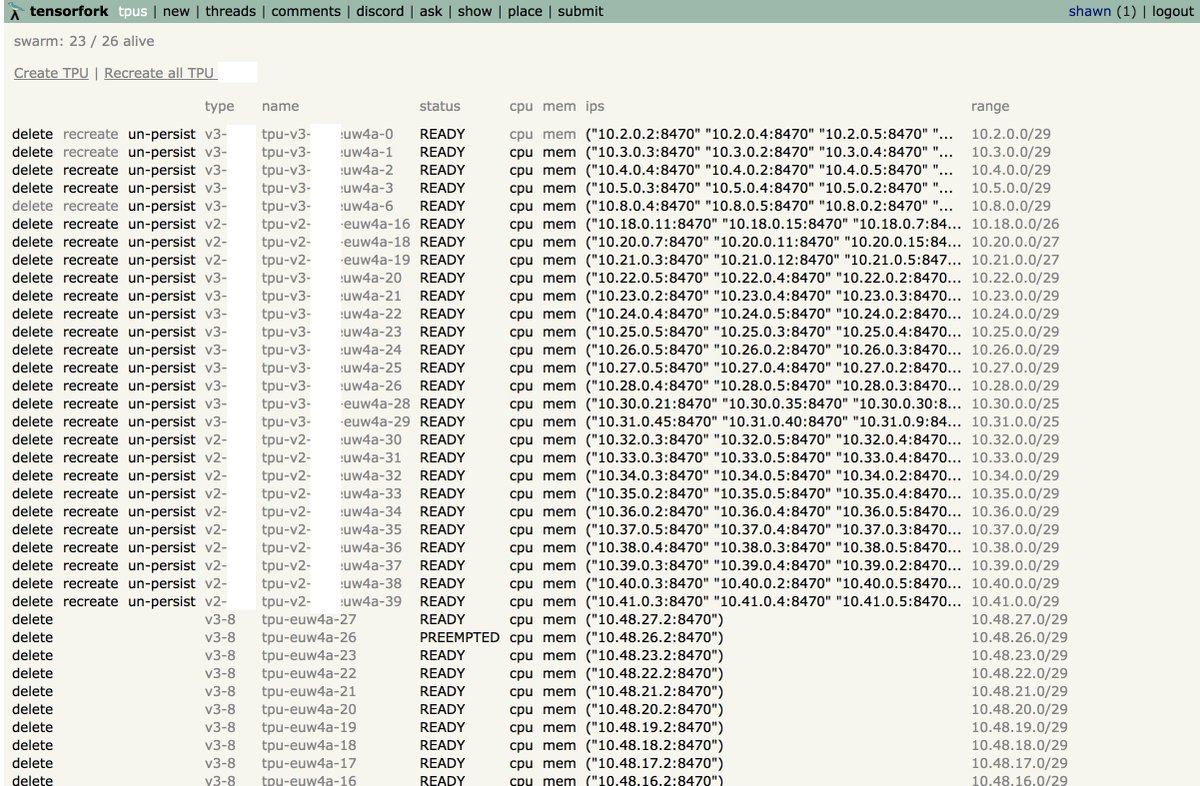

A TPU pod is a collection of TPUv3-8’s. When you see “TPUv3-512”, divide the 512 cores by 8: there are 64 individual TPUs.

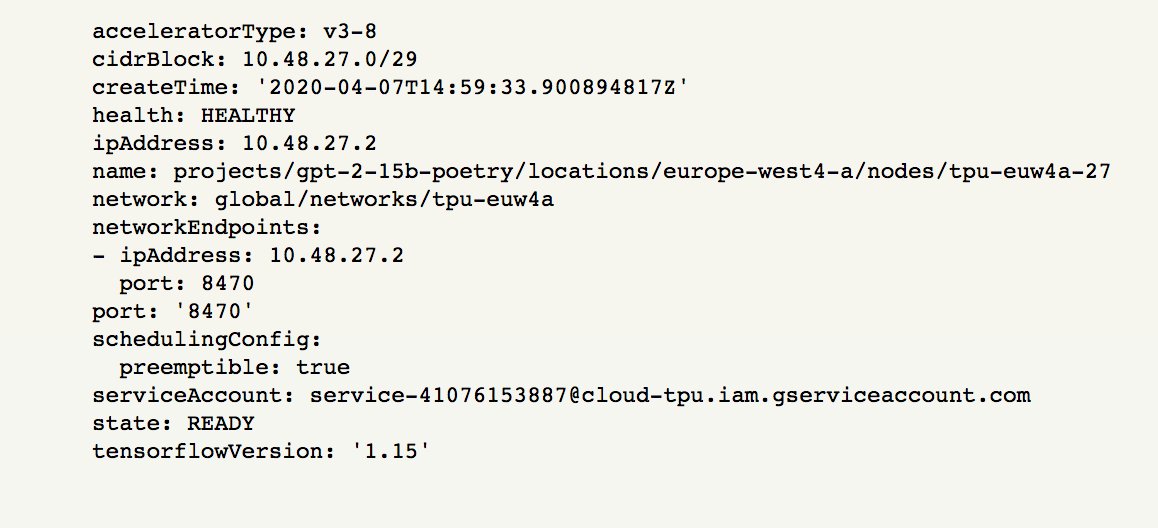

Each TPU can simultaneously read from a different shard of the 1024-file dataset.
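Here is a rough sketch of that arithmetic, and of what "a different shard" could look like. The file-name pattern and the round-robin assignment are assumptions for illustration, not something taken from the benchmark code.

```python
CORES_PER_TPU = 8  # a TPUv3-8 has 8 cores


def num_tpus(pod_cores):
    """Number of individual TPUv3-8s in a pod with `pod_cores` cores."""
    assert pod_cores % CORES_PER_TPU == 0
    return pod_cores // CORES_PER_TPU


def shards_for(tpu_index, tpus, num_files=1024):
    """File names handed to one TPU (hypothetical naming, round-robin split)."""
    return [f"train-{i:05d}-of-{num_files:05d}"
            for i in range(tpu_index, num_files, tpus)]


tpus = num_tpus(512)
print(tpus)                      # 64
print(len(shards_for(0, tpus)))  # 16
print(shards_for(3, tpus)[:2])   # ['train-00003-of-01024', 'train-00067-of-01024']
```

With 64 TPUs and 1024 files, each TPU ends up with 16 files of its own, so no two of them ever read the same file.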

Picture a TPU. You probably think of “8 cores with 16GB each.” No, don’t think like that.

Think of a TPU like a desktop computer with a CPU, 300GB RAM, and 8 video cards (16GB each).

You can run code on the TPU’s CPU!

Input processing happens in parallel with ResNet training. The TPU’s CPU prepares the next inputs while each TPU core trains on the previous batch.
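The overlap can be pictured as a producer and a consumer sharing a small buffer. This is a toy sketch using plain Python threads, not the benchmark's actual input pipeline; `prepare_batches` and `train` are hypothetical names, and the "batches" are placeholders.

```python
import queue
import threading


def prepare_batches(num_batches, out):
    """Stand-in for the host CPU: build batches and enqueue them."""
    for step in range(num_batches):
        batch = [step] * 4  # placeholder for decoded images
        out.put(batch)      # blocks while the buffer is full
    out.put(None)           # sentinel: no more data


def train(num_batches=5, buffer_size=2):
    """Stand-in for the TPU cores: consume batches as they become ready."""
    buf = queue.Queue(maxsize=buffer_size)
    producer = threading.Thread(target=prepare_batches, args=(num_batches, buf))
    producer.start()
    results = []
    while True:
        batch = buf.get()
        if batch is None:
            break
        results.append(sum(batch))  # placeholder for one training step
    producer.join()
    return results


print(train())  # [0, 4, 8, 12, 16]
```

The bounded queue is the point: the producer runs ahead by at most `buffer_size` batches, so the consumer rarely waits and memory stays capped.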

For TPUv3-256 and above, the batch size is 32,768. Such huge batch sizes cause problems, because you have to raise the learning rate as you increase the batch size. Normally this causes divergence.
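To get a feel for how far the learning rate moves, here is the widely used linear scaling heuristic. This is an assumption for illustration: the thread doesn't say which scaling rule the benchmark applies, and the base values (0.1 at batch size 256) are just a common ResNet-50 starting point.

```python
def scaled_lr(batch_size, base_lr=0.1, base_batch=256):
    """Linear scaling heuristic: learning rate grows in proportion to batch size."""
    return base_lr * batch_size / base_batch


for bs in (256, 1024, 32768):
    print(bs, scaled_lr(bs))
```

At a batch size of 32,768 this gives a rate 128 times the base one, which is the kind of jump that plain SGD tends not to survive.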

LARS (Layer-wise Adaptive Rate Scaling) gets around this with a learning rate per layer.
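A stripped-down sketch of the per-layer idea, following the trust-ratio formula from the LARS paper. It leaves out momentum, the hyperparameter values are illustrative, and falling back to 1.0 on a zero norm is my own choice here; none of this is copied from the benchmark code.

```python
import math


def l2_norm(xs):
    return math.sqrt(sum(x * x for x in xs))


def lars_local_lr(weights, grads, trust_coeff=0.001, weight_decay=0.0):
    """Per-layer rate: trust_coeff * ||w|| / (||g|| + weight_decay * ||w||)."""
    w_norm = l2_norm(weights)
    g_norm = l2_norm(grads)
    if w_norm == 0.0 or g_norm == 0.0:
        return 1.0  # assumption: fall back to the global rate alone
    return trust_coeff * w_norm / (g_norm + weight_decay * w_norm)


def lars_step(weights, grads, global_lr, trust_coeff=0.001, weight_decay=0.0):
    """One momentum-free update for a single layer."""
    local_lr = lars_local_lr(weights, grads, trust_coeff, weight_decay)
    return [w - global_lr * local_lr * (g + weight_decay * w)
            for w, g in zip(weights, grads)]


# Two layers with the same weights but gradients 10x apart in magnitude.
quiet = lars_local_lr([3.0, 4.0], [0.6, 0.8])
loud = lars_local_lr([3.0, 4.0], [6.0, 8.0])
print(quiet / loud)  # roughly 10: the layer with larger gradients gets a smaller rate
```

Because each layer's step is scaled by the ratio of its weight norm to its gradient norm, a layer with huge gradients can't blow up just because the global rate is large.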

I was surprised by just how pretty the benchmark code was. And I think that’s why it’s fast.

Wild animals are beautiful because they have hard lives. This code is beautiful because it has zero overhead. Nothing is wasted.

github.com/mlperf/trainin…