Now starting in on the research talks at NDSS BAR. First up, a talk via Skype since the authors were not able to make it due to travel restrictions: Similarity Metric Method for Binary Basic Blocks of Cross-Instruction Set Architecture

Basic background: we want to compute code similarity by finding an embedding for each basic block in a binary

Prior work took an NMT approach using RNNs (last year at NDSS, ndss-symposium.org/ndss-paper/neu…)

The use triplet loss, with 3 basic blocks per training sample: an anchor, a positive sample, and a negative sample. One novel feature is to use *hard* negative samples – code that looks similar to the anchor, but is not a true match

Evaluation is on x86-ARM, with MISA dataset: 1.1 million semantically equivalent basic block pairs from open source projects.

Solution uses both pre-training and fine-tuning. In the evaluation, they show that pre-training really does help!

Q: How does this extend to other architectures? A: Benefit of ML here is that as long as we have training data, adding other architectures is straightforward, approach is not specific to x86<->ARM case.

(It's quite late in China, so big thanks to the authors for staying up to present :) )

Next up, we have "o-glassesX: Compiler Provenance Recovery with Attention Mechanism from a Short Code Fragment" – basically, given some examples of binary code, can we figure out what compiler was used? Can help with attribution in malware.

First note: single binary can be linked from multiple object files with different compilers. So we want to use smaller code fragments to classify each part.

Interesting – it turns out this can be done with fairly short fragments of code; only 16 instructions (30-60 bytes of x86).

They re-use the Transformer architecture, which has recently been super successful in NLP (GPT-2 is the most famous recent example).

Also cool: you can get some degree of explainability by looking at the attention layer to figure out what instruction has the most influence

You can also visualize the binary in terms of the classifications to figure out where the module boundaries are

Now up: Creating Human Readable Path Constraints from Symbolic Execution. Interesting problem—SMT constraints generated by tools are extremely verbose/messy, hard to interpret.

[BDG: Yes!! for example:

https://twitter.com/moyix/status/707027907164676096]

In addition to benefits to interpretability of the constraints, we may also want to transform them into other forms to make them easier for solvers, or translate to a different domain (Bitvecs -> ints, e.g.)

A big part of this complexity can come from the translation down to bit vectors, which are hard for humans to interpret. Translating into the integer domain can make it easier to understand.

Caveat: domains may not be precisely equivalent for a given path constraint; paper discusses how to figure out whether they are.

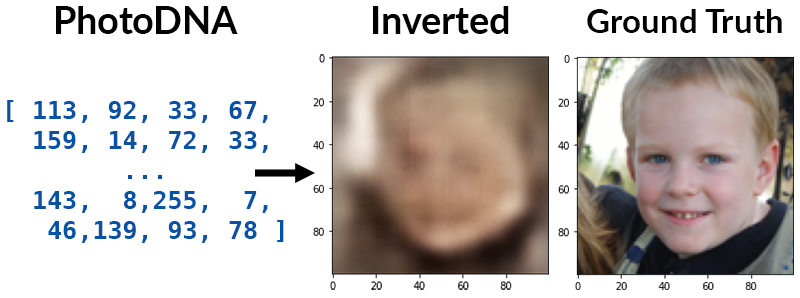

Some examples of simplification. When does this function (first image) return y-2? ClariPy (second image) vs simplified integer domain translation (third image)

How does it work technically? The authors use logic synthesis tools with gate-libraries created for human readability to convert path constraints into more readable forms. Existing constraint simplifications are tailored toward solver efficiency, not readability.

Future work: quantify the notion of human-readability so can compare different possible answers; analysis on real binaries rather than toy programs; extend basic ideas to more data-types and domains (e.g., strings).

Last talk of this session looks at TrustZone—"Finding 1-Day Vulnerabilities in Trusted Applications using Selective Symbolic Execution"

Background: TEEs are an interesting attack surface; code runs in a protected environment but may be vulnerable via the API exposed to user programs (e.g.: cvedetails.com/cve/CVE-2016-8…). Want to look for "1-days" in such code by diffing patched vs unpatched code.

TrustZone based on hardware feature of ARM processors, you have a "normal" world where untrusted code runs and a "secure" world where trusted apps run. TrustZone is used for all sorts of things like DRM, encryption, etc.

Challenges: trusted apps (TAs) are closed-source, can't be debugged, and can't be modified due to code signing.

The authors build SimTA, which extends angr by providing an execution environment that mimics the execution environment inside the TEE.

Cool detail: to figure out how the TEE environment worked, the authors actually developed and used an exploit for CVE-2016-8764 and used to to reverse engineer the environment.

SimTA loads the TA according to the memory map they reverse engineered using their exploit, hooks the input/output of the APIs used for TA lifecycle management, and has angr SimProcedures for the GP Internal Core API.

Case study: rediscovering CVE-2016-8764. Used BinDiff to identify changed code between patched & unpatched. Then selectively symbolically execute the modified codepath to find an input that can trigger the vulnerability.

By comparing the path constraints, they can find a new constraint on the input in the patched version that corresponds to the vulnerability that was fixed.

• • •

Missing some Tweet in this thread? You can try to

force a refresh