A multimedia tutorial & review in a thread! 👇

📝 Texture Synthesis Using Convolutional Neural Networks

🔗 arxiv.org/abs/1505.07376 #ai

✏️ Beginner-friendly insight or exercise.

🕳️ Related work that's relevant here!

📖 Open research topic of general interest.

💡 Insight or idea to experiment further...

See this thread for context and other reviews:

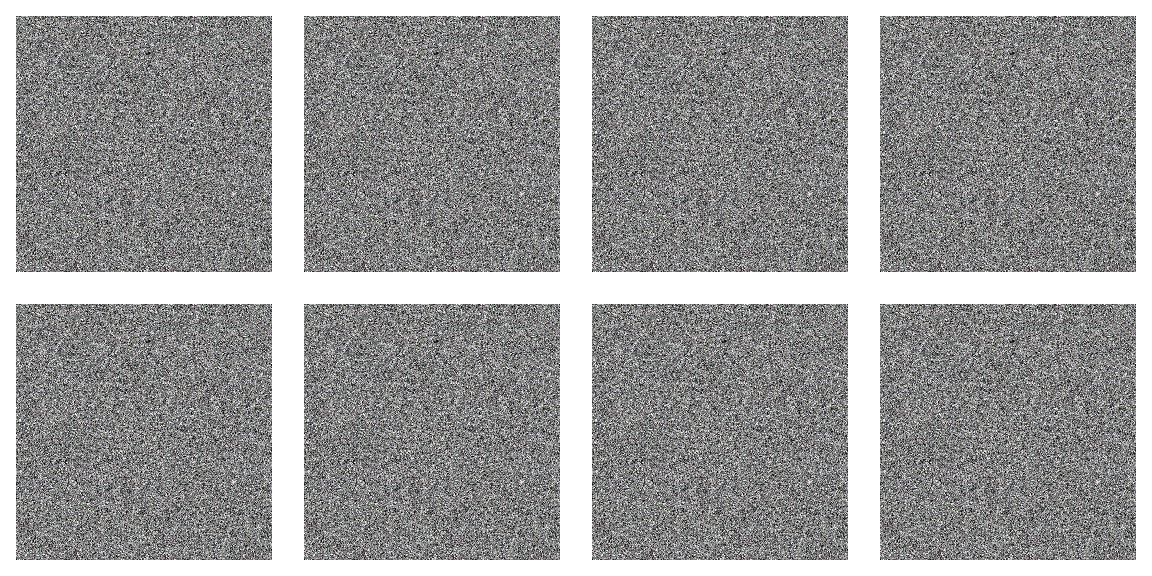

📺 Here are 500 steps of the optimization for the textures above:

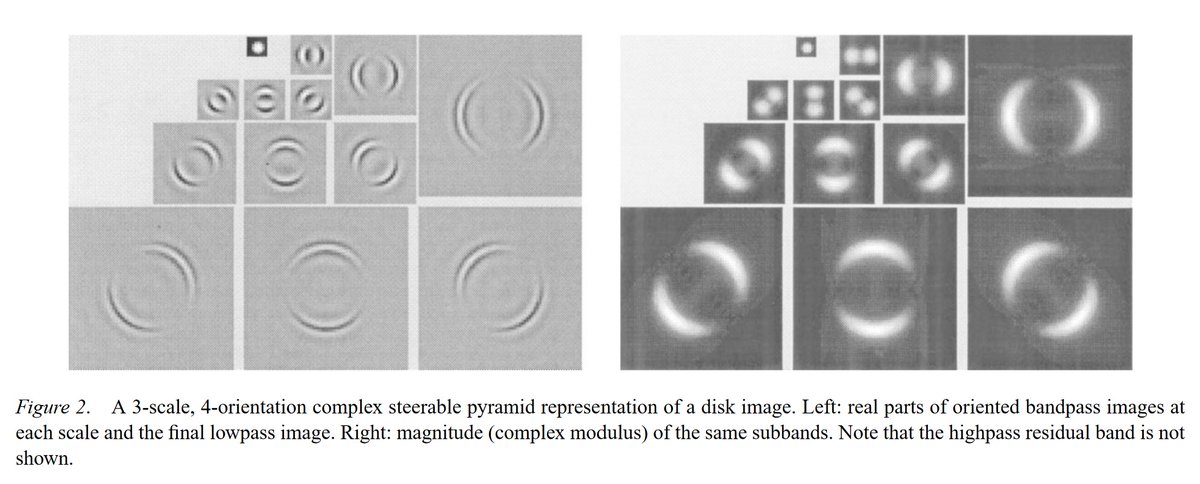

🕳️ Previous work by Portilla and Simoncelli used manually crafted feature detectors based on the visual cortex.

📝 A Parametric Texture Model Based on Joint Statistics of Complex Wavelet Coefficients

🔗 cns.nyu.edu/pub/lcv/portil…

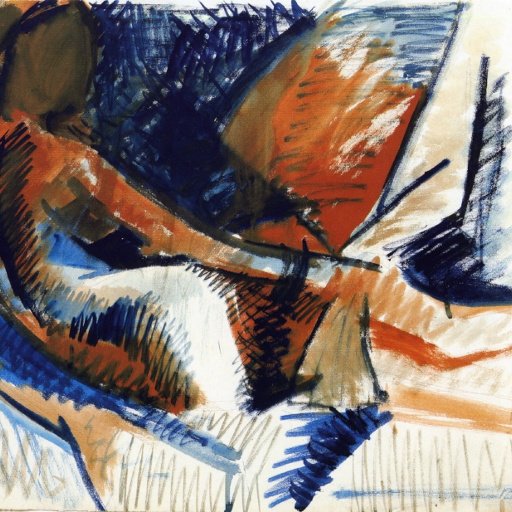

Here's what those features look like. (cw: 2fps strobe)

- color detectors

- edge detectors

- pattern detectors

Then the convolutional network (convnet) builds higher-level feature detectors on top of those low-level features. The next level looks like this:

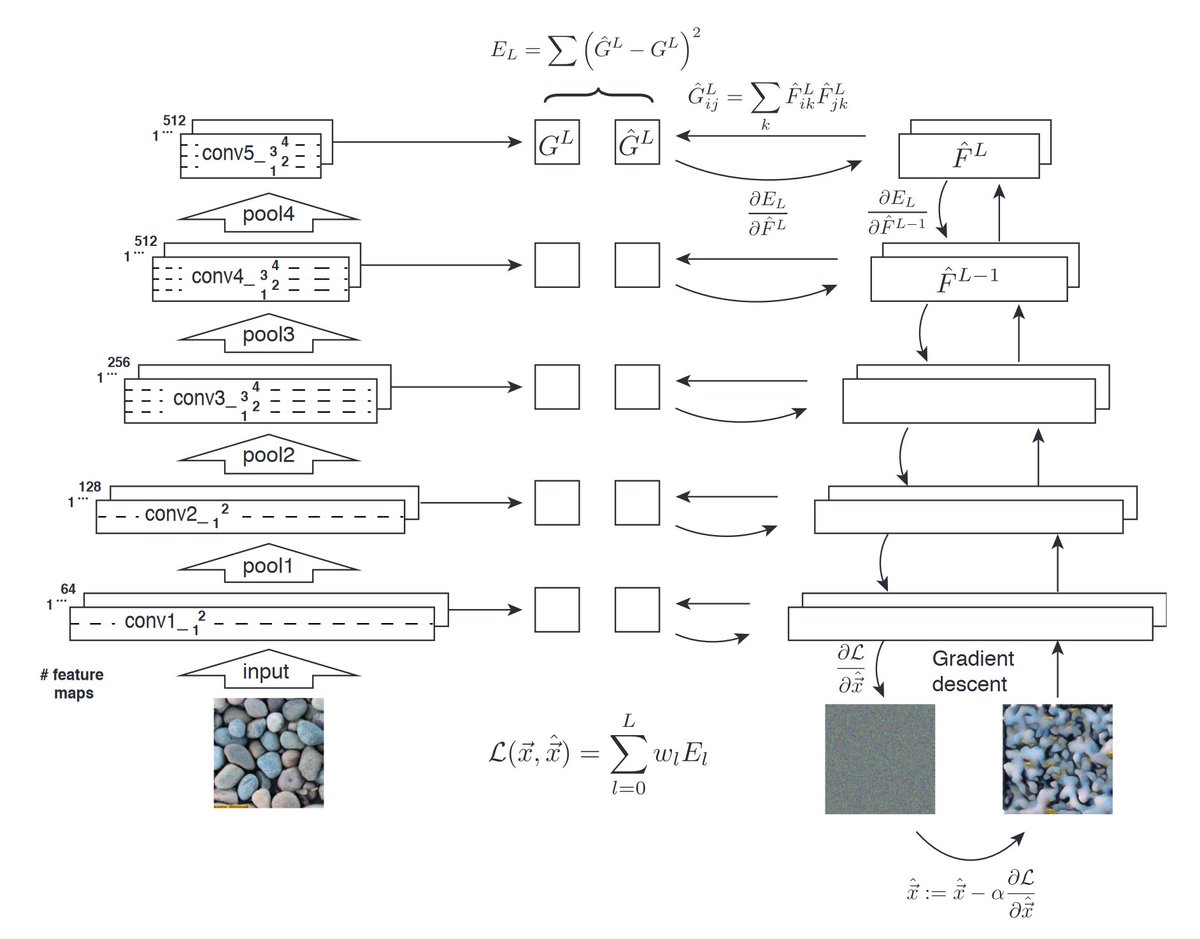

At each level the feature maps are half as wide and half as tall, while the number of features doubles:

(level, features, size)

L1 → 64 @ 256x256

L2 → 128 @ 128x128

L3 → 256 @ 64x64

L4 → 512 @ 32x32
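✏️ To get a feel for the numbers, here is a minimal sketch that reproduces the table above. The function name `feature_map_sizes` and its parameters are my own for illustration, and it assumes a 256x256 input with a clean "halve the size, double the features" rule, which only holds for the four levels listed here.

```python
def feature_map_sizes(input_size=256, base_features=64, levels=4):
    """Return (level, features, size) tuples for a VGG-like hierarchy.

    Assumption: each level halves the width/height and doubles the
    number of features, as in the four levels listed in the thread.
    """
    rows = []
    for level in range(1, levels + 1):
        scale = 2 ** (level - 1)
        rows.append((level, base_features * scale, input_size // scale))
    return rows


for level, features, size in feature_map_sizes():
    # Total number of activations stored at this level.
    total = features * size * size
    print(f"L{level} -> {features} @ {size}x{size} ({total:,} values)")
```

Note that the total number of values halves at each level, since the spatial area shrinks 4x while the feature count only grows 2x.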

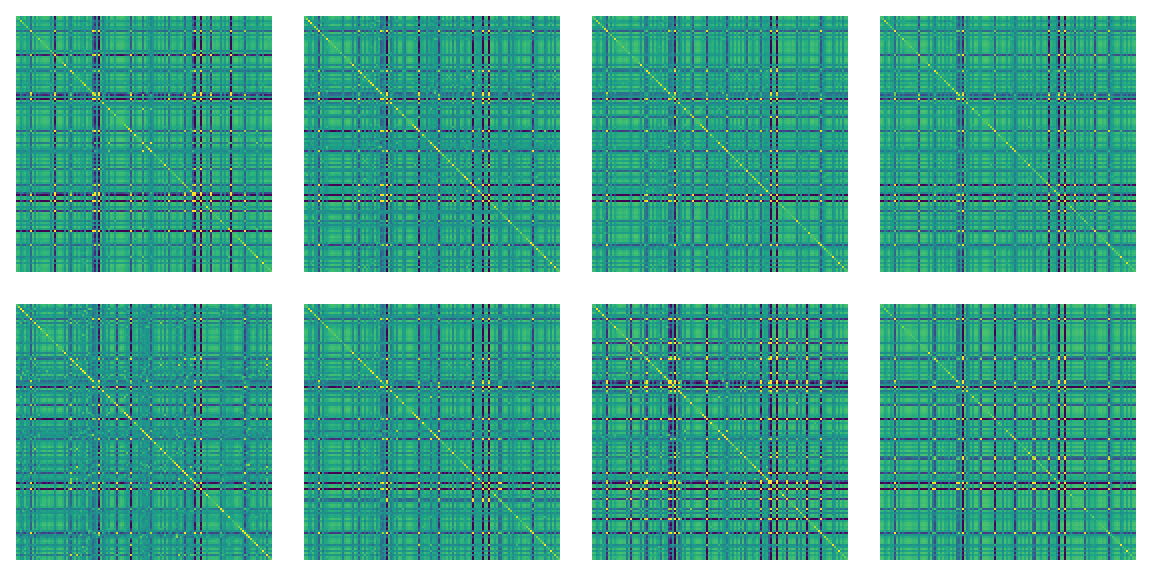

Here are 512 tiny feature maps at level 4 of the hierarchy:

PyTorch, for example, has pretrained networks (the VGG family) that are suitable:

pytorch.org/hub/pytorch_vi…

🛠️ I created a repository to make it easier to access these feature maps. If you're interested, I can share my own visualization scripts: github.com/photogeniq/ima…

You can use these feature maps to reproduce the original image, but that's not a good texture model because it's "over-parameterized", i.e. it has too many constraints.

📺 (I never visualized this before, it's pretty cool ;-)

✏️ Try an open-source implementation and tune the weights for each layer!

- What are suitable convnets?

- How best to compute the Gram matrix?

- Which optimizer works fastest?
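💡 As a starting point for the Gram matrix question, here is a minimal NumPy sketch. It assumes a single feature map of shape (channels, height, width) filled with random values in place of real convnet activations. The helper name `gram_matrix` and the division by the number of positions are my own choices for illustration, not necessarily what a given implementation does.

```python
import numpy as np


def gram_matrix(features):
    """Correlations between channels of a (C, H, W) feature map.

    Assumption: normalized by the number of spatial positions so the
    result does not scale with image size. Other conventions exist.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per channel
    return flat @ flat.T / (h * w)     # (C, C) matrix


# Random stand-in for real activations (hypothetical data).
rng = np.random.default_rng(0)
fmap = rng.standard_normal((8, 16, 16))
gram = gram_matrix(fmap)

# Shifting the map spatially (with wrap-around) leaves the Gram matrix
# unchanged: it records which features co-occur, not where.
shifted = np.roll(fmap, shift=(3, 5), axis=(1, 2))
print(gram.shape, np.allclose(gram, gram_matrix(shifted)))
```

The point of the sketch is that the Gram matrix throws away spatial layout, which is what makes it a much less constrained description than the raw feature maps.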

But this thread is already pretty long so I'll keep those discussions for reviews of downstream papers!