An illustrated review & tutorial in a thread! 👇

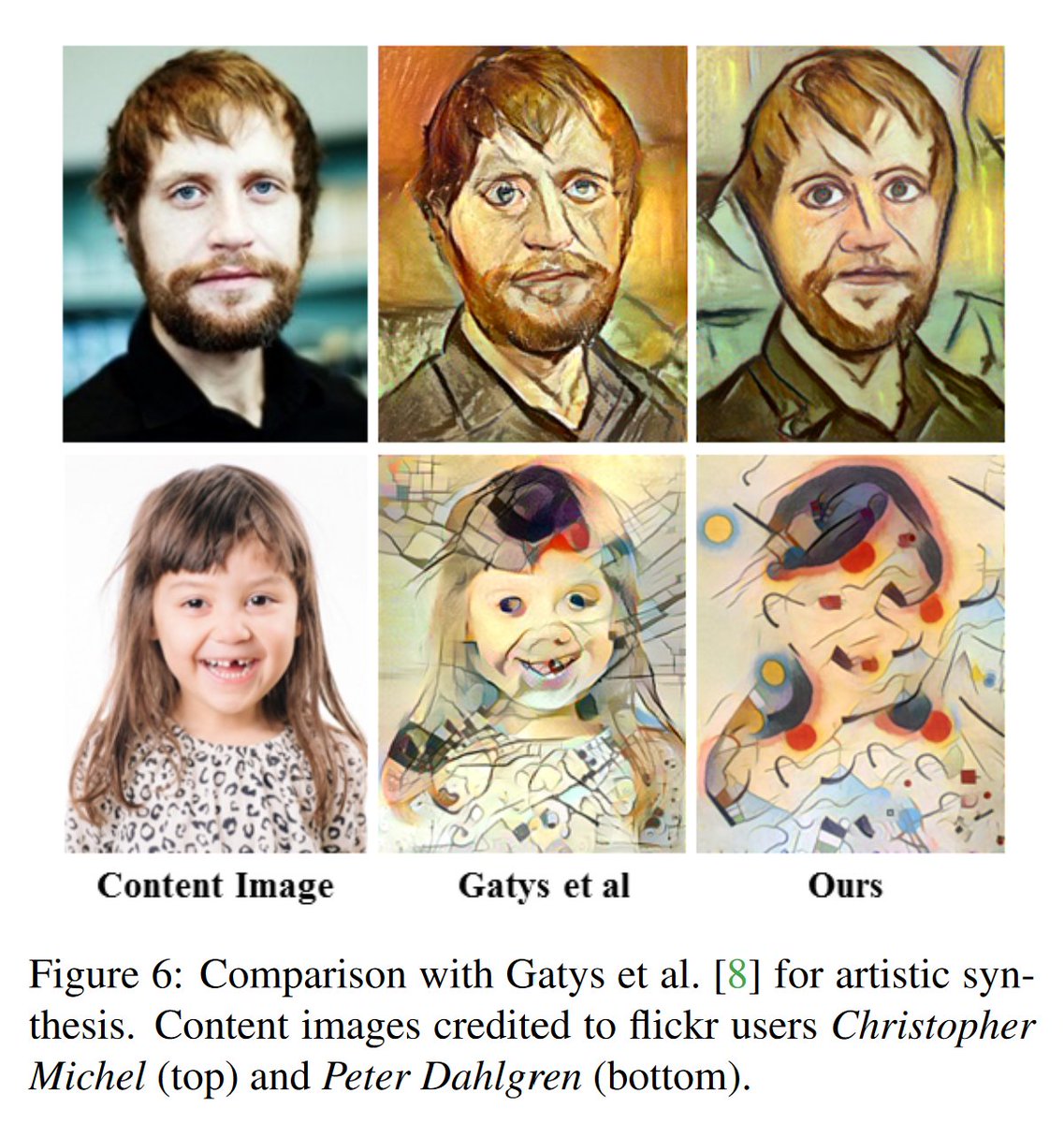

📝 Combining Markov Random Fields & Convolutional Neural Networks for Image Synthesis

🔗 arxiv.org/abs/1601.04589 #ai

✏️ Beginner-friendly insight or exercise.

🕳️ Related work that's relevant here!

📖 Open research topic of general interest.

💡 Idea or experiment to explore further...

See this thread for context and other reviews:

✏️ As far as deep learning frameworks go, this code (matmult) "should" be the simplest to write.

It looks like this:

(This is an old idea in texture synthesis, and you can still find it almost everywhere now.)
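✏️ A minimal sketch of the idea in plain Python, assuming patches have already been extracted from the feature maps and flattened into vectors. Each synthesized patch is matched to the style patch with the highest normalized cross-correlation, and the per-patch scores come out of a single matrix product. Then the squared distances to those matches are summed into an MRF-style loss. The helper names (`normalize`, `matmul`, `nearest_patches`, `mrf_loss`) and the toy patches are hypothetical, not the paper's code:

```python
import math

def normalize(v):
    """Scale a flat patch vector to unit L2 norm (zero vectors are left as-is)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)

def matmul(a, b_rows):
    """Compute a @ b^T, where both arguments are lists of row vectors (one row per patch)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in b_rows] for row in a]

def nearest_patches(synth, style):
    """For each synthesized patch, return the index of the style patch
    with the highest normalized cross-correlation."""
    scores = matmul([normalize(p) for p in synth], [normalize(q) for q in style])
    return [max(range(len(row)), key=row.__getitem__) for row in scores]

def mrf_loss(synth, style):
    """Sum of squared distances between each synthesized patch and its matched style patch."""
    nn = nearest_patches(synth, style)
    return sum(
        sum((x - y) ** 2 for x, y in zip(p, style[j]))
        for p, j in zip(synth, nn)
    )

# Hypothetical toy patches (2 values each) standing in for flattened feature-map patches.
style = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
synth = [[2.0, 0.1], [0.5, 0.5]]
print(nearest_patches(synth, style))  # [0, 2]
print(mrf_loss(synth, style))
```

Normalizing the synthesized patches only rescales each row of the score matrix by a positive constant, so it never changes the argmax. Strictly, only the style patches need normalizing for the matching step. In a framework, the same product can also be written as a convolution that uses the normalized style patches as filters.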

This one shows the L-BFGS optimizer blowing up and then recovering. The CUDA implementation of L-BFGS is faster but apparently less reliable (WIP):