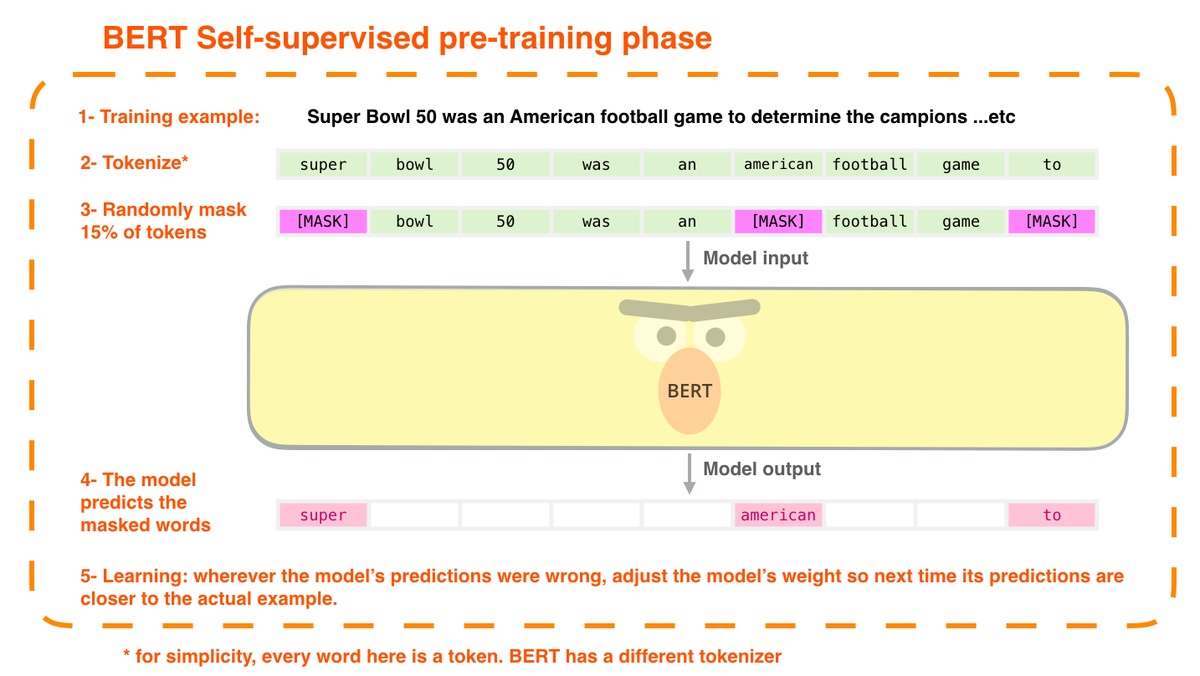

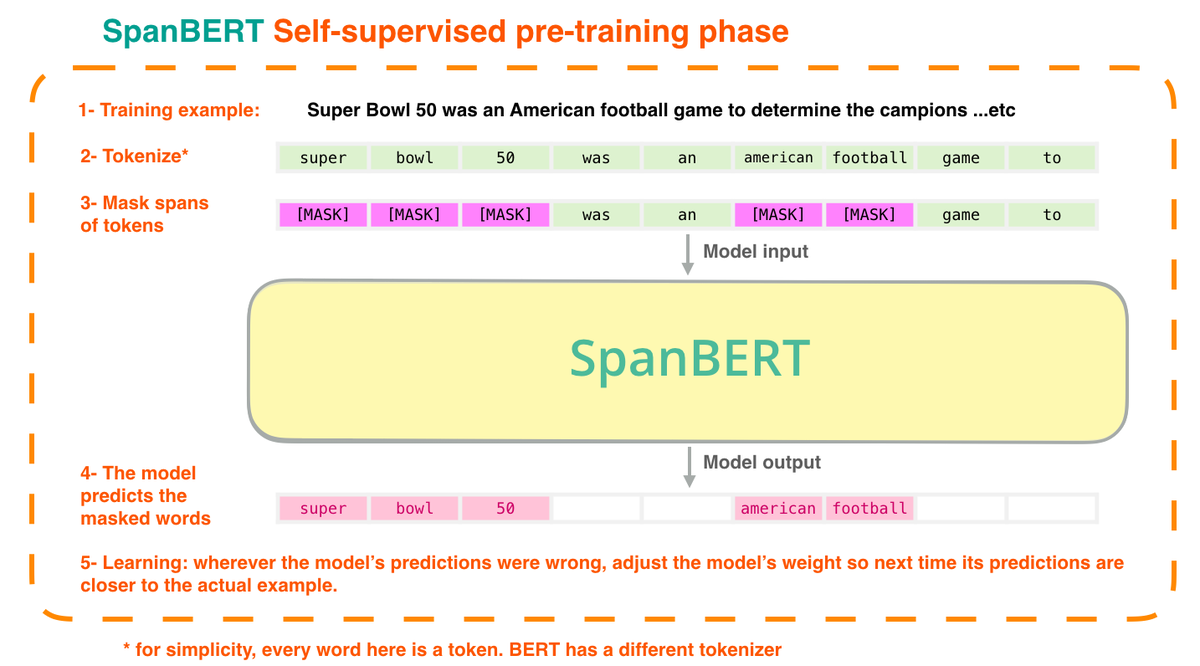

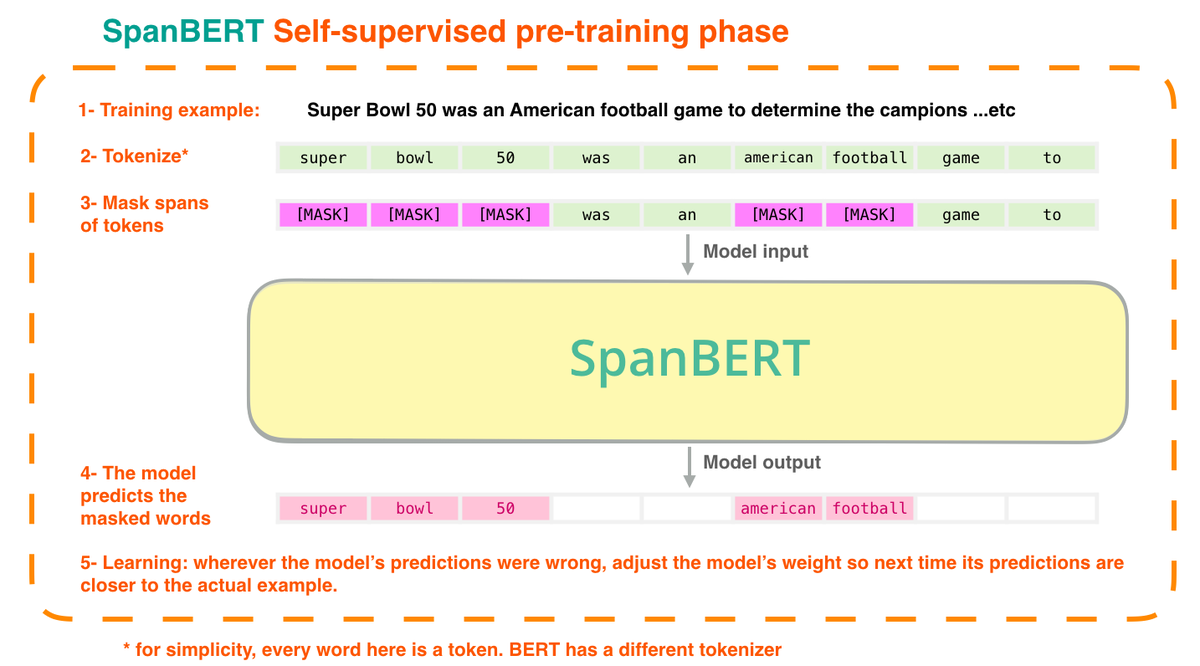

1- SpanBERT

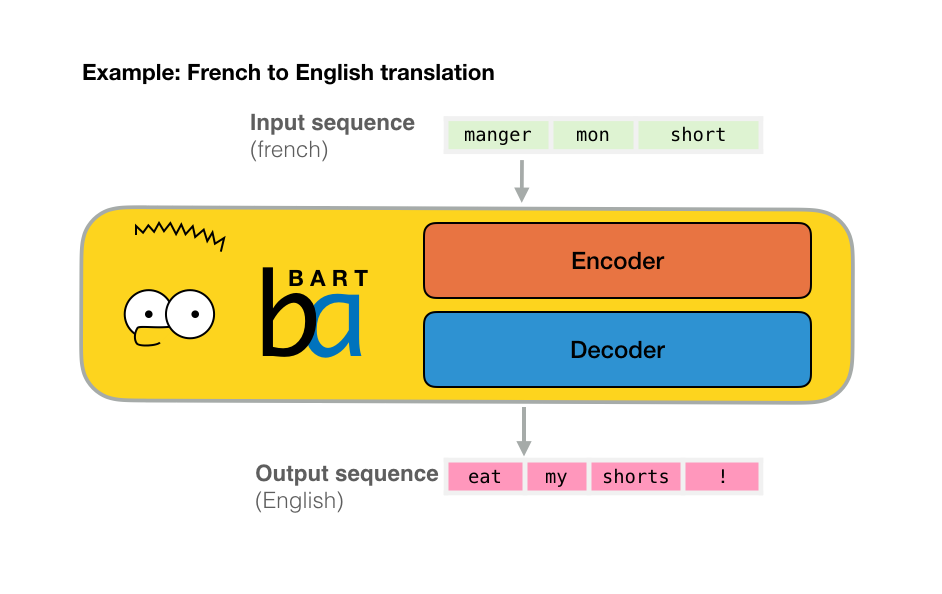

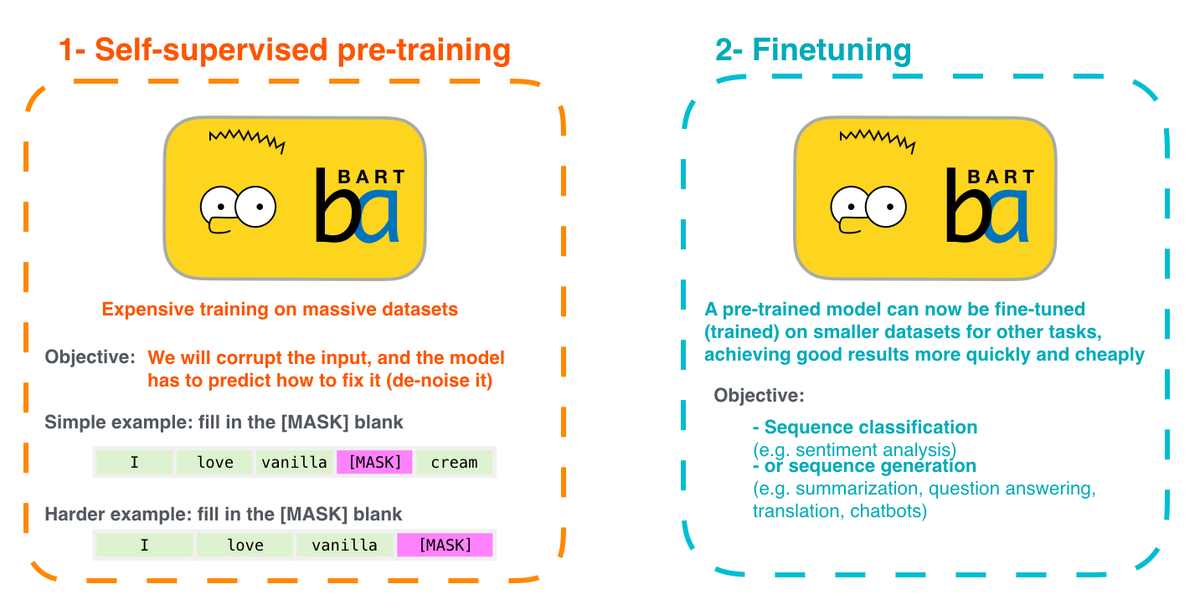

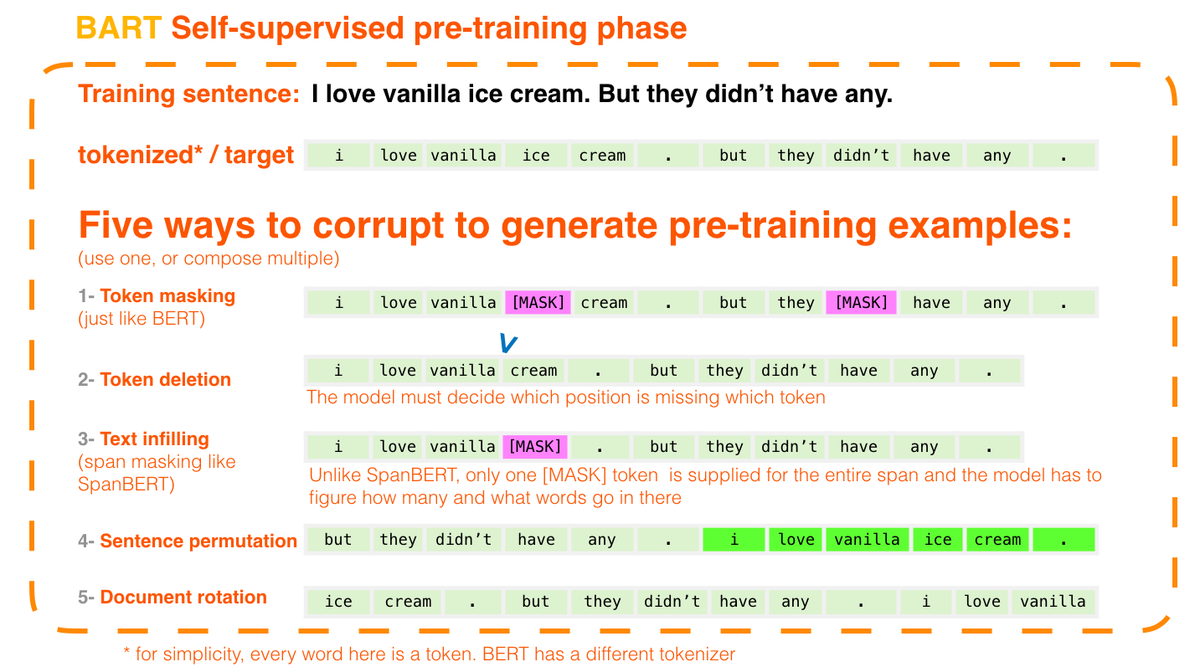

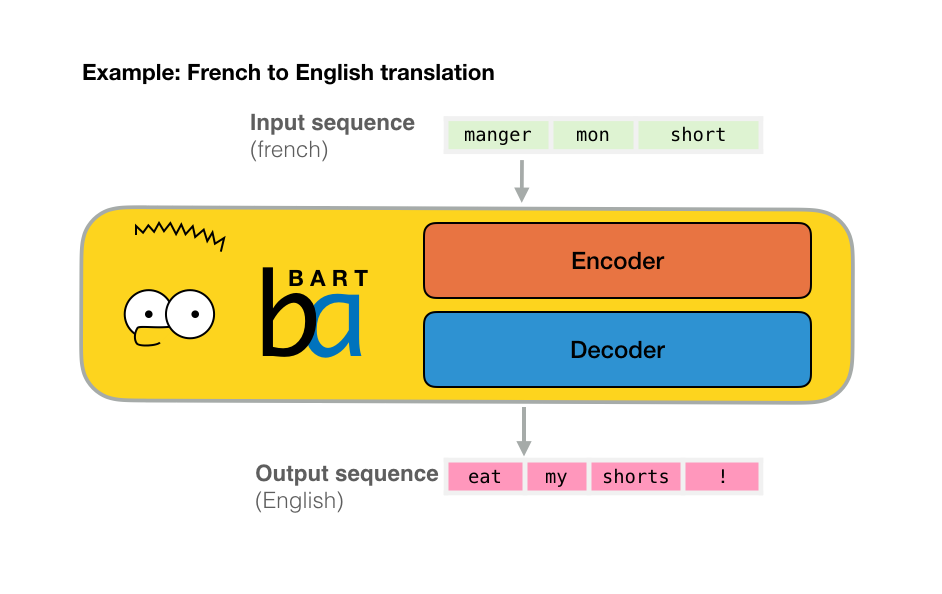

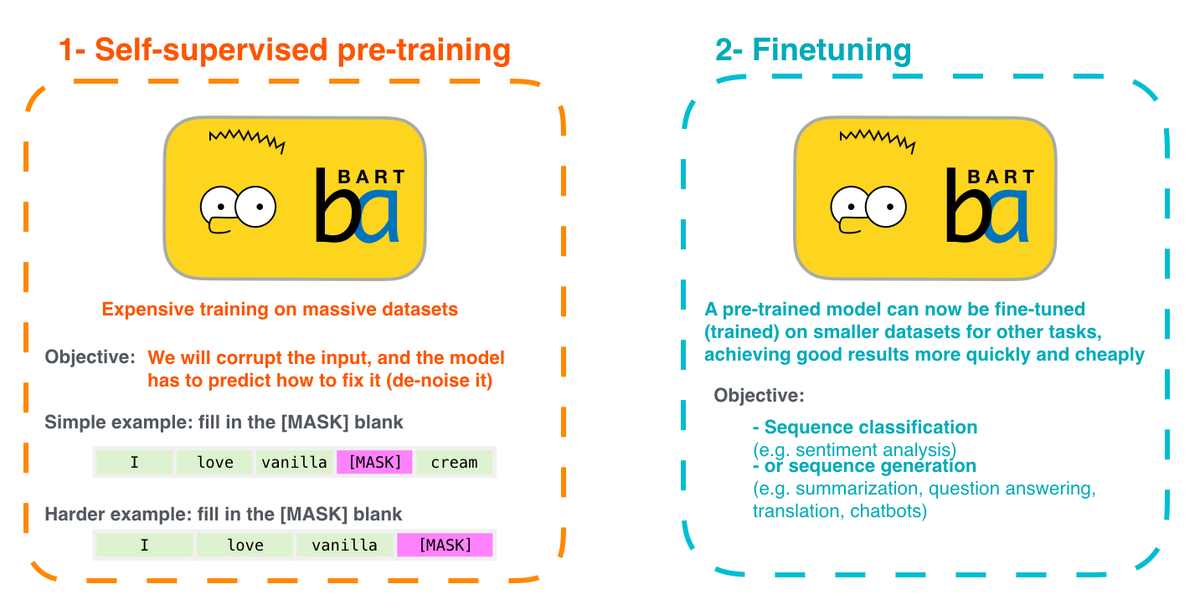

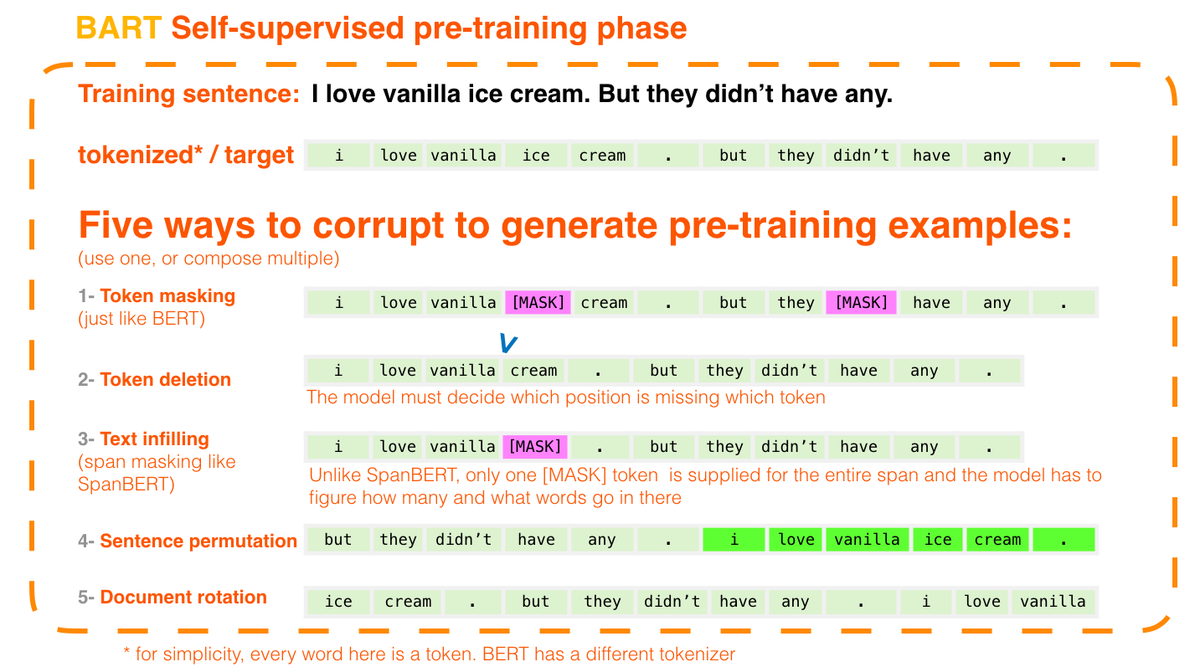

2- BART

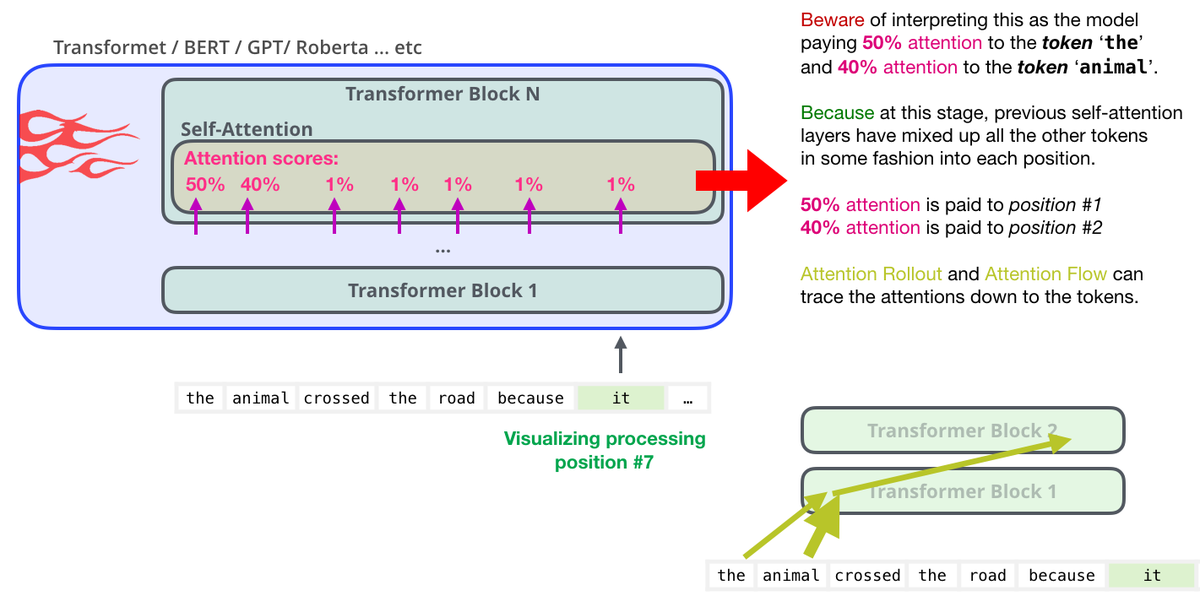

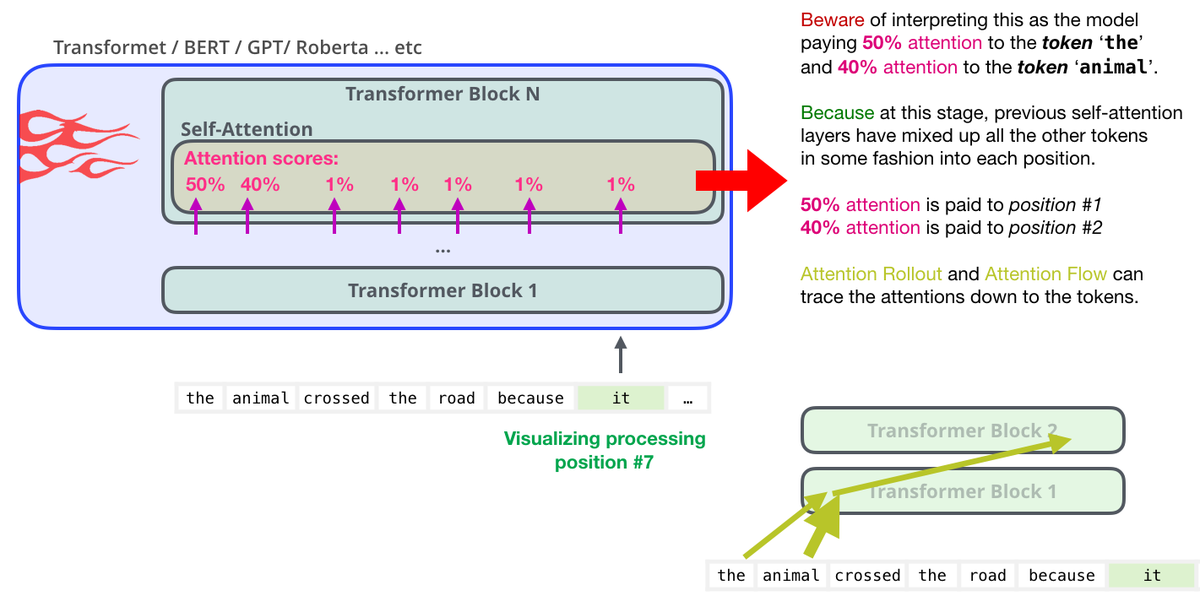

3- Quantifying Attention Flow


aclweb.org/anthology/2020…

#acl2020nlp

mitpressjournals.org/doi/pdf/10.116…

BART paper:

aclweb.org/anthology/2020…

Keep Current with Jay Alammar