Blogpost:

david-salazar.github.io/2020/07/18/cau…

#rstats

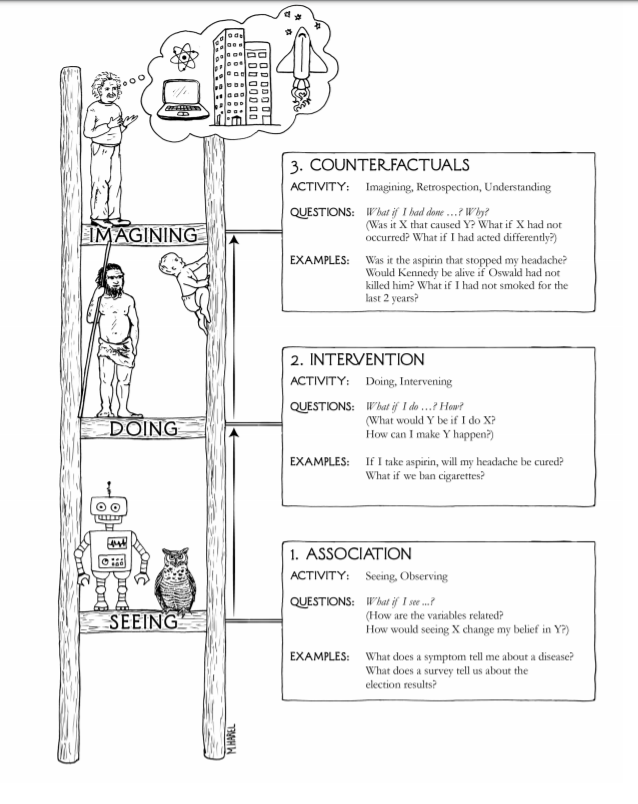

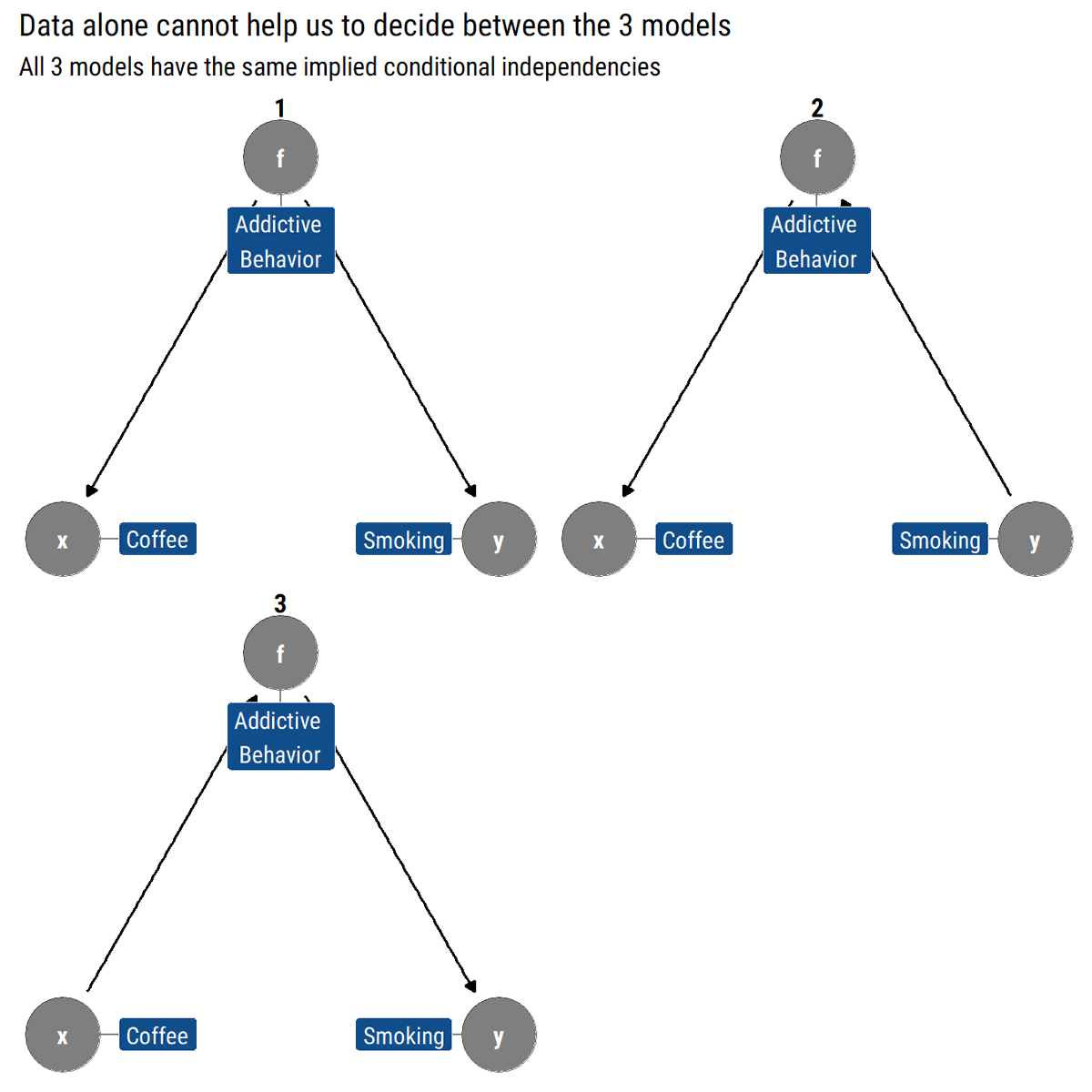

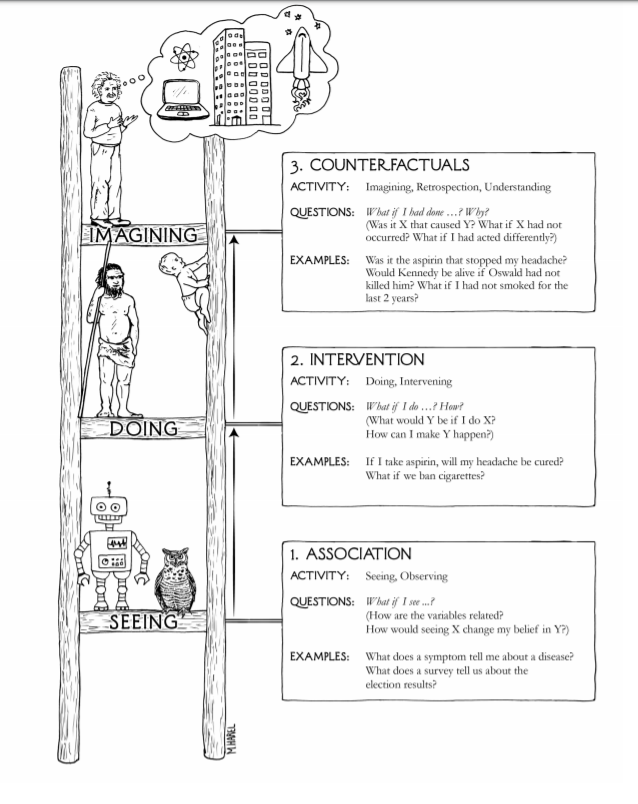

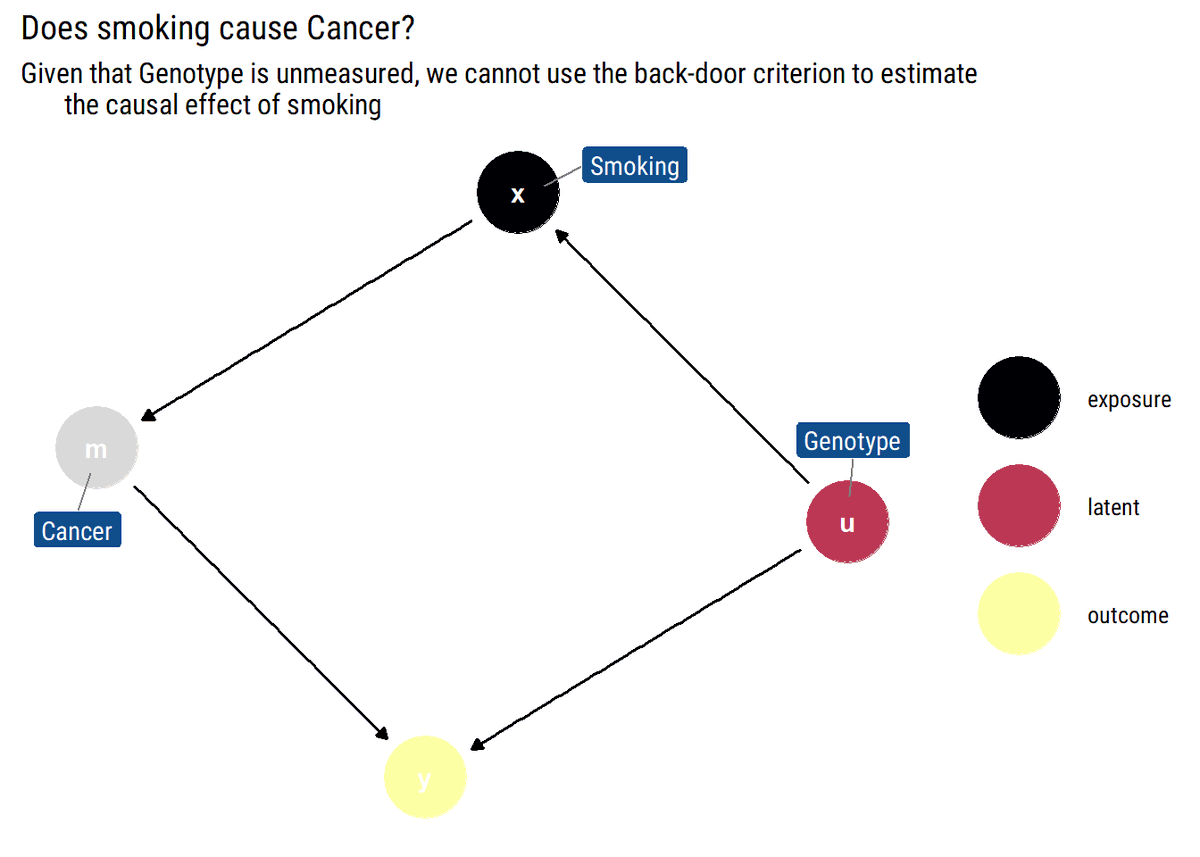

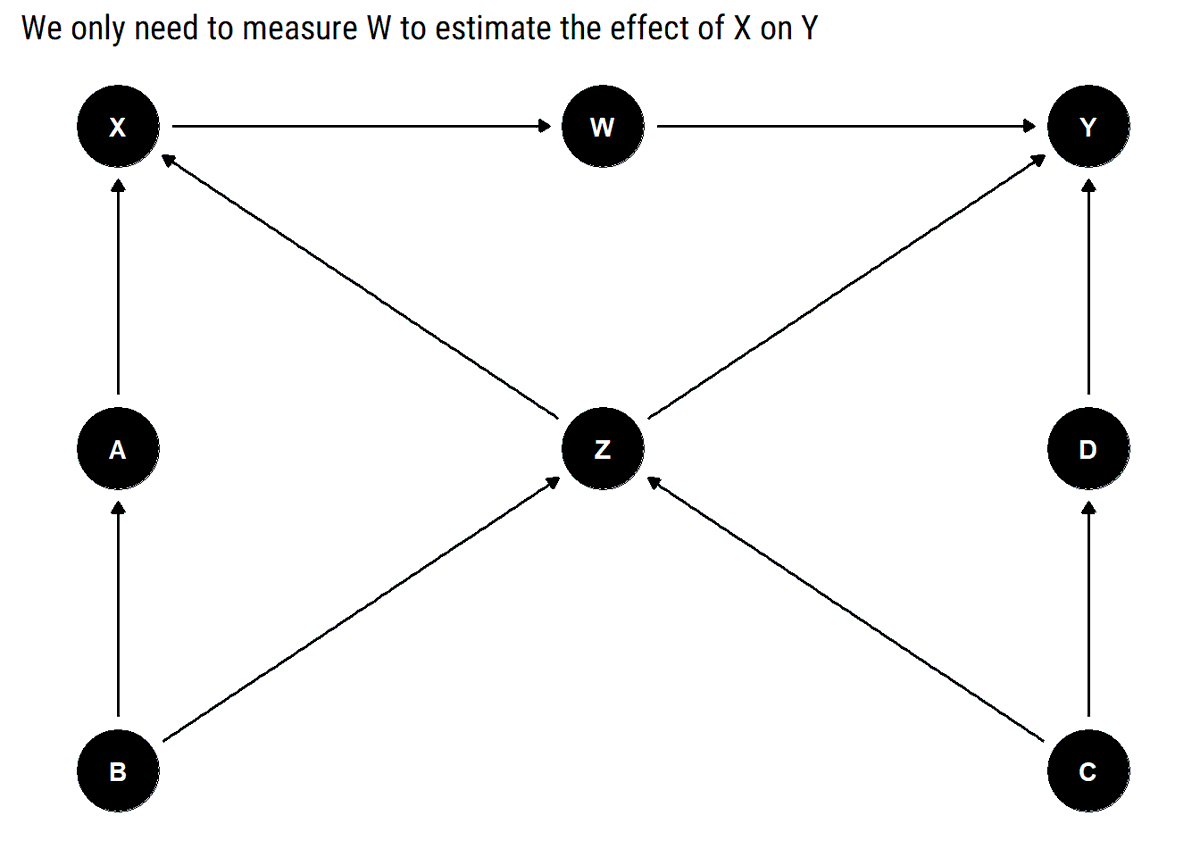

Joint probability distributions are tricky to represent, both in our heads and in our computers. Let's encode our conditional independence assumptions in a graph: conditional on its parents, each node is independent of all other preceding variables.

Such a graph (G) is called a Bayesian network. It yields a recursive decomposition of the joint distribution, with one factor per node given its parents. If a probability distribution (P) admits such a decomposition, we say that G can explain the generation of the data represented by P.
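
Spelled out, and assuming the variables are ordered so that parents come before their children, the decomposition can be written as below. The symbol $pa_i$ is a notation assumed here (not taken from the thread) for the values of the parents of $X_i$ in G:

$$
P(x_1, \dots, x_n) = \prod_{i=1}^{n} P(x_i \mid pa_i)
$$

For a three-node chain X → Z → Y, for instance, this reads $P(x, z, y) = P(x)\,P(z \mid x)\,P(y \mid z)$.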

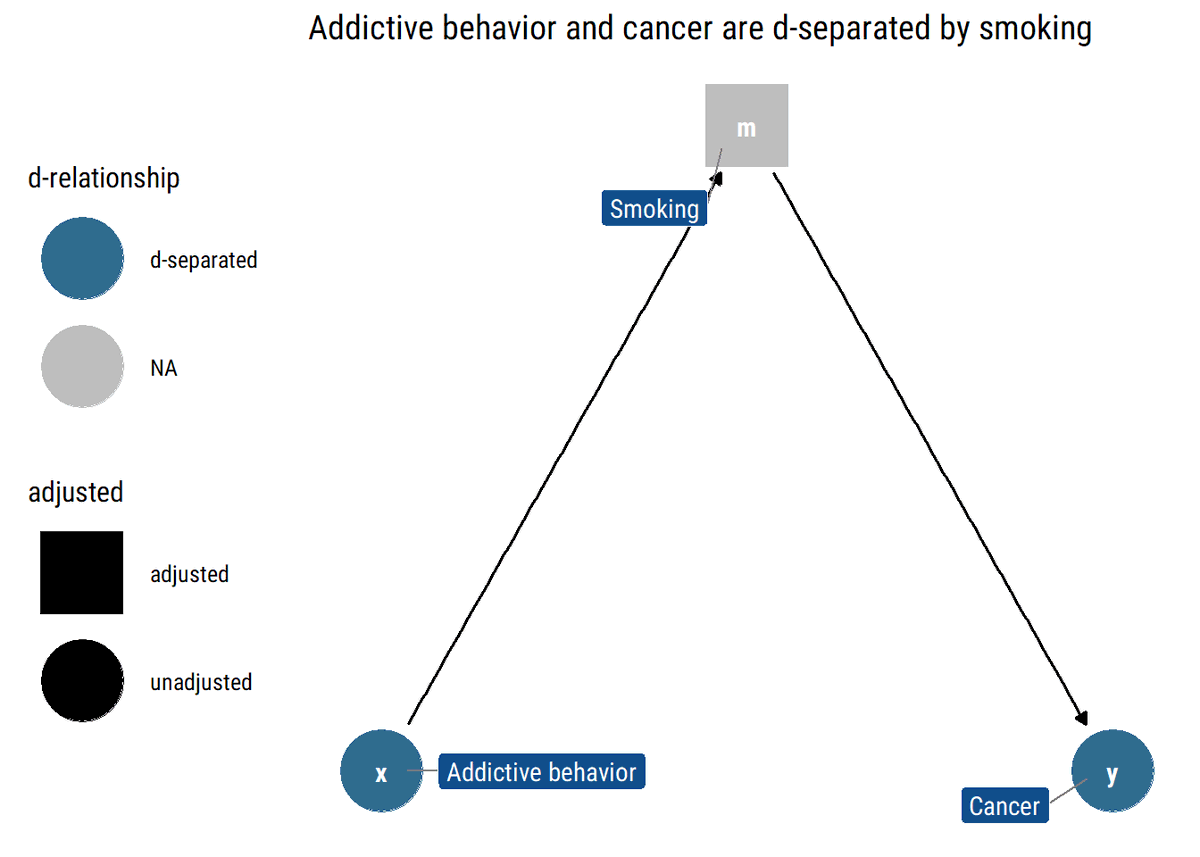

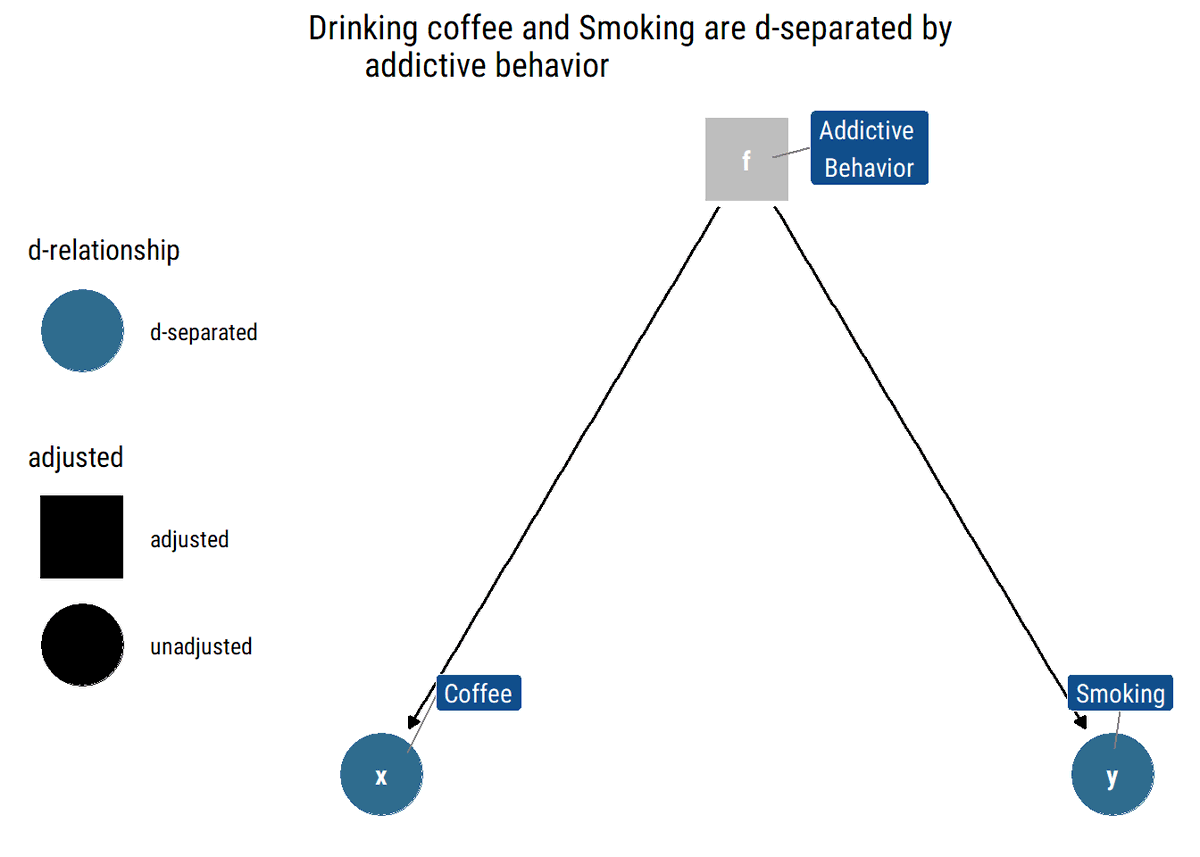

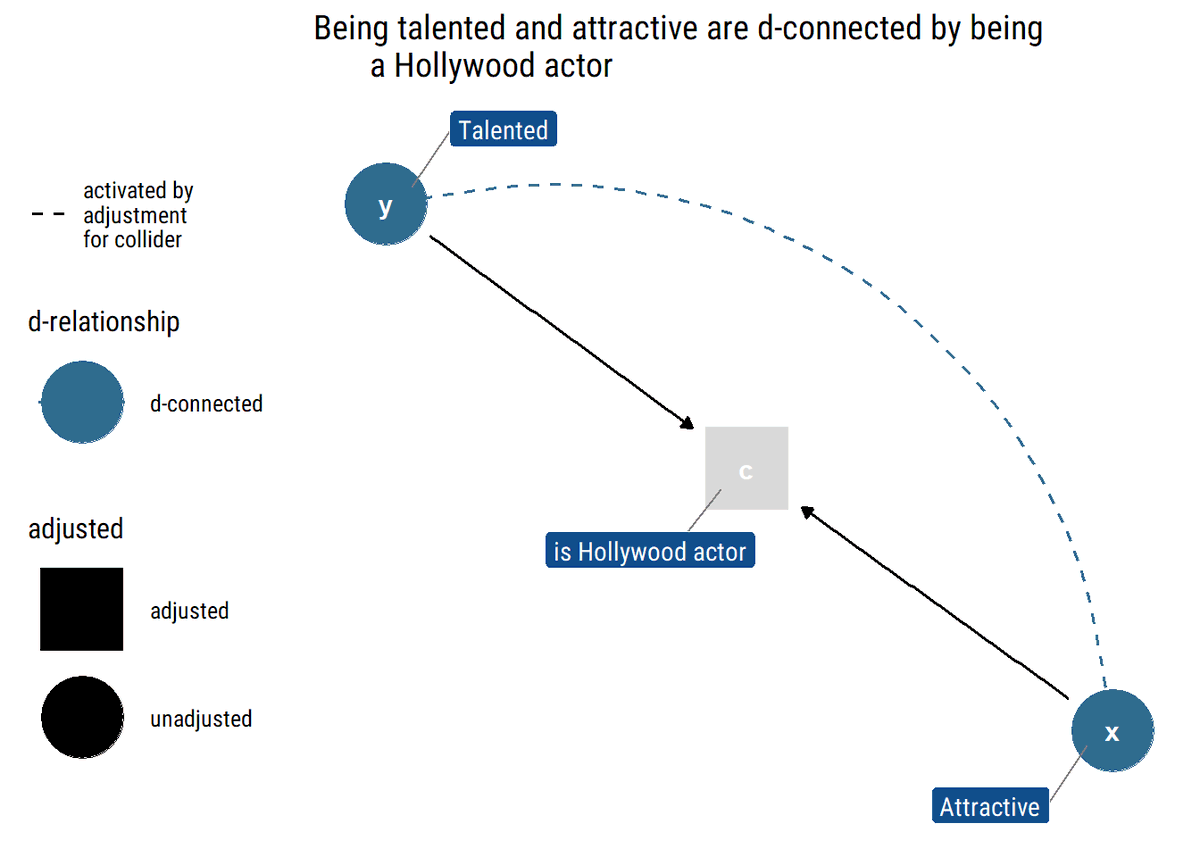

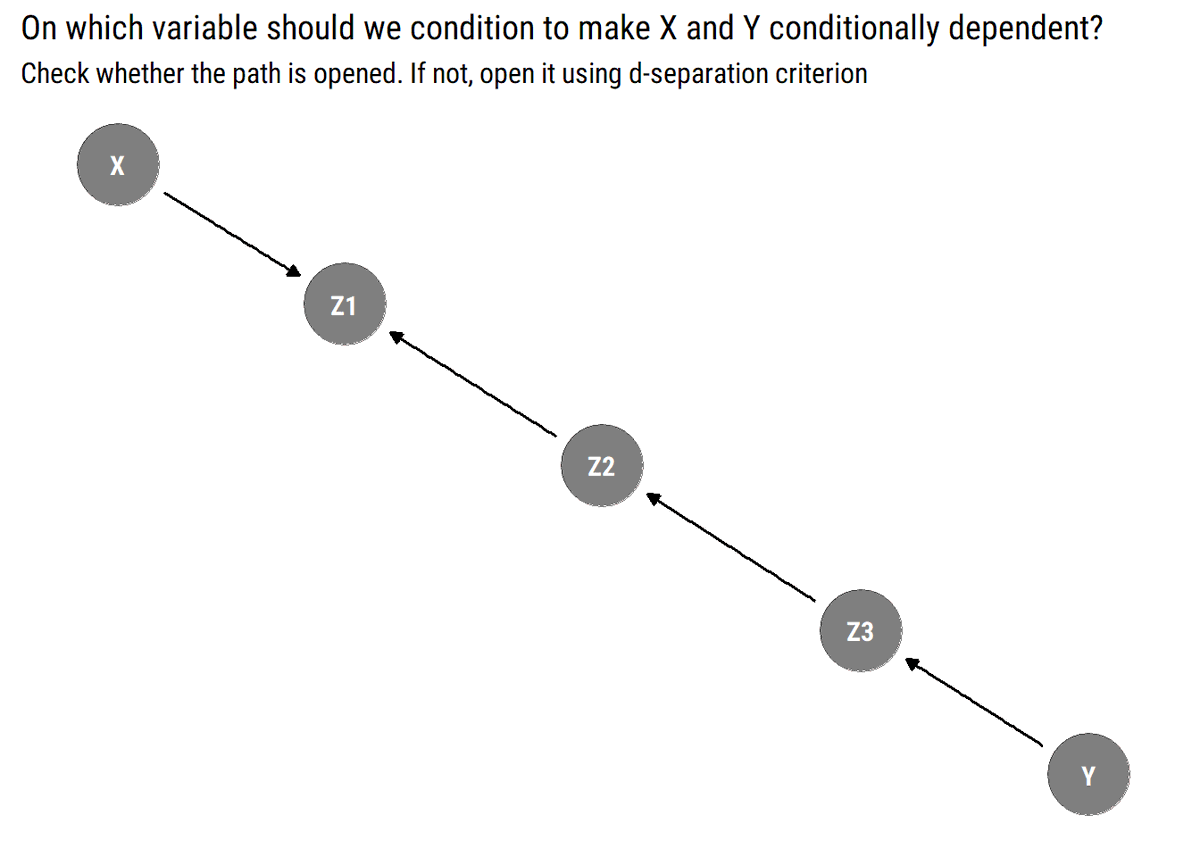

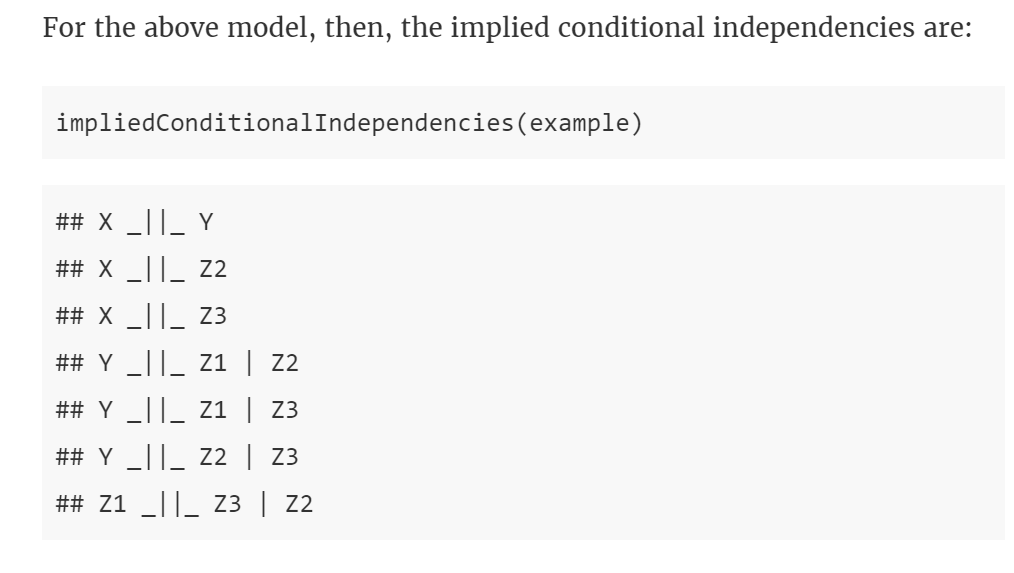

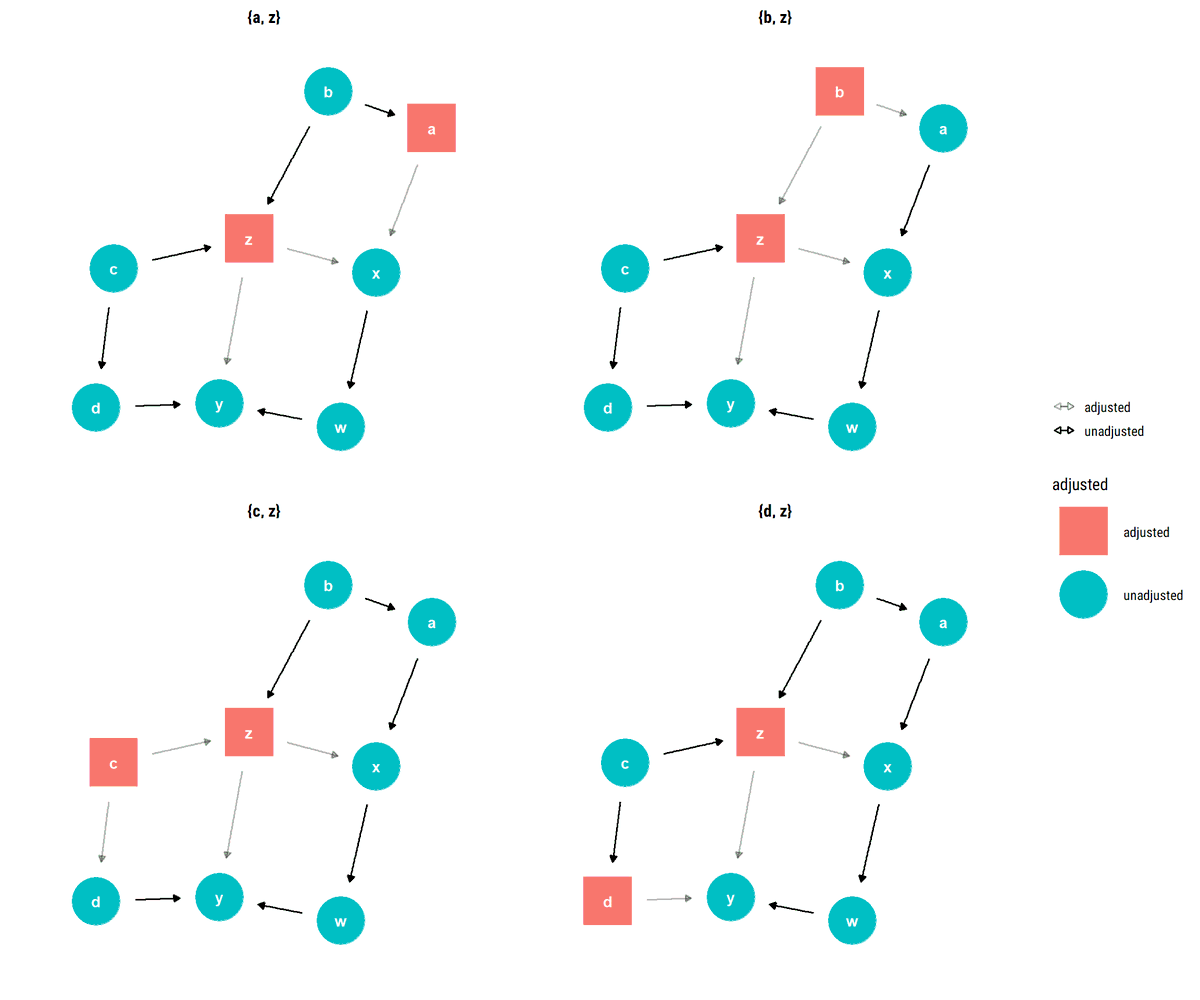

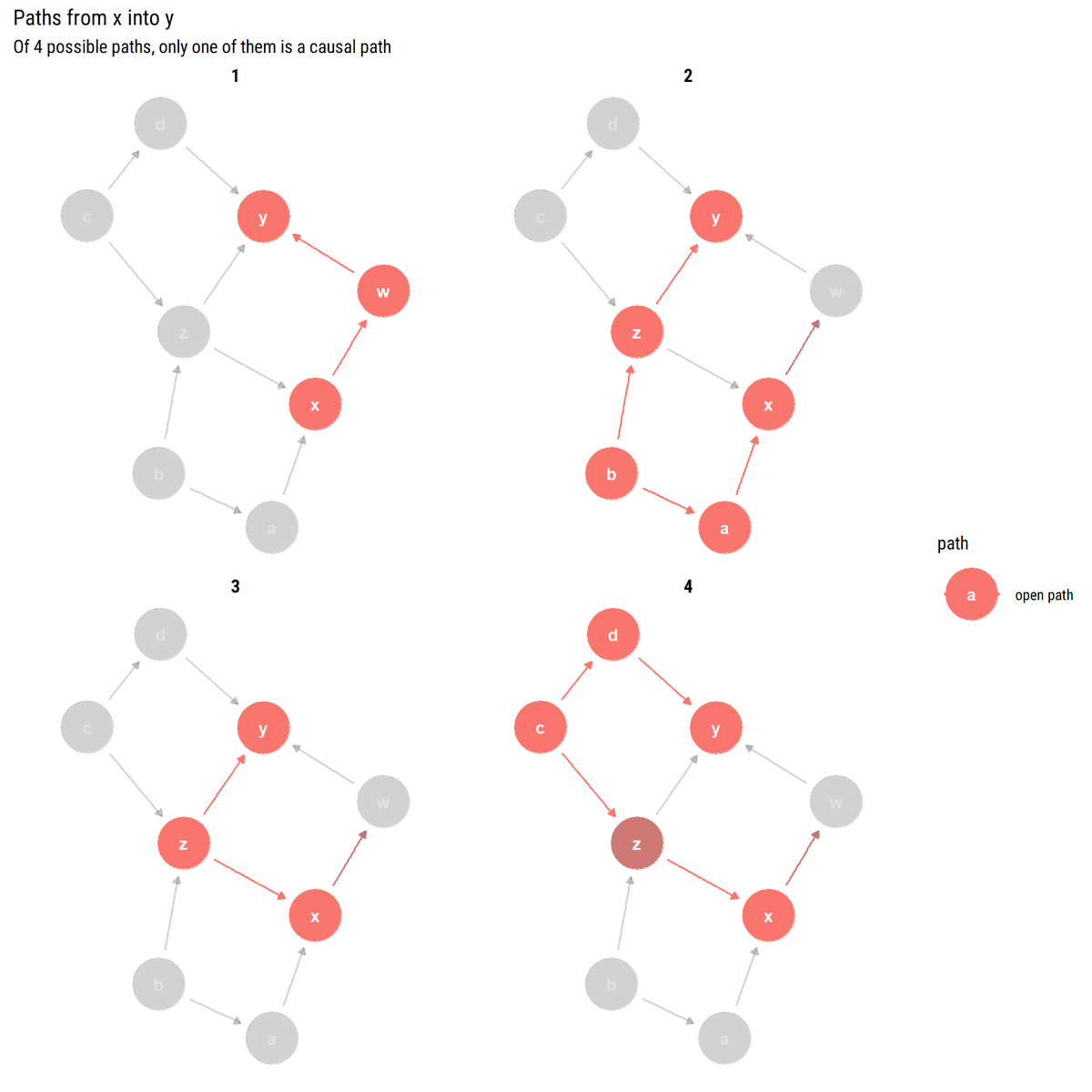

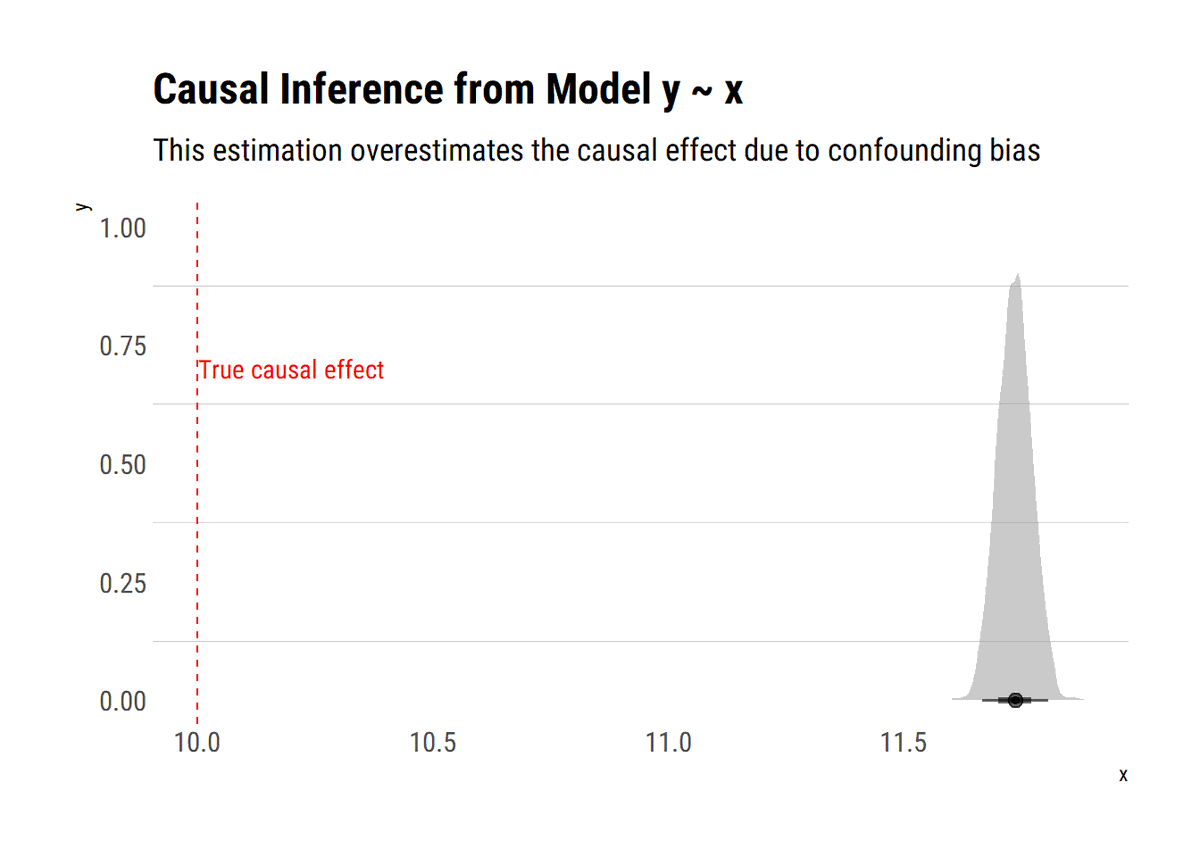

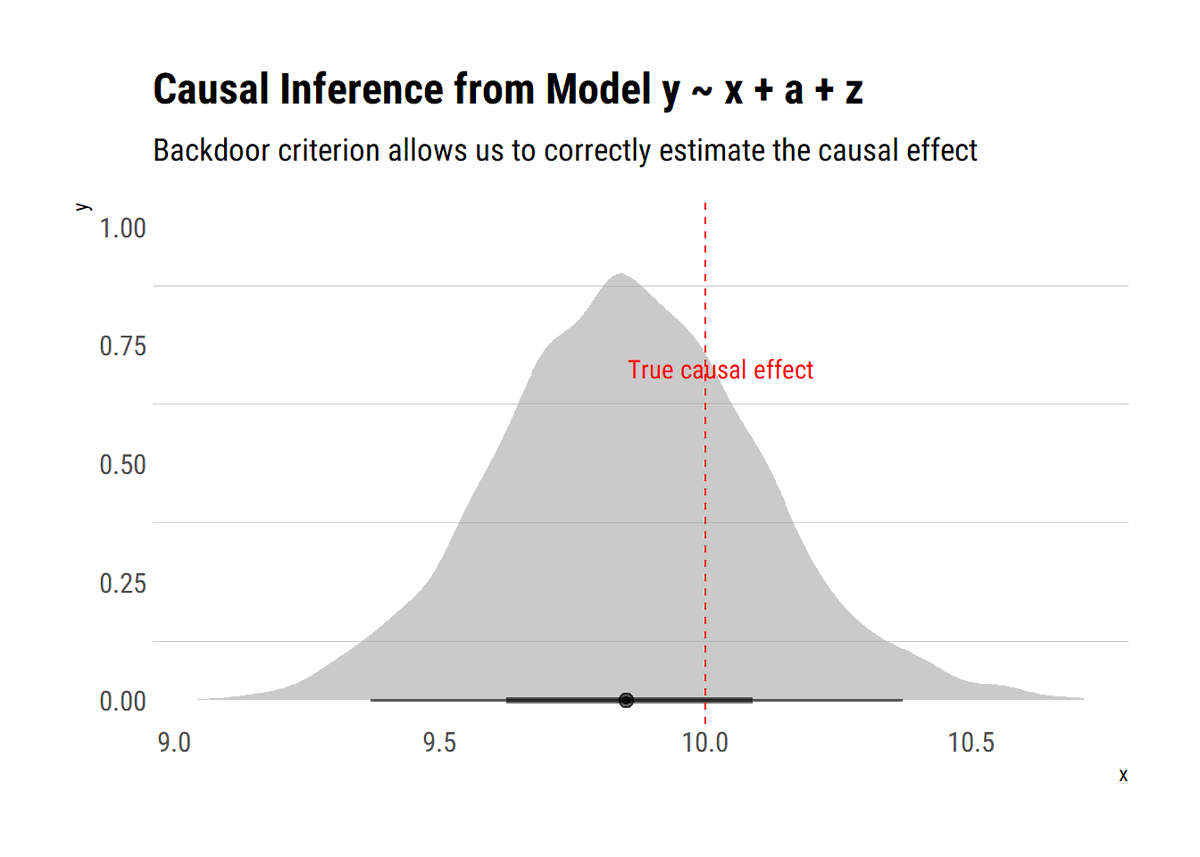

What do we gain? The d-separation criterion: a purely graphical analysis tells us which conditional and marginal independencies should hold in any dataset compatible with our assumptions. X and Y are independent conditional on Z if every path between them is blocked by Z.

What is a blocked path? In a path that contains a chain (i→m→j) or a fork (i←m→j), statistical information flows between the extreme variables as long as we don't adjust for the middle variable. Once we condition on m, the path is blocked, and i and j are d-separated (conditionally independent given m). Why? ⬇️

david-salazar.github.io/2020/07/22/cau…
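
The chain and fork behaviour described above can also be checked with a quick simulation. This is only a sketch under stated assumptions, not the code from the blogpost: the data are linear-Gaussian with unit coefficients (an arbitrary choice), so a partial correlation near zero stands in for conditional independence, and every function name is made up for the illustration.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation between two equally long lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def partial_corr(xs, ys, zs):
    """Correlation between xs and ys after adjusting for zs (first-order formula)."""
    rxy, rxz, ryz = pearson(xs, ys), pearson(xs, zs), pearson(ys, zs)
    return (rxy - rxz * ryz) / sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


def simulate_chain(n, rng):
    """Chain i -> m -> j: each variable is its parent plus independent noise."""
    i = [rng.gauss(0, 1) for _ in range(n)]
    m = [a + rng.gauss(0, 1) for a in i]
    j = [b + rng.gauss(0, 1) for b in m]
    return i, m, j


def simulate_fork(n, rng):
    """Fork i <- m -> j: m is a common cause of i and j."""
    m = [rng.gauss(0, 1) for _ in range(n)]
    i = [b + rng.gauss(0, 1) for b in m]
    j = [b + rng.gauss(0, 1) for b in m]
    return i, m, j


rng = random.Random(42)  # fixed seed so the run is reproducible
chain_i, chain_m, chain_j = simulate_chain(20_000, rng)
fork_i, fork_m, fork_j = simulate_fork(20_000, rng)

# Without adjusting for m, information flows between i and j.
chain_marginal = pearson(chain_i, chain_j)
fork_marginal = pearson(fork_i, fork_j)

# Adjusting for m blocks the path: the remaining association should be near zero.
chain_partial = partial_corr(chain_i, chain_j, chain_m)
fork_partial = partial_corr(fork_i, fork_j, fork_m)

print(f"chain: marginal={chain_marginal:.3f}, given m={chain_partial:.3f}")
print(f"fork:  marginal={fork_marginal:.3f}, given m={fork_partial:.3f}")
```

In both structures the marginal correlation between i and j should come out clearly non-zero, while the correlation after adjusting for m should be close to zero, which is what d-separation by m predicts.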

twitter🧵

blogpost

david-salazar.github.io/2020/07/25/cau…

#rstats

blog:

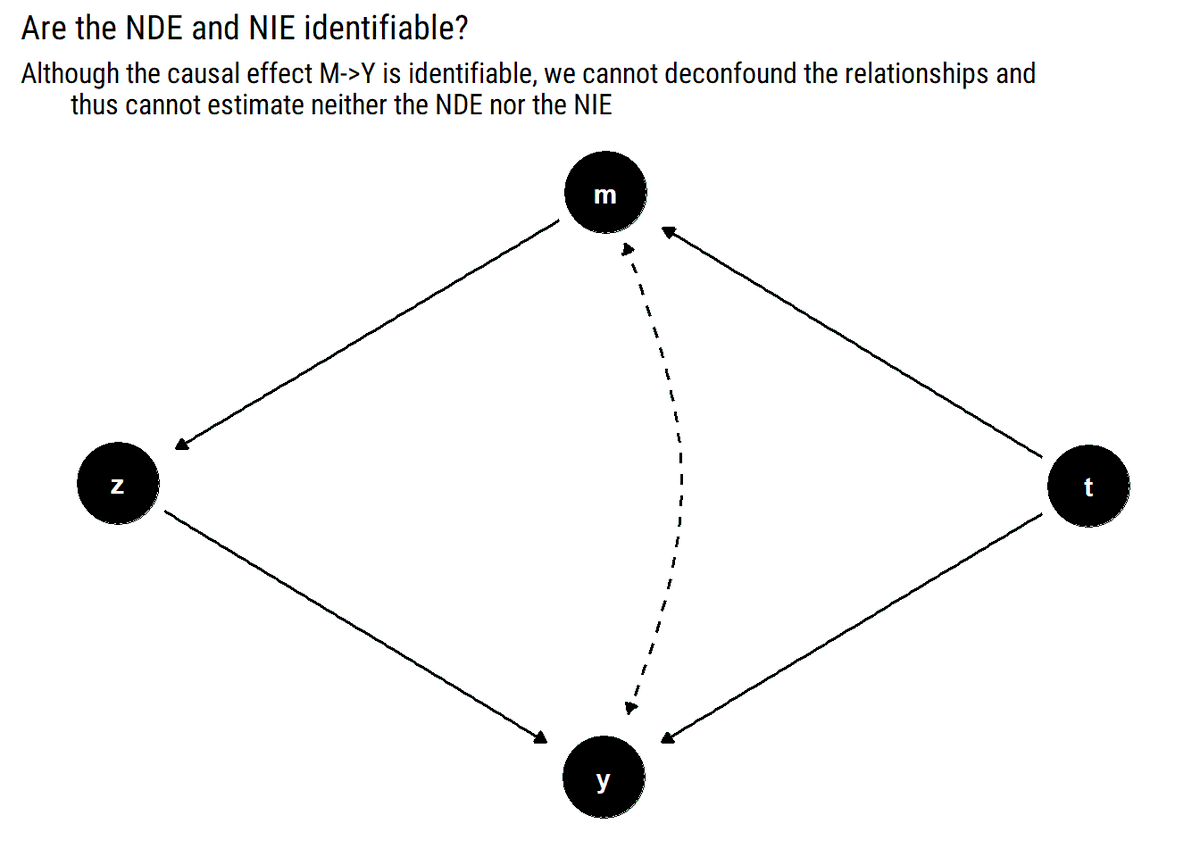

david-salazar.github.io/2020/07/30/cau…

david-salazar.github.io/2020/07/31/cau…

david-salazar.github.io/2020/08/10/cau…

david-salazar.github.io/2020/08/16/cau…

Blogpost:

david-salazar.github.io/2020/08/20/cau…

#rstats

david-salazar.github.io/2020/08/26/cau…