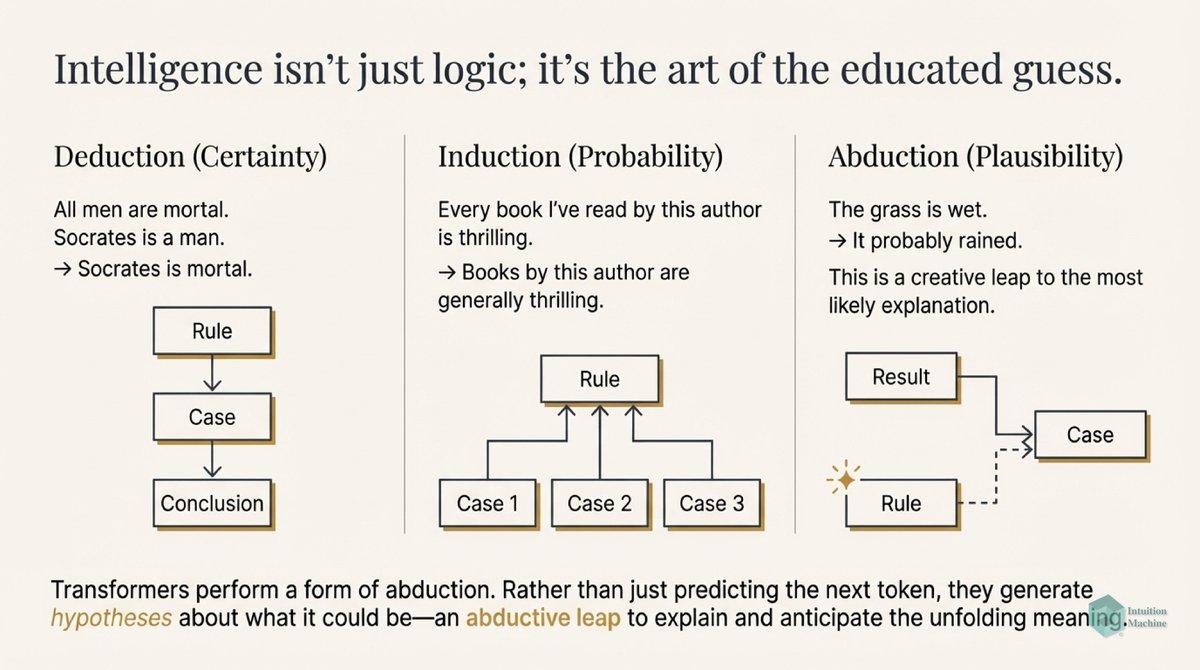

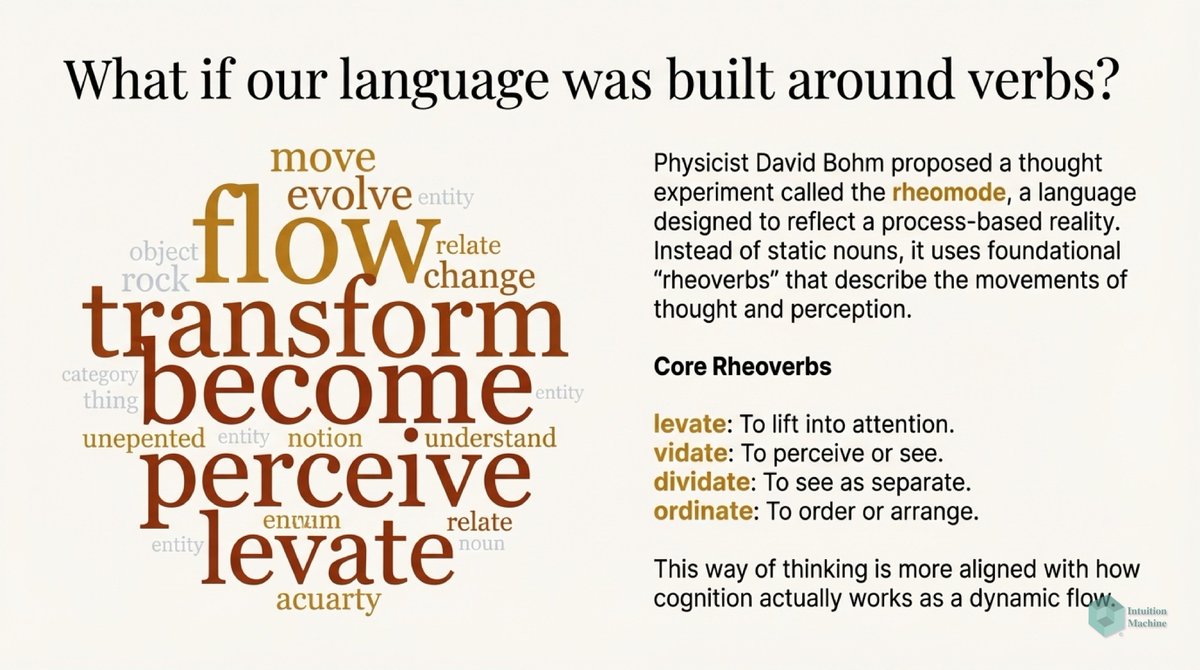

Meaning-making is all about discovering useful sign (see: Peirce) rewrite rules. #ai

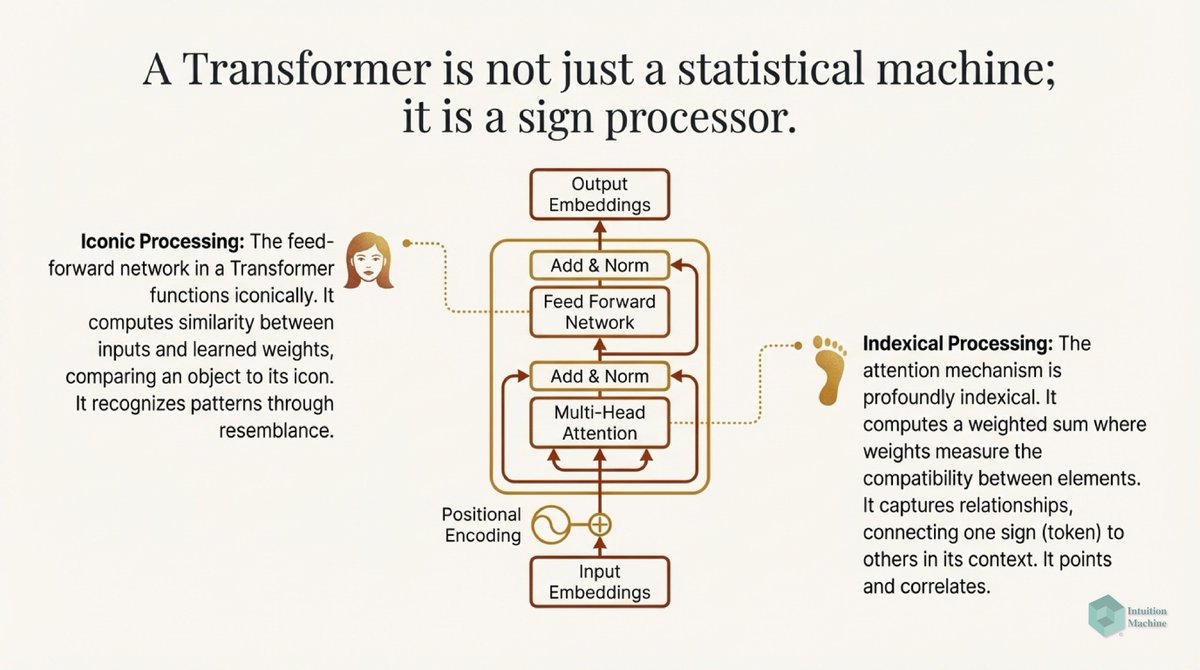

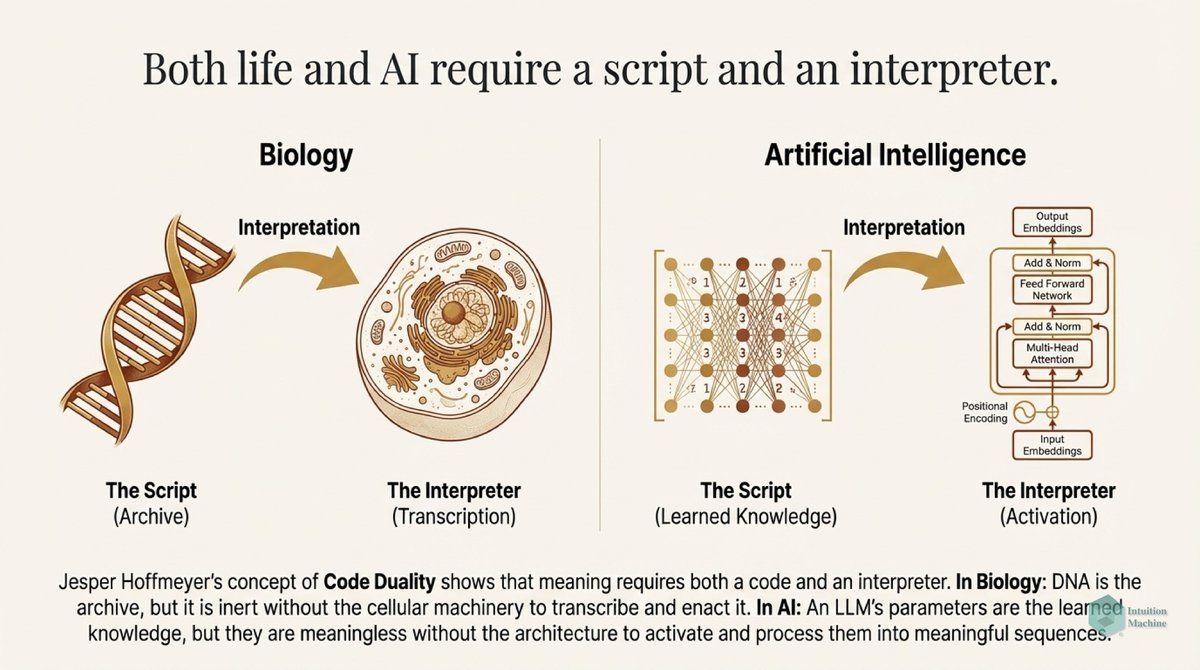

The conventional artificial neuron (i.e., a sum of products of inputs and weights) is a rewrite rule from a vector to a scalar. Each layer is a rewrite rule from one vector to another.
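To make the vector-to-scalar and vector-to-vector readings concrete, here is a minimal sketch in plain Python. The names `neuron` and `layer` are illustrative, not from any library, and the nonlinearity and bias that real networks usually add are left out so the code matches the "sum of products" description.

```python
from typing import List

Vector = List[float]

def neuron(weights: Vector, x: Vector) -> float:
    """Rewrite a vector into a scalar: the sum of products of weights and inputs."""
    return sum(w * xi for w, xi in zip(weights, x))

def layer(weight_rows: List[Vector], x: Vector) -> Vector:
    """Rewrite a vector into another vector: one neuron per output component."""
    return [neuron(row, x) for row in weight_rows]

x = [1.0, 2.0, 3.0]
scalar = neuron([0.5, -1.0, 0.5], x)                      # vector -> scalar
vector = layer([[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]], x)     # vector -> vector
```

Here `scalar` is a single number and `vector` has one entry per row of weights, so stacking layers is just composing rewrite rules.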

A transformer is a rewrite rule from a sequence of discrete symbols into vectors and back again into discrete symbols.

Executing program code is just applying rewrite rules that transform high-level code into machine code.

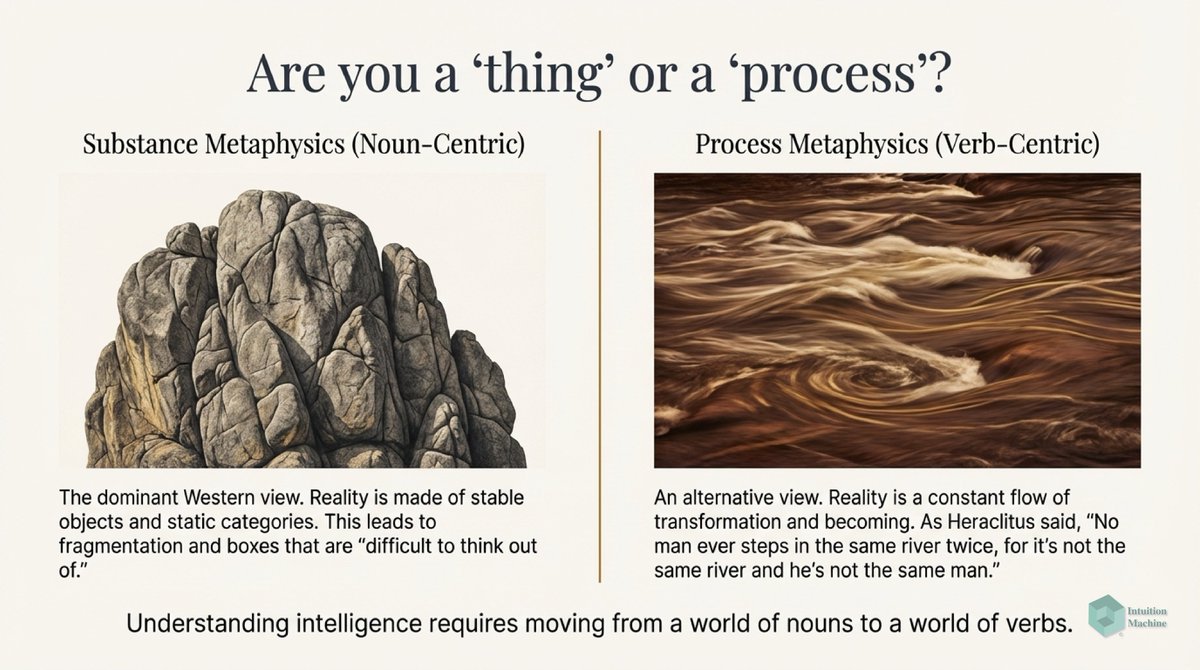

Rewrite rules can do everything: in principle, any computation can be expressed as a rewrite system. The hard problem is discovering these rewrite rules. The even harder problem is formulating a system that discovers them.
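As a small illustration of what a rewrite system looks like when the rules are already known, here is a sketch of a string rewriting loop. The function `rewrite` and the rule set `sort_rules` are hypothetical names chosen for this example. The single rule "ba" → "ab" is enough to sort a string of a's and b's, which hints at how much a well-chosen rule can do.

```python
from typing import List, Tuple

Rule = Tuple[str, str]

def rewrite(term: str, rules: List[Rule], max_steps: int = 1000) -> str:
    """Repeatedly apply the first rule that matches, at its leftmost match,
    until no rule applies (a normal form) or max_steps is exhausted."""
    for _ in range(max_steps):
        for lhs, rhs in rules:
            if lhs in term:
                term = term.replace(lhs, rhs, 1)  # rewrite one occurrence
                break
        else:
            return term  # no rule matched: normal form reached
    raise RuntimeError("no normal form reached within max_steps")

# One rule: swap any out-of-order adjacent pair.
sort_rules = [("ba", "ab")]
result = rewrite("bbaab", sort_rules)  # "aabbb"
```

Writing the loop is the easy part. Finding the rule that produces the behavior you want is the part the thread calls hard.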

Deep Learning networks learn rewrite rules by adjusting weight matrices. No new rules are added; only the relative importance of existing rules is adjusted. Like biology, this involves a differentiation process and not an additive process.

DL networks only work if given sufficient diversity on initialization. Initializing all weights to the same value is a recipe for failure: identical units receive identical updates and never differentiate.
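The need for diversity at initialization can be checked on a toy network. The sketch below is an assumption-laden illustration, not a reference implementation: a two-hidden-unit tanh network without biases, trained by plain SGD on XOR with squared error, with the hypothetical helper `train` doing the backpropagation by hand.

```python
import math
import random
from typing import List, Tuple

def train(w1: List[List[float]], w2: List[float],
          data: List[Tuple[List[float], float]],
          lr: float = 0.1, epochs: int = 200):
    """SGD on y = sum_j w2[j] * tanh(w1[j] . x), loss 0.5 * (y - t)^2."""
    for _ in range(epochs):
        for x, t in data:
            h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
            y = sum(v * hj for v, hj in zip(w2, h))
            dy = y - t
            for j, row in enumerate(w1):
                dz = dy * w2[j] * (1.0 - h[j] ** 2)  # uses w2[j] before its update
                w2[j] -= lr * dy * h[j]
                for i in range(len(row)):
                    row[i] -= lr * dz * x[i]
    return w1, w2

xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

# Same value everywhere: both hidden units start identical.
same_w1, same_w2 = train([[0.5, 0.5] for _ in range(2)], [0.5, 0.5], xor)

# Random values: hidden units start different.
rng = random.Random(0)
rand_w1, rand_w2 = train([[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
                         [rng.uniform(-1, 1) for _ in range(2)], xor)

units_stayed_identical = same_w1[0] == same_w1[1]
units_differ_with_random_init = rand_w1[0] != rand_w1[1]
```

With the constant initialization, both hidden units see the same inputs and gradients at every step, so their weights stay equal and the network behaves as if it had a single hidden unit. Random initialization breaks that symmetry, which is what allows the units to differentiate.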
