Proud to announce our newest graph #research #paper! We introduce directional aggregations, generalize convolutional #neuralnetworks to #graphs, and solve bottlenecks in GNNs. 1/5

arxiv.org/abs/2010.02863

Authors: @Saro2000 @vincentmillions @pl219_Cambridge @williamleif @GabriCorso

By using an underlying vector field F, we can define forward/backward directions and extend differential geometry to include directional smoothing and derivatives. By using different directional fields, the GNN aggregators become powerful enough to generalize CNNs. 2/5
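As a rough illustration of directional smoothing and directional derivatives, here is a minimal NumPy sketch. It is not the paper's reference implementation: the function name `directional_aggregators`, the row-wise L1 normalization, and the `eps` constant are assumptions made for this example. The field is stored as a matrix `F` where `F[i, j]` is the component of the field along edge i -> j.

```python
import numpy as np

def directional_aggregators(F, eps=1e-8):
    """Build smoothing and derivative aggregation matrices from an edge field.

    F[i, j] is the field component along edge i -> j (0 where there is no edge).
    The normalization below is an assumption made for this sketch.
    """
    F = np.asarray(F, dtype=float)
    # Normalize each node's outgoing field by its L1 norm.
    F_hat = F / (np.abs(F).sum(axis=1, keepdims=True) + eps)
    # Smoothing: weighted average of neighbours, weighted by |field|.
    B_av = np.abs(F_hat)
    # Derivative: sum_j F_hat[i, j] * (x[j] - x[i]).
    B_dx = F_hat - np.diag(F_hat.sum(axis=1))
    return B_av, B_dx

# Path graph 0 - 1 - 2 with a field pointing from node 0 towards node 2.
F = np.array([[0, 1, 0],
              [-1, 0, 1],
              [0, -1, 0]])
x = np.array([0.0, 1.0, 2.0])  # a signal that grows linearly along the path

B_av, B_dx = directional_aggregators(F)
smoothed = B_av @ x    # directional smoothing of x
derivative = B_dx @ x  # directional derivative of x along the field
```

On this toy path the signal increases by one per step along the field, so the derivative aggregation returns approximately 1 at every node, which is the behaviour one would expect from a finite-difference derivative on a grid.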

We propose using the gradient of the low-frequency eigenvector as the directional vector field to guide the aggregation. We theoretically prove that it reduces both over-smoothing and over-squashing. 3/5

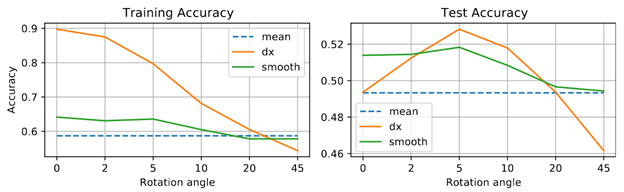
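To make the eigenvector-gradient idea concrete, here is a small NumPy sketch under stated assumptions: "low-frequency eigenvector" is taken to mean the first non-trivial eigenvector of the combinatorial graph Laplacian, the graph is assumed connected and undirected, and the helper name `eigenvector_gradient_field` is hypothetical. The gradient on edge i -> j is taken as the difference of the eigenvector's values at the two endpoints.

```python
import numpy as np

def eigenvector_gradient_field(A):
    """Return (phi, F) for an undirected, connected graph with adjacency A.

    phi is the eigenvector of L = D - A with the second-smallest eigenvalue
    (assumed here to be the "low-frequency eigenvector").
    F[i, j] = A[i, j] * (phi[j] - phi[i]) is its gradient on edge i -> j.
    """
    A = np.asarray(A, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(L)
    phi = eigvecs[:, 1]  # index 0 is the constant eigenvector (eigenvalue 0)
    F = A * (phi[None, :] - phi[:, None])
    return phi, F

# Path graph 0 - 1 - 2 - 3.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]])
phi, F = eigenvector_gradient_field(A)
```

On a path graph the resulting eigenvector varies monotonically from one end to the other, so the field points consistently along the path. The overall sign of `phi` (and therefore of `F`) is arbitrary, since eigenvectors are only defined up to sign.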

For the first time, we introduce data augmentation by rotating the graph kernels, mimicking standard image augmentation, and show empirical improvements in accuracy and reduced overfitting. The derivative aggregation is observed to be very expressive. 4/5

We show very significant improvements over simple aggregations such as mean and sum, and improve the state of the art on all tested datasets. This directional framework can be expanded to include bigger graph kernels without over-smoothing. 5/5
