Chapter 4: Head rotation sensation is a splendid example of dynamic #Bayesian multisensory fusion, since it involves several sensors with different dynamics. These sensors can be put in conflict or switched on/off experimentally. Follow the tour! #vestibular

2/ We have (at least) 3 rotation sensors with different dynamics: the inner ear's canals detect acceleration, vision detects velocity, and graviceptors detect position (when rotating in vertical planes). The brain also relies on a zero-velocity prior. Looks like a job for a #Kalmanfilter!

3/ I will explain (& simulate) motion perception during constant-velocity rotations (starting from zero velocity at t=0). Each sensor can report the motion, report 0, or be switched off altogether. There are experiments in the literature covering nearly all combinations! This will be a long thread!

4/ Experimentally, we have a very nice readout of the brain's central estimate of rotation: the velocity of the stabilizing eye movements that the brain generates, even in complete darkness, to stabilize the eyes in space. Simple, accurate, and it works amazingly well! Watch the movie!

5/ To recapitulate, I will show dynamic motion perception in about a dozen different paradigms, based on data spanning 4 decades of experiments, and modeled using a Kalman filter (I'll upload code) with 4 free parameters! elifesciences.org/articles/28074

6/ Last remark: to make things intuitive, remember that the first few seconds of rotation correspond to ‘high-frequency’ motion (they are representative of brief movements), whereas the steady state corresponds to ‘low-frequency’ motion.

7/ First, the canals. They sense acceleration & integrate it partially: their signal decreases exponentially (tau ~4 s). In theory, the brain could invert their transfer function to compute velocity, but this accumulates noise and results in high uncertainty at low frequencies.
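A minimal Python sketch of this canal model, assuming a first-order high-pass filter with a 4 s time constant and a 50 ms time step (`DT`, `TAU_C`, and the function names are illustrative, not taken from the paper's code). It shows that inverting the canals recovers velocity exactly from a noiseless signal, while with independent sensor noise the error of the inverted signal grows like a random walk:

```python
import math

DT = 0.05      # simulation time step in seconds (assumed)
TAU_C = 4.0    # canal time constant in seconds (~4 s, as in the thread)
A = math.exp(-DT / TAU_C)  # fraction of the canal signal left after one step


def canal_signal(omega):
    """First-order high-pass canal model: velocity changes enter, then leak away with TAU_C."""
    s, prev, out = 0.0, 0.0, []
    for w in omega:
        s = A * s + (w - prev)  # acceleration (velocity change) drives the canal signal
        prev = w
        out.append(s)
    return out


def invert_canal(signal):
    """Exact inverse of canal_signal: omega_k = omega_{k-1} + s_k - A * s_{k-1}."""
    omega_hat, prev_s, out = 0.0, 0.0, []
    for s in signal:
        omega_hat += s - A * prev_s
        prev_s = s
        out.append(omega_hat)
    return out


def inversion_error_variance(k, sigma=1.0):
    """Variance of invert_canal's output at step k if each canal sample carries
    independent noise of s.d. sigma: the error is n_k + (1 - A) * (n_0 + ... + n_{k-1}),
    so it grows like a random walk."""
    return sigma ** 2 * (1 + (1 - A) ** 2 * k)


s = canal_signal([60.0] * 400)   # 60 deg/s step at t = 0, simulated for 20 s
print(round(s[80] / 60.0, 3))    # after one time constant (4 s): 0.368
```

With a 60°/s step, the canal signal falls to about 37% of its initial value after one time constant, and `invert_canal(s)` returns 60°/s at every step. The catch is `inversion_error_variance`, which grows linearly with time: this is the low-frequency uncertainty described above.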

8/ So the brain merges canals with a zero-velocity prior. The prior's impact increases at low frequencies, where canal uncertainty is high. As a result, rotation perception (blue) decreases exponentially, but with a much longer time constant than the canals themselves.
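Here is a toy Kalman filter in that spirit: pure Python, a state made of head velocity and canal signal, and the zero-velocity prior treated as a noisy measurement saying that velocity equals 0. The noise values (`Q_ACC`, `R_CANAL`, `R_PRIOR`) are hypothetical choices, not the fitted parameters of the paper, and the formulation is our own simplification:

```python
import math

DT = 0.05                  # time step (s), assumed
TAU_C = 4.0                # canal time constant (s), ~4 s as in the thread
A = math.exp(-DT / TAU_C)
Q_ACC = 1.0                # hypothetical: how much head velocity may change per second
R_CANAL = 1.0              # hypothetical canal noise variance (per sample)
R_PRIOR = 16.0             # hypothetical zero-velocity prior variance (per sample)

# State x = [omega, s]: head velocity and canal signal. A velocity change moves
# omega and s together (canals sense acceleration); s then leaks with TAU_C.
F = [[1.0, 0.0], [0.0, A]]
Q = [[Q_ACC * DT, Q_ACC * DT], [Q_ACC * DT, Q_ACC * DT]]
CANAL, PRIOR = [0.0, 1.0], [1.0, 0.0]  # measurement rows (H) for each input


def kalman_step(x, P, measurements):
    """One predict step, then scalar updates for each (h, y, r) in turn."""
    n = len(x)
    x = [sum(F[i][j] * x[j] for j in range(n)) for i in range(n)]
    FP = [[sum(F[i][k] * P[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    P = [[sum(FP[i][k] * F[j][k] for k in range(n)) + Q[i][j] for j in range(n)]
         for i in range(n)]
    for h, y, r in measurements:
        Ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]
        denom = sum(h[i] * Ph[i] for i in range(n)) + r
        gain = [v / denom for v in Ph]
        innovation = y - sum(h[i] * x[i] for i in range(n))
        x = [x[i] + gain[i] * innovation for i in range(n)]
        P = [[P[i][j] - gain[i] * Ph[j] for j in range(n)] for i in range(n)]
    return x, P


def perceived_velocity(omega_true, settle_steps=2000):
    """Velocity estimate from noiseless canal input plus the zero-velocity prior."""
    x, P = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(settle_steps):          # let the covariance settle at rest
        x, P = kalman_step(x, P, [(CANAL, 0.0, R_CANAL), (PRIOR, 0.0, R_PRIOR)])
    s, prev, estimates = 0.0, 0.0, []
    for w in omega_true:
        s = A * s + (w - prev)             # true canal signal, same model as above
        prev = w
        x, P = kalman_step(x, P, [(CANAL, s, R_CANAL), (PRIOR, 0.0, R_PRIOR)])
        estimates.append(x[0])
    return estimates


est = perceived_velocity([60.0] * 4000)           # 60 deg/s from t = 0, for 200 s
canal = [60.0 * A ** k for k in range(4000)]      # canal signal, for comparison
```

In this toy model, a back-of-the-envelope spectral analysis suggests that the slow decay time constant is roughly `TAU_C * sqrt(R_PRIOR / R_CANAL)`, about 16 s here, so `est` stays well above `canal` several seconds into the rotation. A weaker prior (larger `R_PRIOR`) lengthens the decay.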

9/ This explains the well-known dynamics of rotation perception and VOR during rotations in darkness (canals only), which is illustrated in the movie in tweet 4/.

10/ Next, visual signals. Vision senses velocity and has a constant uncertainty over time (= at all frequencies). During rotation in a visual environment (e.g. a room with light on), vision complements canals at low frequency and rotation perception is veridical (boring).

11/ We can also put canals and vision in conflict by rotating a visual surround around a stationary head (“Optokinetic stimulus”). At high frequencies, vision is a little more reliable than canals and gets a weight of 66% (average in macaques). At low frequencies, vision dominates.
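At high frequencies, one simple way to read the 66% figure is as an inverse-variance (reliability) weight. The sketch below assumes that both channels act as independent velocity measurements with Gaussian noise, which is a static simplification of the dynamic filter; the function names are illustrative:

```python
def vision_weight(var_canal, var_vision):
    """Inverse-variance (reliability) weight given to vision when fused with the canals."""
    return (1.0 / var_vision) / (1.0 / var_vision + 1.0 / var_canal)


def variance_ratio_for_weight(w_vision):
    """Vision/canal noise-variance ratio implied by a given visual weight."""
    return (1.0 - w_vision) / w_vision


ratio = variance_ratio_for_weight(0.66)          # ~0.52
print(round(ratio, 3), round(vision_weight(1.0, ratio), 2))  # 0.515 0.66
```

Under this assumption, a 66% visual weight means the visual noise variance is about half that of the canals.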

12/ The complementary experiment is to rotate the head with a head-fixed visual surround (“Light dumping”). You get a low vestibular gain and a short time constant: notice how this experiment and the previous one sum up to 1. Experimentally, the system is linear up to 60°/s.

13/ Btw, here is a recording of eye movements (called optokinetic nystagmus in this case) during visual stimulation. Note the initial jump of eye velocity (this one had a lower visual gain), followed by a rise to a plateau. Just like in the simulations.

14/ What if you apply an optokinetic stimulus for >30 s and then shut off the light? Remember that (1) canals sense acceleration and (2) they are never activated in this protocol. So the canals will indicate 0 acceleration: rotation perception persists! But it decays slowly because of the prior.
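Tweets 10 to 14 can be reproduced qualitatively with one toy filter. This sketch uses a two-state (velocity, canal signal) Kalman filter with a visual velocity input added; the noise values are hypothetical, chosen so that vision gets roughly a 2/3 weight at high frequency, and switching the light off simply drops the visual input. Because the filter is linear, the optokinetic and light-dumping responses add up to the response in the light:

```python
import math

DT, TAU_C = 0.05, 4.0                   # time step and canal time constant (s)
A = math.exp(-DT / TAU_C)
# Hypothetical noise values; R_VISION = R_CANAL / 2 gives vision ~2/3 weight at high frequency.
Q_ACC, R_CANAL, R_PRIOR, R_VISION = 1.0, 1.0, 16.0, 0.5
F = [[1.0, 0.0], [0.0, A]]              # state x = [omega, s]: velocity, canal signal
Q = [[Q_ACC * DT, Q_ACC * DT], [Q_ACC * DT, Q_ACC * DT]]
CANAL, DIRECT = [0.0, 1.0], [1.0, 0.0]  # the prior and vision both read omega directly


def kalman_step(x, P, measurements):
    """One predict step, then scalar updates for each (h, y, r) in turn."""
    n = len(x)
    x = [sum(F[i][j] * x[j] for j in range(n)) for i in range(n)]
    FP = [[sum(F[i][k] * P[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    P = [[sum(FP[i][k] * F[j][k] for k in range(n)) + Q[i][j] for j in range(n)]
         for i in range(n)]
    for h, y, r in measurements:
        Ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]
        denom = sum(h[i] * Ph[i] for i in range(n)) + r
        gain = [v / denom for v in Ph]
        innovation = y - sum(h[i] * x[i] for i in range(n))
        x = [x[i] + gain[i] * innovation for i in range(n)]
        P = [[P[i][j] - gain[i] * Ph[j] for j in range(n)] for i in range(n)]
    return x, P


def simulate(head_velocity, visual_velocity, settle_steps=2000):
    """head_velocity drives the canals; visual_velocity is the self-rotation that vision
    implies (None = light off, so the visual input is simply dropped)."""
    x, P = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
    at_rest = [(CANAL, 0.0, R_CANAL), (DIRECT, 0.0, R_PRIOR), (DIRECT, 0.0, R_VISION)]
    for _ in range(settle_steps):          # settle the covariance at rest, light on
        x, P = kalman_step(x, P, at_rest)
    s, prev, out = 0.0, 0.0, []
    for w, v in zip(head_velocity, visual_velocity):
        s = A * s + (w - prev)             # true canal signal
        prev = w
        inputs = [(CANAL, s, R_CANAL), (DIRECT, 0.0, R_PRIOR)]
        if v is not None:
            inputs.append((DIRECT, v, R_VISION))
        x, P = kalman_step(x, P, inputs)
        out.append(x[0])
    return out


W, N = 60.0, 1200                       # 60 deg/s, 60 s per phase
still, moving = [0.0] * N, [W] * N
okn = simulate(still, moving)           # tweet 11: surround rotates, head still
dumping = simulate(moving, still)       # tweet 12: head rotates with a head-fixed surround
light = simulate(moving, moving)        # tweet 10: rotation in a lit room
okan = simulate(still + still, moving + [None] * N)  # tweet 14: light off after 60 s
```

In this sketch, `okn + dumping` matches `light` to floating-point precision at every time step. After the light goes off, `okan` keeps the velocity estimate reached during stimulation and then decays slowly, because a zero canal signal is exactly what the internal canal model expects during constant rotation.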

15/ Here is an example recording of eye movements decaying exponentially after the light is switched off: this is called optokinetic after-nystagmus.

16/ So no canal activation means no acceleration, and the estimate of rotation is smoothed. This happens because the brain uses an internal model of canals (in both 8/ and 14/). This gives a Bayesian basis to the historical concept of "velocity storage" in #vestibular science.

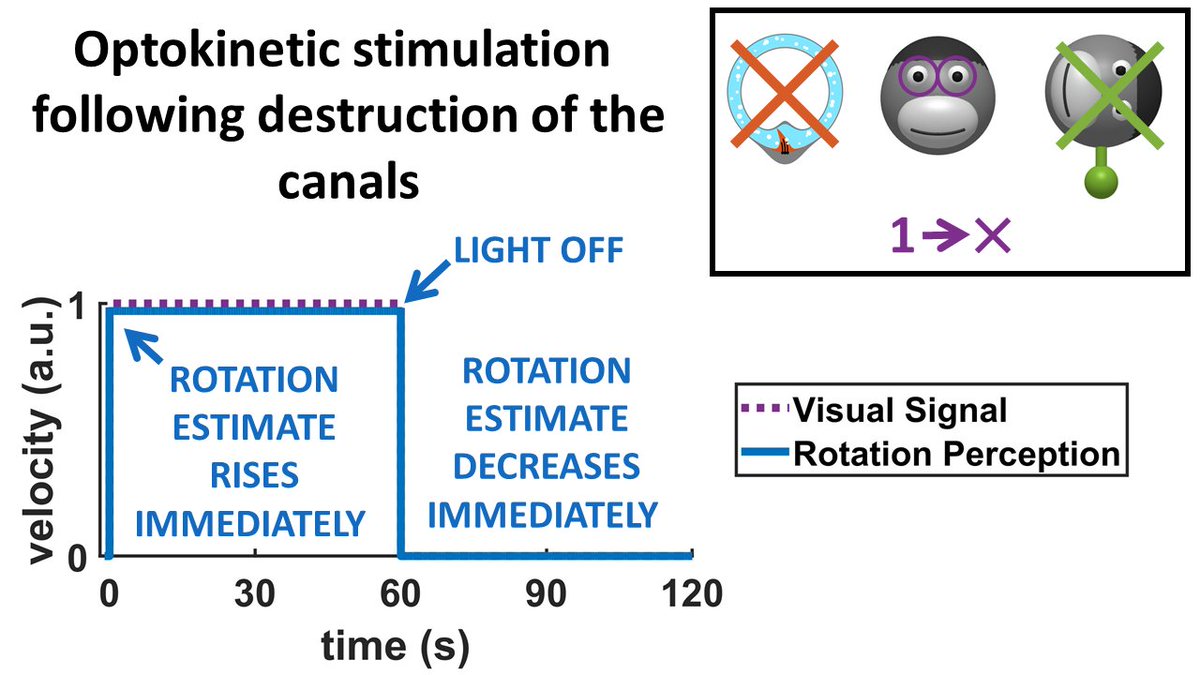

17/ What if you just eliminate the canals? The model predicts that, since the conflict with the canals is eliminated, rotation perception will rise immediately during optokinetic stimulation and decrease immediately after. Indeed, this has been verified!
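To see why, drop the canal channel from a toy Kalman filter: the state is then velocity alone, informed by vision and the zero-velocity prior (the noise values and names are hypothetical). With no internal canal model, nothing opposes the visual signal at light onset, and nothing sustains the estimate after light offset:

```python
DT = 0.05
Q_ACC, R_PRIOR, R_VISION = 1.0, 16.0, 0.5   # hypothetical values


def simulate_without_canals(visual_velocity, p=1.0):
    """Scalar Kalman filter on head velocity alone: vision (None = light off) plus the
    zero-velocity prior. With no canal channel there is no internal canal model."""
    x, out = 0.0, []
    for v in visual_velocity:
        p += Q_ACC * DT                     # predict: velocity may have drifted
        inputs = [(0.0, R_PRIOR)] + ([(v, R_VISION)] if v is not None else [])
        for y, r in inputs:
            k = p / (p + r)                 # Kalman gain for this input
            x += k * (y - x)
            p *= 1.0 - k
        out.append(x)
    return out


W, N = 60.0, 1200
est = simulate_without_canals([W] * N + [None] * N)   # 60 s of light, then darkness
```

With these values, the estimate reaches its plateau (about 97% of the stimulus, set by the vision-to-prior noise ratio) within a second and is mostly gone a few seconds after the light is switched off.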

18/ Wow, already 17 tweets and I haven’t even started with the otoliths yet! Let’s take a movie break (this movie is a complex 3D rotation perception protocol, I won't discuss it today) and go for it! Btw, the rotator here has contributed a lot to the science I am covering today!

19/ Otoliths sense head position relative to gravity (and linear acceleration, but today we treat this as Gaussian noise). Position is the integral of velocity, and integration has zero gain at high frequencies. So otoliths provide only low-frequency information about velocity.
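The same argument as a worked equation, assuming a sinusoidal rotation with amplitude $\Omega$ and frequency $f$, and otolith noise with a flat spectral density $S_\theta$ (symbols introduced here for illustration): the tilt signal shrinks as $1/f$, so recovering velocity from it means differentiating, which amplifies the noise as $f^2$.

```latex
\omega(t) = \Omega \sin(2\pi f t), \qquad
\theta(t) = \int_0^{t} \omega(u)\,du = \frac{\Omega}{2\pi f}\bigl(1 - \cos(2\pi f t)\bigr)

S_{\omega}^{\mathrm{oto}}(f) = (2\pi f)^2 \, S_{\theta}
```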

20/ For instance, if you rotate about a horizontal axis (or off-vertical axis, hence the name Off-Vertical Axis Rotation), canals and graviception complement each other. Btw, OVAR around a horizontal axis is called ‘barbecue rotation’ in the literature. You can’t make this up!

21/ Similar to visual stimuli, you can ‘remove’ the otolith signal during OVAR by aligning the rotation axis back with vertical. Rotation perception decreases exponentially, indicating that rotation perception during OVAR is mediated by the velocity storage (as in 14/ to 16/).

22/ You can perform OVAR without activating the canals (how do you do that? There is a simple trick; see next tweet), and you will see velocity perception build up over time. Note that there is no ‘immediate jump’, since otoliths don’t provide any high-frequency signal.
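A toy version of phase 2 of this protocol, assuming the canals have fully adapted (they report 0) and treating the head angle relative to gravity as an unwrapped linear variable that the otoliths measure with Gaussian noise. The three-state model, the function name, and all noise values are hypothetical simplifications, not the paper's model:

```python
import math

DT, TAU_C = 0.05, 4.0
A = math.exp(-DT / TAU_C)
Q_ACC, R_CANAL, R_PRIOR, R_OTOLITH = 1.0, 1.0, 16.0, 0.2   # hypothetical values
# State x = [omega, s, theta]: velocity, canal signal, and an unwrapped head angle
# relative to gravity (a linear simplification of the rotating gravity vector).
F = [[1.0, 0.0, 0.0], [0.0, A, 0.0], [DT, 0.0, 1.0]]
q = Q_ACC * DT
Q = [[q, q, 0.0], [q, q, 0.0], [0.0, 0.0, 0.0]]
CANAL, PRIOR, OTOLITH = [0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]


def kalman_step(x, P, measurements):
    """One predict step, then scalar updates for each (h, y, r) in turn."""
    n = len(x)
    x = [sum(F[i][j] * x[j] for j in range(n)) for i in range(n)]
    FP = [[sum(F[i][k] * P[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    P = [[sum(FP[i][k] * F[j][k] for k in range(n)) + Q[i][j] for j in range(n)]
         for i in range(n)]
    for h, y, r in measurements:
        Ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]
        denom = sum(h[i] * Ph[i] for i in range(n)) + r
        gain = [v / denom for v in Ph]
        innovation = y - sum(h[i] * x[i] for i in range(n))
        x = [x[i] + gain[i] * innovation for i in range(n)]
        P = [[P[i][j] - gain[i] * Ph[j] for j in range(n)] for i in range(n)]
    return x, P


def ovar_without_canal_activation(velocity, steps, settle_steps=2000):
    """Canals have adapted (they report 0) while the otoliths see the angle grow by
    velocity * DT every step; returns the velocity estimate over time."""
    x = [0.0, 0.0, 0.0]
    P = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    for _ in range(settle_steps):          # settle the covariance before the tilt
        x, P = kalman_step(x, P, [(CANAL, 0.0, R_CANAL), (PRIOR, 0.0, R_PRIOR),
                                  (OTOLITH, 0.0, R_OTOLITH)])
    theta, out = 0.0, []
    for _ in range(steps):
        theta += velocity * DT             # phase 2: gravity now rotates in head coordinates
        x, P = kalman_step(x, P, [(CANAL, 0.0, R_CANAL), (PRIOR, 0.0, R_PRIOR),
                                  (OTOLITH, theta, R_OTOLITH)])
        out.append(x[0])
    return out


est = ovar_without_canal_activation(60.0, 1200)   # 60 s at 60 deg/s
```

In this sketch the first-step estimate is small compared with the value reached later, and the estimate grows over the first seconds: the otoliths only provide a slowly accumulating angle error, and the internal canal model, which expects any acceleration to show up on the canals, resists fast changes.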

23/ Here is how you do it (with an example recording): (Phase 1) rotate about a vertical axis until the rotation signal has decreased, (Phase 2) tilt the rotation axis and the velocity signal will build up, (Phase 3) go back to upright and watch the velocity perception decrease.

24/ The complementary protocol to OVAR is called tilt dumping: canals indicate that you rotate but otoliths do not (remember chapter 2). Rotation perception will decrease faster than in 8/ (note: same time constant as in 22/).

25/ If you remove the canals altogether, the simulated rotation estimate during OVAR is completely abolished (as has been shown experimentally). This is because this low-frequency estimate needs the velocity storage to build up; otherwise it is ‘continuously forgotten’.

26/ Last one: optokinetic stimulation encoding rotation vs gravity while the head is immobile (vision/otolith conflict) induces a constant rotation and tilt perception. The optimal velocity estimate is not the derivative of the optimal position estimate! And it’s been tested!

27/ To sum things up: the brain can sense rotation optimally and dynamically. This is a great system to study (with really fun experiments!), and one where we can read out the brain’s sense of motion. Some of the neuronal pathways are known, but a lot remains to be found.

28/ And all this (including sensor lesions) can be modelled with a Kalman filter using 4 free parameters: 3 sensory noise levels (one per sensor) and 1 prior. I told you the vestibular system is great (#computationalneuroscience)! We can also add motor efference copies, proprioception, etc.

29/ This was the fourth chapter of my series of threads on fundamental mechanisms of self-motion perception (wow this one was long! 😅). Links to other chapters and info, including where to download videos, are here.

https://twitter.com/JeanLaurensLab/status/1302869103766634496?s=20
