1/ One of the AWS services that always amazes and excites me is @dynamodb . Every year I eagerly wait for @jeffbarr's update on how AWS powered Prime Day, and I rush to see the DynamoDB numbers. A thread on how DynamoDB has evolved and scaled over the years 👇

2/ Let’s talk about scale first. During this year’s Prime Day, Amazon’s DynamoDB usage peaked at 80.1M requests per second making some 16.4T calls during a 66 hour window: amzn.to/2G2VGiG .
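A quick back-of-the-envelope check on those numbers, as a sketch only. It uses nothing beyond the figures quoted above (16.4T calls, 66 hours, 80.1M peak) and assumes "T" means 10^12 and "M" means 10^6:

```python
# Figures quoted in the tweet above; variable names are my own.
TOTAL_CALLS = 16.4e12    # 16.4 trillion calls
WINDOW_HOURS = 66        # length of the Prime Day window
PEAK_RPS = 80.1e6        # peak requests per second

window_seconds = WINDOW_HOURS * 3600
average_rps = TOTAL_CALLS / window_seconds   # sustained average over the window
peak_to_average = PEAK_RPS / average_rps     # how far the peak sits above the average

print(f"average: {average_rps / 1e6:.1f}M requests/second")
print(f"peak is {peak_to_average:.2f}x the average")
```

The average over the whole window works out to roughly 69M requests per second, so the 80.1M peak is only modestly above the sustained load.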

3/ Over the years these numbers have roughly doubled YOY. The peak was 45.4M in 2019 (amzn.to/3jwclc1) and 12.9M in 2017 (amzn.to/3jwclc1). I couldn't find 2018 numbers, but we can assume the peak was somewhere in the 25M range
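Here is how the 2018 guess can be sanity-checked, as a rough sketch. It assumes growth was constant between 2017 and 2019 (my assumption, not a published figure) and interpolates the missing year from the peaks quoted in this thread:

```python
import math

# Peak requests per second quoted in this thread.
peaks = {2017: 12.9e6, 2019: 45.4e6, 2020: 80.1e6}

# Assumption: the same growth factor applied in 2017->2018 and 2018->2019.
annual_growth = math.sqrt(peaks[2019] / peaks[2017])
estimate_2018 = peaks[2017] * annual_growth

# Growth in the one year-over-year pair where both numbers are known.
growth_2020 = peaks[2020] / peaks[2019]

print(f"implied annual growth 2017-2019: {annual_growth:.2f}x")
print(f"estimated 2018 peak: {estimate_2018 / 1e6:.1f}M")
print(f"growth 2019-2020: {growth_2020:.2f}x")
```

Under that assumption the 2018 peak lands around 24M, and the known growth factors sit a little under 2x, which fits "roughly doubled".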

4/ Forget about the entire Prime Day infrastructure for a moment. Just imagine operating a database infra that handles 80.1M requests per second, with all the redundancy, monitoring and backups that go with it. DynamoDB just takes care of it for you

5/ And right after that window of peak usage, you can simply turn the capacity down to your regular needs. Scaling out and in is well understood for web/app servers. But imagine doing the same for databases. With DynamoDB you simply keep scaling capacity up & down per your needs
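For provisioned tables, dialing capacity up and down is a single `UpdateTable` call. A minimal sketch, assuming a boto3-style DynamoDB client; the table name and capacity numbers are made up, and `RecordingClient` is a hypothetical stand-in so the example runs without AWS credentials:

```python
def set_provisioned_capacity(client, table_name, read_units, write_units):
    """Ask DynamoDB to change a table's provisioned RCUs and WCUs."""
    return client.update_table(
        TableName=table_name,
        ProvisionedThroughput={
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        },
    )


class RecordingClient:
    """Hypothetical stand-in that only records the requests it receives."""

    def __init__(self):
        self.calls = []

    def update_table(self, **kwargs):
        self.calls.append(kwargs)
        return {"TableDescription": {"TableName": kwargs["TableName"]}}


client = RecordingClient()
set_provisioned_capacity(client, "Orders", 40_000, 20_000)  # scale out for the peak
set_provisioned_capacity(client, "Orders", 4_000, 2_000)    # scale back in afterwards
print(client.calls)
```

With real credentials you would pass `boto3.client("dynamodb")` instead of the stand-in.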

6/ One of the reasons you can rely on DynamoDB and run mission critical workloads on it is that the service underpins many AWS services (more on this in a bit). Any new AWS region that gets launched will have DynamoDB from day one, as services like SQS and AutoScaling use DynamoDB

7/ So you can rest assured that AWS cares a lot about the operational reliability of this service (not that AWS doesn’t care about other services :) ). And DynamoDB will always be available in all regions. So it’s a service that you can easily place your architecture bets on

8/ The service had its humble beginnings in 2012 (amzn.to/3otN5a8). Back then you chose a Hash key and a Range key (these are called the partition key and sort key today) and simply set the read and write capacity (RCUs and WCUs)
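To make that concrete, here is a sketch of what such a table definition looks like as `CreateTable` parameters. The table name, attribute names, string attribute types and capacity values are all invented for illustration. Note that the API still uses `HASH` and `RANGE` as the key types:

```python
def table_definition(table_name, hash_key, range_key, read_units, write_units):
    """Build CreateTable parameters for a table with a composite primary key."""
    return {
        "TableName": table_name,
        # Assumption for this sketch: both key attributes are strings ("S").
        "AttributeDefinitions": [
            {"AttributeName": hash_key, "AttributeType": "S"},
            {"AttributeName": range_key, "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": hash_key, "KeyType": "HASH"},    # partition key
            {"AttributeName": range_key, "KeyType": "RANGE"},  # sort key
        ],
        "ProvisionedThroughput": {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        },
    }


definition = table_definition("Orders", "CustomerId", "OrderDate", 100, 50)
print(definition["KeySchema"])
```

With boto3 installed and credentials configured, this dictionary could be passed as `boto3.client("dynamodb").create_table(**definition)`.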

9/ You had to carefully manage the provisioned capacity and the data model to get the single digit millisecond response times that the service promises. Otherwise you ended up seeing a lot of the famous “ProvisionedThroughputExceededException” errors in your API responses
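The usual client-side answer to throttling is to retry with exponential backoff and jitter. A minimal sketch of that idea: `ThroughputExceeded` is a stand-in exception I define here, not the SDK's class, and the delay values are arbitrary. The AWS SDKs already retry throttled requests on their own, so treat this as an illustration of the technique rather than something you need to bolt on:

```python
import random
import time


class ThroughputExceeded(Exception):
    """Stand-in for the throttling error returned by the service."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.05,
                      max_delay=1.0, sleep=time.sleep):
    """Retry `operation` on throttling, waiting longer after each failure."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThroughputExceeded:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            # Full jitter: wait a random time up to the capped exponential delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))


# Demo: an operation that is throttled twice before it succeeds.
attempts = {"count": 0}

def flaky_write():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ThroughputExceeded("throttled")
    return "ok"

result = call_with_backoff(flaky_write, sleep=lambda seconds: None)
print(result, attempts["count"])
```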

10/ And the querying model was limited to Hash key based lookups. Scans were expensive (still are) and time consuming (until parallel scans came). A year later, GSIs and LSIs were introduced to perform lookups on attributes other than the Hash key. But they were limited to only 5 per table
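Parallel scans work by splitting the table into segments via the `Segment` and `TotalSegments` parameters of `Scan`, with one worker per segment. A sketch, assuming a boto3-style client is passed in; the function names and the default segment count are my own choices:

```python
from concurrent.futures import ThreadPoolExecutor


def scan_segment(client, table_name, segment, total_segments):
    """Read every page of one segment of the table."""
    items = []
    kwargs = {
        "TableName": table_name,
        "Segment": segment,
        "TotalSegments": total_segments,
    }
    while True:
        page = client.scan(**kwargs)
        items.extend(page.get("Items", []))
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return items  # no more pages in this segment
        kwargs["ExclusiveStartKey"] = last_key


def parallel_scan(client, table_name, total_segments=4):
    """Scan all segments concurrently and merge the results."""
    with ThreadPoolExecutor(max_workers=total_segments) as pool:
        futures = [
            pool.submit(scan_segment, client, table_name, segment, total_segments)
            for segment in range(total_segments)
        ]
        return [item for future in futures for item in future.result()]
```

A parallel scan finishes sooner but still reads the whole table, so it consumes the same read capacity, just faster.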

11/ Another common challenge that customers faced was the “Hot Partition” problem, where an uneven distribution of the Hash key’s values results in some partitions being heavily used, and DynamoDB would just throttle those requests
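A toy simulation shows why skewed keys hurt. This is only a sketch: the MD5-based placement and the 8 partitions are assumptions for illustration, not DynamoDB's actual internal hashing or partition count:

```python
import hashlib
from collections import Counter

NUM_PARTITIONS = 8  # hypothetical partition count


def partition_for(key):
    """Map a hash key to a partition (illustrative hashing, not DynamoDB's)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_PARTITIONS


def load_per_partition(keys):
    """Count how many requests land on each partition."""
    return Counter(partition_for(key) for key in keys)


# 10,000 requests spread over 10,000 distinct keys.
even_keys = [f"user-{i}" for i in range(10_000)]
# 10,000 requests where 90% of the traffic hits a single key.
skewed_keys = ["user-0"] * 9_000 + [f"user-{i}" for i in range(1_000)]

even_load = load_per_partition(even_keys)
skewed_load = load_per_partition(skewed_keys)

print("busiest partition, even keys:  ", max(even_load.values()))
print("busiest partition, skewed keys:", max(skewed_load.values()))
```

With evenly spread keys each partition sees a similar share of the traffic. With the skewed keys, one partition takes at least 90% of it, and under the original fixed per-partition limits that partition would be throttled while the others sat idle.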

12/ While all of these looked like limitations, I (and many customers) still loved the service because it just works and scales. Pick the right use cases, spend time on table design, and you can simply throw data at this service. You dial capacity up and down and it responds

13/ A lot of customers that I worked with had tables with 10s and 100s of thousands of RCUs and WCUs and they had no DBAs. It was all developers just picking the right use case and right data model

14/ The service also had its fair share of trouble. In September 2015, the service had an incident that caused a major AWS outage and knocked out popular internet services. Here’s the full summary of the incident: amzn.to/34siQIr.

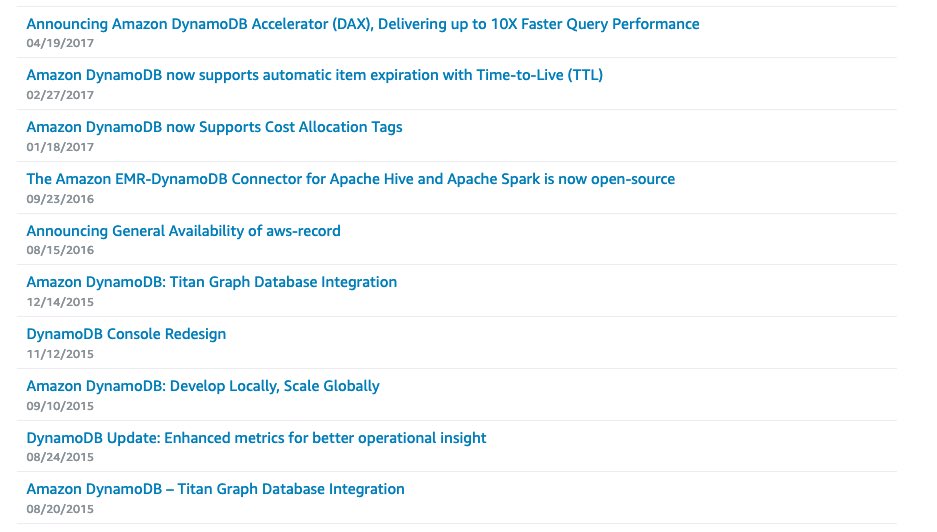

15/ I am quoting the above incident not to highlight the outage but to talk about what happened after that incident. Something that everyone can learn from. Here’s a screenshot of AWS announcements filtered by DynamoDB for the period 2015 to 2017:

16/ Right after that incident, the DynamoDB team stopped all feature development and focussed on only one thing: making sure that incident doesn’t happen again. Focussing on operational reliability for its customers

17/ This was a time when customers were requesting tons of features (PiTR, fixing the hot partition problems, encryption, etc…) and other cloud providers were launching Global Tables and so on. As a service owner you are already under pressure to deliver features

18/ The team still had to stick to what’s important in the long run - customer trust. The trust that comes from customers knowing you will do the right thing

19/ While I can’t go into the details, a lot of fundamental things were re-architected, paving the way for what came out of the service from 2017 onwards. Today, Adaptive Capacity handles most of the Hot Partition problems. AutoScaling and “OnDemand” almost remove the need for RCU/WCU planning

20/ Add to that a whole lot of other features like Global Tables, DAX, Backup & PiTR, and Transactions. The service now also has an Availability SLA: 99.99% for normal tables and 99.999% for Global Tables
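To put those percentages in perspective, here is a quick sketch of the downtime each one allows. It assumes the percentage is applied to a 30-day window, which is my simplification. The SLA document itself defines the exact measurement period and what counts as unavailable:

```python
MINUTES_PER_30_DAYS = 30 * 24 * 60  # assumed measurement window


def allowed_downtime_minutes(availability_percent, window_minutes=MINUTES_PER_30_DAYS):
    """Minutes of unavailability that still meet the given availability target."""
    return window_minutes * (1 - availability_percent / 100)


for label, percent in [("normal tables", 99.99), ("Global Tables", 99.999)]:
    minutes = allowed_downtime_minutes(percent)
    print(f"{label}: {percent}% allows about {minutes:.2f} minutes per 30 days")
```

Under that assumption, 99.99% leaves a little over four minutes of downtime in 30 days, and 99.999% leaves under half a minute.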

21/ The service still continues to amaze me when this kind of announcement is made: aws.amazon.com/about-aws/what…

22/ Think about that for a moment. Encrypting all customer data across all regions while operating the service at scale and meeting all its metrics. All seamlessly in the background. And from a customer point of view - they didn’t do anything. Not even selecting a checkbox

23/ And that’s why DynamoDB is such an awesome service. A service that you can rely on and trust. Here’s to many more years of DynamoDB AWSomeness!!!
