ELECTION THREAD: Today and tonight are going to be a wild time online.

Remember: disinformation actors will try to spread anger or fear any way they can, because they know that people who are angry or scared are easier to manipulate.

Today above all, keep calm.

A couple of things in particular. First, watch out for perception hacking: influence ops that claim to be massively viral even if they’re not.

Trolls lie, and it’s much easier to pretend an op was viral than to make a viral op.

Remember 2018? nbcnews.com/tech/tech-news…

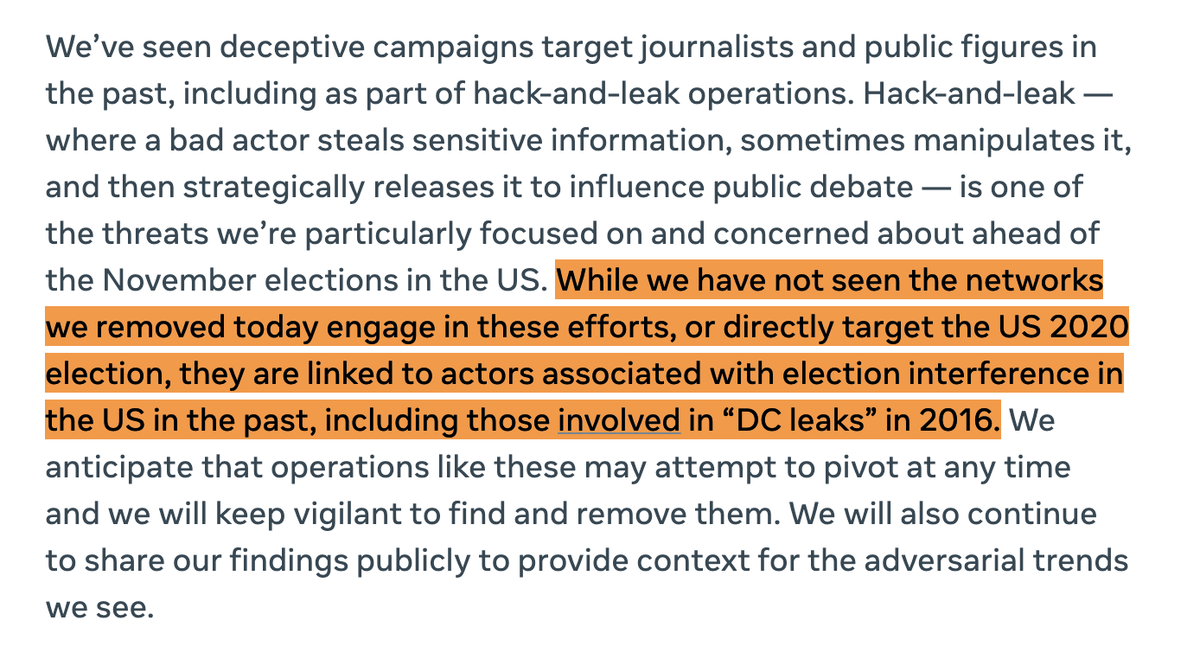

There have been huge improvements in our collective defences since 2016. Teams like @Graphika_NYC, @DFRLab and @2020Partnership; takedowns by @Facebook, @Twitter and @YouTube; tip-offs from law enforcement.

Trolls have to spend more effort hiding.

That doesn't mean the influence ops have stopped, but it does make it harder for them to break through.

If trolls claim to have had massive impact, demand the evidence, and measure it against known operations.

brookings.edu/wp-content/upl…

Second, one thing we’ve seen in elections around the world is false or exaggerated claims of election fraud, designed to de-legitimize the outcome.

Russian assets have been pushing a “2020 fraud” narrative for a long time - but not getting traction.

https://twitter.com/2020Partnership/status/1323659601644978176

Again, with claims like that, keep calm. Check the evidence. Ask how easily it could be manipulated. Ask if the claim actually originated today: there have been plenty of cases of repackaged claims that use footage from years ago.

https://twitter.com/DFRLab/status/1322630178032504833

Any election is a complex exercise with millions of moving parts. This one’s more complex than most, and the results will take longer.

That's the window of opportunity that influence ops will try to use to sow false claims, fear and anger.

Don't be taken in.

Disinfo feeds on fear and anger. It has many targets, and many people can spread it unwittingly, especially in hyper-tense times.

If claims of interference or fraud come in, keep perspective. Keep watch. And keep calm.
