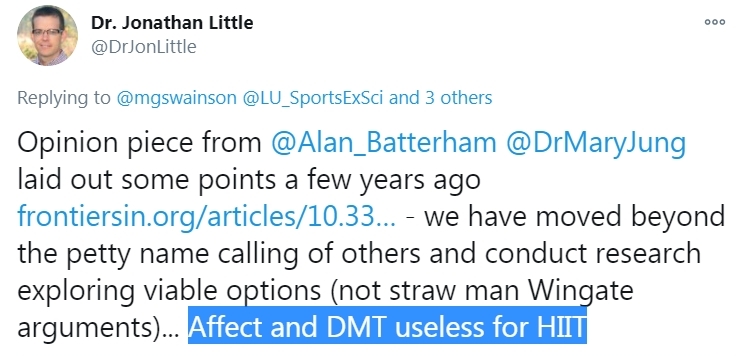

"Affect and DMT useless for HIIT." Inspired by this thoughtful and incisive assessment of Dual-Mode Theory (DMT) by Dr Little (who has clearly "moved beyond petty name calling"), let me again offer some thoughts that may help colleagues better understand what the issues are here.

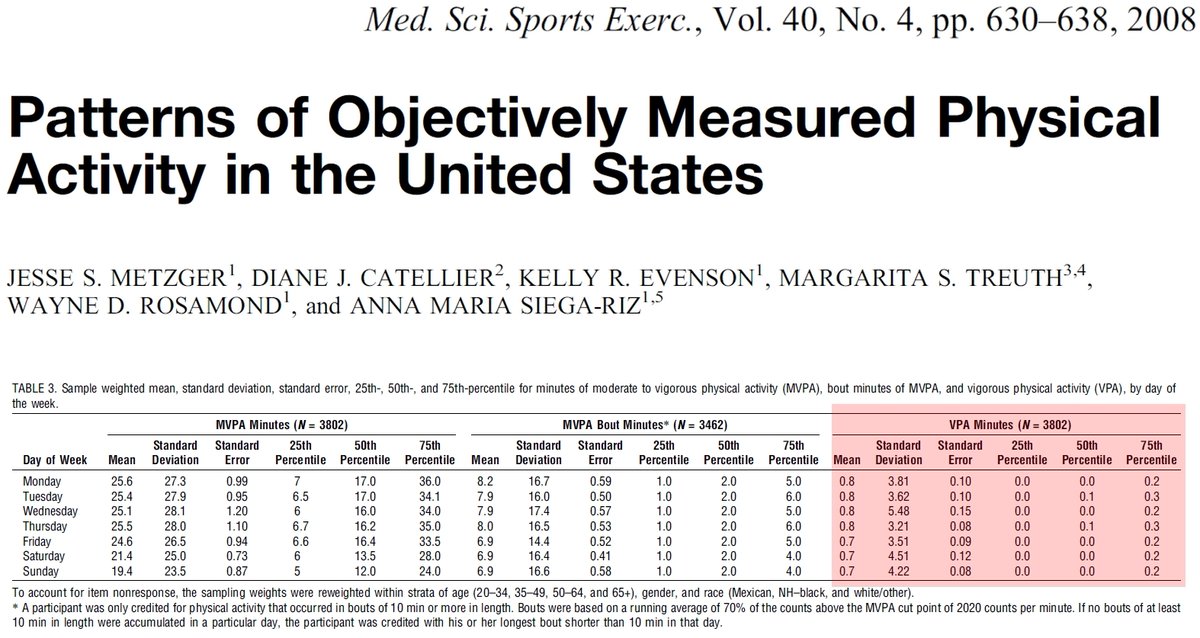

Let's start from some basic points. First, the "debate" over HIIT is not really about "options" or about public health in general. As explained in a recent thread (see link), nearly all people avoid vigorous-intensity (let alone "high-intensity") activity.

https://twitter.com/Ekkekakis/status/1321287090315972609

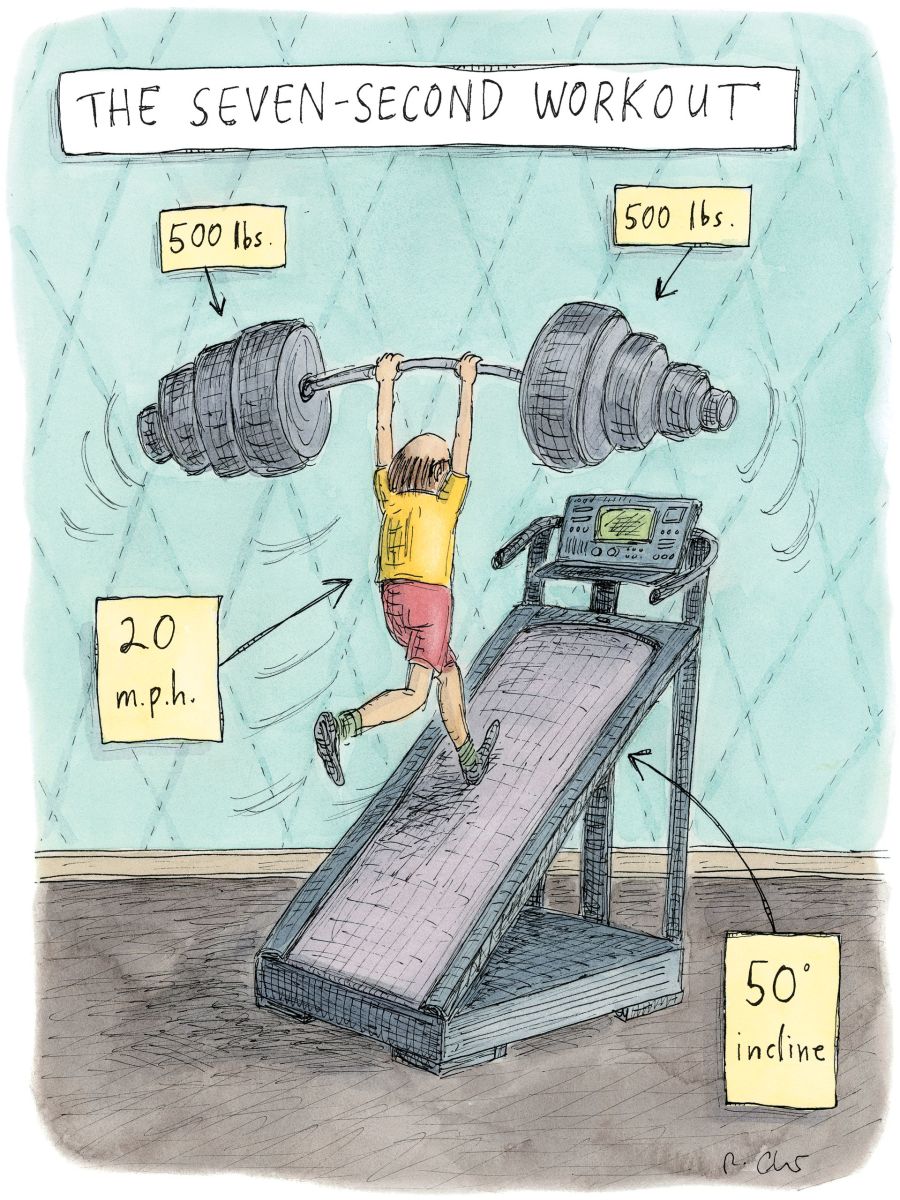

Instead, one of the main engines propelling the HIIT phenomenon is that it presents an argument that seems believable to non-experts, including non-specialists who review grant applications: "look, we discovered a silver bullet for the #1 reason for inactivity: lack of time!"

If you tell an endocrinologist on a grant review panel that the solution to physical inactivity is super-short, high-intensity exercise, they may buy it. They really don't know any better. But the argument cannot withstand serious analysis (for why, see:

https://twitter.com/Ekkekakis/status/1321287090315972609).

The second engine propelling the HIIT phenomenon is a shocking laxity of methodological standards. For many of us, highly publicized HIIT studies have become the most reliable source of "what not to do" examples for our graduate-level research-methods and statistics courses.

For example, let's say that you read this: I devised a type of exercise (doesn't matter what) that can DOUBLE endurance capacity after just 16 minutes of training (6 sessions, 2 wks) without changing VO2max! Cohen's effect size is d = 2.9. Would you believe me? Is this plausible?

This should have been the "Daryl Bem" moment of exercise science, the "last straw." The entire field should have said: "Enough is enough! It is time to clean up our science." But it didn't happen this way. Instead, this was celebrated as a breakthrough. slate.com/health-and-sci…

Exercise science was divided in half. The "true believers" were convinced that we were witnessing a long-awaited scientific revolution. The rest of us started thinking: This is clearly a methodological artifact. How can a result like this be obtained? What are the possibilities?

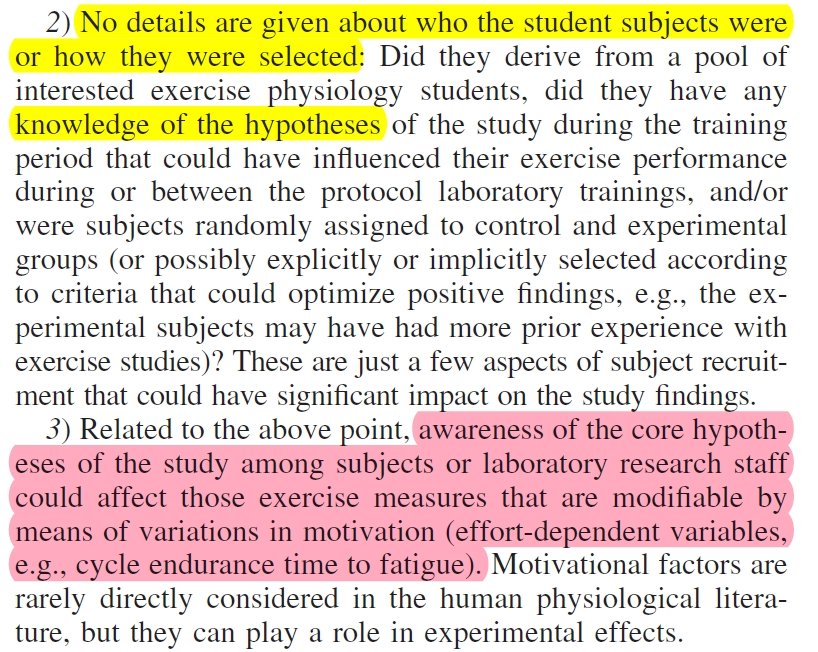

See how an experienced psychophysiologist thinks & train your mind to think like this: Dr Grossman zeroed in on all crucial possibilities. Who were the participants? What was the social atmosphere of the experiment? What were the researcher expectations? doi.org/10.1152/japplp…

Now, read the response: "We are keenly aware of the potential for experimenter bias and/or sloppy procedures to create error variance and/or prejudice study outcomes." In other words, we maintain nothing but the highest scientific standards, there is no bias in our work.

If you believe in your heart "there was no bias," this thread is not for you. If you are learning to think like Grossman, keep reading. Compare the description of the participants as it appeared in the research article and in a popular book. Can you spot the crucial differences?

Do you see that "recreationally active" is actually code for "athletic" and "student population" actually means our own Kinesiology students? Our own students know what we study, what we are passionate about, what we expect to find. They walk the hallways and attend our lectures.

Now compare the description of the lab environment during training. Read the excerpt from the research article, then the description from the popular book. Can you spot the differences? Can you visualize the social dynamic of the experiment? Do you see what Grossman was saying?

In actuality, the first HIIT study did not re-discover high-intensity interval training (after the Russians, and the Germans, and the Japanese, etc). It was not even an exercise physiology experiment. It was an exercise psychology experiment that re-discovered social influences.

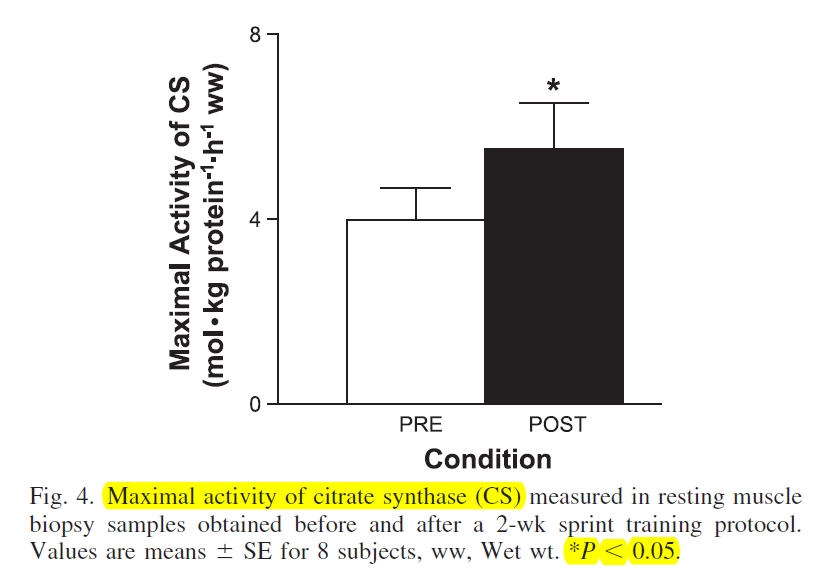

Nonsense, some of you will say. There are significant physiological changes, those are real, you can't make those up. Actually, yes, you can. It is hugely important to return to the fundamentals of statistics we (sort of, kind of) learned during our first year of graduate school.

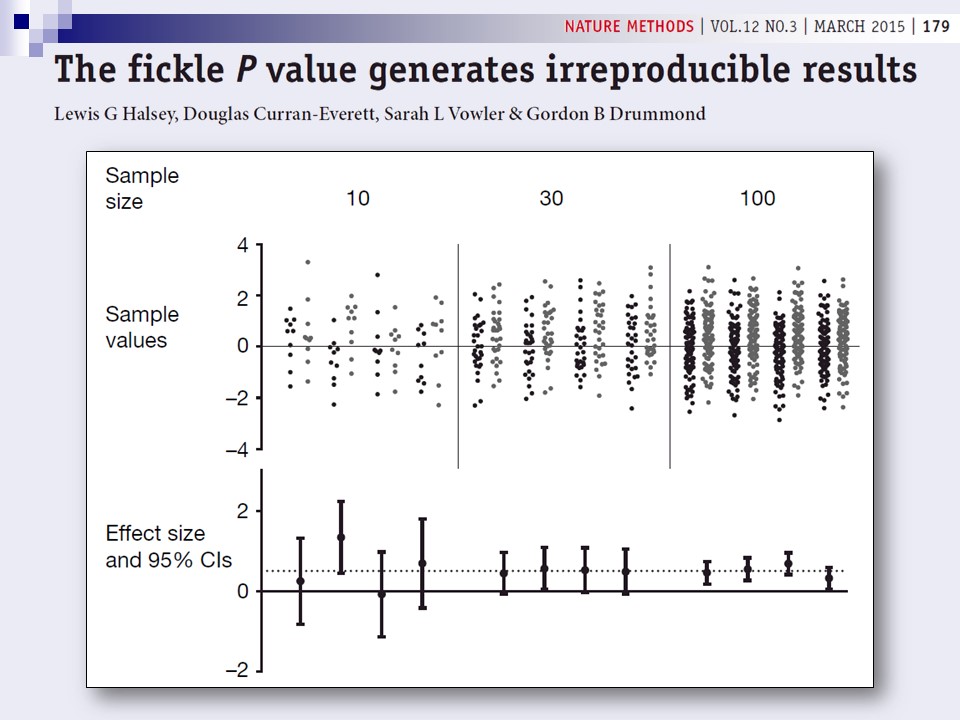

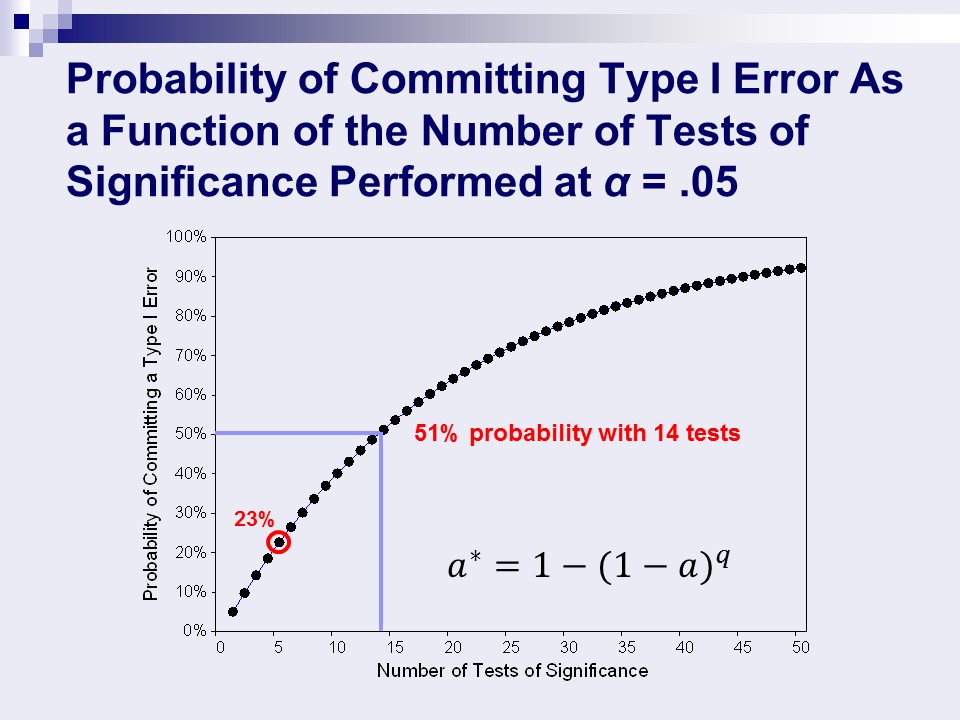

Let's start with the multiplicity problem and alpha inflation. If you have a sample size of, say, 16 and 20-30 (disclosed and undisclosed) dependent variables, you are destined to make some fantastic (albeit false) scientific "discoveries." doi.org/10.1038/nmeth.…

Why? The tiny samples lower your statistical power BUT offer a great "advantage": instability! Means jump around wildly. Then, what you need to do is perform numerous significance tests at .05. With 14 independent tests at .05, you have a > 50% chance of finding *something* by chance alone.

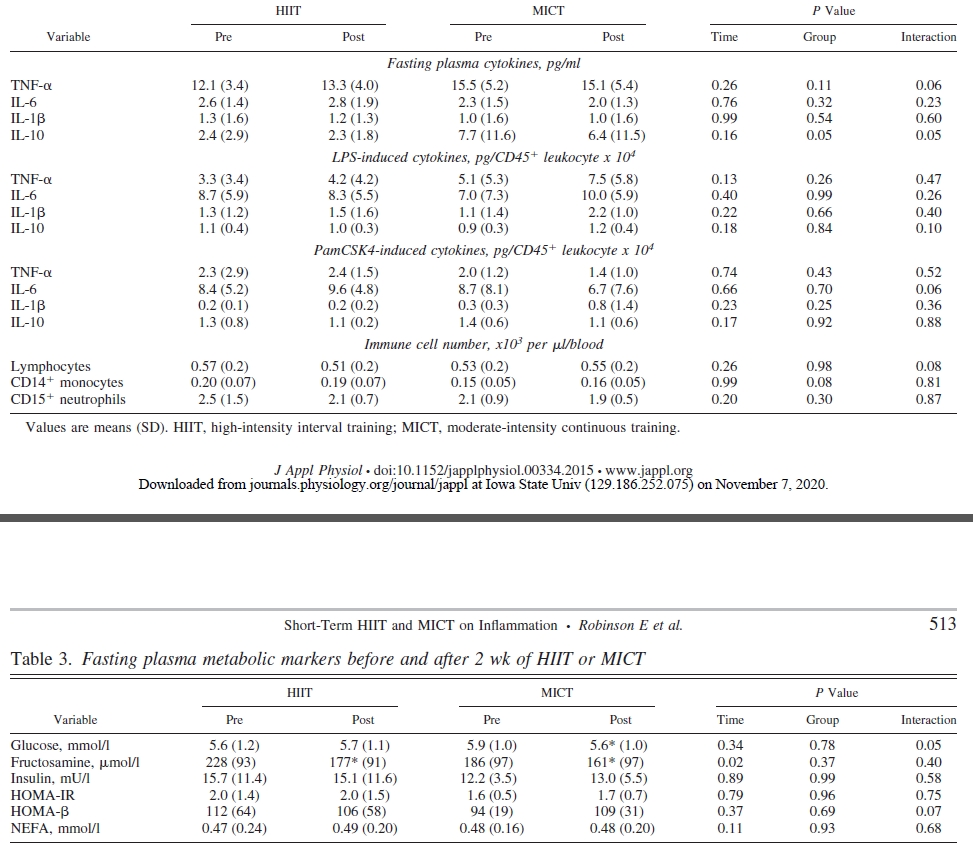

Pff, I know that, you'll say. OK, now check published HIIT articles. Count: time to exhaustion, VO2 peak, peak power, mean power, fatigue index, ventilation, respiratory exchange ratio, several enzymes, several metabolites, ATP, phosphocreatine, creatine... Something WILL stick!

Seriously, go check! It's the same golden recipe: tiny samples and long lists of dependent variables, all tested at .05. Glucose metabolism, inflammatory cytokines... Something will stick, it always does. The fact that physiology journals permit this doesn't make it good science.

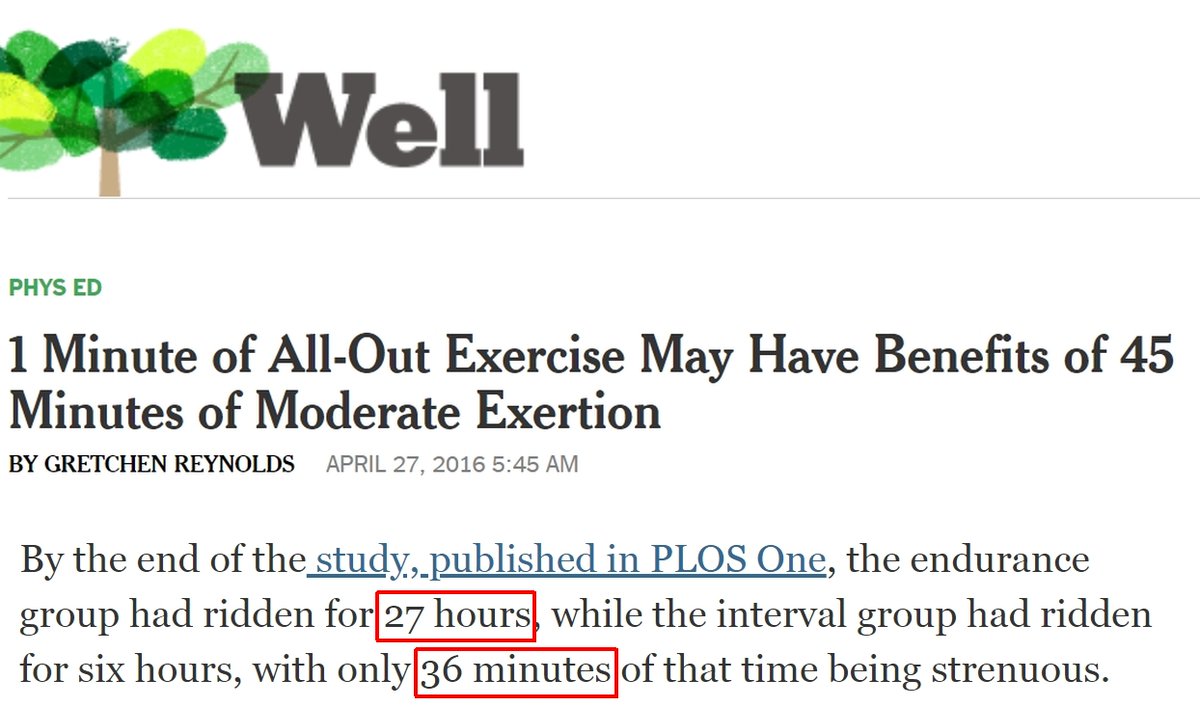

How about the findings that just a few minutes of HIIT are "just as good as" hours and hours of moderate-intensity exercise? Those are absolutely remarkable, spectacular, breathtaking findings, right? Those demonstrate that we've made a startling discovery, no?

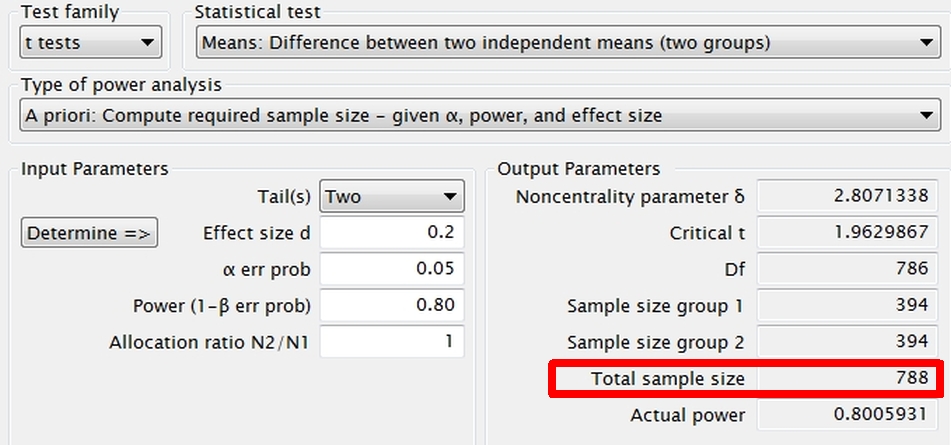

Sorry, these too are statistical artifacts. When you have 2 groups exercising with different modalities over brief periods (a few weeks), any differences in outcomes may be, at best, small. If so, to keep the chance of a Type 2 error at no more than 20%, you need 788 participants.

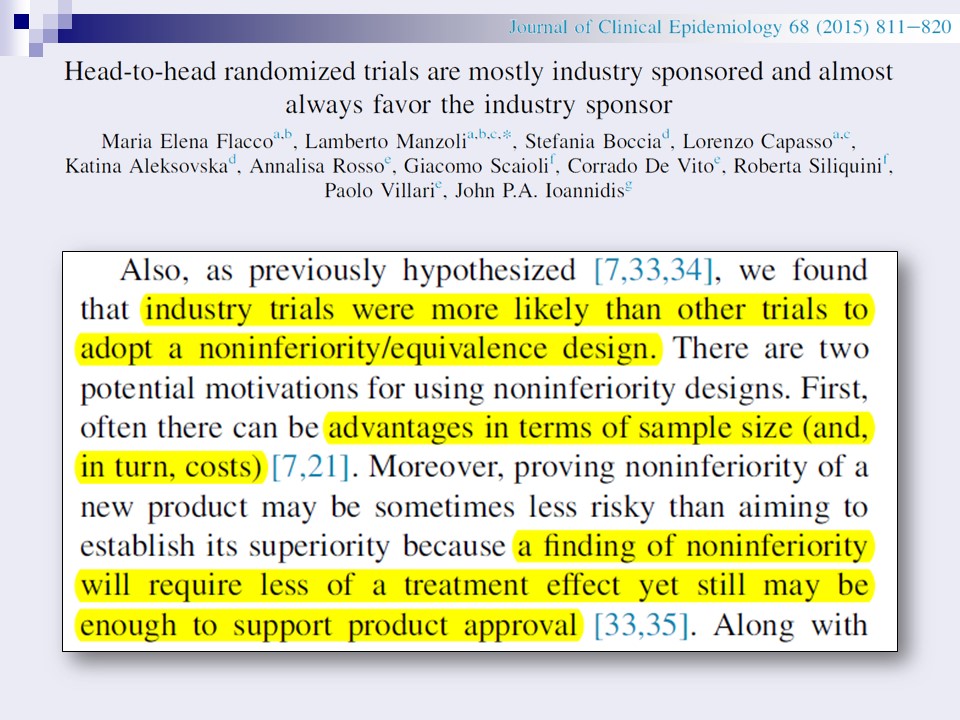
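
The figure of 788 can be reproduced approximately. This sketch assumes that "small" means Cohen's d = 0.2, a two-tailed test at alpha = .05, and two equal independent groups; it uses the normal approximation plus a small-sample correction term rather than an exact noncentral-t calculation, so treat it as a rough check rather than a replacement for a power-analysis program.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group n for a two-tailed, two-sample t-test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the test
    z_beta = z.inv_cdf(power)            # quantile matching the desired power
    n = 2 * (z_alpha + z_beta) ** 2 / d ** 2
    n += z_alpha ** 2 / 4                # correction for using t instead of z
    return ceil(n)

small_effect_total = 2 * n_per_group(0.2)   # assumed "small" effect, d = 0.2
print(f"Total N for d = 0.2: {small_effect_total}")
```

Under these assumptions the sketch returns 394 per group, i.e., 788 in total.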

This is one of the oldest statistical tricks in the book, routinely exploited by pharma. When they know they don't have a "winner," they conduct trials with a hypothesis of "equivalence" rather than "superiority." And to ensure they do find "equivalence," they use small samples.

Simply, if the expected effect size for the difference between groups is "small" (requiring N=788 to detect, with 80% power at alpha = .05), a sample of 10-20 per group almost guarantees that you will not find a significant difference. We are discovering what pharma has long known.
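
To see how close to "guaranteed" a null result is, here is a rough power calculation. It assumes a true difference of d = 0.2 and uses a normal approximation to the two-sample t-test, which is slightly optimistic for samples this small; the function name is illustrative.

```python
from statistics import NormalDist

def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-tailed, two-sample test (normal approx.)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = d * (n_per_group / 2) ** 0.5      # expected value of the test statistic
    # Probability of landing in either rejection region.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

for n in (10, 20, 394):
    print(f"n = {n:>3} per group -> power = {approx_power(0.2, n):.2f}")
```

With 10 to 20 participants per group, the chance of detecting a true small difference stays around or below one in ten in this approximation, so "no significant difference" is the expected outcome whether or not the modalities are actually equivalent.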

This concludes the first section, on how HIIT started, and what the main engines are that are still driving this phenomenon today. In a nutshell: (1) lack of time is NOT the reason for inactivity, and (2) fundamental research methods and statistical principles MUST be adhered to.

Now, let's turn to psychology. To sustain the HIIT narrative (& the funding potential associated with portraying HIIT as a "solution" to the public-health problem of physical inactivity), it is crucial to build a case that HIIT is not only tolerable but enjoyable for the masses.

This was very unfortunate for me because I suddenly found myself, my research, my methods, and my theory entangled in this situation. How did we get to the point where Dr Little, an exercise physiologist, confidently proclaims that my (psychological) theory is "useless for HIIT"?

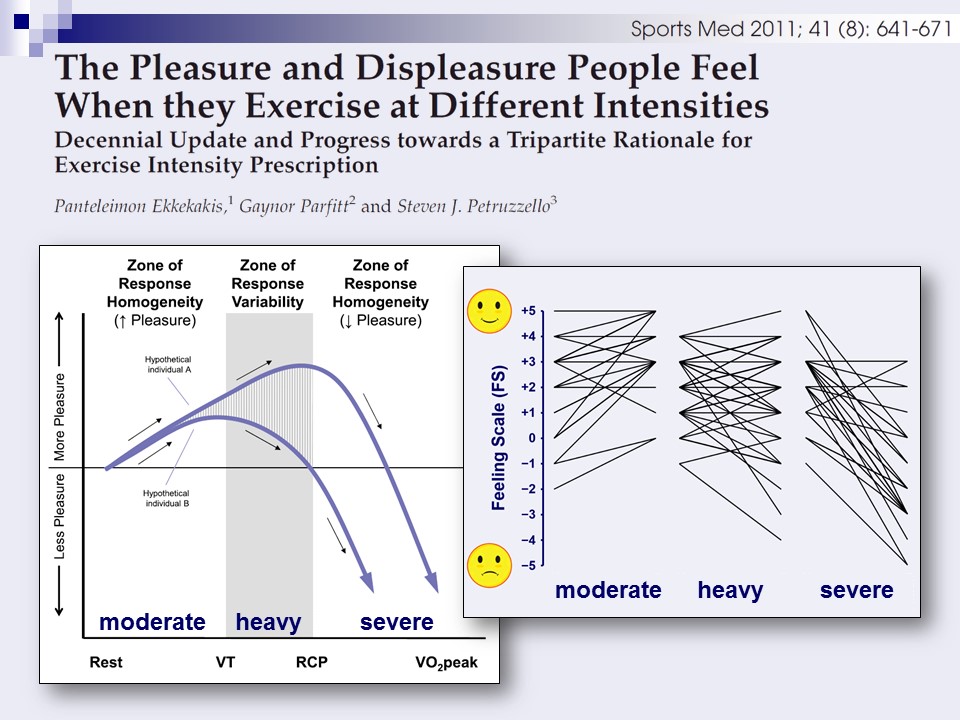

Never imagining that a day would come when this work would be seen as a threat to anyone's career ambitions, together with colleagues from around the world, we pieced together the dose-response relation between exercise intensity and pleasure-displeasure.

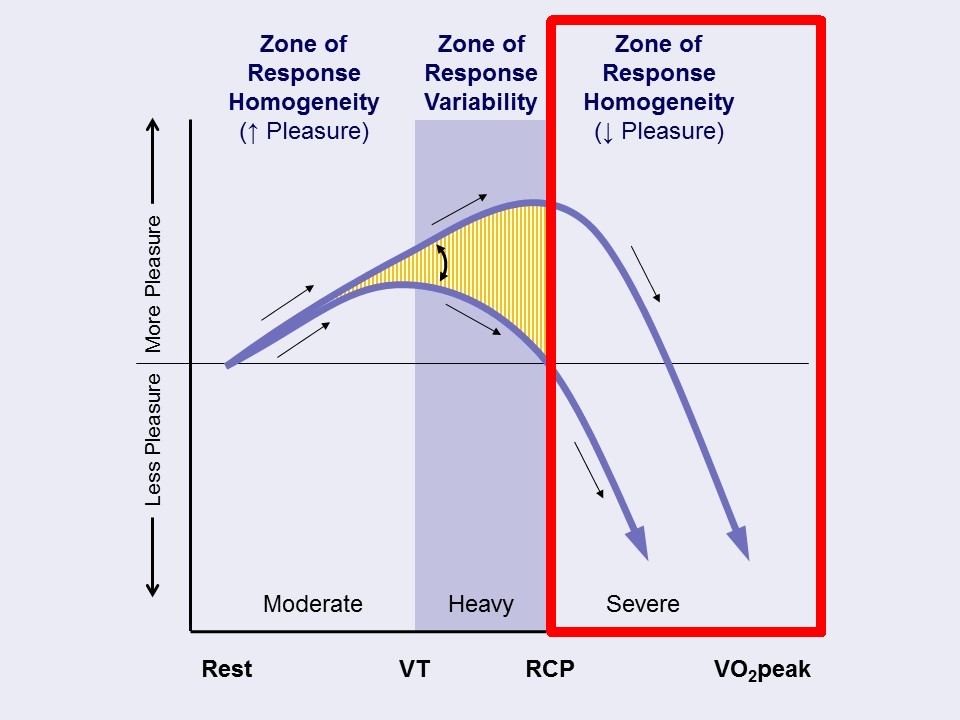

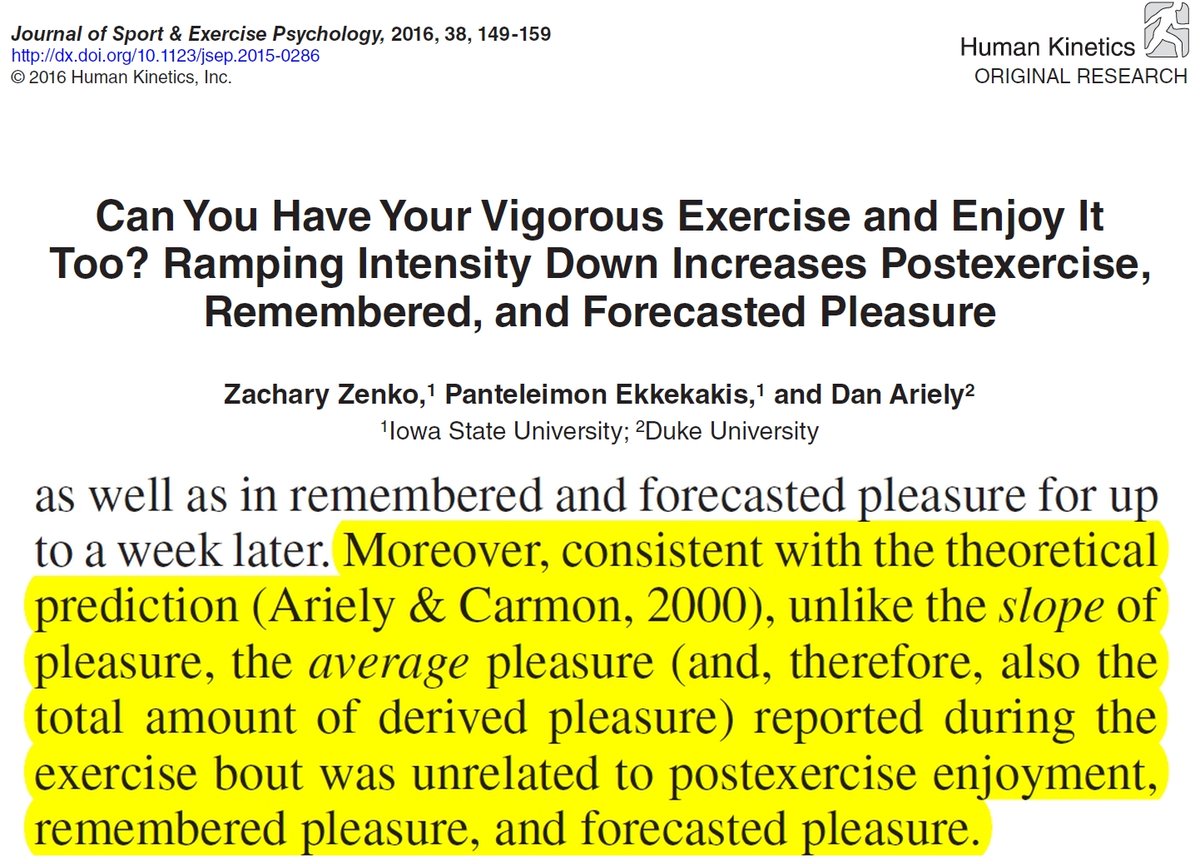

The Dual-Mode Theory (DMT) postulates that, when exercise intensity is in the "severe" range (i.e., approaching maximal capacity), there is a near-universal decline in pleasure, which serves as a signal to consciousness that the body is experiencing homeostatic perturbation.

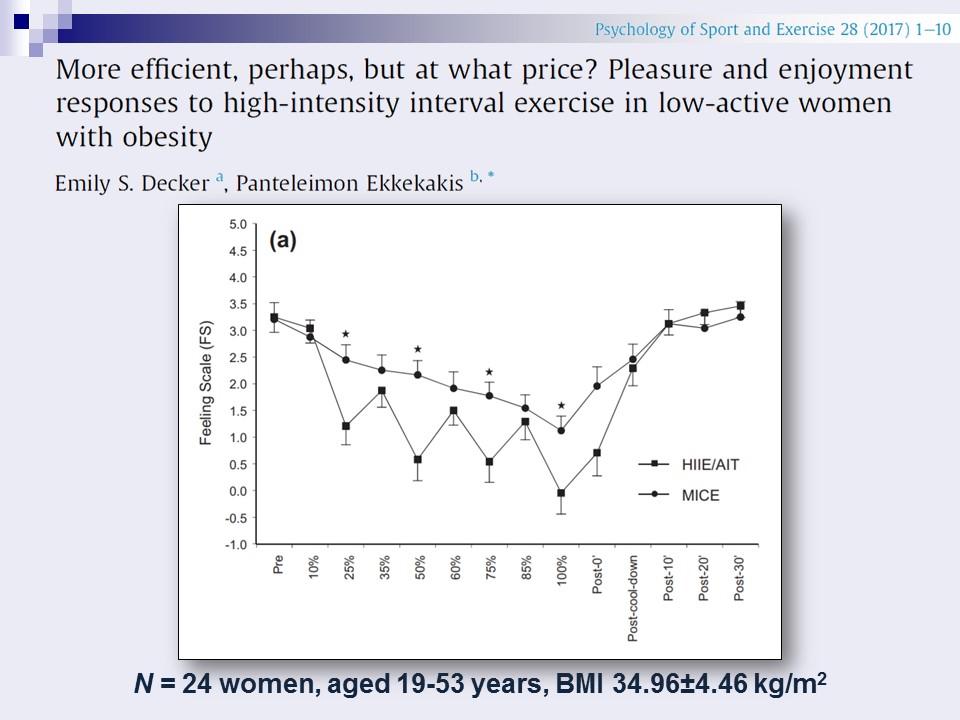

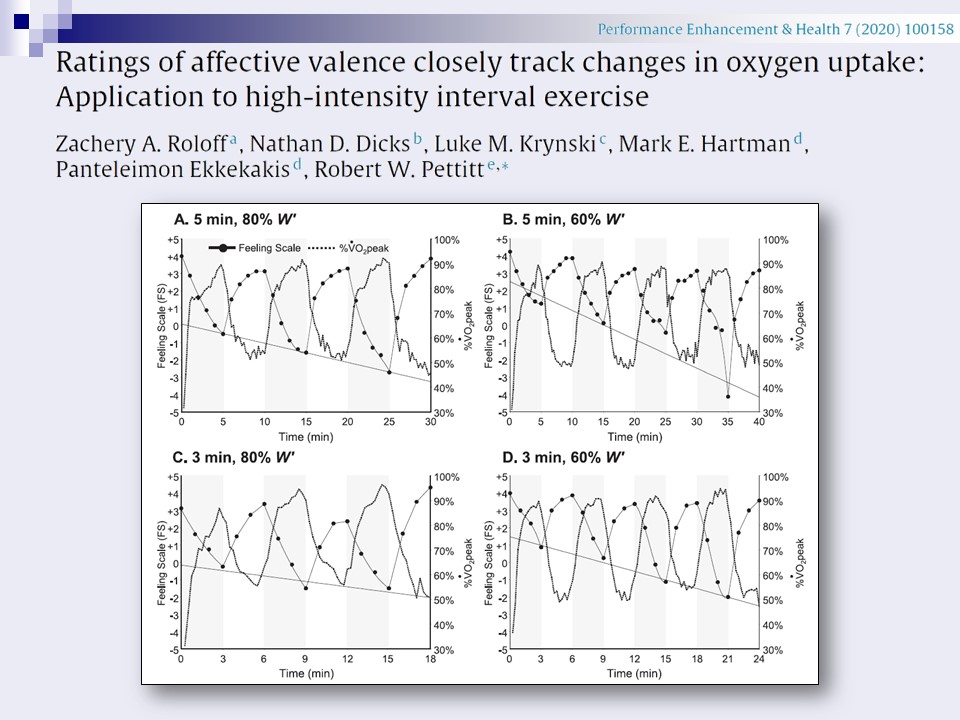

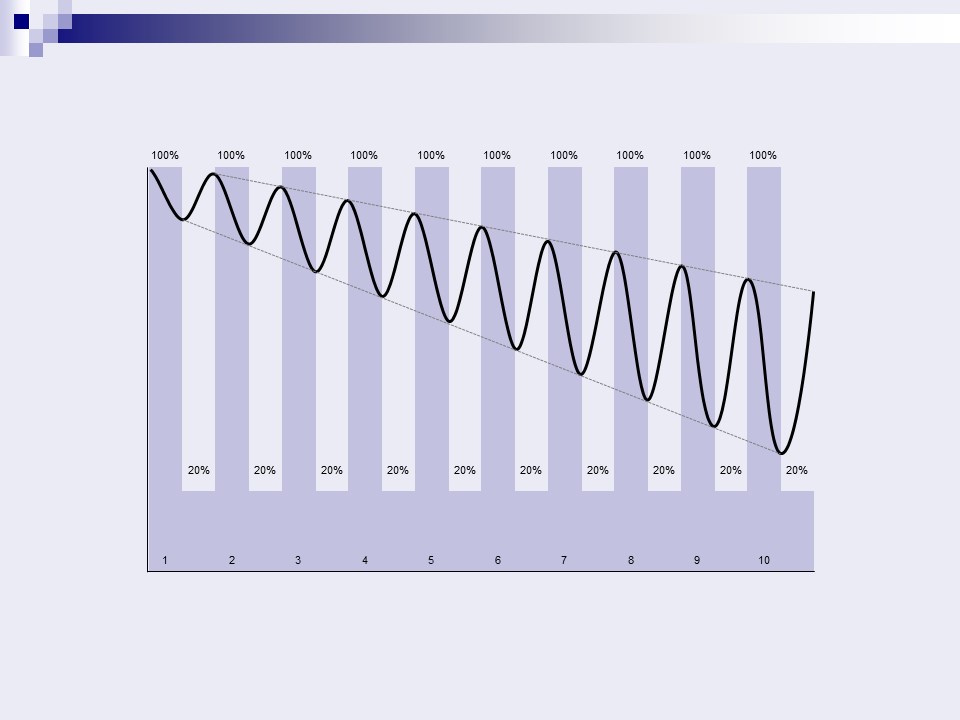

The prediction applies to HIIT. Pleasure ratings respond instantly to oscillations in exercise intensity and the changes in pleasure are proportional to the homeostatic perturbations. Moreover, because recovery is usually incomplete, there is a declining trend over the session.

Researchers who have assumed the responsibility to generate data showing that HIIT is pleasant and sustainable are pursuing 2 agenda items: (1) HIIT makes people feel no different from moderate intensity; (2) how people feel during HIIT does not predict physical activity anyway.

What makes this remarkable is that, given the lack of appropriate psychological expertise, this narrative found its way into the recent "scientific pronouncements" by the American College of Sports Medicine that were used as the basis for the 2018 US Physical Activity Guidelines.

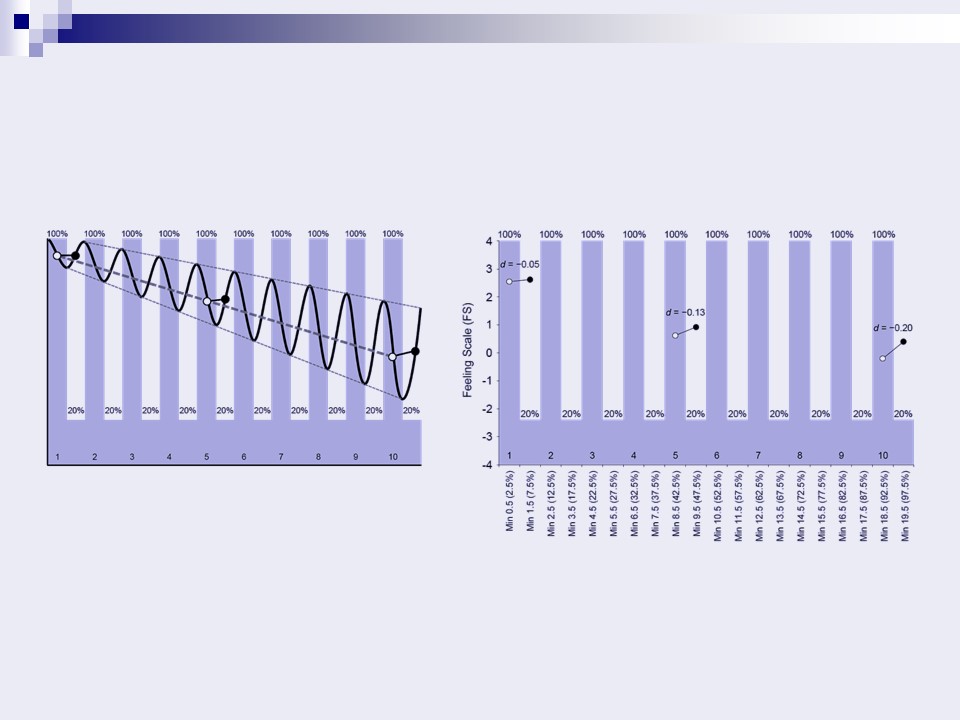

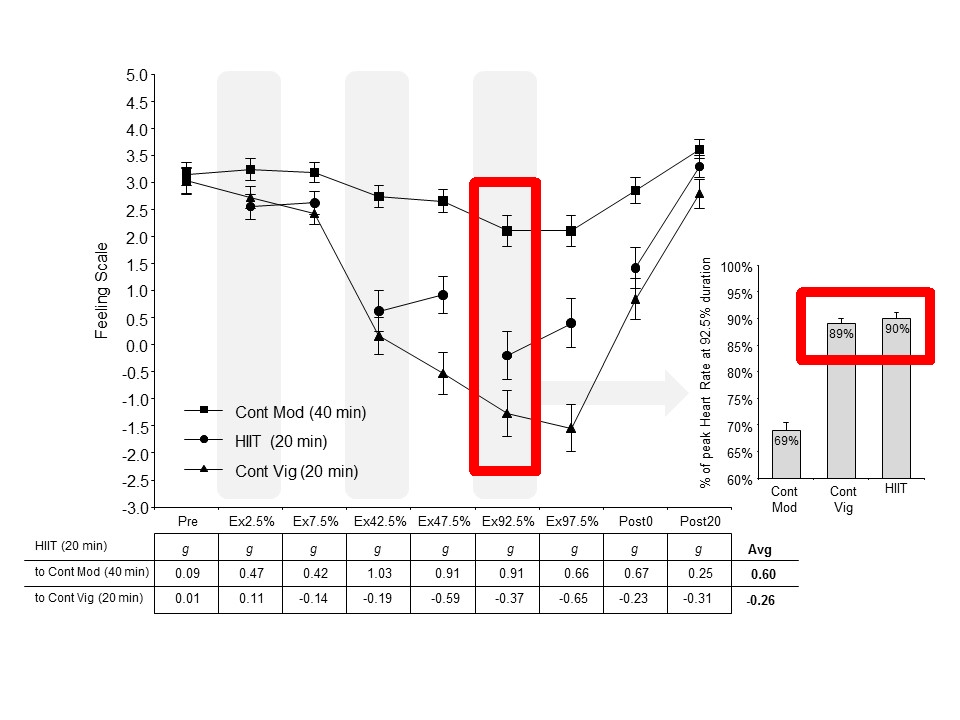

So, let's explain how such data are generated. If this is the pleasure-displeasure response to a session of 10x1 HIIT, and you were pursuing the two aforementioned agenda items, you want to (1) attenuate the appearance of displeasure and (2) reduce the interindividual variation.

To attenuate the appearance of displeasure, you have to misrepresent the true shape of the response. The easiest way is to sample pleasure-displeasure in such a way as to miss the negative affective peaks. This means: don't ask people how they feel at the end of the intervals.

There are 2 versions of this. One asks people how they feel AFTER the high-intensity interval, when they feel the rush of relief of the first seconds of recovery. The more extreme version asks people how they feel halfway into the interval, thus cutting the negative peak in half.

To comprehend the misrepresentations potentially resulting from these tricks, the graph on the right shows actual data obtained when people were asked only halfway through intervals and recovery periods. The actual responses would probably have looked like the graph on the left.

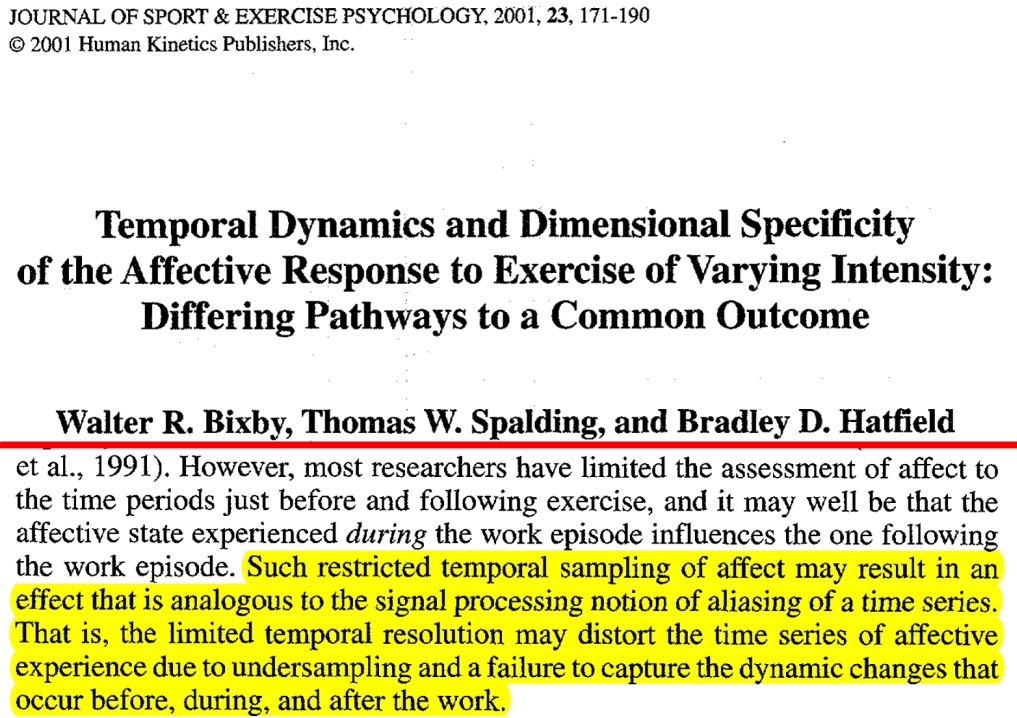

Many colleagues immediately react by saying that these are honest, unintentional errors. Sorry, no. While these methodological points may seem new to many, they have been discussed in exercise psychology for 20 years and are very well known.

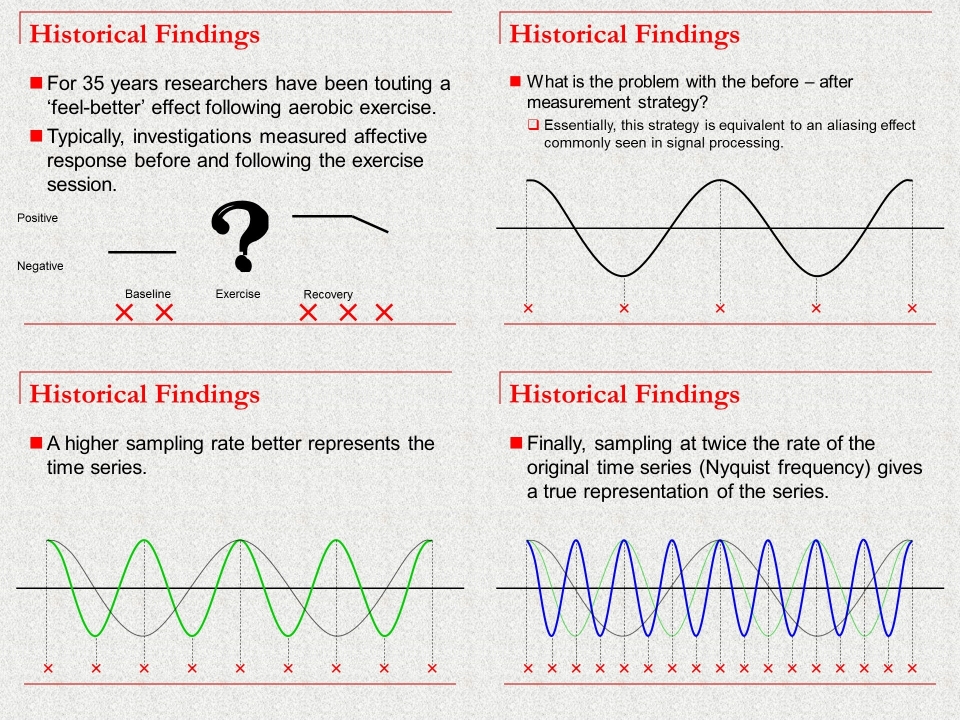

My colleague @wbixby explained this in 2001. His explanation emphasized that this principle is fundamental in signal processing. Scientists dealing with biological and other signals are taught this in grad school. You MUST sample in a way that faithfully represents the signal.

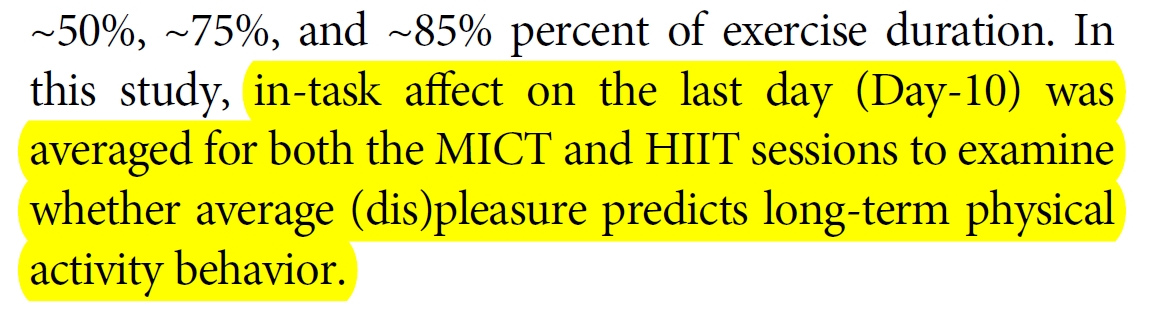
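
The sampling point can be illustrated with a toy model. Everything in this sketch is invented for illustration and is not data from any study: it assumes a 10x1 session in which pleasure falls linearly during each 60-s interval, recovers incompletely during each 60-s rest, and is rated on a roughly -5 to +5 scale. The same underlying signal is then "measured" at three different moments.

```python
WORK_S, REST_S, N_INTERVALS = 60, 60, 10   # hypothetical 10x1 protocol

def affect_at(t: int, start=3.0, drop=3.0, recover=2.5) -> float:
    """Hypothetical pleasure rating at second t of the session."""
    k, s = divmod(t, WORK_S + REST_S)        # completed cycles, seconds into cycle
    base = start - k * (drop - recover)      # incomplete recovery -> downward drift
    if s <= WORK_S:                          # during the high-intensity interval
        return base - drop * s / WORK_S
    return base - drop + recover * (s - WORK_S) / REST_S   # during recovery

def sample(offset_s: int) -> list:
    """One rating per cycle, taken offset_s seconds after the interval starts."""
    return [affect_at(i * (WORK_S + REST_S) + offset_s) for i in range(N_INTERVALS)]

end_of_interval = sample(WORK_S)        # catches the negative peaks
mid_interval = sample(WORK_S // 2)      # cuts each peak in half
early_recovery = sample(WORK_S + 15)    # rated during the relief of early recovery

for label, ratings in [("end of interval", end_of_interval),
                       ("mid-interval", mid_interval),
                       ("early recovery", early_recovery)]:
    print(f"{label:>16}: lowest = {min(ratings):+.2f}, "
          f"mean = {sum(ratings) / len(ratings):+.2f}")
```

In this toy model only the end-of-interval schedule reports the true lowest point; the other two schedules describe the same session as noticeably less unpleasant.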

The 2nd goal is to find that affective responses to HIIT are unrelated to subsequent physical activity. The trick here is to not only distort the true affective response (as explained) but also to attenuate interindividual variance, thus reducing the odds of finding co-variance.

This is done by collapsing the individual trajectories of affective responses (all of those beautiful individual up-and-down oscillations in pleasure) into ...the average, thus flattening everything. To a non-specialist peer-reviewer or reader, this may seem sort-of "reasonable."

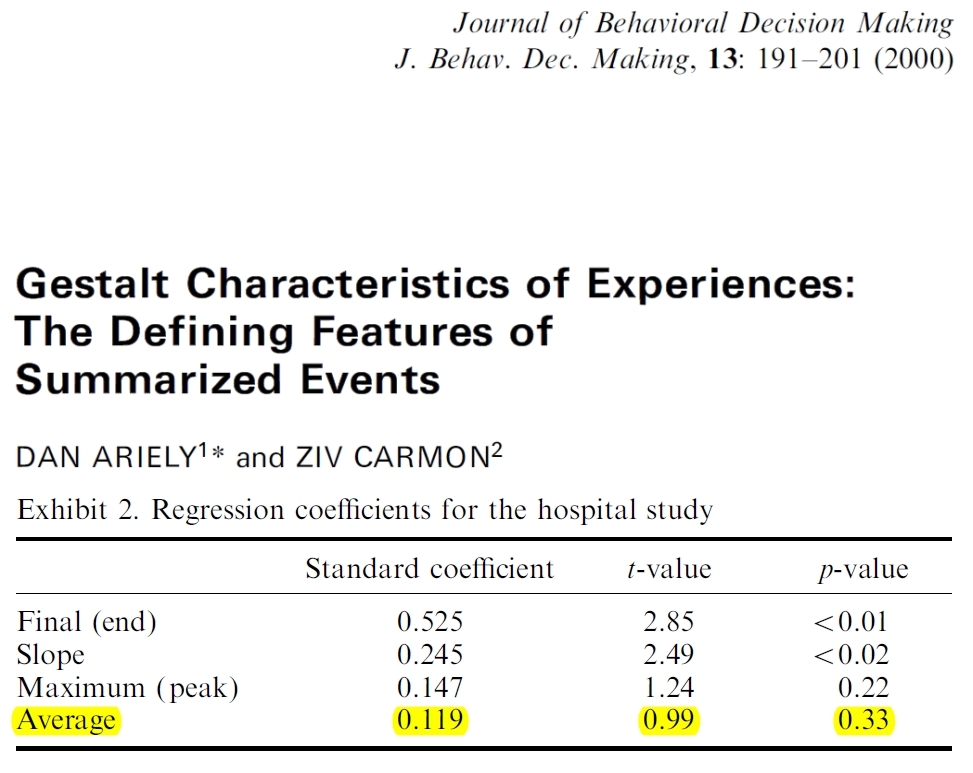

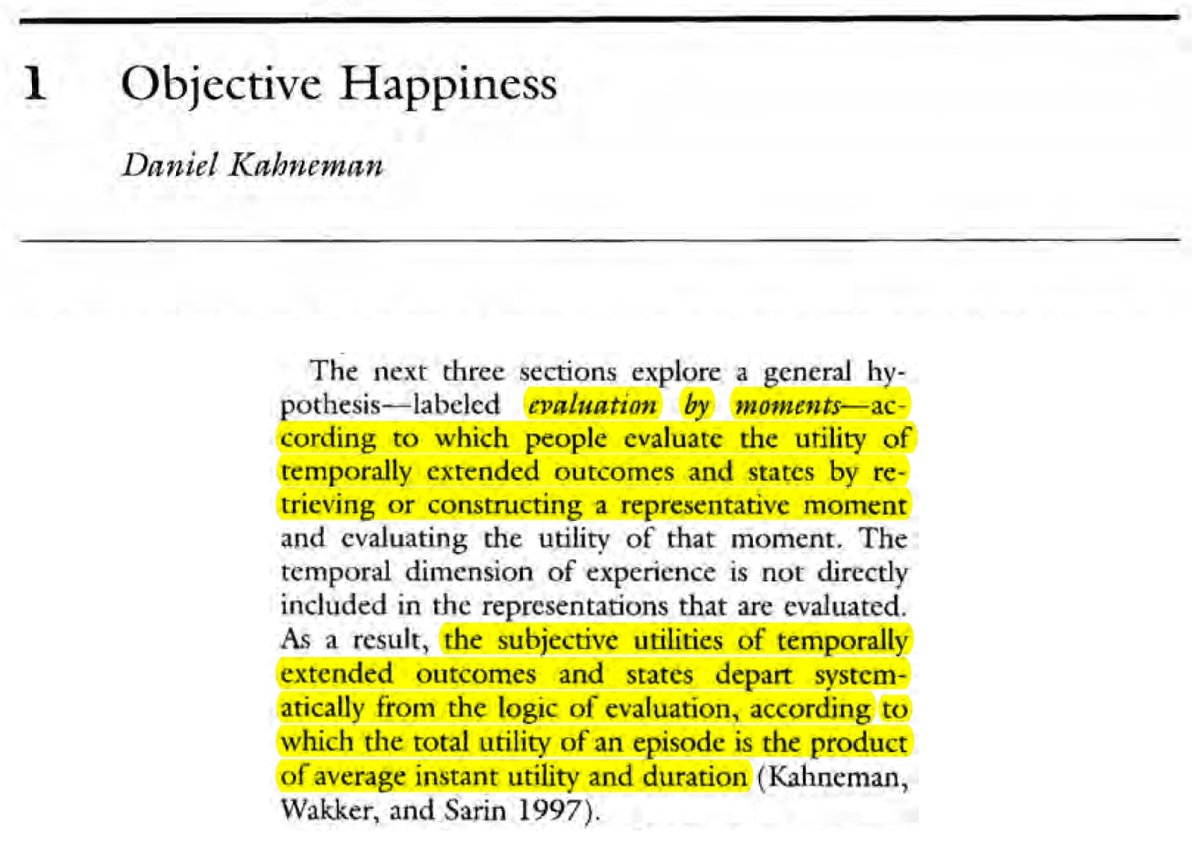
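
A deliberately extreme toy example shows what collapsing into the average can hide. The three participants and their ratings below are hypothetical, chosen so that the session means are identical while the trajectories differ completely.

```python
from statistics import mean, pvariance

# Hypothetical ratings at the end of six intervals (roughly -5 to +5 scale).
trajectories = {
    "A": [1, 1, 1, 1, 1, 1],       # flat throughout
    "B": [4, 3, 2, 0, -1, -2],     # steadily worsening
    "C": [-2, -1, 0, 2, 3, 4],     # steadily improving
}

session_means = [mean(r) for r in trajectories.values()]
end_ratings = [r[-1] for r in trajectories.values()]
lowest_ratings = [min(r) for r in trajectories.values()]

# Between-person variance under each summary of the same data.
print("variance of session means :", pvariance(session_means))
print("variance of end ratings   :", pvariance(end_ratings))
print("variance of lowest ratings:", pvariance(lowest_ratings))
```

Here the session means have no between-person variance at all, so they cannot co-vary with anything, whereas the end and lowest ratings still distinguish the three participants.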

Once again, this practice flies in the face of decades of research in psychology and behavioral economics. We have known for a long time that the average affective response IS NOT predictive of behavior. Researchers in exercise psychology have been emphasizing this for years.

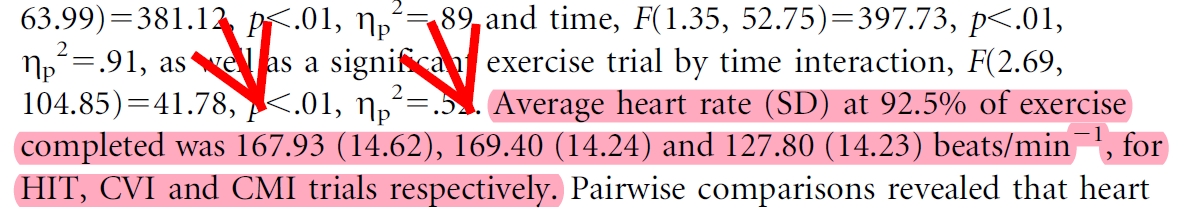

Finally, it could be argued that the biggest trick in studies comparing affective responses to HIIT and continuous exercise is that "high intensity" does not mean "high intensity." And "moderate" or "vigorous" is often actually higher than "high" ...but prolonged!

Studies in which HIIT peaks at 90% HRpeak but continuous exercise is also performed at 90% HRpeak are not fair comparisons of HIIT and continuous exercise. In one case, exercise lasts 10 min with breaks, whereas in the other it lasts 20 min without breaks -- at the same intensity!

What are we learning from this? Exercise science, sadly, is broken. Not only are we showing no signs of integration between our different subdisciplines (even though we all presumably want the same outcome) but we have also failed to join other sciences in improving our methods.

I hope that young researchers realize that, usually, magic bullets are just (methodological & statistical) smoke and mirrors. I hope they learn to be critical and take this opportunity to re-read their methods and statistics books, as well as venture outside their subdisciplines.

The problem of physical inactivity is hard. It's complex and multifaceted. If it were easy, it would have been solved already, I assure you. But we have made no progress. We need to work methodically while respecting the difficulty of the problem, our science, and the public.
