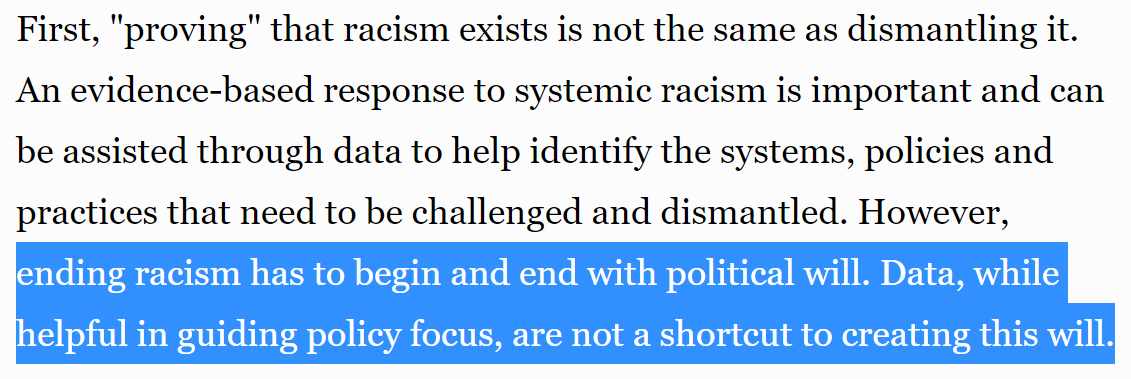

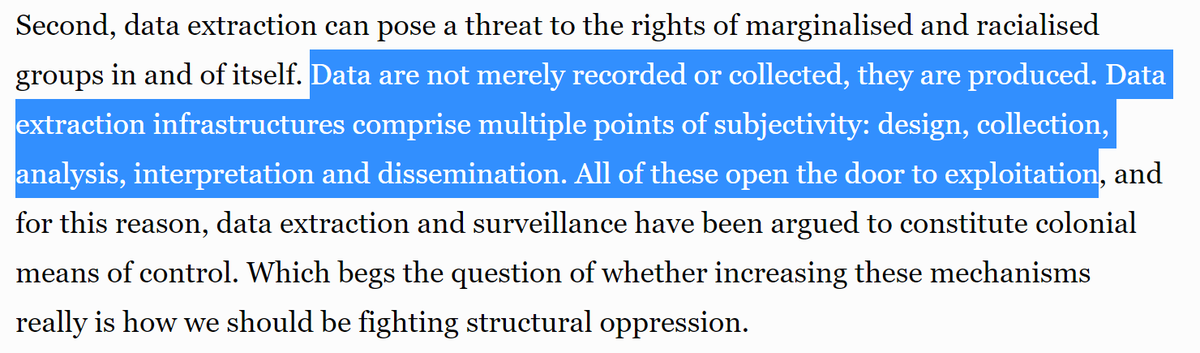

I'm going to start a thread on various forms of "washing" (showy efforts to claim to care about or address an issue without doing the work or having a true impact), such as AI ethics-washing, #BlackPowerWashing, diversity-washing, greenwashing, etc.

Feel free to add more articles!

"Companies seem to think that tweeting BLM will wash away the fact that they derive massive wealth from exploitation of Black labor, promotion of white anxiety about Blackness, & amplification of white supremacy."

--@hypervisible #BlackPowerWashing

https://twitter.com/math_rachel/status/1268694702825635840

Great paper on participation-washing in the machine learning community:

https://twitter.com/math_rachel/status/1287534130176040960

A few years ago, I surveyed the research on how diversity-washing is worse than doing nothing at all:

https://twitter.com/math_rachel/status/1322200670368198656

AI ethics washing is the fabrication or exaggeration of a company’s interest in equitable AI systems. A textbook example for tech giants is promoting “AI for good” initiatives while also selling surveillance capitalism tech -- @kharijohnson

venturebeat.com/2019/07/17/how…
