🛠️Tooling Tuesday🛠️

Today, we share a @GoogleColab notebook implementing a Transformer with @PyTorch, trained using @PyTorchLightnin.

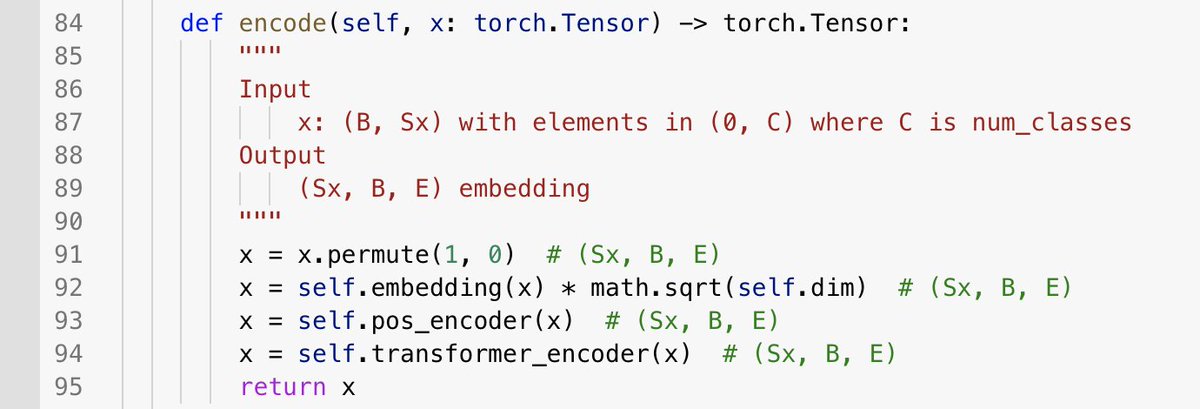

We show both encoder and decoder, train with teacher forcing, and implement greedy decoding for inference.

colab.research.google.com/drive/1swXWW5s…

👇1/N

Today, we share a @GoogleColab notebook implementing a Transformer with @PyTorch, trained using @PyTorchLightnin.

We show both encoder and decoder, train with teacher forcing, and implement greedy decoding for inference.

colab.research.google.com/drive/1swXWW5s…

👇1/N

2/N Transformers are a game changer.

This architecture has superseded RNNs for NLP tasks, and is likely to do the same to CNNs for vision tasks.

PyTorch provides Transformer modules since 1.2, but the docs are lacking:

- No explanation of inference

- Tutorial is encoder-only

This architecture has superseded RNNs for NLP tasks, and is likely to do the same to CNNs for vision tasks.

PyTorch provides Transformer modules since 1.2, but the docs are lacking:

- No explanation of inference

- Tutorial is encoder-only

3/N Our notebook shows both. Let's get started with simple data.

Our output will be number sequences like [2, 5, 3].

Our input will be the same as output, but with each element repeated twice, e.g. [2, 2, 5, 5, 3, 3]

We start each sequence with 0 and end each sequence with 1.

Our output will be number sequences like [2, 5, 3].

Our input will be the same as output, but with each element repeated twice, e.g. [2, 2, 5, 5, 3, 3]

We start each sequence with 0 and end each sequence with 1.

4/N We do the simplest possible thing to wrap this data with a PyTorch DataLoader, which will handle batching, shuffling, and pre-fetching.

6/N The forward() method encodes the input, and then decodes the input and the output together, where the output is partially masked to prevent "peeking" forward.

7/N With some PyTorch-Lightning boilerplate, we're ready to train on any number of GPUs/TPUs.

Note the "teacher-forcing", where the ground truth is fed into the model shifted by one character.

Training on this toy data finishes quickly with 100% validation accuracy.

Note the "teacher-forcing", where the ground truth is fed into the model shifted by one character.

Training on this toy data finishes quickly with 100% validation accuracy.

8/N To calculate accuracy, we need to implement greedy decoding.

This is where the input is used to generate output tokens one at a time. In our case, we use greedy selection, but beam search can be used instead for a potential accuracy boost.

This is where the input is used to generate output tokens one at a time. In our case, we use greedy selection, but beam search can be used instead for a potential accuracy boost.

9/N And that's all there is to it!

Hope the notebook is useful.

If you want more, check out official docs, a helpful post from ScaleAI, and a great explanation of the Transformer architecture:

- pytorch.org/docs/stable/ge…

- pgresia.medium.com/making-pytorch…

- peterbloem.nl/blog/transform…

Hope the notebook is useful.

If you want more, check out official docs, a helpful post from ScaleAI, and a great explanation of the Transformer architecture:

- pytorch.org/docs/stable/ge…

- pgresia.medium.com/making-pytorch…

- peterbloem.nl/blog/transform…

10/N Lastly, our Berkeley course is beginning next Tuesday! Remember to sign up to receive updates as we release lectures (we will do so with a delay): forms.gle/235LpvXmeCN21j…

• • •

Missing some Tweet in this thread? You can try to

force a refresh