Next up at #enigma2021, Sanghyun Hong will be speaking about "A SOUND MIND IN A VULNERABLE BODY: PRACTICAL HARDWARE ATTACKS ON DEEP LEARNING"

(Hint: speaker is on the market)

usenix.org/conference/eni…

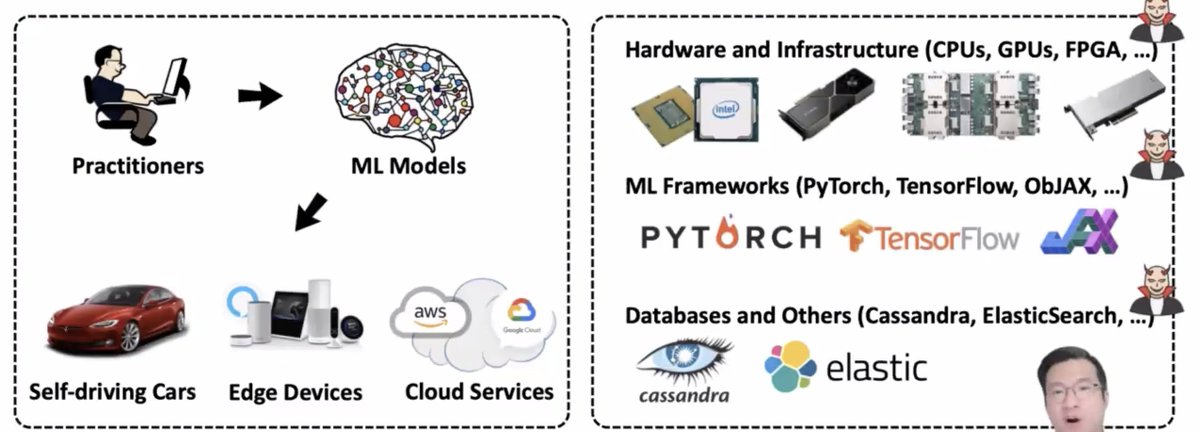

In recent years ML models have moved from research labs into production, which makes ML security important. Adversarial ML research studies how to mess with ML.

For example, by messing with the training data (cf. Tay, which became super-racist super-fast) or by foiling ML models by changing inputs in ways humans can't see.

Prior work considers ML models in a standalone, mathematical way

* looks at the robustness in an isolated manner

* doesn't look at the whole ecosystem and how the model is used -- ML models are running in real hardware with real software which has real vulns!

This talk focuses on hardware-level vulnerabilities. These are particularly interesting because they can break cryptographic guarantees (hardware attacks sit outside the threat models those guarantees assume)

e.g. fault injection attacks, side-channel attacks

Recent work targets The Cloud

* co-location of VMs from different users

* weak attackers with less subtle control

The cloud providers try to secure things, e.g. protections against Rowhammer

But can an attacker still make use of the weak attacks that are left after the mitigations deployed by cloud compute providers?

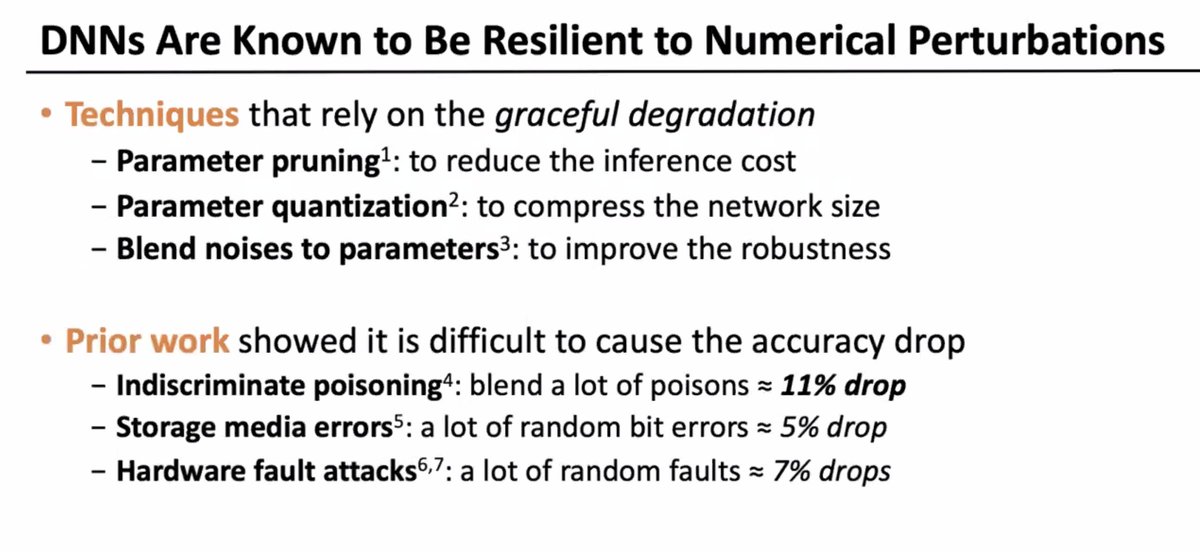

DNNs are resilient to numerical perturbations: this is used to make things more efficient (e.g. pruning), and in security it means it's really hard to make accuracy drop

... BUT this focuses on the average or best case, not the worst case!

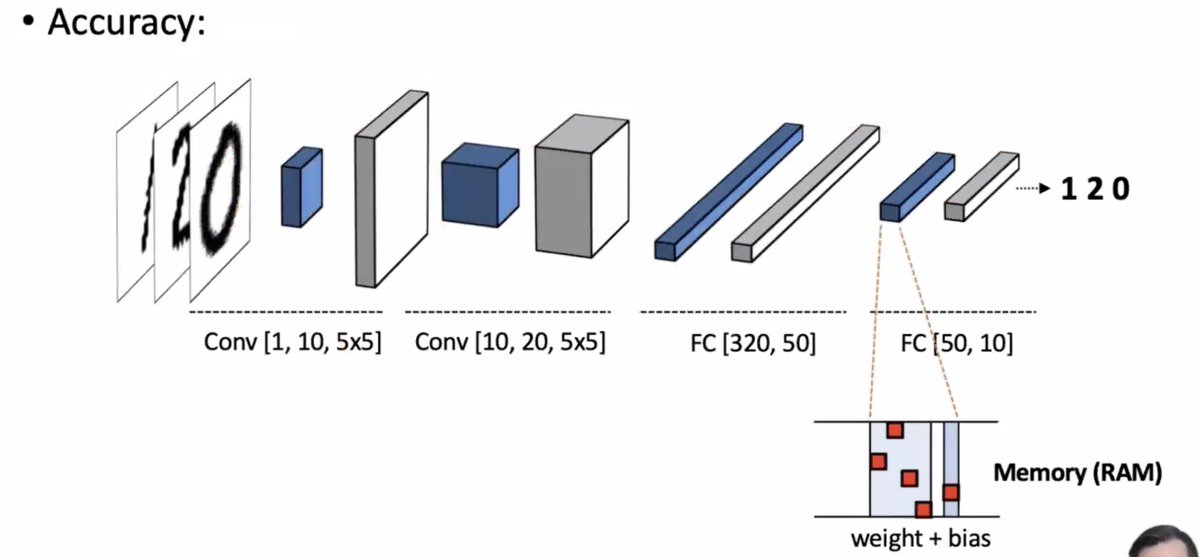

What happens when you can mess with the memory at one of these steps?

* negligible effect on the average case accuracy

* but flipping one bit can cause a significant amount of damage for particular queries

How much damage can a single bit flip cause?
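To get a feel for the numbers, here is a minimal sketch of what one flipped bit does to a single stored parameter, assuming weights are kept as IEEE 754 float32 values. The helper `flip_bit` and the weight value `0.5` are made up for illustration and are not from the talk; this shows the change to one number only, not the effect on a model's accuracy.

```python
import struct

def flip_bit(value: float, bit: int) -> float:
    """Flip one bit of `value` stored as float32 (bit 0 = lowest mantissa bit, bit 31 = sign)."""
    (as_int,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", as_int ^ (1 << bit)))
    return flipped

weight = 0.5  # hypothetical weight, chosen because it is exact in float32

print(flip_bit(weight, 0))   # lowest mantissa bit: barely moves the value
print(flip_bit(weight, 31))  # sign bit: -0.5
print(flip_bit(weight, 30))  # top exponent bit: 2**127, roughly 1.7e38
```

Most of the 32 bit positions nudge the value slightly; the top exponent bit turns a small weight into an astronomically large one.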

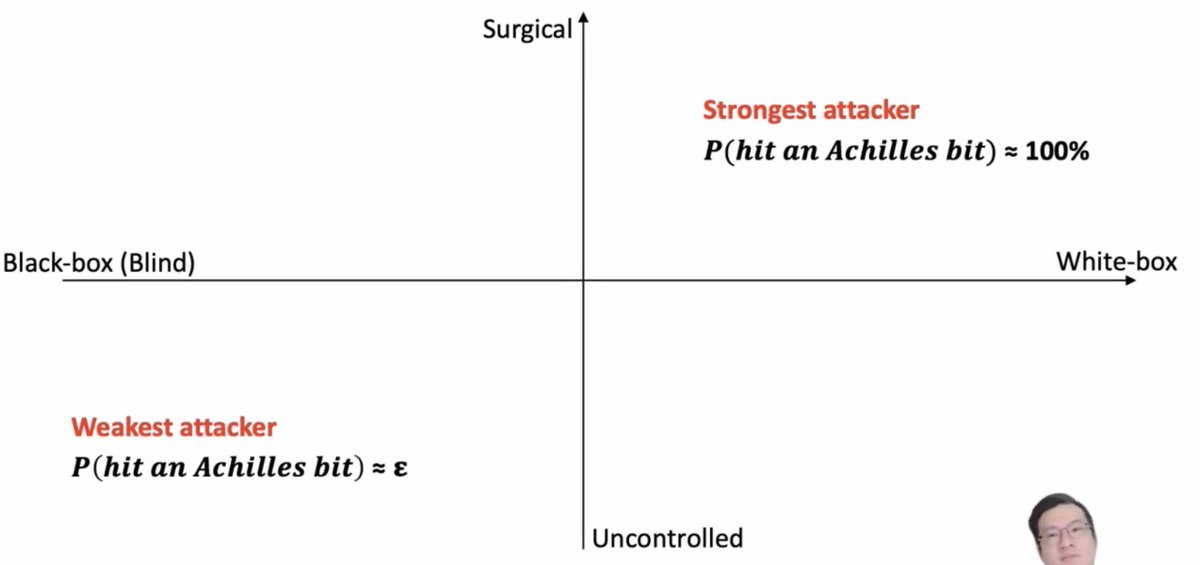

Well, can you use this? There's a lot less control in real life

Some strong attackers might be able to hit an "achilles" bit (one that's really going to mess with the model), but weaker attackers are going to hit bits more randomly.
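A rough way to picture the difference between the two attackers: enumerate every bit of a few float32 weights and count how many flips change the value drastically. This is a toy sketch under loud assumptions: the four weights, the helper names, and the "more than 10x the original magnitude" cutoff are all invented here, and a large numerical change is only a stand-in for real damage to a model.

```python
import struct

def flip_bit(value: float, bit: int) -> float:
    """Flip one bit of `value` stored as float32 (bit 31 = sign, bits 30..23 = exponent)."""
    (as_int,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", as_int ^ (1 << bit)))
    return flipped

def damaging_flips(weights, factor=10.0):
    """Return (weight index, bit) pairs whose flip moves the weight by more than factor * |weight|."""
    hits = []
    for index, weight in enumerate(weights):
        for bit in range(32):
            change = abs(flip_bit(weight, bit) - weight)
            if change > factor * abs(weight):
                hits.append((index, bit))
    return hits

weights = [0.5, -0.25, 0.125, 0.75]  # hypothetical small weights, all exact in float32
hits = damaging_flips(weights)
total_bits = 32 * len(weights)

print(hits)                    # which flips count as drastic under this cutoff
print(len(hits) / total_bits)  # chance that a uniformly random flip is one of them
```

For these toy weights only the top exponent bit of each value crosses the cutoff, so an attacker who can aim hits it every time, while one flipping bits at random needs luck or many tries.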

How about side-channel attacks?

The attacker might want to get their hands on fancy DNNs which are considered trade secrets and proprietary to their creators. They're expensive to make! They need good training data! People want to protect them!

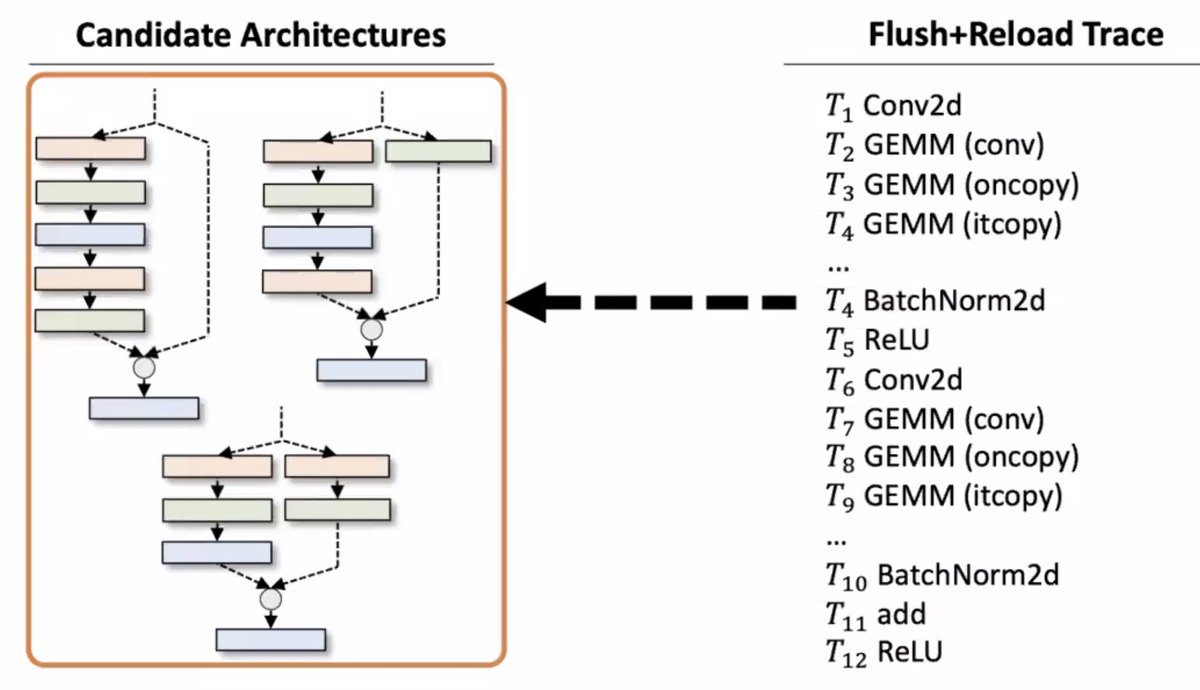

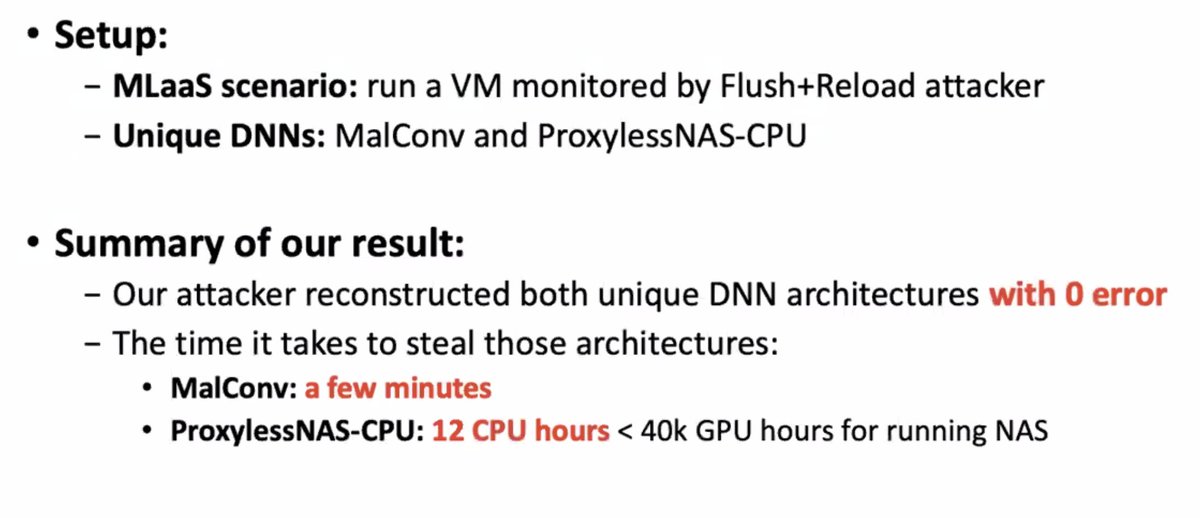

Prior work required that the ML-model-trainer uses an off-the-shelf architecture. But people often don't for the fancy models. So what this work does [... if I'm following correctly] is to basically guess from a lot of architecture possibilities and then filter it down

Why is this possible? Because there are regularities in deep-learning calculation.
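The guess-then-filter idea can be illustrated with a deliberately tiny toy. This is not the speaker's method: it assumes the side channel leaks a noisy multiply-accumulate count per layer of a plain fully connected network, and that the attacker knows the input and output sizes. Every name and number below is hypothetical. The "regularity" used is that each layer's output width must equal the next layer's input width.

```python
from itertools import product

def macs_per_layer(dims):
    """Multiply-accumulate count of each dense layer in a chain of layer widths."""
    return [a * b for a, b in zip(dims, dims[1:])]

def consistent(candidate, observed, rel_tol):
    """True if every candidate count is within rel_tol of the observed count."""
    return all(abs(c - o) <= rel_tol * o for c, o in zip(candidate, observed))

def recover_hidden_widths(observed, in_dim, out_dim, widths, rel_tol=0.05):
    """Enumerate all hidden-width combinations and keep those matching the observations."""
    n_hidden = len(observed) - 1
    survivors = []
    for hidden in product(widths, repeat=n_hidden):
        dims = (in_dim, *hidden, out_dim)
        if consistent(macs_per_layer(dims), observed, rel_tol):
            survivors.append(hidden)
    return survivors

# Hypothetical noisy per-layer counts "seen" through the side channel.
observed = [201000, 33000, 1300]
candidates = recover_hidden_widths(observed, in_dim=784, out_dim=10,
                                   widths=[64, 128, 256, 512])
print(candidates)
```

Of the 16 guesses, only hidden widths of 256 and 128 match all three observations within the tolerance, because the shared widths tie neighbouring layers together.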

Does this work? Apparently so: they tried it out using a cache side-channel attack and got the architectures of the fancy DNNs back.

This needs more study

* we need to understand how ML fails in the worst case under hardware attack

* don't discount the ability of an attacker with access to a weak hardware attack to cause a disproportionate amount of damage
