The founder of @gridai_ (@_willfalcon) has been sharing some falsehoods about fastai as he promotes the PyTorch Lightning library. I want to address these & to share some of our fast.ai history. 1/

Because our MOOC is so well-known, some assume fast.ai is just for beginners, yet we have always worked to take people to the state-of-the-art (including through our software library). 2/

I co-founded fast.ai w/ @jeremyphoward in 2016 & worked on it full-time until 2019. I've been focused on the USF Center for Applied Data Ethics for the past 2 yrs, so I can’t speak as much about the present, but I can share our history. 3/

https://twitter.com/math_rachel/status/767864016530137088?s=20

In the very first fast.ai post, when we publicly launched in 2016, Jeremy highlighted the frustration that deep learning software tools at the time were not where they needed to be for deep learning to meet its potential. 4/

fast.ai/2016/10/07/fas…

He laid out our goals, and 2 & 3 were all about tools and software. We always saw the course as a way to experience firsthand what the pain points were (for us and for others), in order to motivate the software development (again, this is from Oct 2016) 5/

I was frustrated by how cliquish deep learning was at the time (I first became interested in it in 2013), and even as a math PhD & data scientist, it was hard to get into the field since many researchers were not sharing the info needed 6/

In spring 2017, we implemented & taught neural style transfer, Wasserstein GANs in PyTorch, bidirectional LSTMs, attentional models, the 100 layers tiramisu, generative models, super-resolution, processing ImageNet in parallel, & more 7/

forums.fast.ai/t/part-2-compl…

We were often implementing new papers as they came out during the course. One of the goals was for students to learn how to implement new papers on their own. We updated the course & library continually to keep up with state-of-the-art. The course was/is different every year 8/

The below statement is false (again, we taught & implemented GANs in spring 2017), but could just be a misunderstanding. However, when combined with apparently mimicking the fastai library without attribution, it begins to look suspect. 9/

In 2018, a team of fast.ai students won a Stanford competition against better-funded teams from Google & Intel. Winning this involved new research & implementations for mixed precision & distributed training 10/

fast.ai/2018/08/10/fas…

MIT Tech Review covered the win as well: technologyreview.com/2018/08/10/141… 12/

In 2018, fastai was winning competitions with new research & implementations of mixed precision & distributed training, yet in 2019, Falcon claimed this was something new that Lightning had just innovated towardsdatascience.com/pytorch-lightn… 13/

The fastai library has been used in many research papers. Here are two partial lists 14/

scholar.google.com/scholar?hl=en&…

researchgate.net/publication/33…

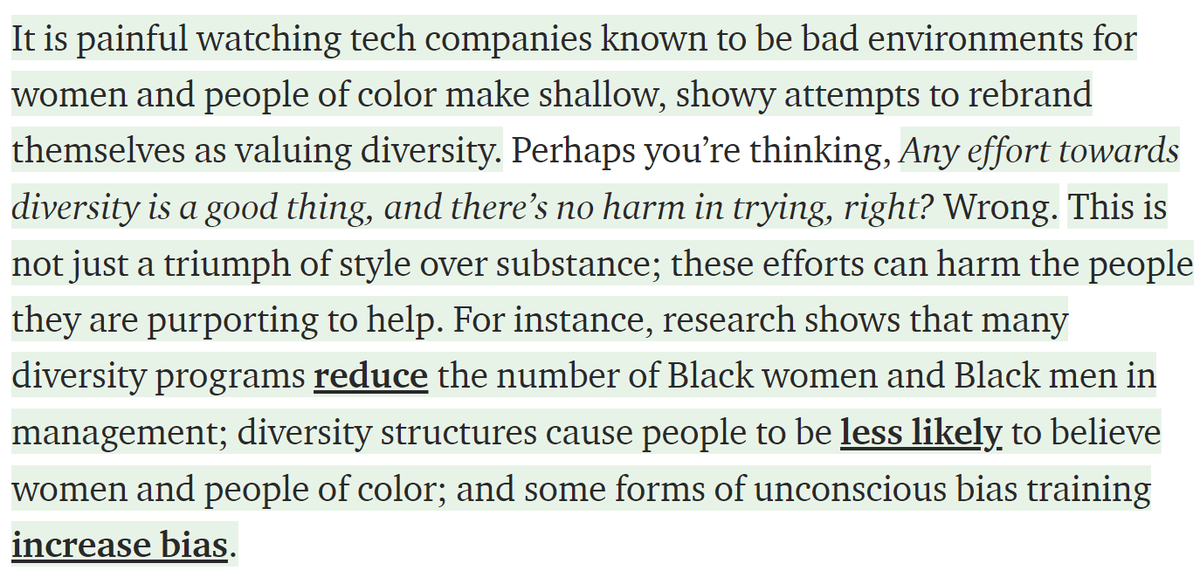

There is also a common bias at work here: the false belief that you can’t be doing something to increase diversity/include outsiders AND be doing state-of-the-art work, as though these two are in conflict (they are not) 15/

I want to credit @GuggerSylvain for his crucial role in developing fastai. He helped make fastai what it is.

Also, thank you to the fastai community, and everyone who invested time & energy into building fastai & helping others, trying to create a welcoming & collaborative env

In aggregate, I don’t see how this could just be a “misunderstanding.” Falcon repeatedly made false statements about fastai, did not correct them when they were pointed out, still has not corrected them, & is trying to pass off aspects borrowed from fastai as new “innovations” he created

It is also important to note that Falcon raised $18 million in VC funding, likely using the “innovations” of Lightning as a key selling point. This isn't just about open source libraries 19/

https://twitter.com/jeremyphoward/status/1357470481050312704?s=20

Because the falsehoods about fastai were & are public (and have not been corrected), I feel that it is important to publicly address them. 20/

One more example: Falcon published a chart full of inaccuracies, said to comment if something was missing, and then ignored comments 21/

There were many features that fastai was incorrectly listed as not having (as well as many features fastai had & Lightning didn’t that were not listed). 22/

forums.fast.ai/t/fastai2-vs-p…
