I’m hearing comments that Grid AI (Lightning) seem to have copied fastai's API without credit, and claimed to have invented it.

We wrote a paper about our design; it's great that it's inspiring others.

Claiming credit for others' work? NOT great.

mdpi.com/2078-2489/11/2…

PyTorch Lightning is a new deep learning library, released in mid-2019. The same team launched "Flash", a higher-level library, this week.

fastai was launched in 2017, based on extensive research.

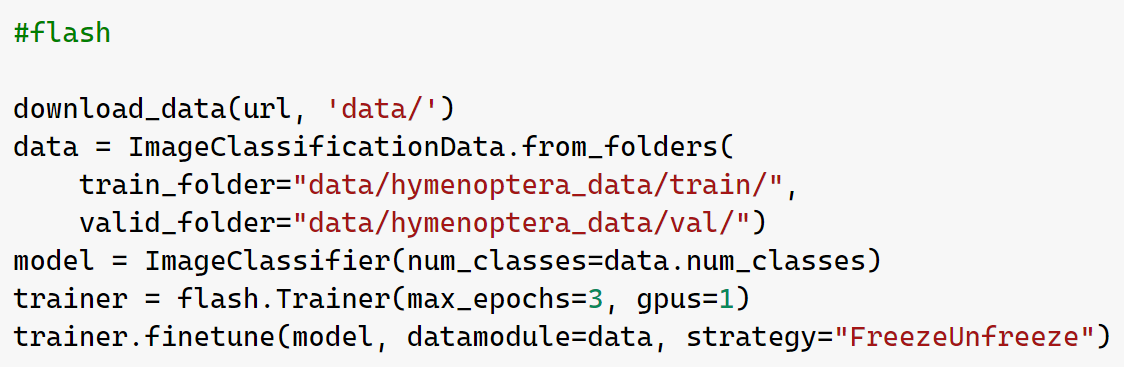

As you can see, they look *very* similar.

The quote below is from the Flash launch post (h/t @tomcocobrico). It is very clearly not true.

fastai's focus has always been simple inference, fine-tuning, and customization of state-of-the-art models on new data in a matter of minutes.

https://twitter.com/tomcocobrico/status/1356973637396385799

A lot of folks are asking me "why didn't they just credit you?" I can't know for sure, but I'm guessing that part of the problem is that as a VC-funded startup, Grid AI need to convince their investors that they are innovating.

If they're found to be copycats? That could be bad news.

However, the current approach isn't going over very well with Lightning users either...

https://twitter.com/isaac_flath/status/1356980120582426624

The founder has a history of making (and later deleting) unfounded claims of plagiarism from others.

So it's not that he's unfamiliar with the norms around not taking credit for other people's work.

As @math_rachel explains in the thread below, fastai's deep capabilities are based on years of research and testing.

https://twitter.com/math_rachel/status/1357426147017953280

After creating Lightning, the founder interviewed and tried to poach nearly every significant contributor to the fastai library. (Nearly everyone said no.)

I don't think I've ever heard of this happening to an active open source project before.

https://twitter.com/unkown_data/status/1356984039668736007

Whilst trying to poach our team and copy our work, at the same time they claimed fastai was not for serious researchers.

The Lightning founder implies here that distributed training and 16-bit precision are unique to Lightning. But fastai created both *first*; Lightning came later.

Our users have pointed out that misleading or wrong marketing claims from the Lightning folks are par for the course.

This kind of misinformation is damaging to the open source and deep learning communities, and I think we should discourage it.

https://twitter.com/TheZachMueller/status/1357323326641864704

Unfortunately, the similarities are only skin-deep: the actual design of Flash is much less well-structured than fastai's. That isn't surprising, since Flash was an afterthought, whereas fastai's layered design was there from the start. You can see signs of it in the code above.

E.g., why did the Flash version require "num_classes=data.num_classes" to be passed? Couldn't the library figure that out for itself?

For fastai, yes; for Flash, no. That's because nothing in Flash combines the data and the model together.

That means more work for you.
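
To make the "num_classes" point concrete, here's a minimal, framework-free sketch. Every name in it (`LabeledData`, `Model`, `SimpleLearner`, `make_learner`) is hypothetical, not fastai's or Flash's actual API. It only illustrates how an object that holds both the data and the model can size the model's output from the data's label vocabulary, so the user never has to pass the number of classes by hand.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple


@dataclass
class LabeledData:
    """Hypothetical container of (input, label) pairs; not a real library class."""
    items: Sequence[Tuple[object, str]]
    vocab: List[str] = field(init=False)

    def __post_init__(self) -> None:
        # Build the label vocabulary once, from the data itself.
        self.vocab = sorted({label for _, label in self.items})


@dataclass
class Model:
    """Stand-in for a classifier whose output layer needs a size."""
    num_outputs: int


@dataclass
class SimpleLearner:
    """Hypothetical object that binds data and model together."""
    data: LabeledData
    model: Model


def make_learner(data: LabeledData, make_model: Callable[[int], Model]) -> SimpleLearner:
    # Because this function sees the data and builds the model, it can size
    # the output layer from the vocabulary; no num_classes argument needed.
    return SimpleLearner(data=data, model=make_model(len(data.vocab)))


data = LabeledData([("img1", "cat"), ("img2", "dog"), ("img3", "cat"), ("img4", "bird")])
learn = make_learner(data, Model)
print(learn.model.num_outputs)  # 3
```

The design choice is simply where the knowledge lives: once one object owns both halves, the duplication disappears.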

More importantly, the layering in Flash is shallow, because Lightning wasn't designed in layers from the start. What if your data doesn't fit the constraints of Flash's image classifier?

In fastai, just go a layer deeper, to the data blocks API.

docs.fast.ai/tutorial.datab…

In Flash, model training is treated as the only thing that matters. But what about finding and fixing errors? What about checking your inputs?

fastai created an OO tensor design to ensure every kind of data input and model output can be visualized.

DL is about more than training.
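
A rough illustration of the idea behind typed, self-displaying data, assuming nothing about fastai's real tensor classes (`Shown`, `Image`, `Category` and `show_sample` are made up for this sketch): each value carries a type that knows how to render itself, so generic code can display any input or output without special cases.

```python
class Shown:
    """Hypothetical base class: a value that knows how to render itself as text."""

    def __init__(self, value):
        self.value = value

    def show(self) -> str:
        raise NotImplementedError


class Image(Shown):
    def show(self) -> str:
        # Render a tiny grayscale "image" (rows of ints 0-9) as ASCII art.
        chars = " .:-=+*#%@"
        return "\n".join("".join(chars[p] for p in row) for row in self.value)


class Category(Shown):
    def show(self) -> str:
        return f"label: {self.value}"


def show_sample(*parts: Shown) -> str:
    # Generic display code: it never checks what kind of data it is given.
    return "\n".join(p.show() for p in parts)


print(show_sample(Image([[0, 9], [9, 0]]), Category("cat")))
```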

fastai pioneered a rich encode/decode pipeline on top of PyTorch's DataLoader and Dataset.

Flash has taken the exact same idea, renamed the methods, but failed to actually implement the key underlying Transform/Pipeline details.

github.com/PyTorchLightni…
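
Here's a stripped-down sketch of what an encode/decode pipeline means. It's plain Python, and `Transform`, `Categorize`, `OneHot` and `Pipeline` are illustrative stand-ins, not fastai's or Flash's classes. Each step knows how to go forward (`encodes`) and back (`decodes`), and the pipeline decodes in reverse order, which is what lets a raw model output be turned back into something a human can read.

```python
from typing import Any, Dict, List, Sequence


class Transform:
    """Hypothetical reversible step: encodes() goes forward, decodes() goes back."""

    def encodes(self, x: Any) -> Any:
        return x

    def decodes(self, x: Any) -> Any:
        return x


class Categorize(Transform):
    """Map string labels to integer ids, and ids back to labels."""

    def __init__(self, vocab: Sequence[str]):
        self.vocab = list(vocab)
        self.o2i: Dict[str, int] = {v: i for i, v in enumerate(self.vocab)}

    def encodes(self, x: str) -> int:
        return self.o2i[x]

    def decodes(self, x: int) -> str:
        return self.vocab[x]


class OneHot(Transform):
    """Turn an id into a one-hot list; decode any score list via its argmax."""

    def __init__(self, n: int):
        self.n = n

    def encodes(self, x: int) -> List[float]:
        return [1.0 if i == x else 0.0 for i in range(self.n)]

    def decodes(self, x: Sequence[float]) -> int:
        # Also works on model outputs: pick the highest-scoring position.
        return max(range(len(x)), key=lambda i: x[i])


class Pipeline:
    """Apply transforms in order; decode by undoing them in reverse order."""

    def __init__(self, tfms: List[Transform]):
        self.tfms = tfms

    def __call__(self, x: Any) -> Any:
        for t in self.tfms:
            x = t.encodes(x)
        return x

    def decode(self, x: Any) -> Any:
        for t in reversed(self.tfms):
            x = t.decodes(x)
        return x


pipe = Pipeline([Categorize(["cat", "dog", "bird"]), OneHot(3)])
print(pipe("dog"))                   # [0.0, 1.0, 0.0]
print(pipe.decode([0.1, 0.7, 0.2]))  # dog
```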

This means many more things have to be done manually, and that means more opportunities for users to make mistakes, and fewer opportunities for researchers to innovate.

For instance, you can't create a transform that behaves differently in validation vs training.
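
As a hedged sketch of the missing capability (all names here, including the `train_only` flag and `apply_tfms`, are hypothetical and not taken from either library), a pipeline only needs to know which split it's processing for an augmentation to run on training data but be skipped at validation time.

```python
import random
from typing import List, Optional, Sequence


class RandomFlip:
    """Hypothetical augmentation that should only run on the training split."""
    train_only = True

    def __init__(self, p: float = 0.5, seed: Optional[int] = None):
        self.p = p
        self.rng = random.Random(seed)

    def __call__(self, row: List[int]) -> List[int]:
        # Reverse the sequence with probability p (a stand-in for an image flip).
        return row[::-1] if self.rng.random() < self.p else row


class Normalize:
    """Deterministic step that runs on every split: divide by the maximum."""
    train_only = False

    def __call__(self, row: List[int]) -> List[float]:
        m = max(row) or 1  # avoid dividing by zero for all-zero input
        return [v / m for v in row]


def apply_tfms(x, tfms: Sequence, split: str):
    # Skip train-only transforms when processing anything but training data.
    for t in tfms:
        if t.train_only and split != "train":
            continue
        x = t(x)
    return x


tfms = [RandomFlip(p=1.0), Normalize()]
print(apply_tfms([1, 2, 4], tfms, "train"))  # [1.0, 0.5, 0.25]
print(apply_tfms([1, 2, 4], tfms, "valid"))  # [0.25, 0.5, 1.0]
```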

It might be possible for Lightning and Flash to try to paper over these issues, but they're just a few examples of the many problems that stem from the lack of a unified layered design.

They can only be fixed by a complete redo. But Lightning's API is frozen, so that can't happen.

There were some signs yesterday that they were going to make an attempt to credit fastai. The founder even tweeted about the "amazing work" of fastai and keras.

But he deleted it soon after, and then updated the launch post without adding any apology or credit.

One of the things I found really odd about Lightning when it was released was that it used inheritance, instead of callbacks, for extensibility. They were really proud of that for some reason.

I was confused, because I thought it was well known that inheritance isn't that flexible...

...so then, some time later, they added callbacks too! So now they have two different extensibility mechanisms, and all kinds of tech debt.

For instance, something as simple as gradient accumulation has to be added to many different files. In fastai, it's one little callback.
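
To show why a callback-based design keeps this small, here's a toy training loop in plain Python that fits y = w * x by gradient descent. `Callback`, `Learner`, `after_backward`, `skip_step` and `GradientAccumulation` are hypothetical names for this sketch, not fastai's API; the point is only that gradient accumulation fits in one small class that tells the loop when to skip the optimizer step, while gradients keep adding up in between.

```python
from typing import Sequence, Tuple


class Callback:
    """Base class: hooks are no-ops unless a subclass overrides them."""

    def after_backward(self, learn: "Learner") -> None:
        pass


class Learner:
    """Hypothetical minimal loop; each (x, y) pair stands in for a batch."""

    def __init__(self, data: Sequence[Tuple[float, float]], lr: float = 0.01,
                 cbs: Sequence[Callback] = ()):
        self.data, self.lr, self.cbs = list(data), lr, list(cbs)
        self.w = 0.0      # the single model parameter
        self.grad = 0.0   # gradient buffer, summed until a step is taken
        self.steps = 0    # optimizer steps actually taken
        self.skip_step = False

    def fit(self, epochs: int) -> None:
        for _ in range(epochs):
            for x, y in self.data:
                pred = self.w * x
                # "Backward": add d/dw of (pred - y)**2 to the gradient buffer.
                self.grad += 2 * (pred - y) * x
                self.skip_step = False
                for cb in self.cbs:
                    cb.after_backward(self)
                if not self.skip_step:
                    self.w -= self.lr * self.grad  # optimizer step
                    self.grad = 0.0                # zero the gradients
                    self.steps += 1


class GradientAccumulation(Callback):
    """Let the optimizer step only every n_acc batches."""

    def __init__(self, n_acc: int):
        self.n_acc, self.count = n_acc, 0

    def after_backward(self, learn: Learner) -> None:
        self.count += 1
        if self.count % self.n_acc != 0:
            learn.skip_step = True


data = [(float(x), 3.0 * x) for x in range(1, 9)]
plain = Learner(data, lr=0.001)
plain.fit(1)
acc = Learner(data, lr=0.001, cbs=[GradientAccumulation(4)])
acc.fit(1)
print(plain.steps, acc.steps)  # 8 2
```

With `GradientAccumulation(4)`, eight batches produce two optimizer steps instead of eight, and no other part of the loop had to change.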
