THREAD: How does machine learning 🤖 differ from regular programming? 🧑💻

In both, we tell the computer 𝗲𝘅𝗮𝗰𝘁𝗹𝘆 what to do.

But there is one important difference...

2/ In regular programming, we describe each step the computer will take.

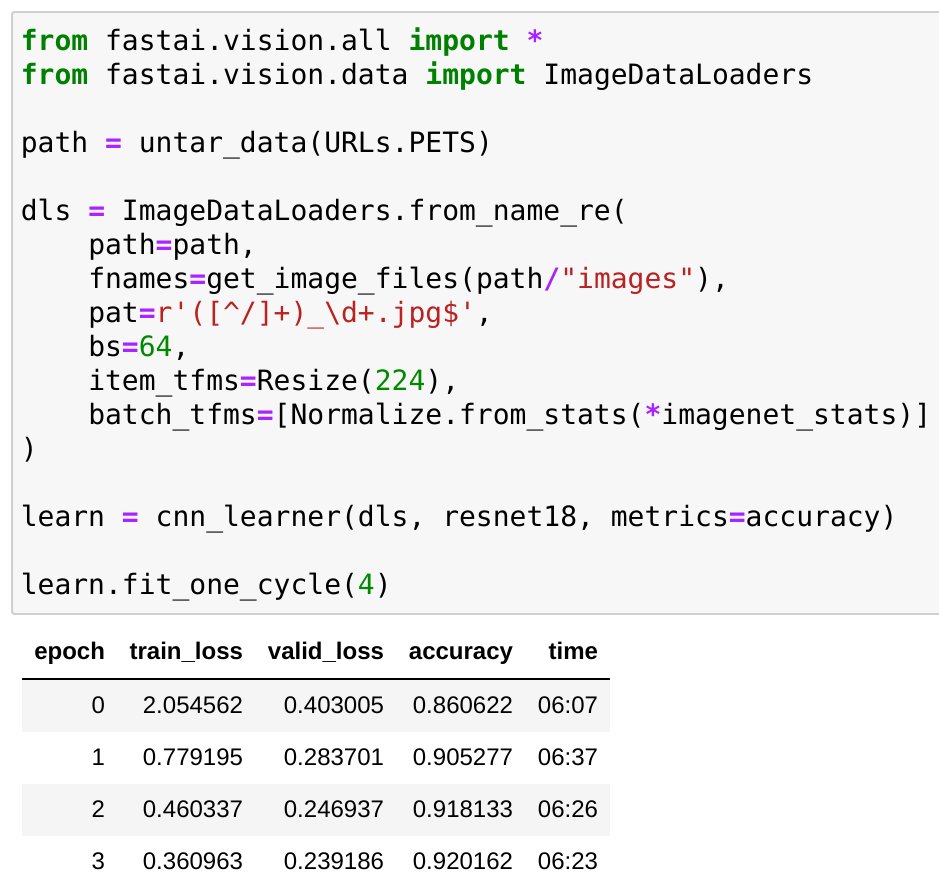
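
To make "we describe each step" concrete, here is a tiny sketch of the regular-programming way. The function name, the two measurements and every threshold are made up for illustration; the point is only that a person picked each rule by hand.

```python
def looks_like_dog(weight_kg, ear_length_cm):
    """Hand-written rules: a person chose every step and every threshold."""
    if weight_kg < 2 or weight_kg > 90:
        return False
    if ear_length_cm < 3:
        return False
    return True


print(looks_like_dog(20, 8))   # True
print(looks_like_dog(0.5, 8))  # False
```

If the rules turn out to be wrong, a human has to go back and edit the code.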

In machine learning, we write a program where the computer can alter some parameters based on the training examples.

How does this work?

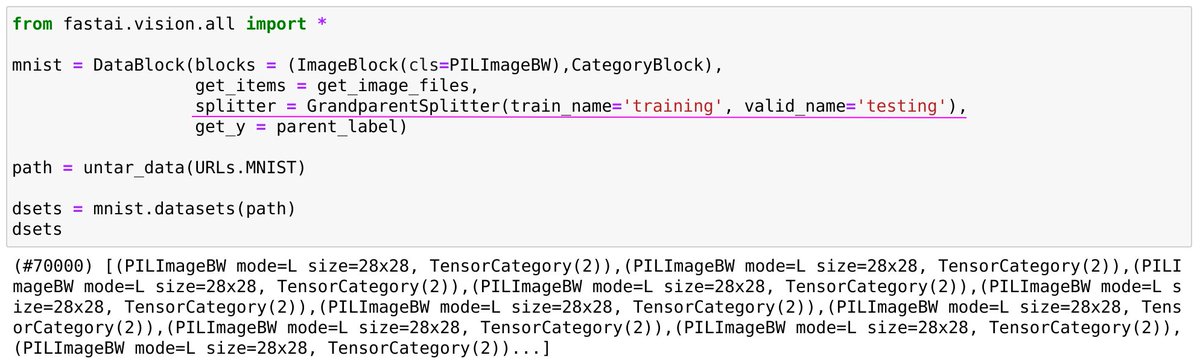

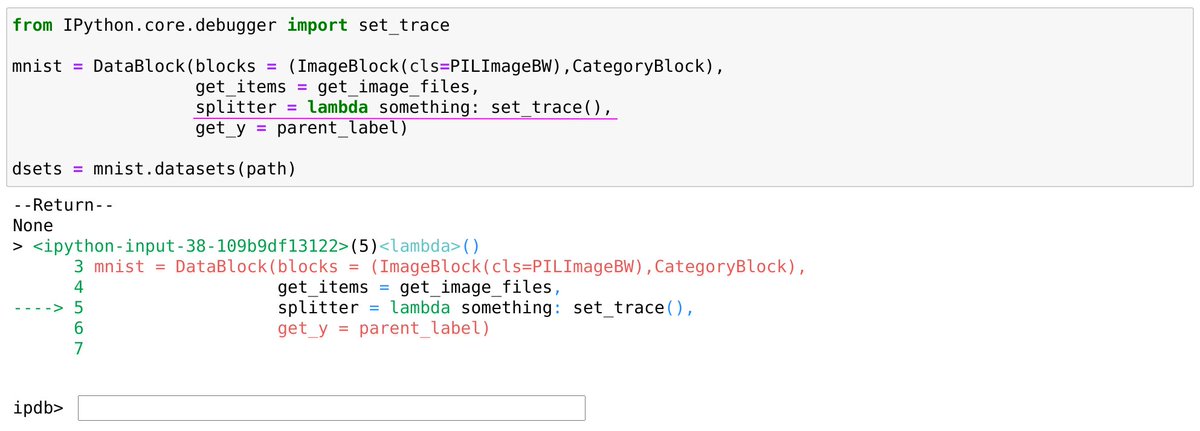

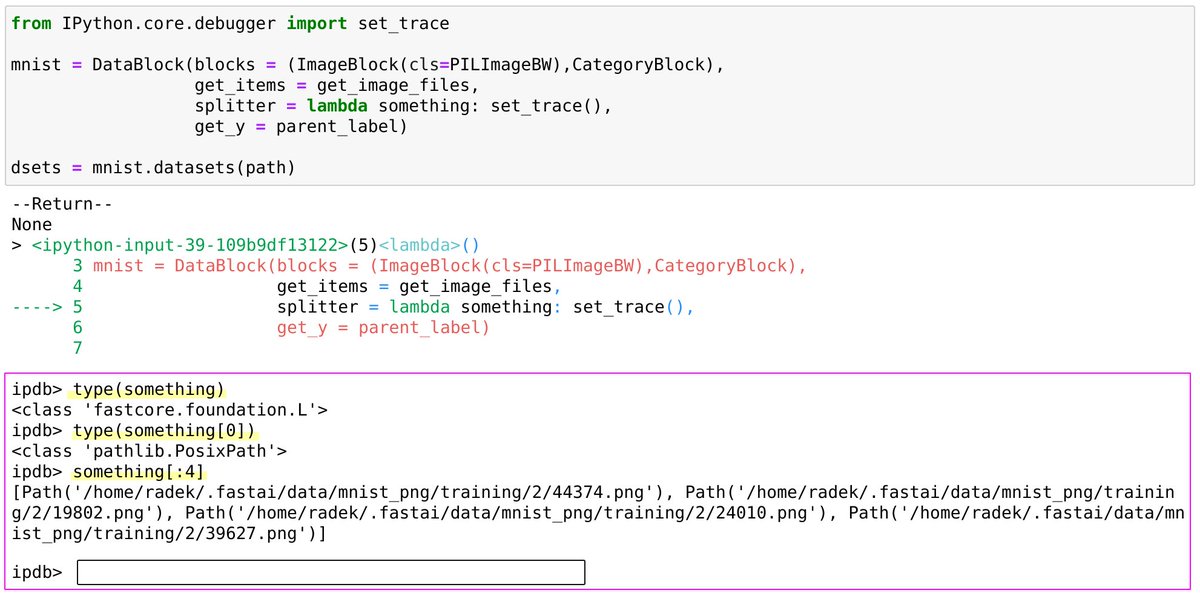

3/ Our model has a lot of tiny knobs, known as weights or parameters, that control the functioning of the program.
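
A rough sketch of what "knobs" can look like in code. This is an assumed toy model (a weighted sum squashed into a 0 to 1 score), not the model from the lecture, and the feature values are invented. Notice the code never changes, only the numbers we pass in as knobs.

```python
import math


def predict(weights, bias, features):
    """Return a score between 0 and 1: how much the input looks like a dog."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-total))


features = [0.8, 0.3]  # made-up measurements of one picture

# All knobs at zero: the program has no opinion yet.
print(predict([0.0, 0.0], 0.0, features))  # 0.5

# Same code, different knob settings, different answer.
print(predict([2.0, -1.0], 0.5, features))
```

Real models have the same shape, just with millions of knobs instead of three.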

We show the computer a lot of examples with correct labels.

Here is how this can play out...

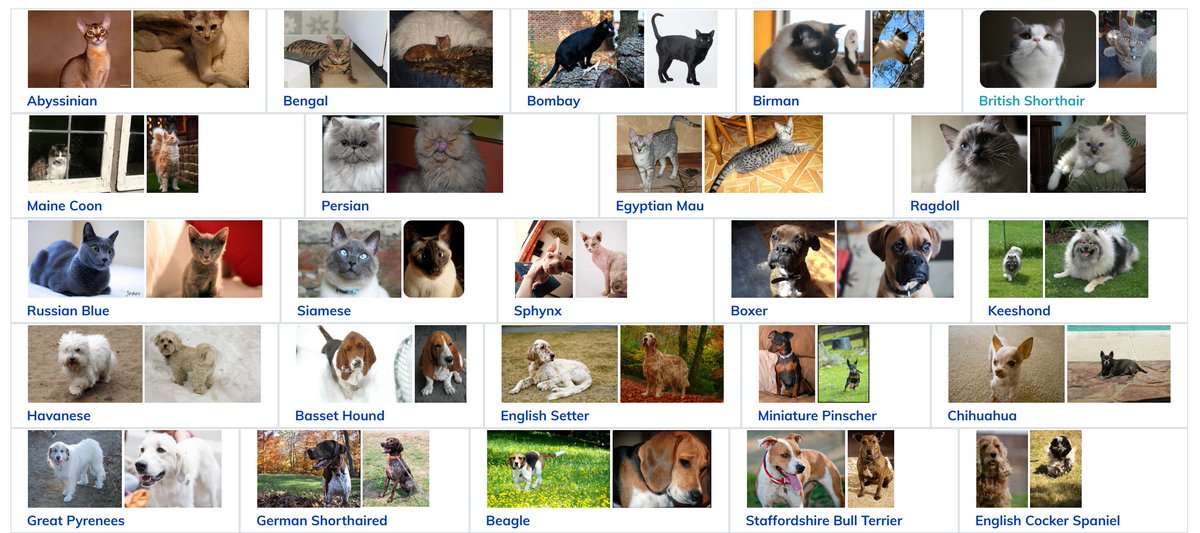

4/ "Computer, this here is a dog".

The program looks at its outputs - "oh, to me this looks only slightly like a dog, but let me tweak my parameters to improve my performance next time".

This process is called training. Many parameters get updated many, many times very quickly.
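
Here is a minimal sketch of that "look at the output, tweak the knobs" loop. The thread does not name an algorithm, so this assumes plain gradient descent on a toy two-knob model. The examples, the learning rate and the number of passes are all made-up values.

```python
import math


def predict(weights, bias, features):
    """Score between 0 and 1: how much the input looks like a dog."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-total))


# Made-up training examples: (features, label), 1 = dog, 0 = not a dog.
examples = [
    ([0.9, 0.8], 1),
    ([0.8, 0.6], 1),
    ([0.2, 0.1], 0),
    ([0.1, 0.3], 0),
]

weights, bias = [0.0, 0.0], 0.0
learning_rate = 0.5  # how far each knob turns per update (assumed value)

for epoch in range(1000):
    for features, label in examples:
        # How far off was the score from the correct label?
        error = predict(weights, bias, features) - label
        # Nudge every knob a little in the direction that shrinks the error.
        weights = [w - learning_rate * error * x
                   for w, x in zip(weights, features)]
        bias -= learning_rate * error

print(predict(weights, bias, [0.85, 0.7]))  # high score: looks like a dog
print(predict(weights, bias, [0.15, 0.2]))  # low score: does not
```

Nobody wrote a rule for what a dog is. The knobs ended up where they are because of the labeled examples.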

5/ Once the training is done, we get a computer program! One that has many parameters and performs many simple operations.

A program that we can save, move around and run on new data.

This is what I meant in this tweet 👇

https://twitter.com/radekosmulski/status/1359851355981176835?s=20
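
The "save, move around and run on new data" part can be sketched like this. The file name, the JSON format and the knob values are assumptions for illustration; real libraries have their own formats for saving models.

```python
import json
import math
import os
import tempfile


def predict(weights, bias, features):
    """Score between 0 and 1: how much the input looks like a dog."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-total))


# Pretend these knob settings came out of training (made-up numbers).
trained = {"weights": [6.0, 5.0], "bias": -5.5}

# Save the knobs to a file...
path = os.path.join(tempfile.gettempdir(), "dog_model.json")
with open(path, "w") as f:
    json.dump(trained, f)

# ...and later, maybe on another machine, load them and run on new data.
with open(path) as f:
    loaded = json.load(f)

score = predict(loaded["weights"], loaded["bias"], [0.9, 0.7])
print(score)
```

The trained program is just the code for `predict` plus a file of numbers.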

6/ If you find this as fascinating as I do, do check out this @fastdotai lecture where @jeremyphoward covers all of the above, and more, in greater detail!
