1/ In @ScienceMagazine, there has been a marked bias against women in the attention afforded to their publications in recent years. We don’t know why. #tweetorial @AcademicChatter #AcademicTwitter

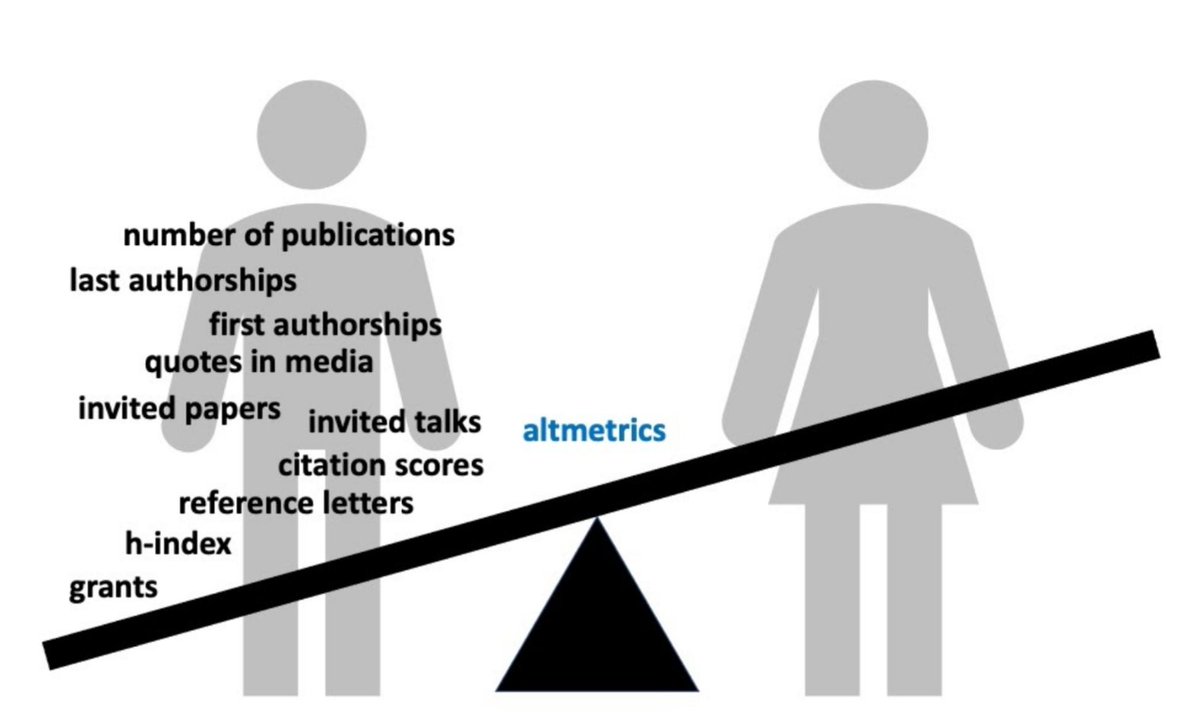

2/ Many studies show biases against #womeninSTEM, including in performance metrics used for hiring/promotion/tenure decisions. E.g. men self-cite more doi.org/10.1177%2F2378…, get better reference letters doi.org/10.1177%2F2378…, get invited to talks more doi.org/10.1073/pnas.1…

3/ Altmetrics are a new way of quantifying the attention papers get online. They scrape news and blog sites and social media for mentions of an article. The biggest aggregator is Altmetric.com @altmetric

4/ To date, nobody has looked at whether the gender biases pervasive in academia are also found in altmetric scores. This is what we did in our paper @Zia @Michelle @Bjarne @Mikey doi.org/10.1007/s11192… (behind a paywall - dm me for a pdf!)

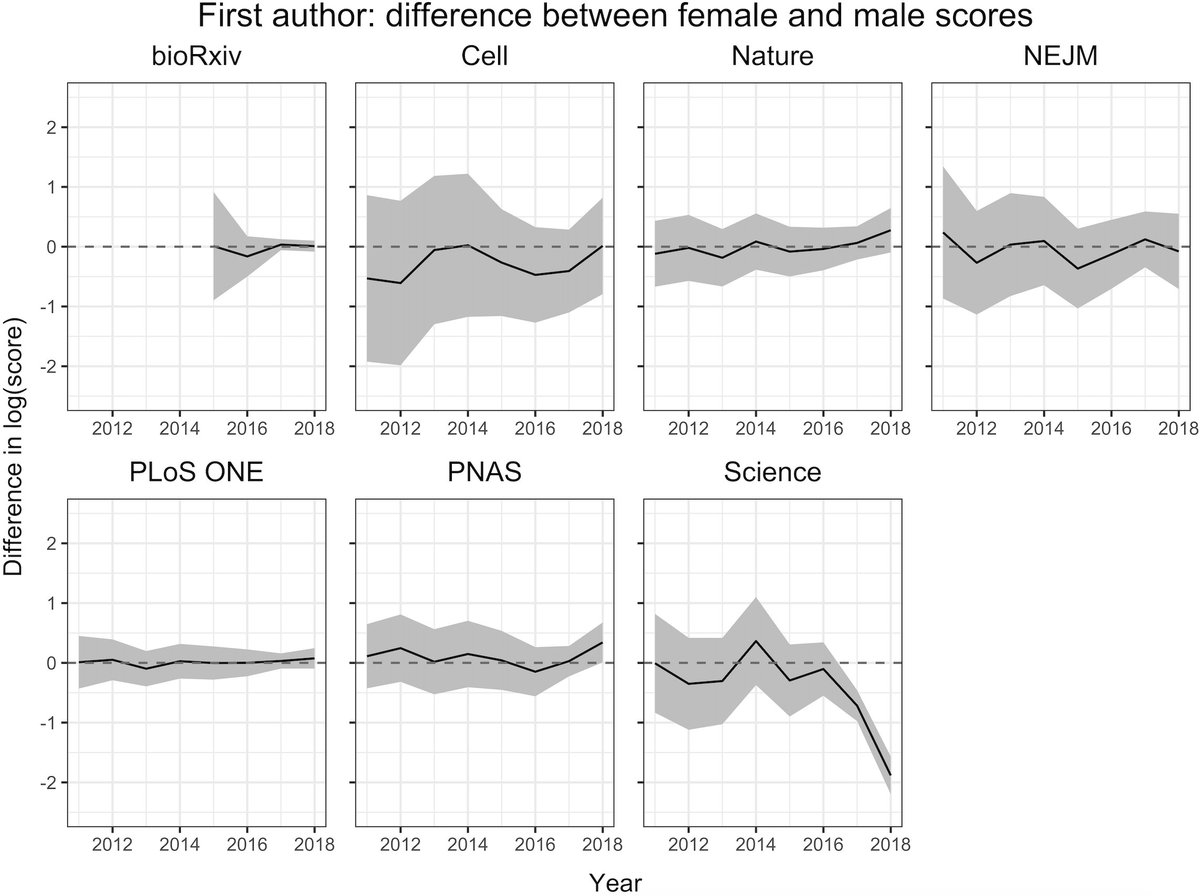

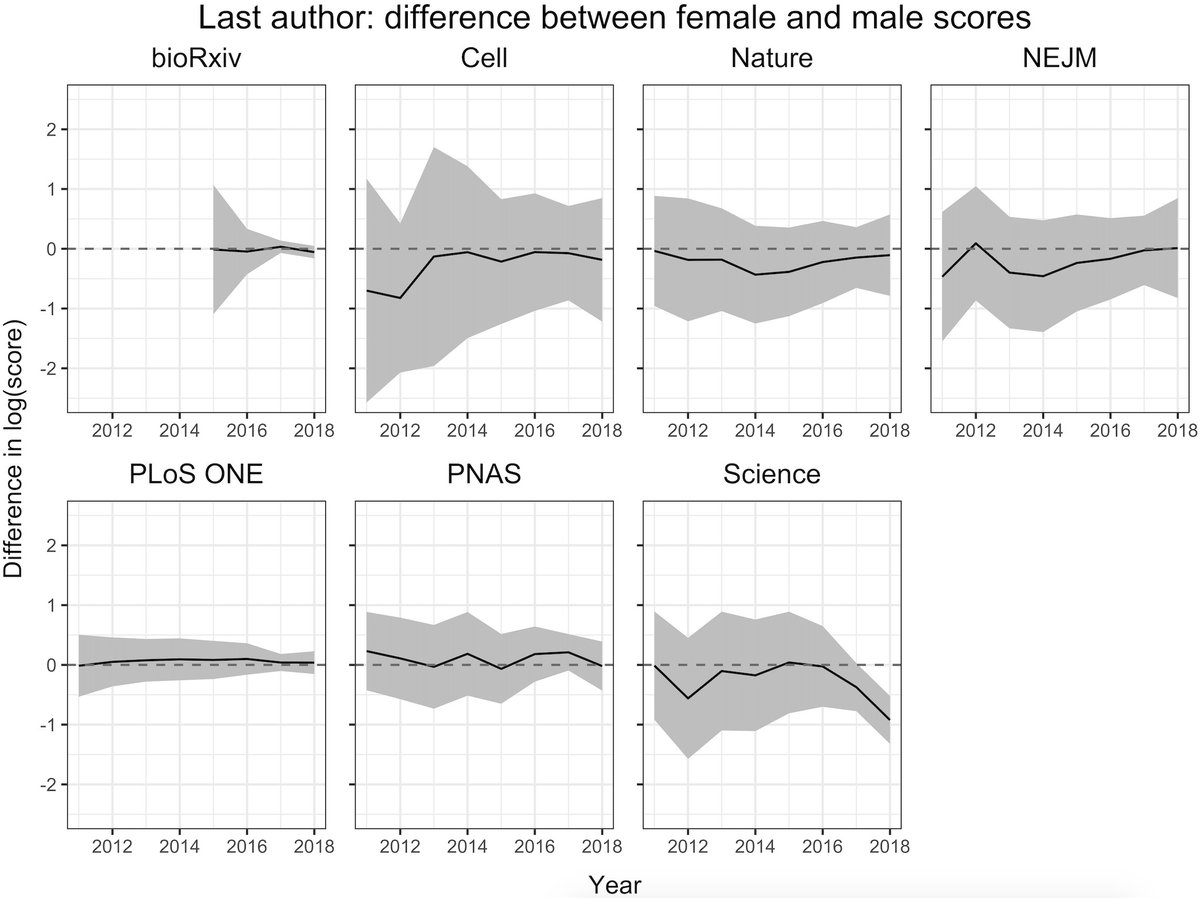

5/ We modeled scores 1 year post publication for 6 journals and a pre-print server (@Nature @NEJM @CellCellPress @bioRxivPreprint @PNASNews @PLOSONE @ScienceMagazine) in >200,000 articles from 2011-2018. We looked at the difference in scores between male/female first/last authors

6/ We found no evidence of bias for most journals, but a marked bias against women appeared in Science in 2017-2018. This appeared for both first and last authors, and for both mean and median scores. Why? (Below: gray = 95% C.I.)

7/ Maybe women authors weren’t self promoting as much on Twitter? Maybe journalists introduced bias by reporting more on papers authored by men? If these were driving the result, we would expect to see a dip in other journals too. But we didn’t.

8/ Maybe the way Science chose to feature or promote certain articles favoured men? Maybe Science published more papers in disciplines that are disproportionately male and of broad public interest?

9/ We spoke with the press team at Science and they have many initiatives to support women authors science.sciencemag.org/content/370/65…. (One of which is encouraging women authors to do tweetorials, so here we are! 😅) But they couldn’t tell us the reason for the results either.

10/ More research is needed to understand why the bias arose. More research is also needed to understand impacts for trans/non-binary authors.

11/11 We need to stamp out gender bias in academia. Today.
