It still confuses me that in spaces in which humans can often generalize from just 2 or 3 examples, it's considered successful when a software system does so from millions of examples

Never mind robustness issues

It's true that, you know, reading the entire internet is something that computers can do better than us. But why should they have to? I feel like the metric for success is just wild

Even if training ends up being a relatively small part of the cost of a system, I think it's the part the community could in many cases shrink down to almost nothing compared to what it currently is

And there are so many domains that don't have the luxury of millions or even thousands of examples at our disposal to begin with

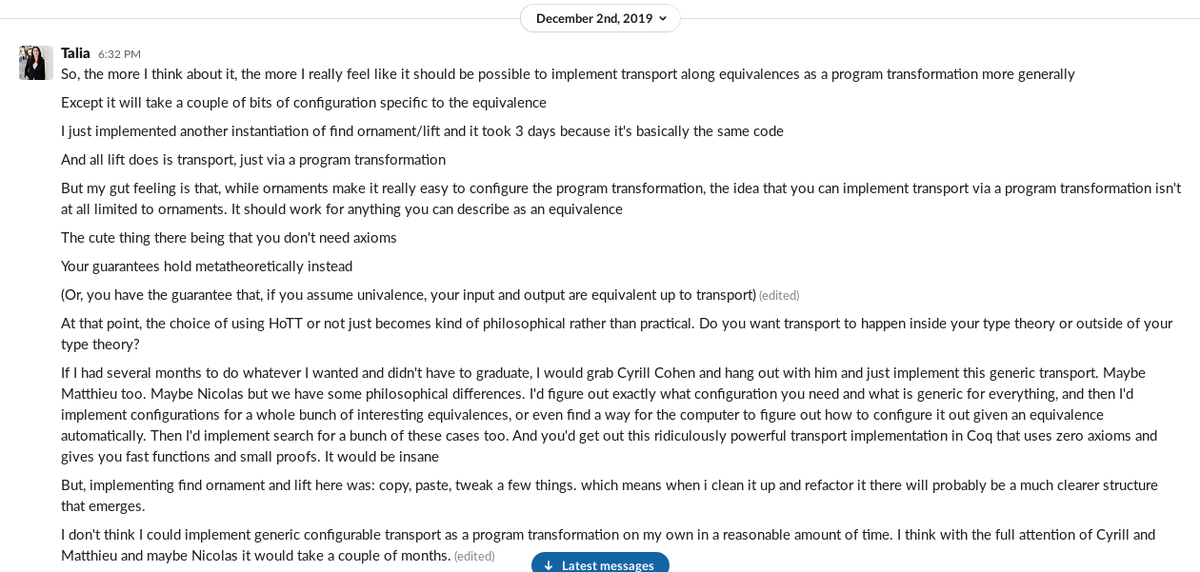

I recognize this is oversimplifying a whole field and that as someone whose work is what it is I am extremely biased here. The less "hot take" version of this is that few-shot learning problems aren't emphasized enough

The comparison between AI and humans is fraught and has in the past killed the entire field for a while. I just think there are things humans are doing that are really impressive and should serve as inspiration for how we solve a large class of problems

Also, the question of what counts as success is a really important one that steers entire research communities. It's important to reconsider it once in a while IMO, in every field

This inevitably leads to my neurosymbolic rant, which is one of 5 Talia rants, along with Eltana bagels tasting like cardboard

I hope this is more of an interesting and fun conversation for people than a heated argument. I'm enjoying the amount of work discussed, but I know I said this in a way that was inflammatory, mostly because I was sleepy

Another clarification I missed: I'm not saying "this is bad and we shouldn't do it," I'm saying "we shouldn't be satisfied because I know we can do better in the near future, and I think there are useful techniques to draw on for that and we should care more about them"

I worry a bit that the way machine learning is heading, without any change, it's going to hit a wall at some point due to the lack of true generalization and the reliance on massive amounts of data, and walls like that can cause mass disillusionment about an entire field

So I just want more people thinking a bit further into the future
