Within 10-20 years, nearly every branch of science will be, for all intents and purposes, a branch of computer science.

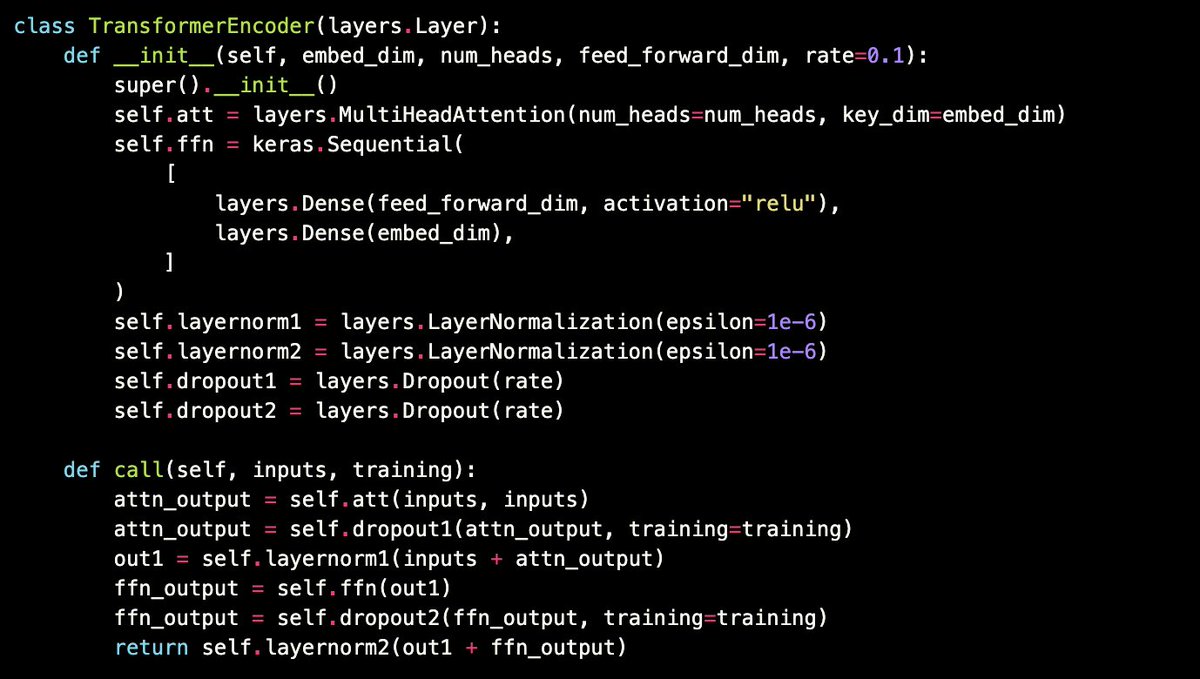

Computational physics, comp chemistry, comp biology, comp medicine... Even comp archeology. Realistic simulations, big data analysis, and ML everywhere.

This tweet is infuriating many, apparently. Imagine the controversy if, in 2000, someone predicted that by 2020 most companies would be tech companies! Still true though. Good thing they didn't have Twitter back then :)

Don't worry though, your domain expertise will remain very important. Just like how... uh... having a strong linguistics background is essential in natural language processing (formerly computational linguistics)...

Anyway, "most science will be CS", just like "most companies will be tech cos", is a prediction you should take seriously, but not literally. It means that CS proficiency will soon be indispensable to staying relevant as a scientist: most of what you do will require CS.

In the same way that "most cos will be tech cos" means that tech proficiency will be essential to staying in business: most of your operations will critically require tech. Walmart, AXA, FedEx, etc. are "tech companies".

It doesn't mean that chemistry will be literally classified as a subfield of CS, or that Walmart will be literally classified as a tech company. Obviously...

But it does mean that, if you were a business executive in 2000, you should have hired people who understand tech (including at top levels), and if you're a scientist today, you should make sure that you develop your CS chops (including ML).
