Recipes I find helpful when working in low-data / class-imbalanced regimes (vision):

* Use a class-weighted loss function and/or focal loss.

* Either use simpler/shallower models or use models that are known to work well in these cases. Ex: SimCLRv2, Big Transfer (BiT), DINO, etc.

1/n
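A minimal, framework-free sketch of the weighted/focal loss bullet, for a single example. The names `focal_loss` and `inverse_frequency_weights` are made up for illustration, and `gamma=2.0` is just a commonly used value, not a recommendation from this thread. In practice you would write the same formula with your framework's tensor ops.

```python
import math

def inverse_frequency_weights(class_counts):
    """Per-class weights proportional to 1 / count.

    Scaled as total / (num_classes * count), so the weight averaged
    over all training samples is 1.
    """
    n = len(class_counts)
    total = sum(class_counts)
    return [total / (n * c) for c in class_counts]

def focal_loss(probs, label, class_weights, gamma=2.0):
    """Class-weighted focal loss for one example.

    probs: predicted class probabilities
    label: integer index of the true class
    class_weights: one weight per class
    gamma: focusing parameter; gamma=0 reduces to weighted cross-entropy
    """
    p_t = min(max(probs[label], 1e-7), 1.0)  # clip to avoid log(0)
    # (1 - p_t) ** gamma down-weights examples the model already gets right
    return -class_weights[label] * (1.0 - p_t) ** gamma * math.log(p_t)

# Hypothetical 2-class problem with a 9:1 imbalance
weights = inverse_frequency_weights([900, 100])
loss = focal_loss([0.9, 0.1], label=1, class_weights=weights)
```

The minority class gets the larger weight, and confidently correct predictions contribute little to the loss, so hard and rare examples dominate the gradient.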

* Use MixUp or CutMix in the augmentation pipeline to relax the space of marginals.

* Ensure a certain percentage of minority-class data is present in each mini-batch. In @TensorFlow, this can be done with `rejection_resample` from `tf.data`.

tensorflow.org/guide/data#rej…
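To show the idea behind rejection resampling, here is a plain-Python sketch. It is not TensorFlow's implementation: the function signature, the `class_of` callback, and the toy data are all assumptions for illustration. Each example is kept with a probability proportional to `target_dist[c] / initial_dist[c]`, so the surviving stream follows the target class distribution in expectation.

```python
import random

def rejection_resample(examples, class_of, target_dist, initial_dist, seed=0):
    """Yield a subset of `examples` whose class mix approximates target_dist.

    class_of: function mapping an example to its class id
    target_dist / initial_dist: dicts of class id -> probability
    """
    rng = random.Random(seed)
    ratios = {c: target_dist[c] / initial_dist[c] for c in target_dist}
    max_ratio = max(ratios.values())
    for ex in examples:
        # The most under-represented class is always kept; others are thinned
        if rng.random() < ratios[class_of(ex)] / max_ratio:
            yield ex

# Toy stream: 90% class 0, 10% class 1; each example is an (id, label) pair
data = [(i, 0) for i in range(9000)] + [(i, 1) for i in range(1000)]
balanced = list(rejection_resample(
    data,
    class_of=lambda ex: ex[1],
    target_dist={0: 0.5, 1: 0.5},
    initial_dist={0: 0.9, 1: 0.1},
))
minority_fraction = sum(label for _, label in balanced) / len(balanced)
```

The trade-off is that majority-class examples are discarded, so each pass over the data yields fewer samples. Note also that the balance holds on average, not as a hard guarantee for every single batch.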

2/n
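For the MixUp bullet, a minimal sketch on plain Python lists. The `mixup` helper and `alpha=0.2` are illustrative assumptions; real pipelines apply the same convex combination to whole image batches and one-hot labels.

```python
import random

def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Blend two (input, one-hot label) pairs with a Beta(alpha, alpha) weight."""
    lam = rng.betavariate(alpha, alpha)  # mixing coefficient in [0, 1]
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam

# Two toy "images" (flattened) with one-hot labels for classes 0 and 1
x_mix, y_mix, lam = mixup(
    [0.0, 1.0, 1.0], [1.0, 0.0],
    [1.0, 0.0, 1.0], [0.0, 1.0],
    rng=random.Random(0),
)
```

The mixed label stays a valid probability vector, which acts as a form of label smoothing between the two classes. CutMix follows the same label rule but pastes a rectangular patch from one image into the other instead of blending pixels.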

* Use semi-supervised learning recipes that combine the benefits of self-supervision and few-shot learning. Ex: PAWS by @facebookai.

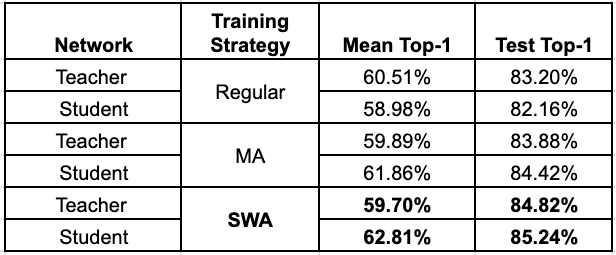

* SWA (stochastic weight averaging) is generally advised for better generalization, and it is particularly useful in these regimes.

3/n
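The core of SWA is a running average of weight snapshots taken late in training. A minimal sketch, assuming the weights are a flat list of floats and using a hypothetical `WeightAverager` class (real models would average each parameter tensor the same way):

```python
class WeightAverager:
    """Keeps an equal-weight running average of weight snapshots."""

    def __init__(self):
        self.avg = None
        self.n = 0

    def update(self, weights):
        """Fold one snapshot into the average and return the new average."""
        self.n += 1
        if self.avg is None:
            self.avg = [float(w) for w in weights]
        else:
            # Incremental mean: avg += (w - avg) / n
            self.avg = [a + (w - a) / self.n for a, w in zip(self.avg, weights)]
        return self.avg

# Pretend these are weights captured at the end of three late epochs
swa = WeightAverager()
for snapshot in ([1.0, 2.0], [3.0, 4.0], [5.0, 6.0]):
    swa_weights = swa.update(snapshot)
```

SWA is typically paired with a constant or cyclical learning rate during the averaging phase, and batch-norm statistics need to be recomputed for the averaged weights before evaluation.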

Has anyone done comprehensive ablations around these? Are there any resources that discuss this area really well?

It probably calls for a paper but I am open to collaborations 😅

4/n
